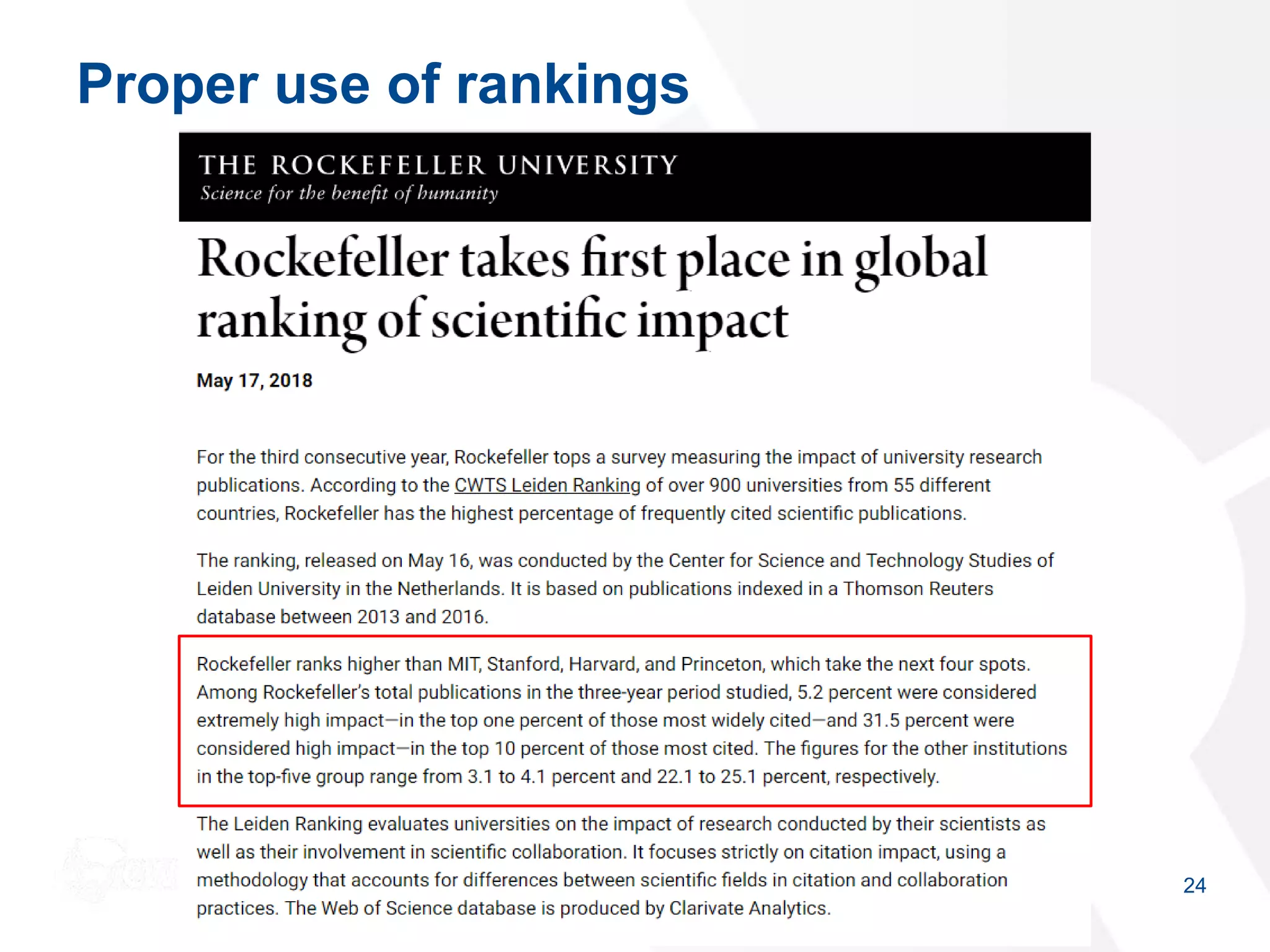

1) The CWTS Leiden Ranking focuses solely on research performance and is based purely on bibliometric indicators derived from the Web of Science database, without composite scores or input from universities.

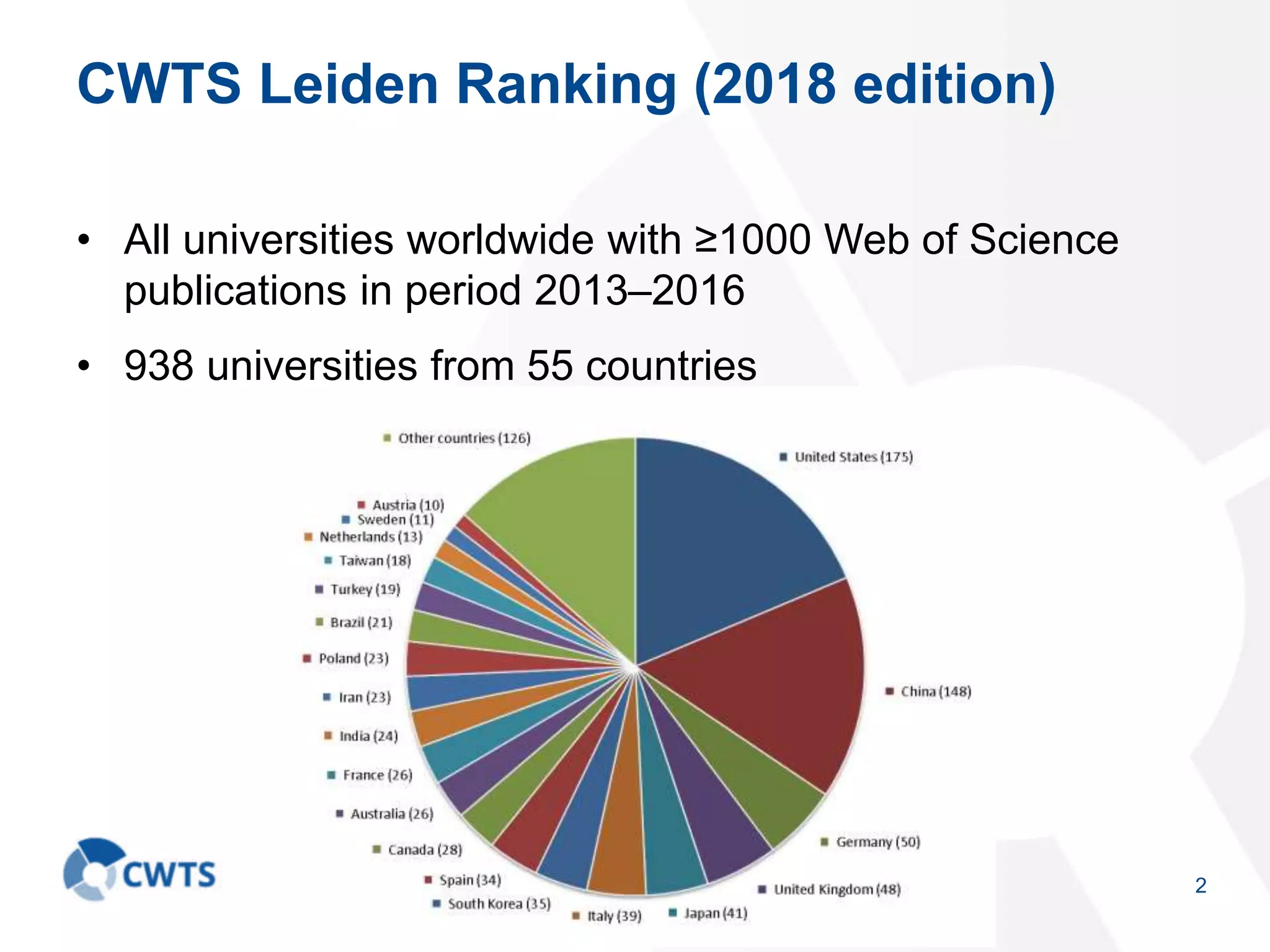

2) The 2018 edition ranked 938 universities from 55 countries, each of which published at least 1,000 documents from 2013 to 2016.

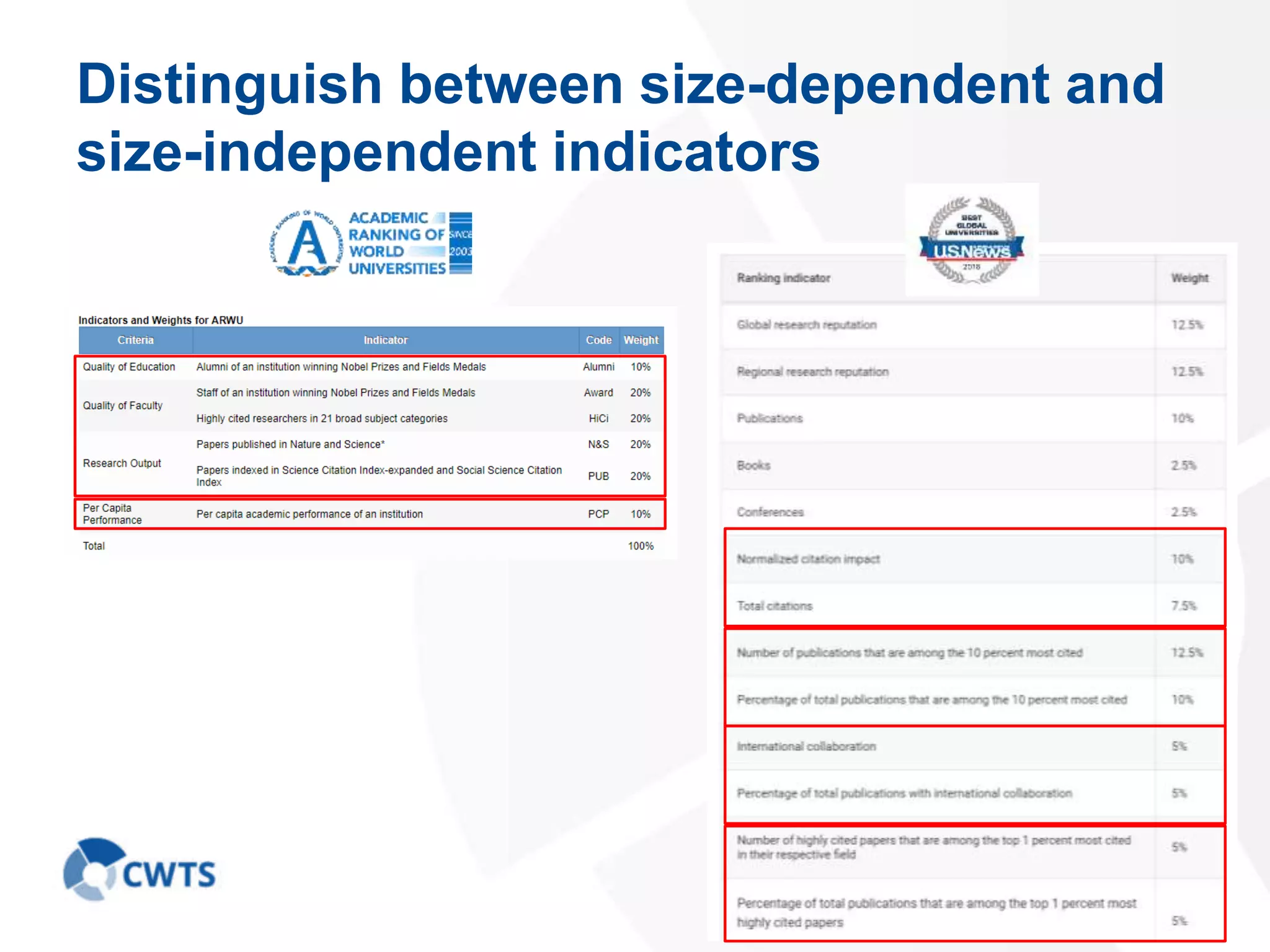

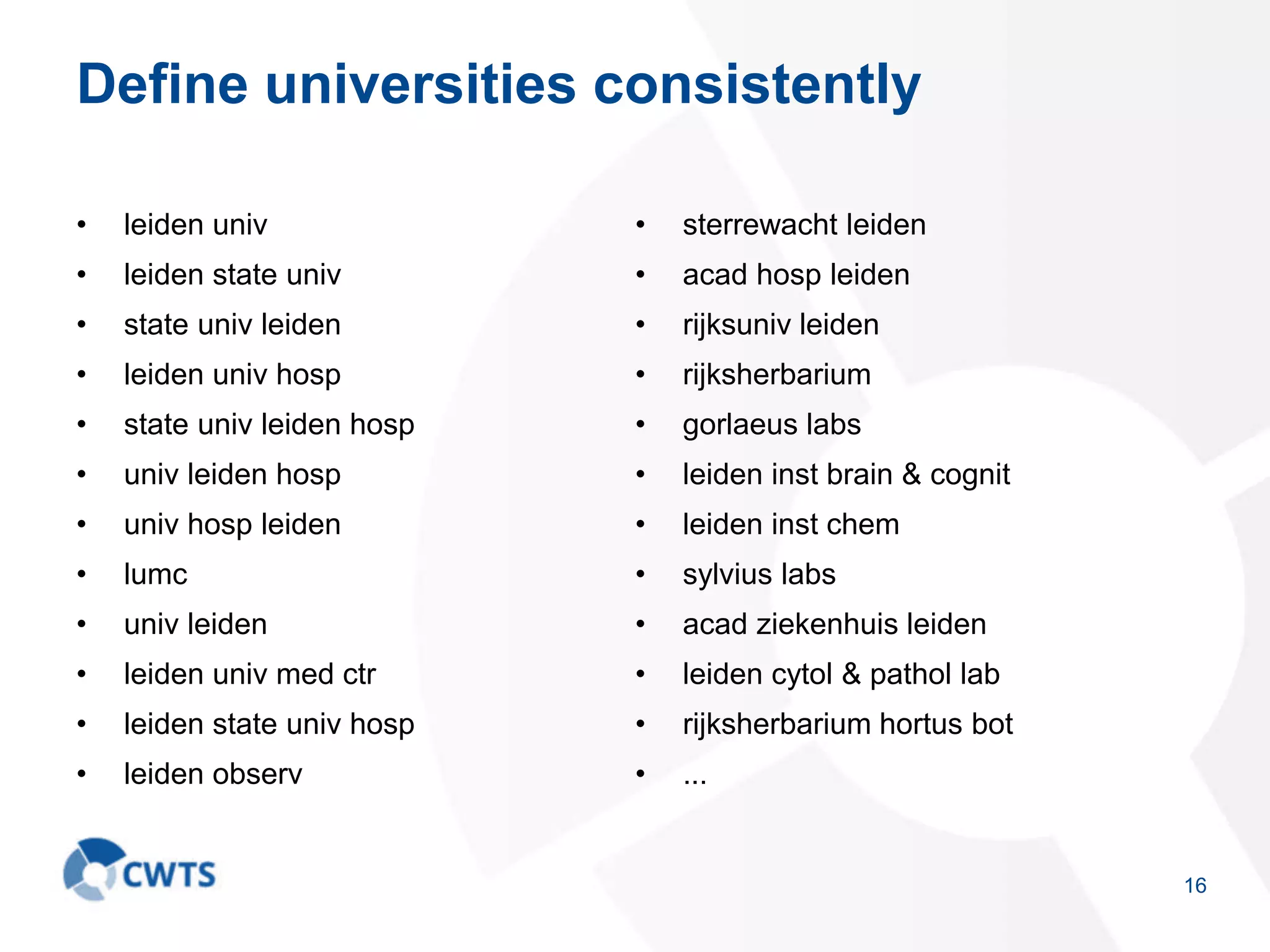

3) Ranking designers should distinguish between size-dependent and size-independent indicators, define universities consistently, and keep the ranking transparent. Anyone comparing universities should acknowledge the differences between them and the uncertainty in the rankings.
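
The distinction in point 3 can be illustrated with a minimal Python sketch. It assumes a single indicator, the number and the share of a university's publications that fall in the top 10% most cited, and it uses bootstrap resampling as one possible way to express uncertainty. The two universities and their publication counts are hypothetical, and the function names `indicators` and `stability_interval` are invented for this illustration; this is not the ranking's own implementation.

```python
import random


def indicators(top_flags):
    """Return (count, share) of top-cited publications.

    top_flags holds one 0/1 flag per publication (1 = top 10% most cited).
    """
    p_top = sum(top_flags)            # size-dependent: grows with total output
    pp_top = p_top / len(top_flags)   # size-independent: a proportion
    return p_top, pp_top


def stability_interval(top_flags, n_resamples=1000, level=0.95, seed=0):
    """Bootstrap interval for the share of top-cited publications."""
    rng = random.Random(seed)
    n = len(top_flags)
    # Resample the publication set with replacement and recompute the share.
    shares = sorted(
        sum(rng.choices(top_flags, k=n)) / n for _ in range(n_resamples)
    )
    lower = shares[int((1 - level) / 2 * n_resamples)]
    upper = shares[int((1 + level) / 2 * n_resamples) - 1]
    return lower, upper


# Hypothetical data: a large and a small university.
large = [1] * 300 + [0] * 2700   # 3,000 publications, 300 top-cited
small = [1] * 150 + [0] * 850    # 1,000 publications, 150 top-cited

for name, flags in (("large", large), ("small", small)):
    count, share = indicators(flags)
    low, high = stability_interval(flags)
    print(f"{name}: count={count}, share={share:.3f}, "
          f"interval=({low:.3f}, {high:.3f})")
```

In this made-up example the large university leads on the size-dependent count while the small one leads on the size-independent share, so the order depends on which kind of indicator is chosen. The interval is wider for the smaller publication set, which is one reason small differences in rank should not be over-interpreted.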