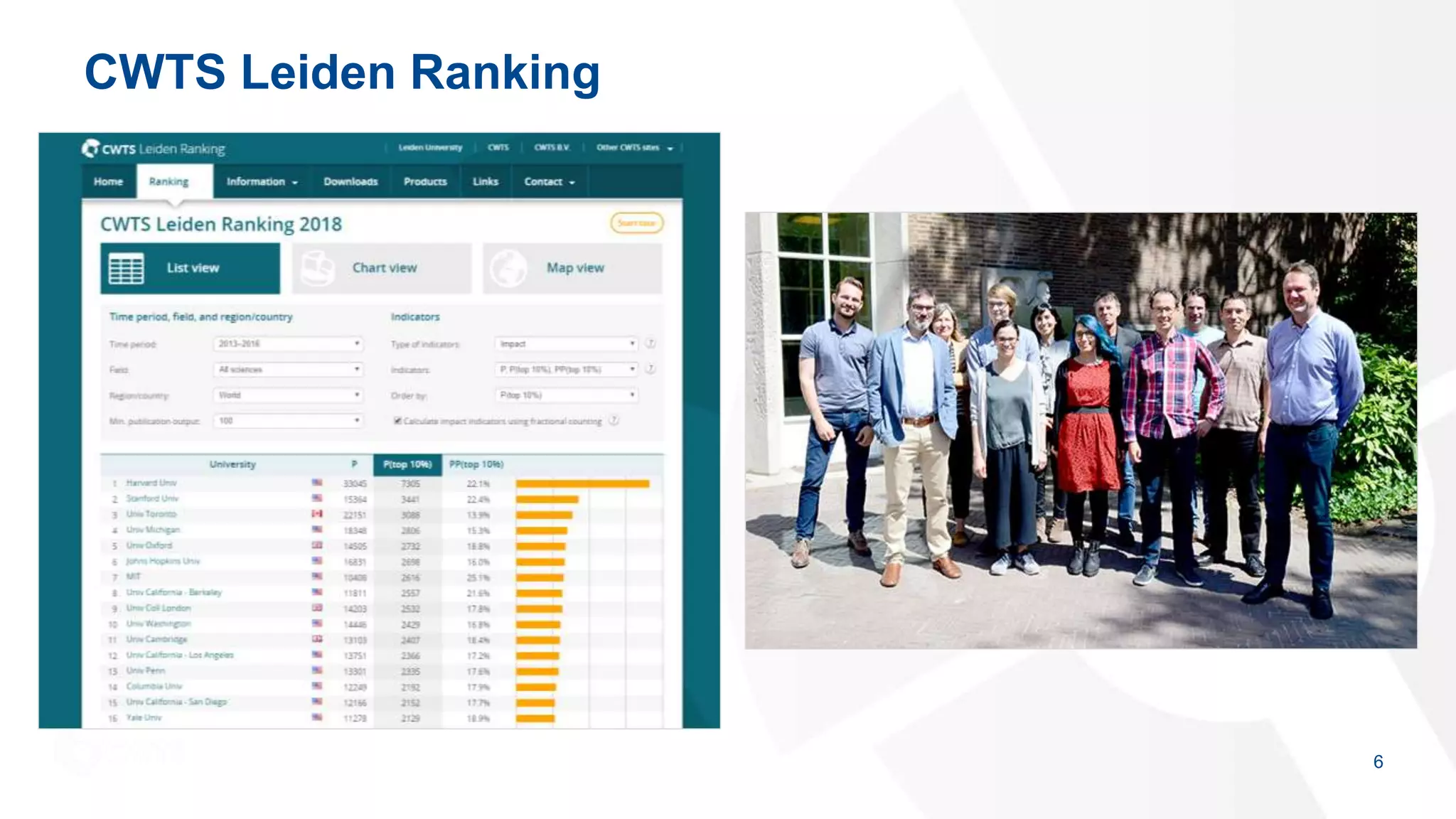

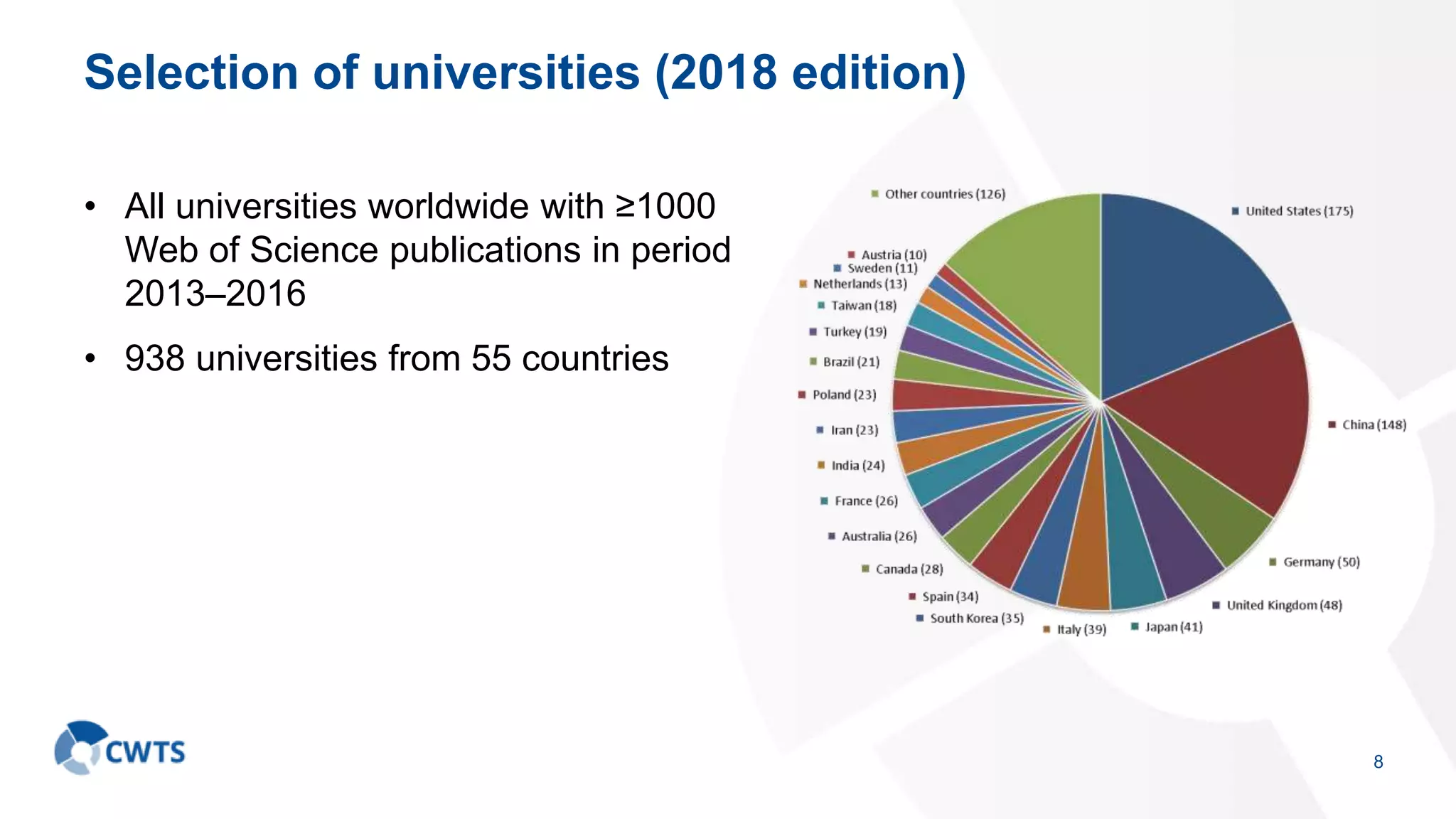

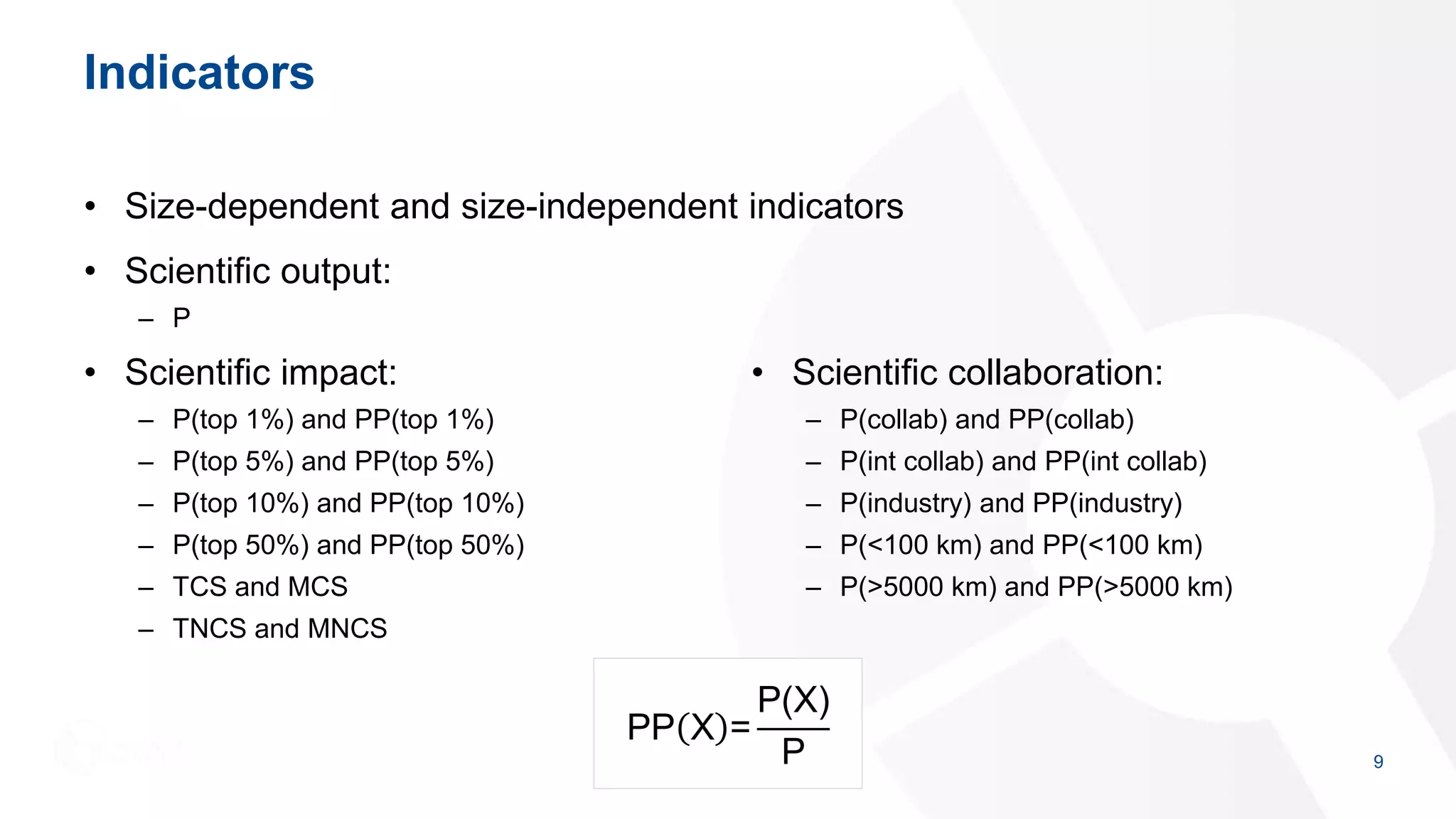

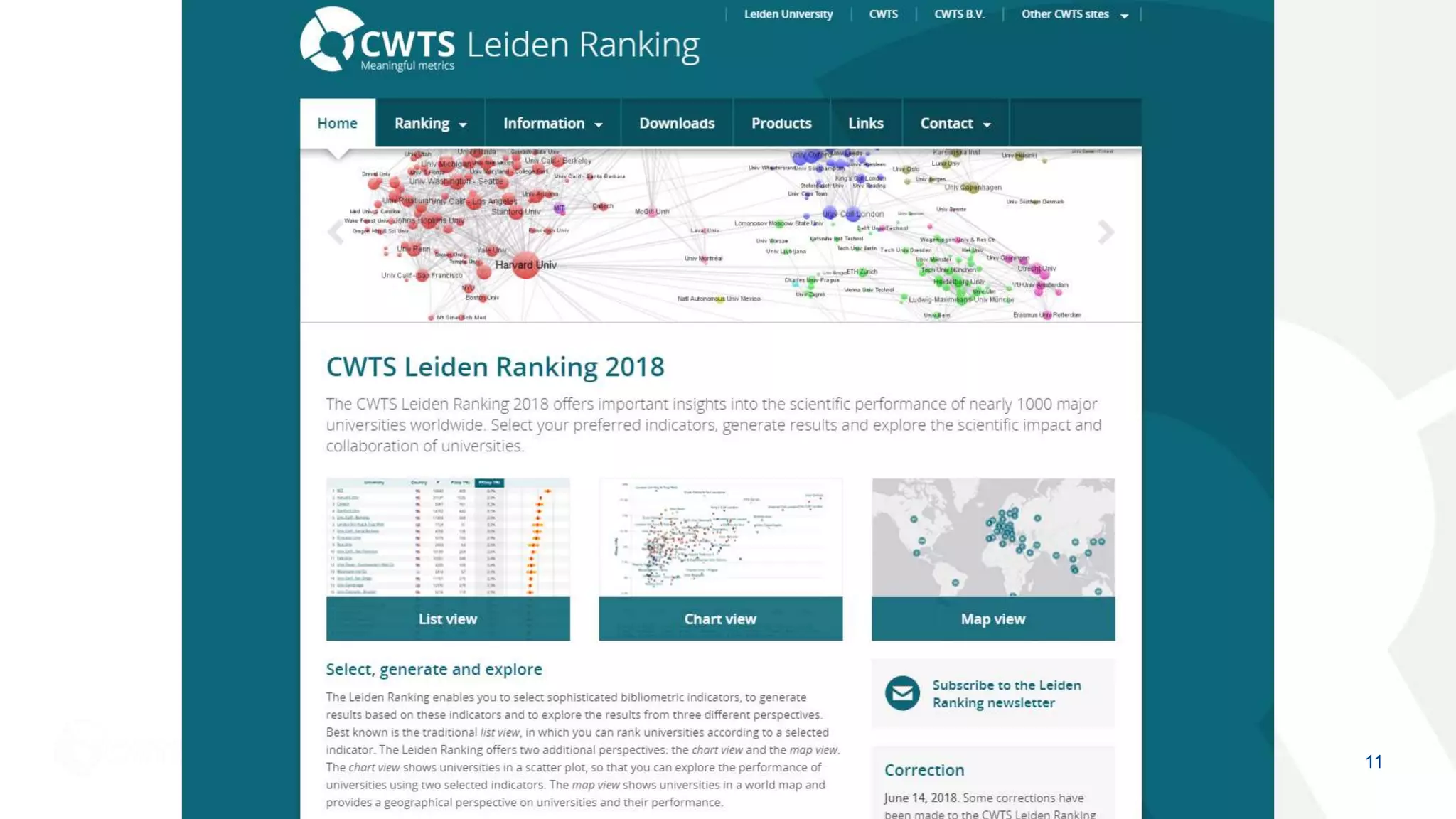

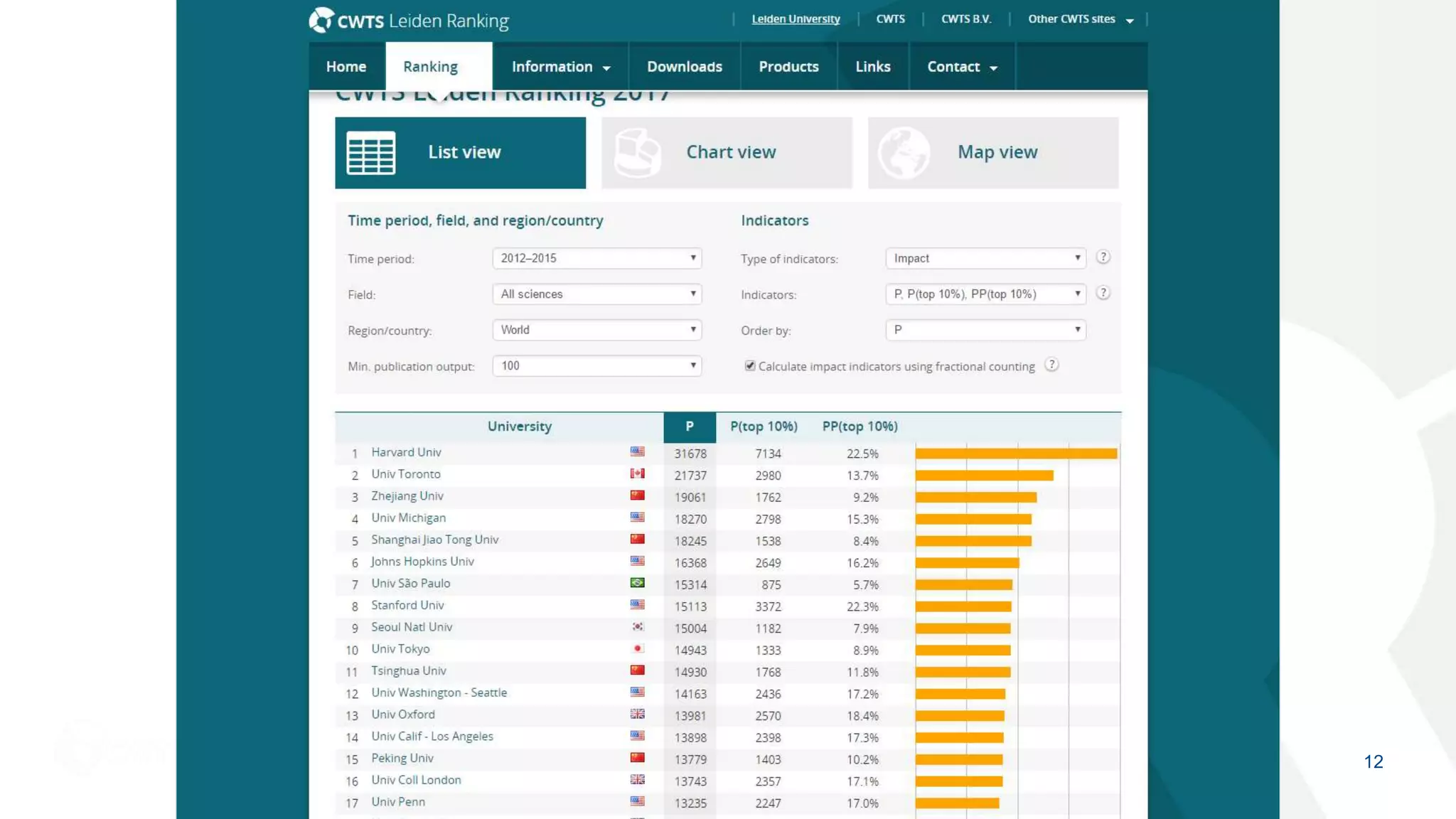

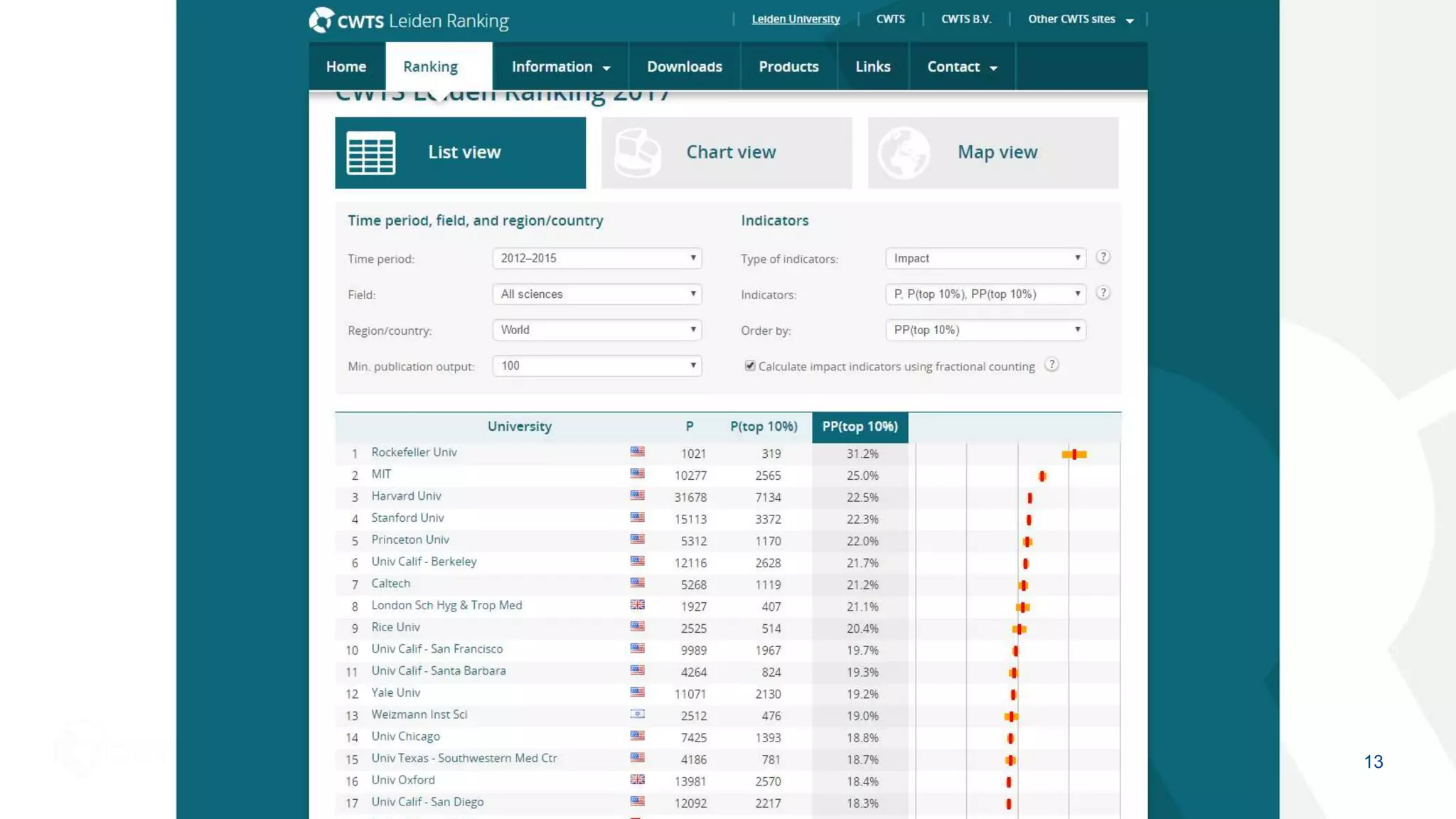

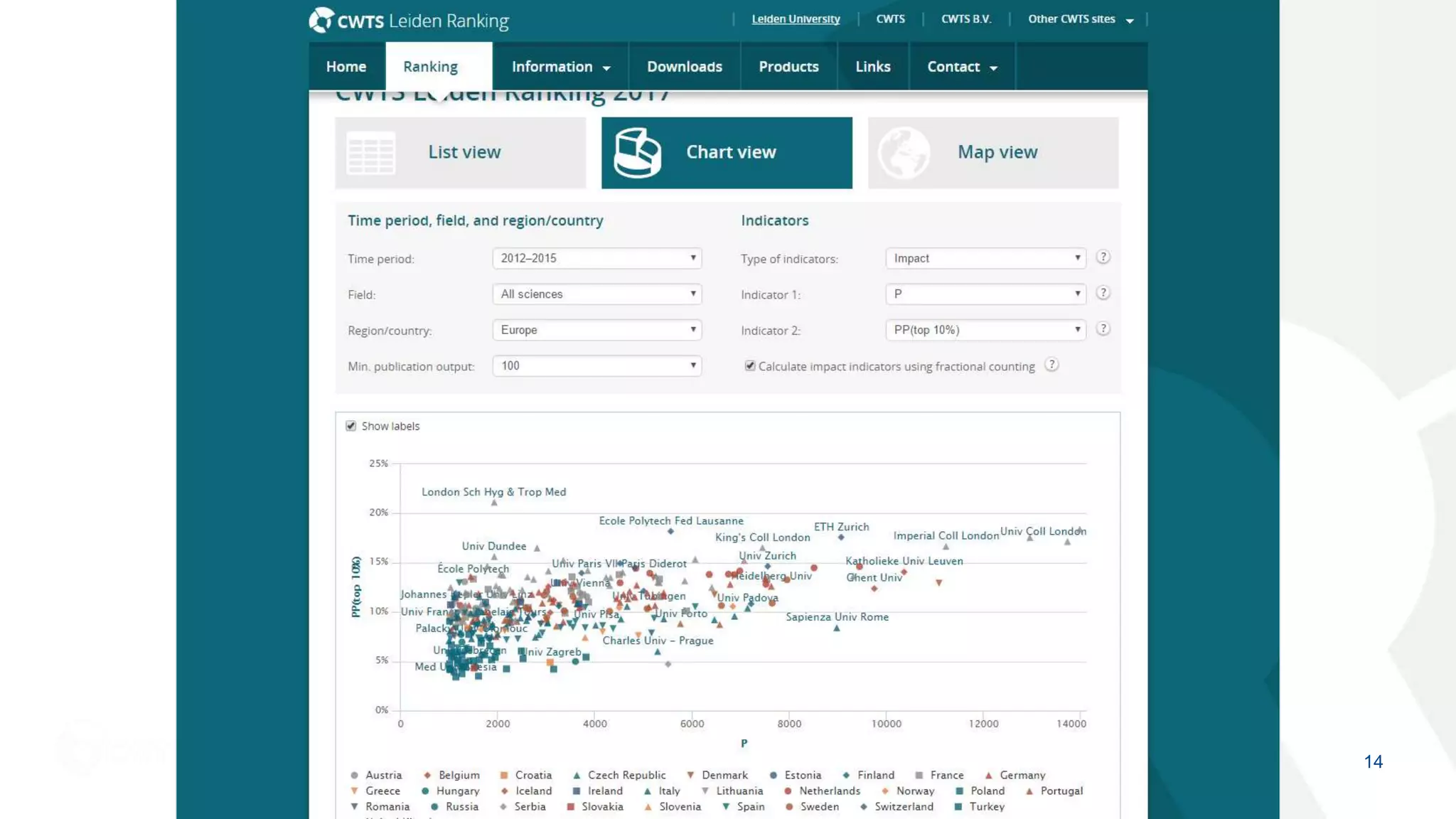

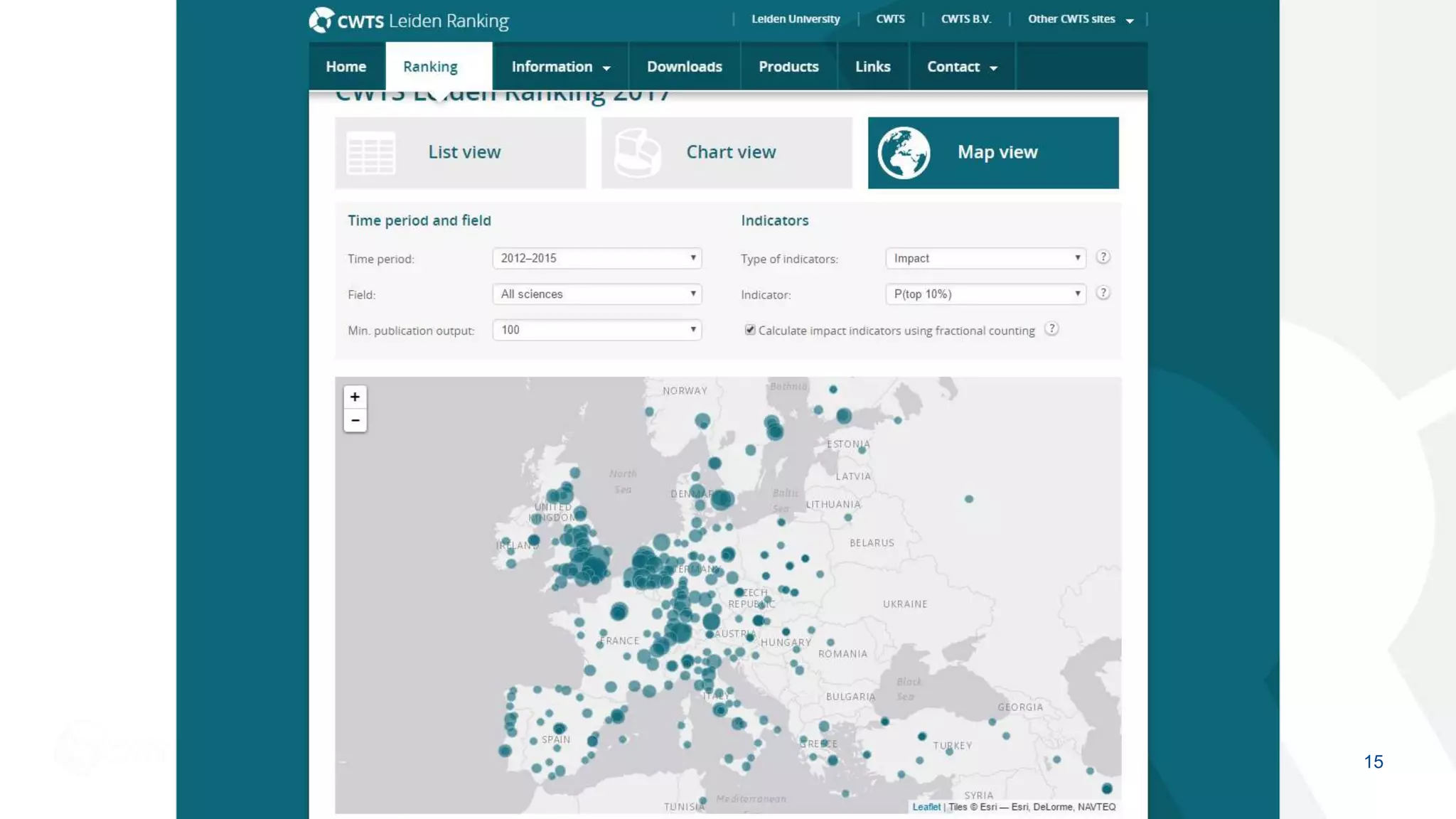

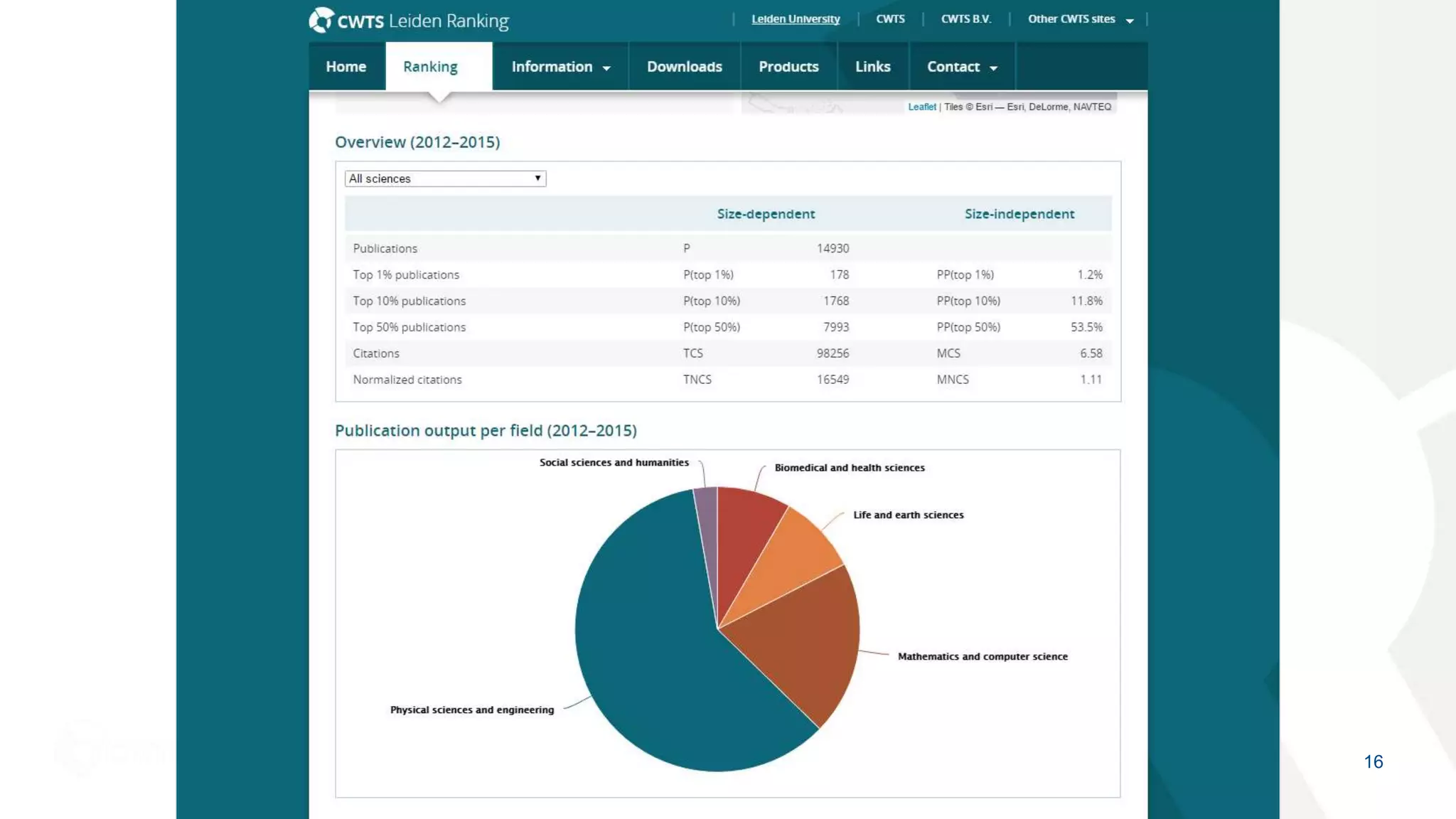

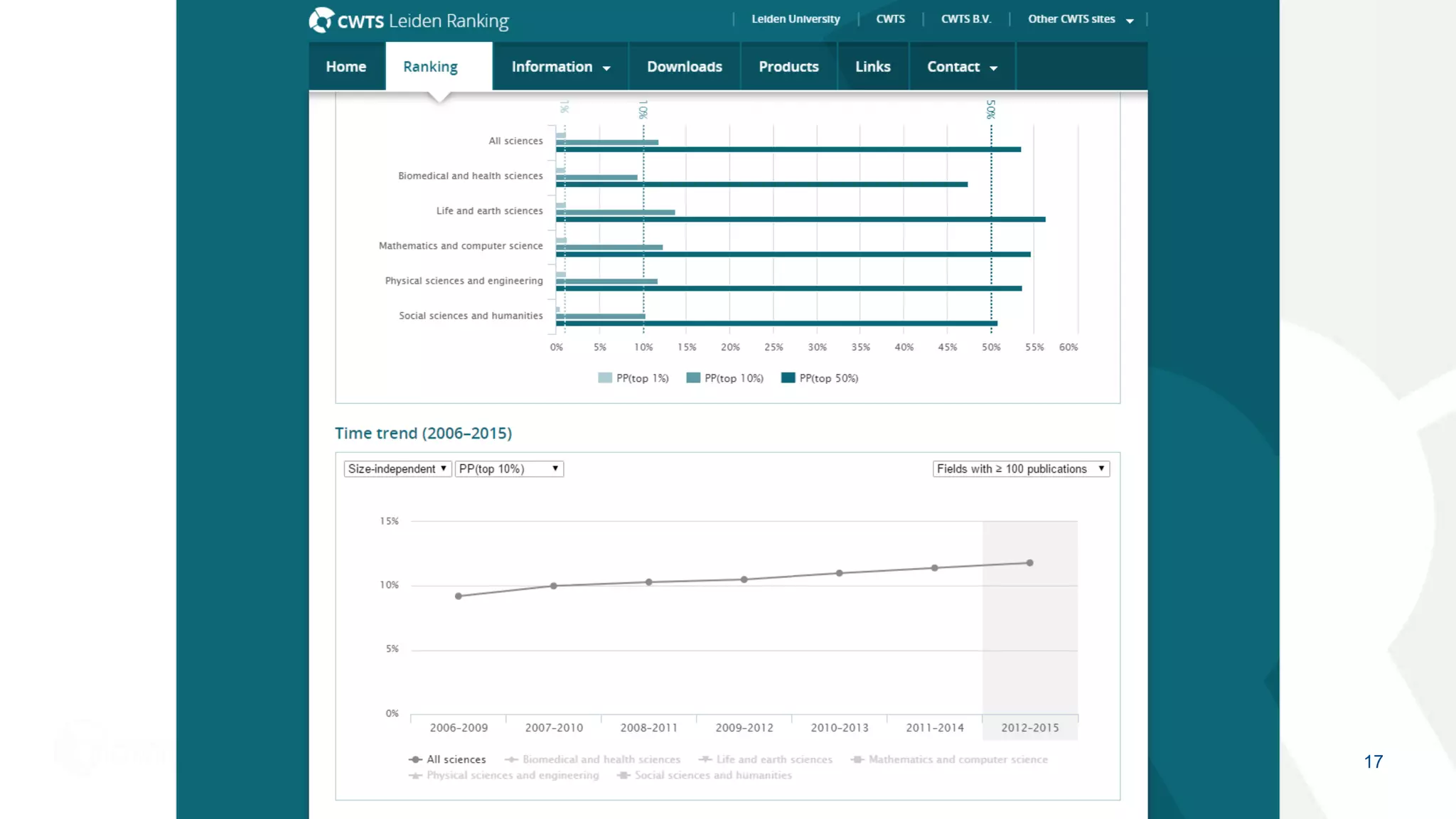

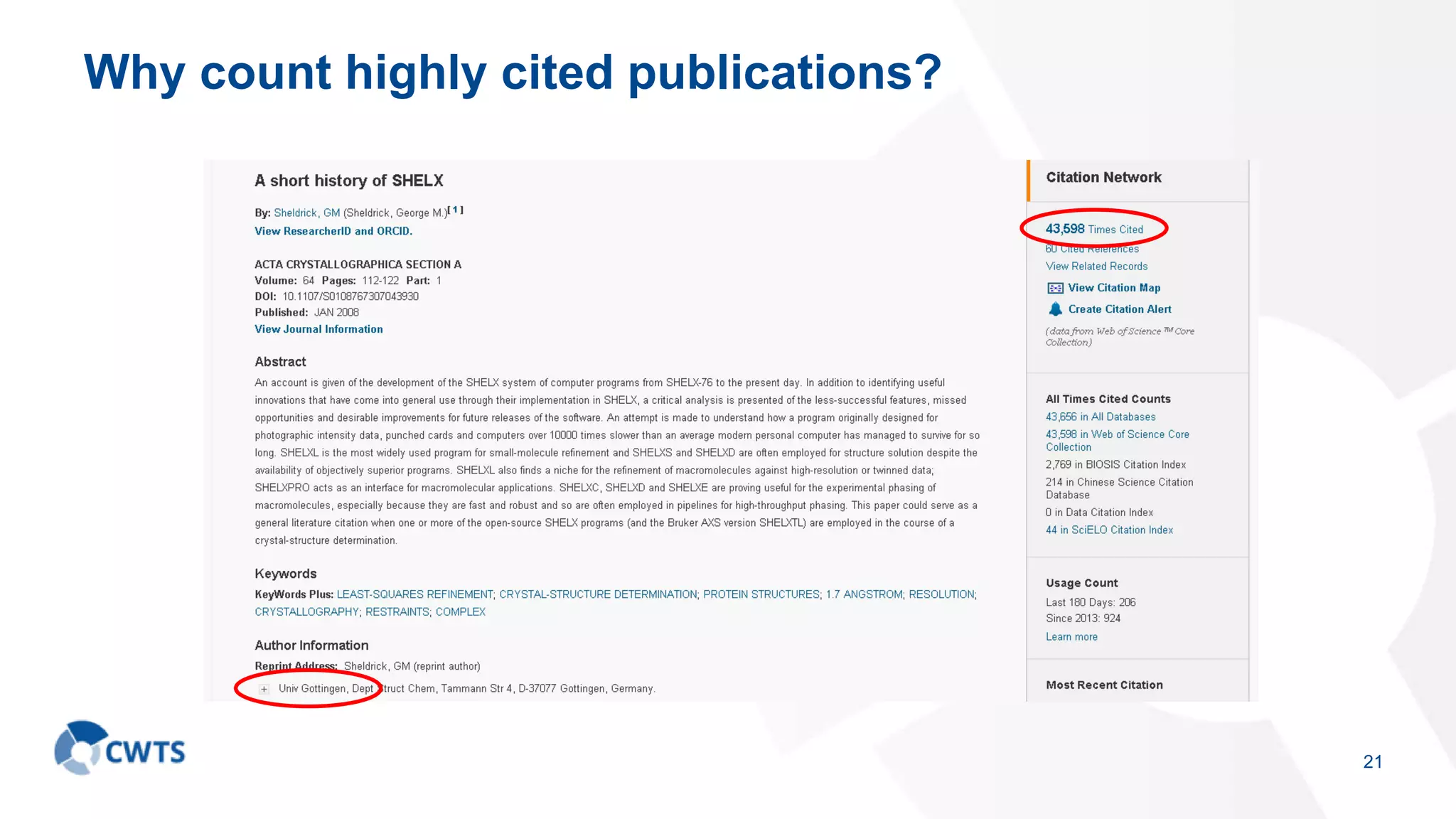

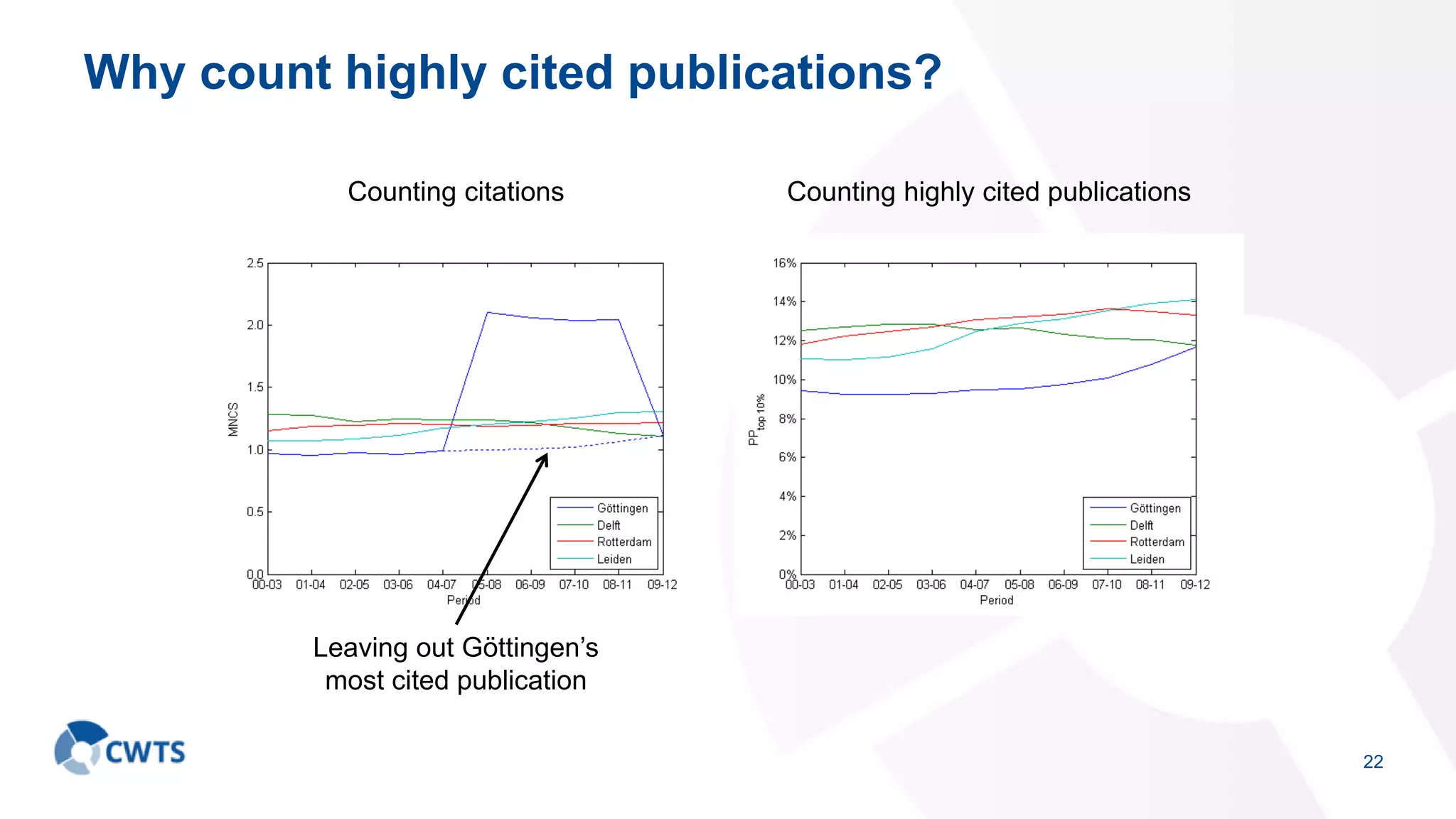

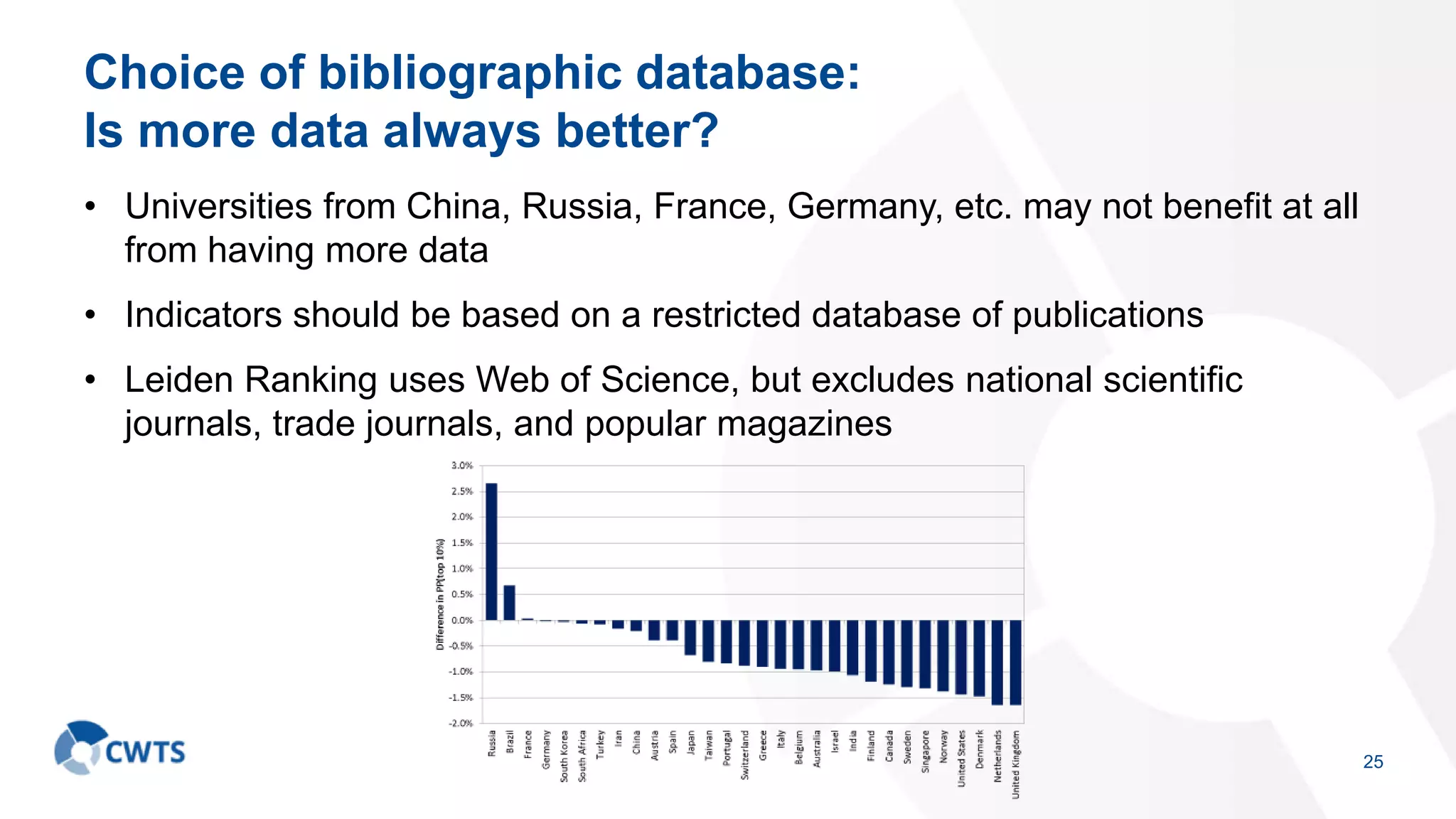

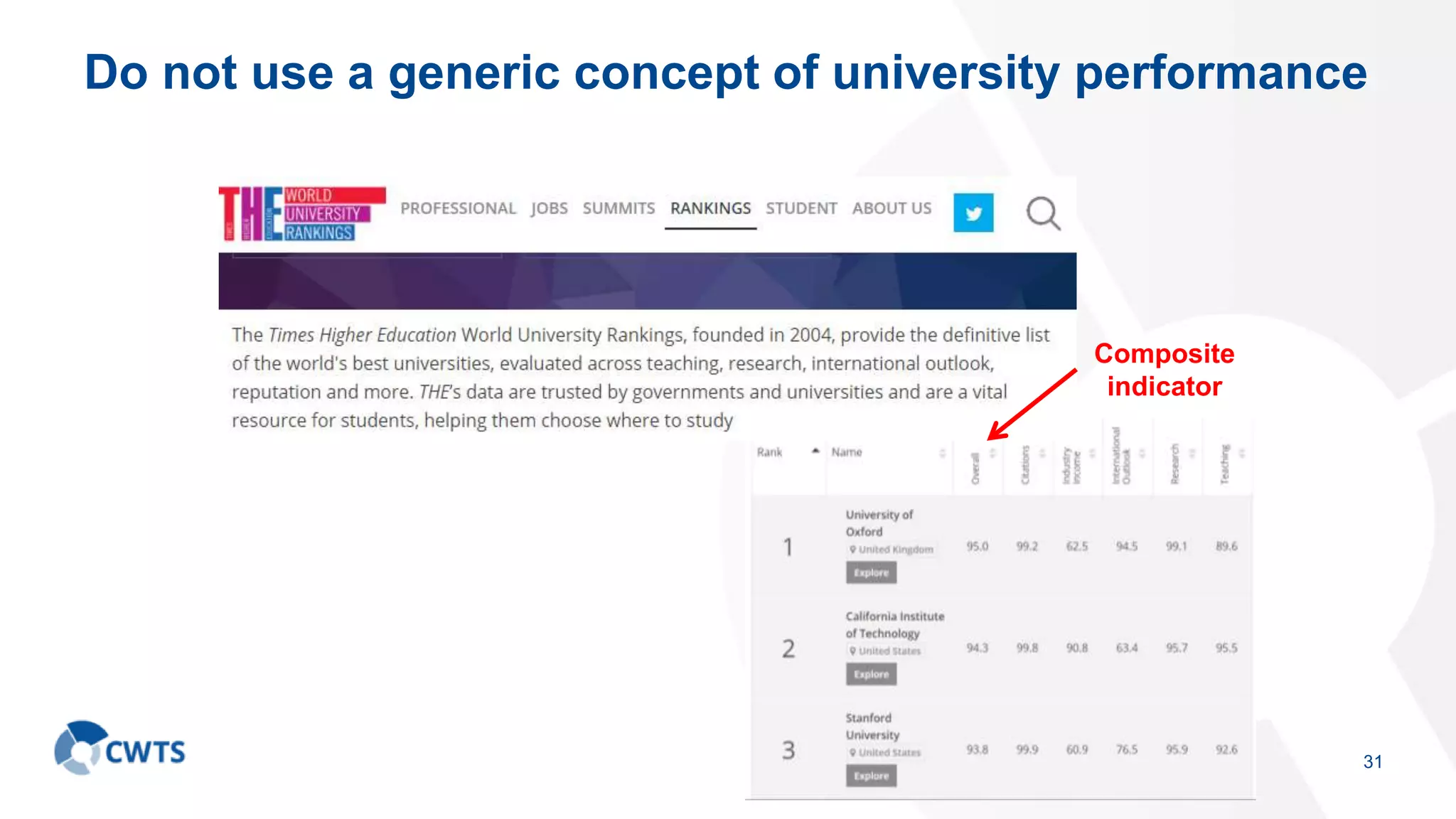

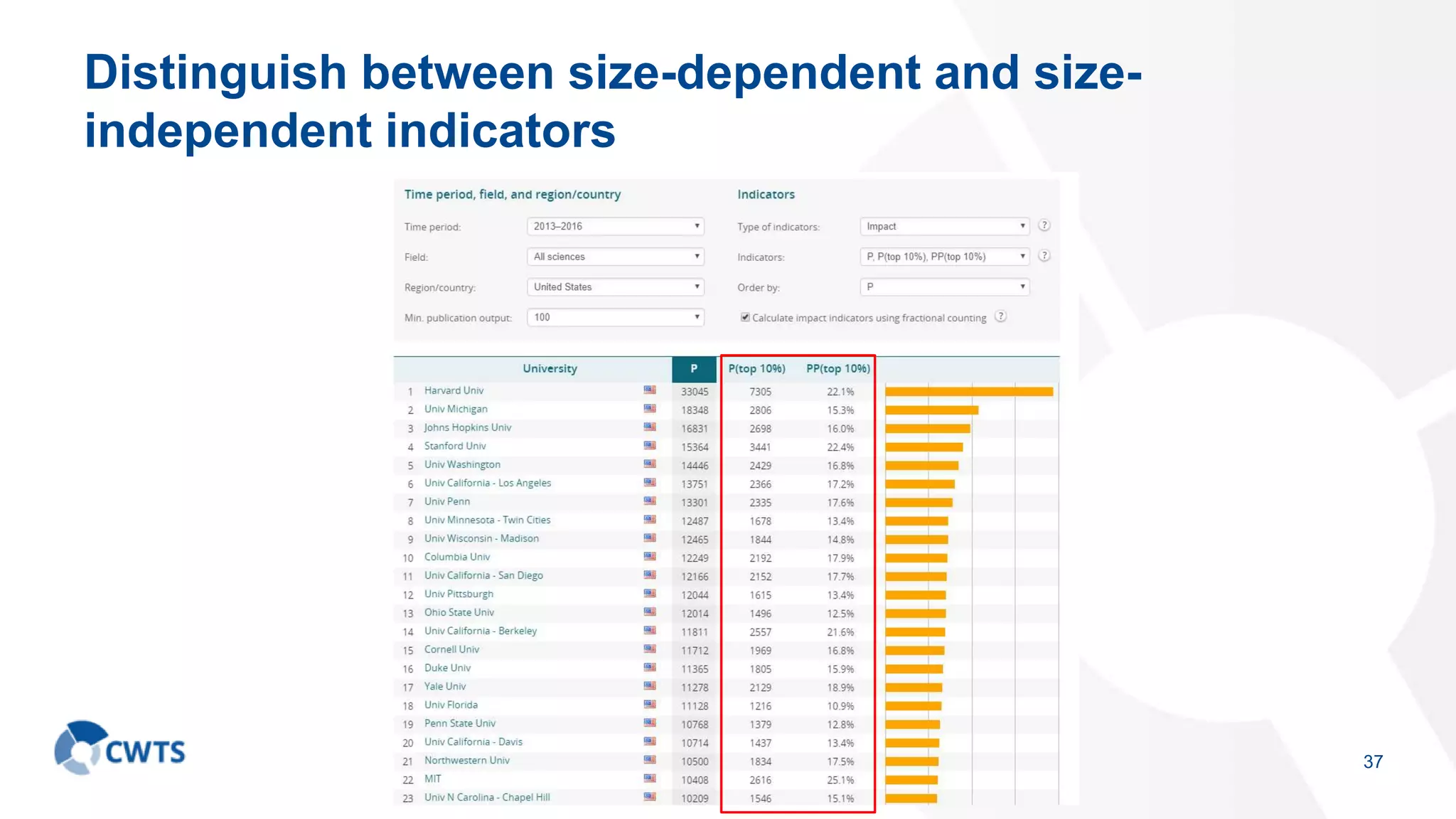

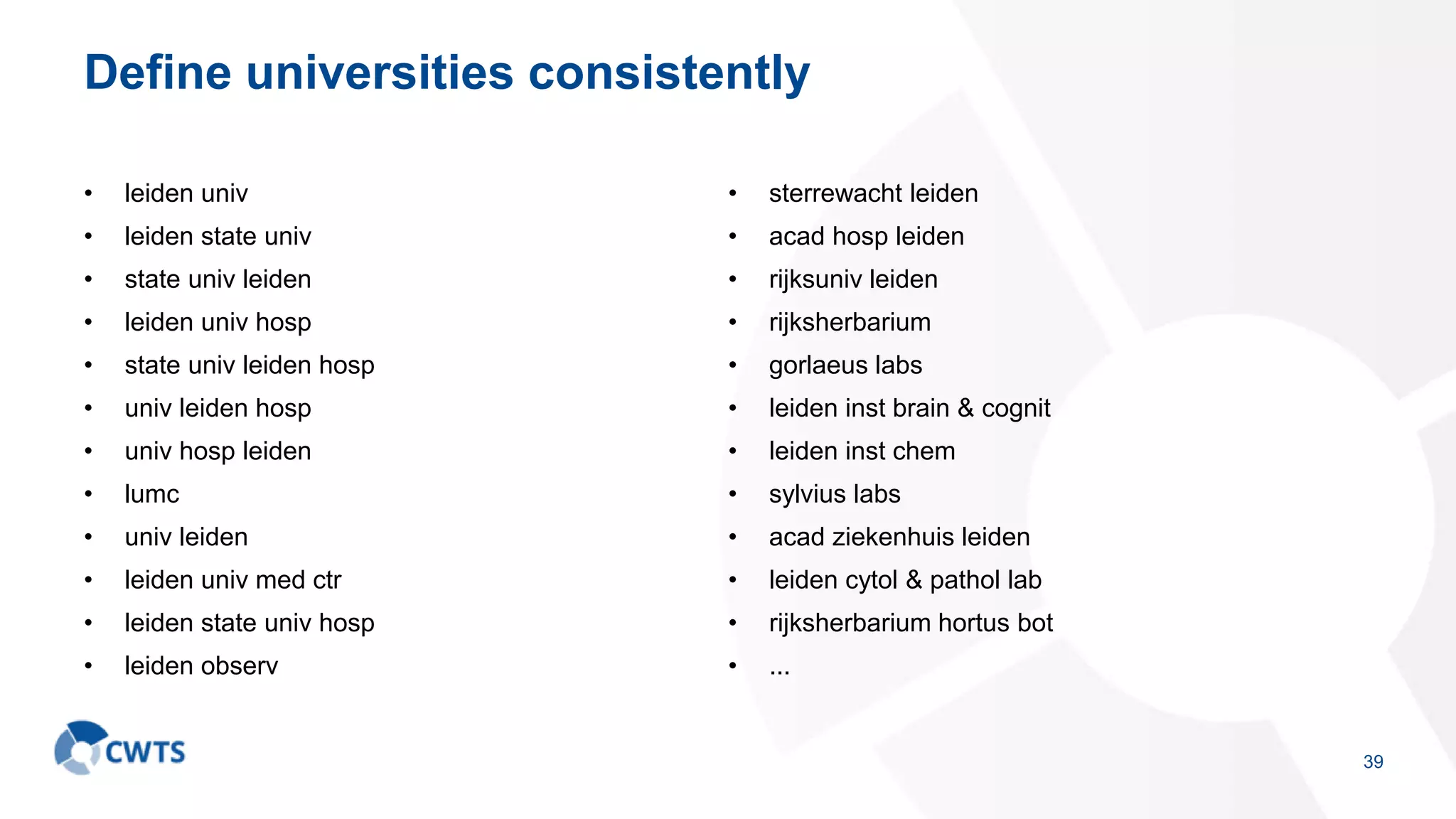

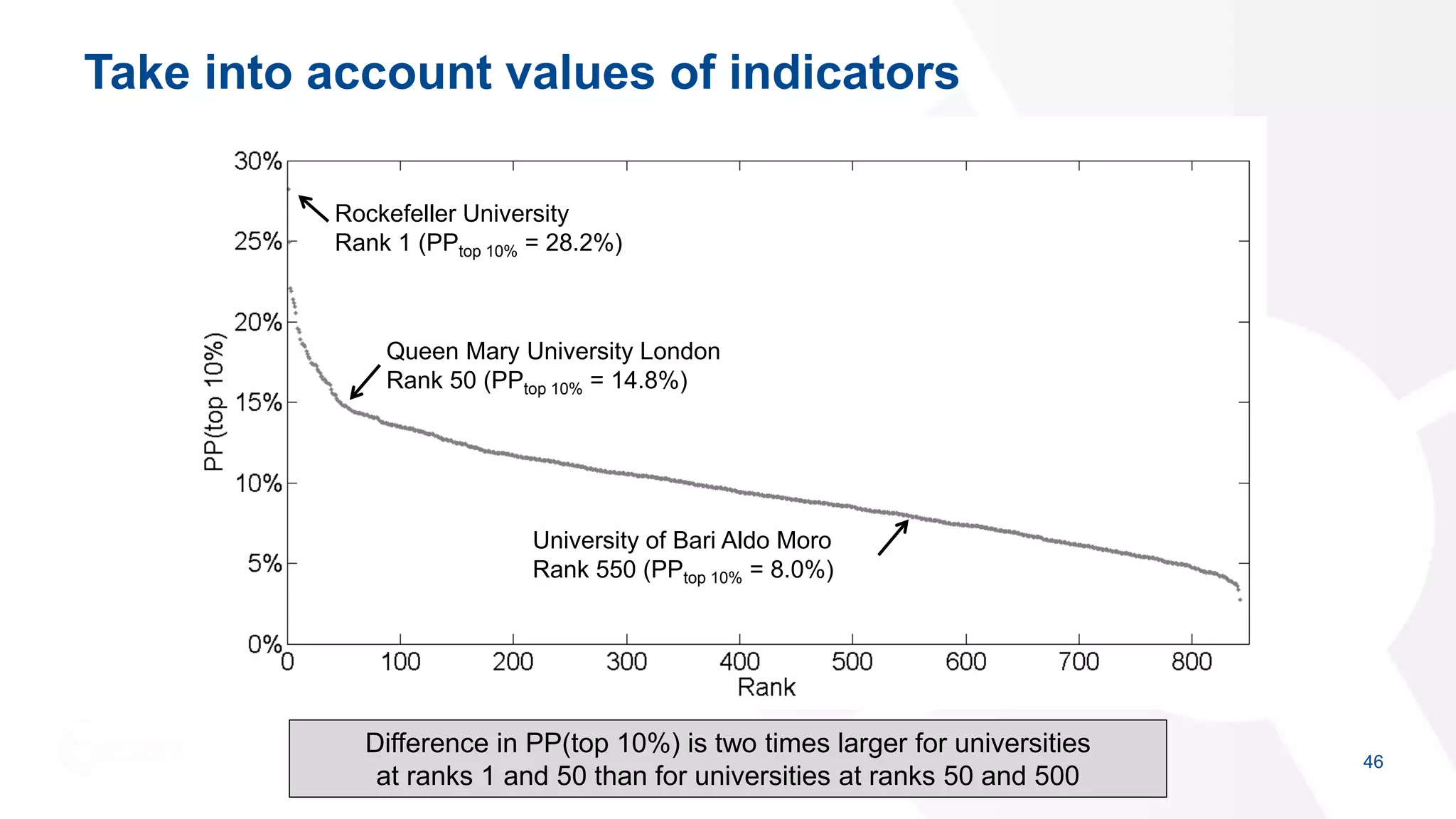

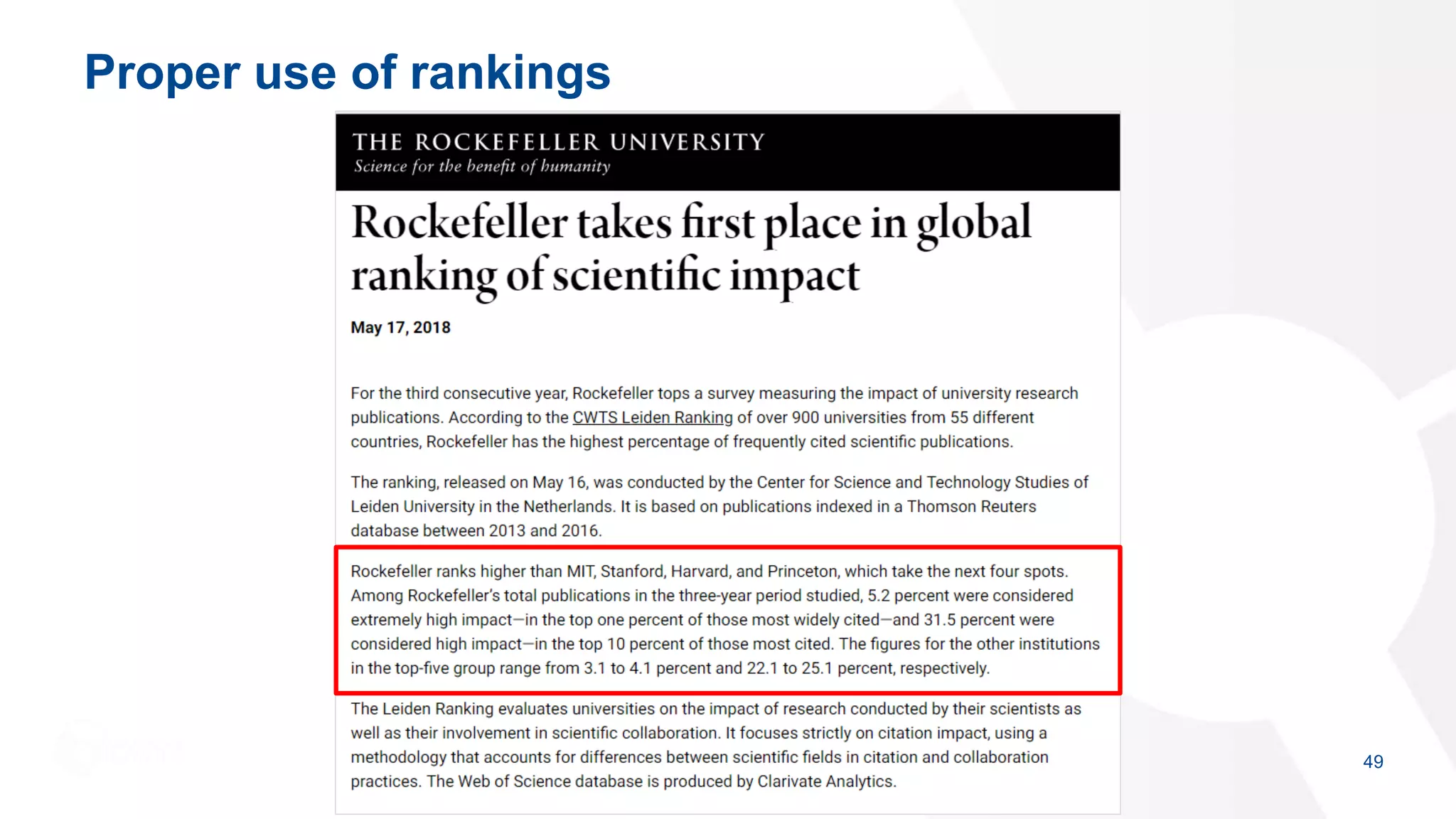

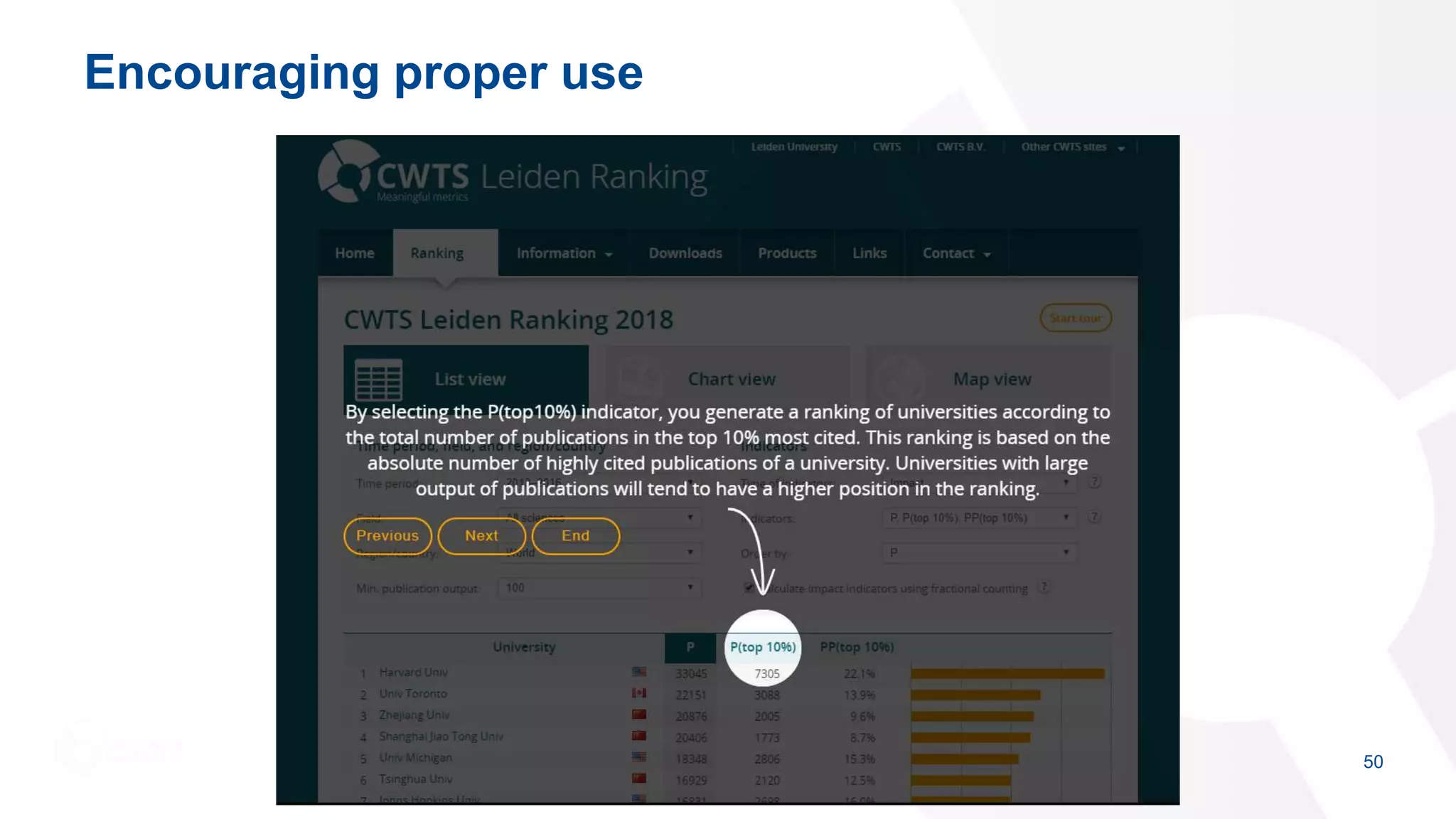

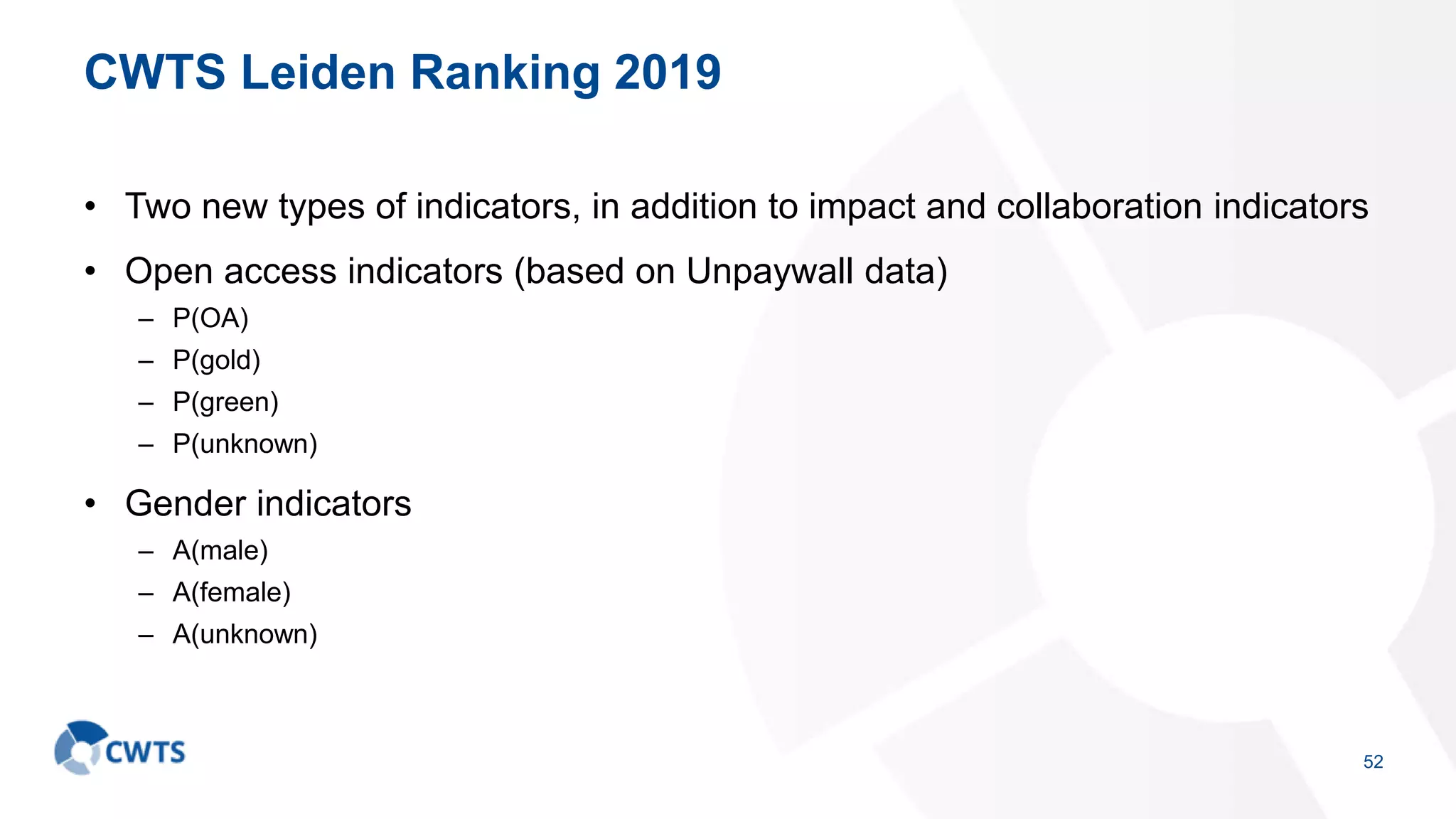

This document summarizes a presentation by Nees Jan van Eck of CWTS on the responsible use of university rankings, drawing on the methodology of the CWTS Leiden Ranking. The presentation outlines principles for the responsible design, interpretation, and use of rankings. It recommends transparent, field-normalized bibliometric indicators of research impact over composite scores. Comparisons between universities should account for differences in size and subject profile. A university's rank matters less than the underlying indicator values, and dimensions other than research performance also deserve consideration.
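
To make the idea of field normalization concrete, here is a minimal sketch in Python. It is not the Leiden Ranking's actual computation: the function name, the record layout, and the sample data are all hypothetical, and the reference set is simply the publications passed in, whereas a real ranking would normalize against a worldwide reference set per field and publication year. Each publication's citation count is divided by the average for its field, and the results are averaged per university.

```python
from collections import defaultdict


def field_normalized_scores(publications):
    """Return the mean field-normalized citation score per university.

    `publications` is a list of dicts with the (hypothetical) keys
    'university', 'field', and 'citations'.
    """
    # Sum citations and count publications per field (the reference set).
    totals = defaultdict(lambda: [0, 0])
    for pub in publications:
        totals[pub["field"]][0] += pub["citations"]
        totals[pub["field"]][1] += 1
    field_mean = {field: s / n for field, (s, n) in totals.items()}

    # Divide each publication's citations by its field average.
    per_university = defaultdict(list)
    for pub in publications:
        mean = field_mean[pub["field"]]
        # A field with no citations at all gives every paper a score of 0.
        score = pub["citations"] / mean if mean > 0 else 0.0
        per_university[pub["university"]].append(score)

    # Averaging makes the indicator size-independent.
    return {u: sum(v) / len(v) for u, v in per_university.items()}


# Illustrative data only: a low-citation field and a high-citation field.
sample = [
    {"university": "A", "field": "mathematics", "citations": 2},
    {"university": "A", "field": "mathematics", "citations": 4},
    {"university": "B", "field": "biology", "citations": 20},
    {"university": "B", "field": "biology", "citations": 40},
]
scores = field_normalized_scores(sample)
```

In this toy data, university B has ten times the raw citations of university A, yet both score about 1.0 after normalization, because each performs exactly at the average of its own field. Taking the mean rather than the sum also keeps a large university from outscoring a small one on volume alone.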