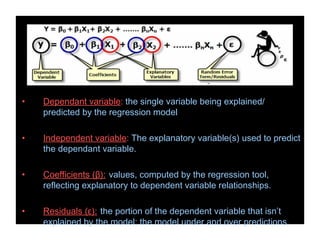

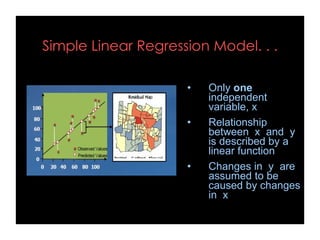

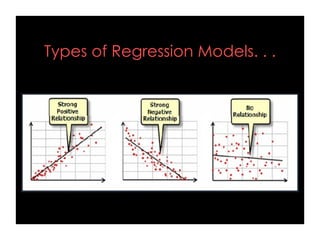

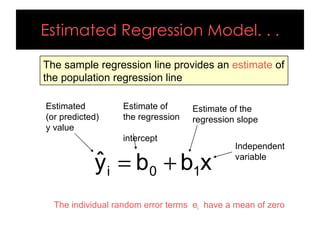

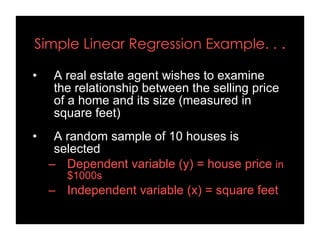

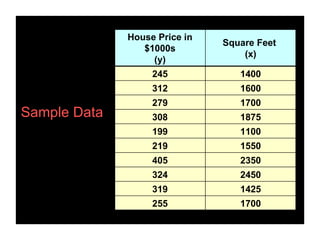

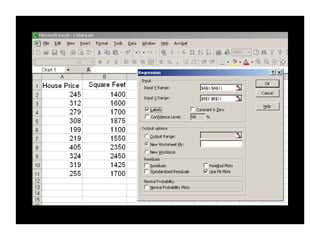

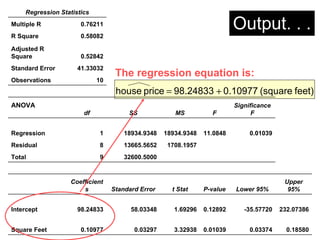

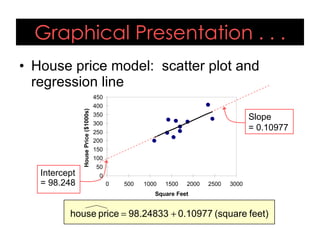

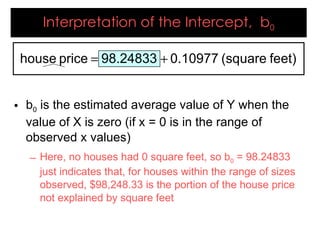

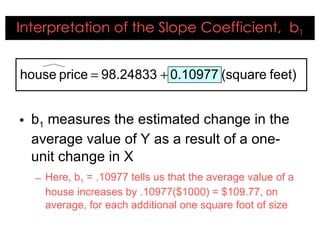

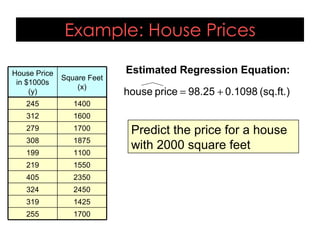

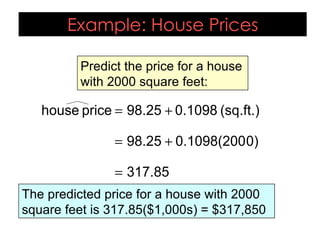

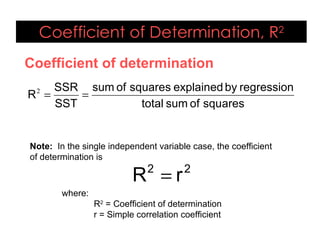

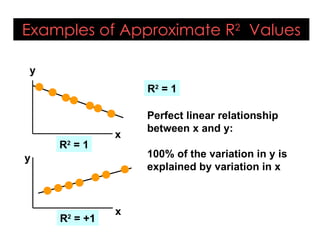

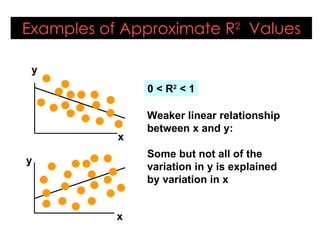

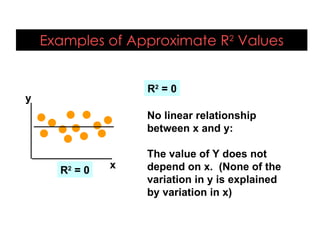

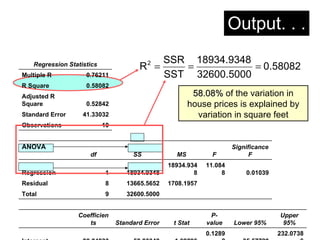

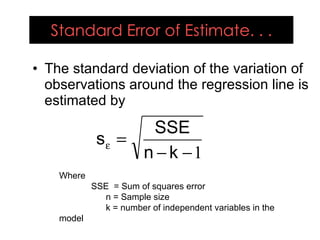

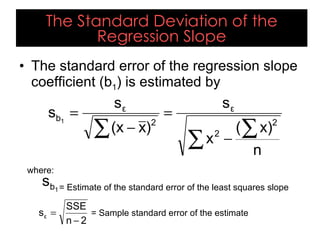

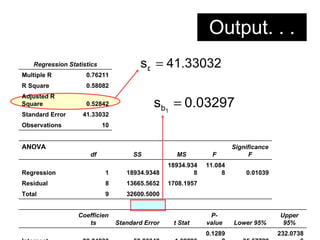

This document provides an overview of regression analysis and its applications in business. It defines regression analysis as the study of relationships between variables, in which a dependent variable is explained or predicted by one or more independent variables. Simple linear regression uses a single independent variable, while multiple regression uses two or more. The document outlines key regression concepts such as coefficients, residuals, and linear versus nonlinear relationships, and illustrates a simple linear regression model with an example relating house prices to square footage. It also explains the key regression outputs: the estimated equation, R-squared, the standard error, and the significance of the coefficients.
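To make the house-price example concrete, here is a minimal sketch of a simple linear regression fitted by ordinary least squares. The function name `simple_linear_regression`, the variable names, and the data values are assumptions made up for illustration; they are not taken from the slides. The sketch reports the outputs mentioned above: the fitted equation, R-squared, the standard error of the regression, and a t-statistic for the slope as a rough indicator of coefficient significance.

```python
import math


def simple_linear_regression(x, y):
    """Fit y = intercept + slope * x by ordinary least squares."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n

    # Sums of squares and cross-products around the means.
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))

    slope = sxy / sxx
    intercept = y_mean - slope * x_mean

    # Residuals: observed value minus fitted value.
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    sse = sum(e ** 2 for e in residuals)        # unexplained variation
    sst = sum((yi - y_mean) ** 2 for yi in y)   # total variation

    r_squared = 1 - sse / sst
    std_error = math.sqrt(sse / (n - 2))        # standard error of the regression
    slope_se = std_error / math.sqrt(sxx)       # standard error of the slope
    # Guard against a perfect fit, where the slope's standard error is zero.
    t_stat = slope / slope_se if slope_se > 0 else math.inf

    return {
        "slope": slope,
        "intercept": intercept,
        "residuals": residuals,
        "r_squared": r_squared,
        "std_error": std_error,
        "slope_t_stat": t_stat,
    }


# Hypothetical data: house size in square feet and price in thousands of dollars.
square_feet = [1100, 1400, 1600, 1900, 2300, 2500]
price_thousands = [199, 245, 279, 308, 375, 405]

fit = simple_linear_regression(square_feet, price_thousands)
print(f"price = {fit['intercept']:.2f} + {fit['slope']:.4f} * square_feet")
print(f"R-squared: {fit['r_squared']:.4f}")
print(f"Standard error: {fit['std_error']:.2f}")
print(f"Slope t-statistic: {fit['slope_t_stat']:.2f}")
```

A large absolute t-statistic suggests that the slope differs from zero. Turning it into a p-value requires the t distribution with n - 2 degrees of freedom, which this sketch leaves out to stay within the standard library.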

![Regression Analysis and Its Application in Business, by M. Ravishankar](https://image.slidesharecdn.com/regressionanalysis-110723130213-phpapp02-230915190237-7d28a360/75/regressionanalysis-110723130213-phpapp02-pdf-1-2048.jpg)