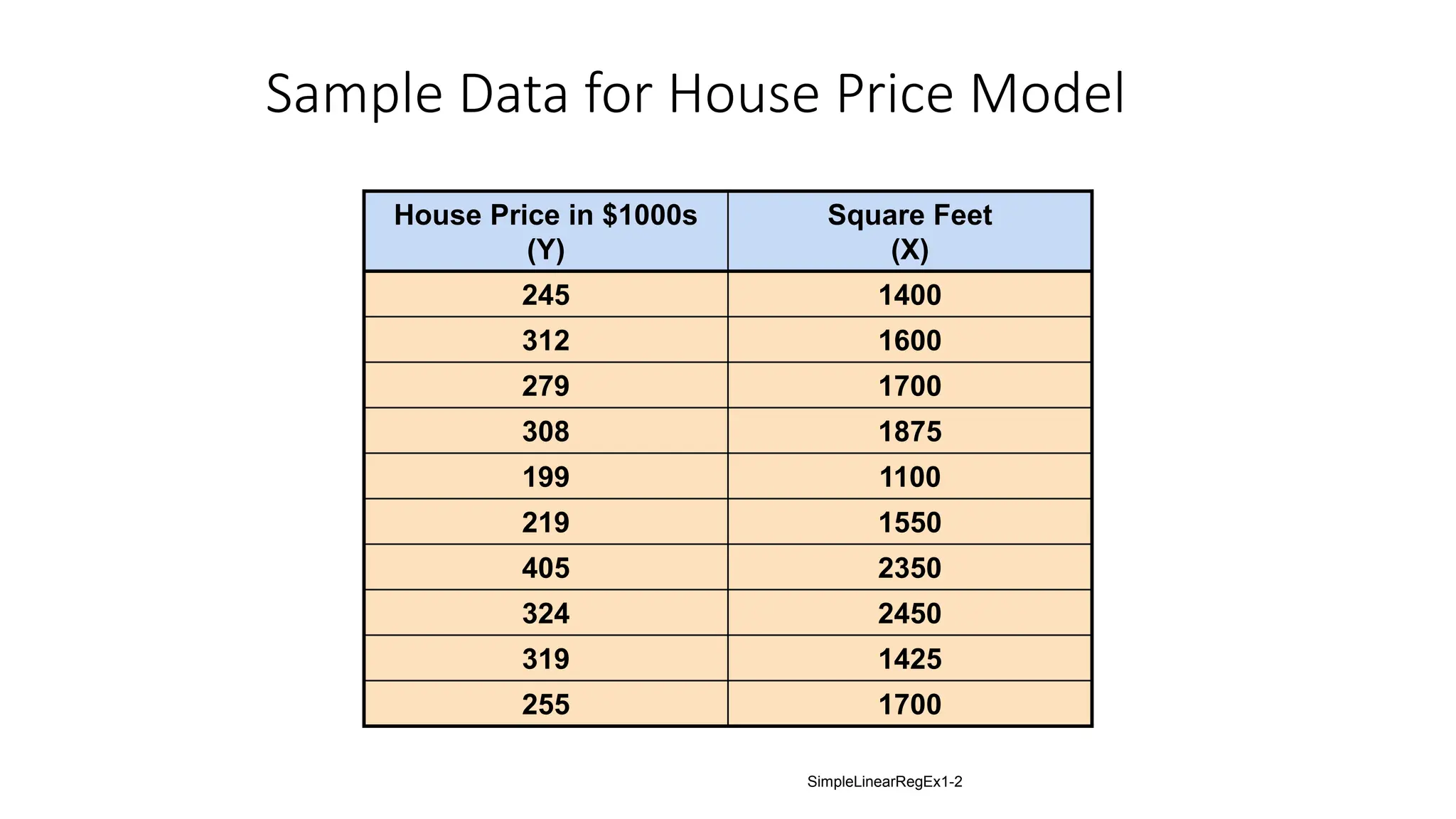

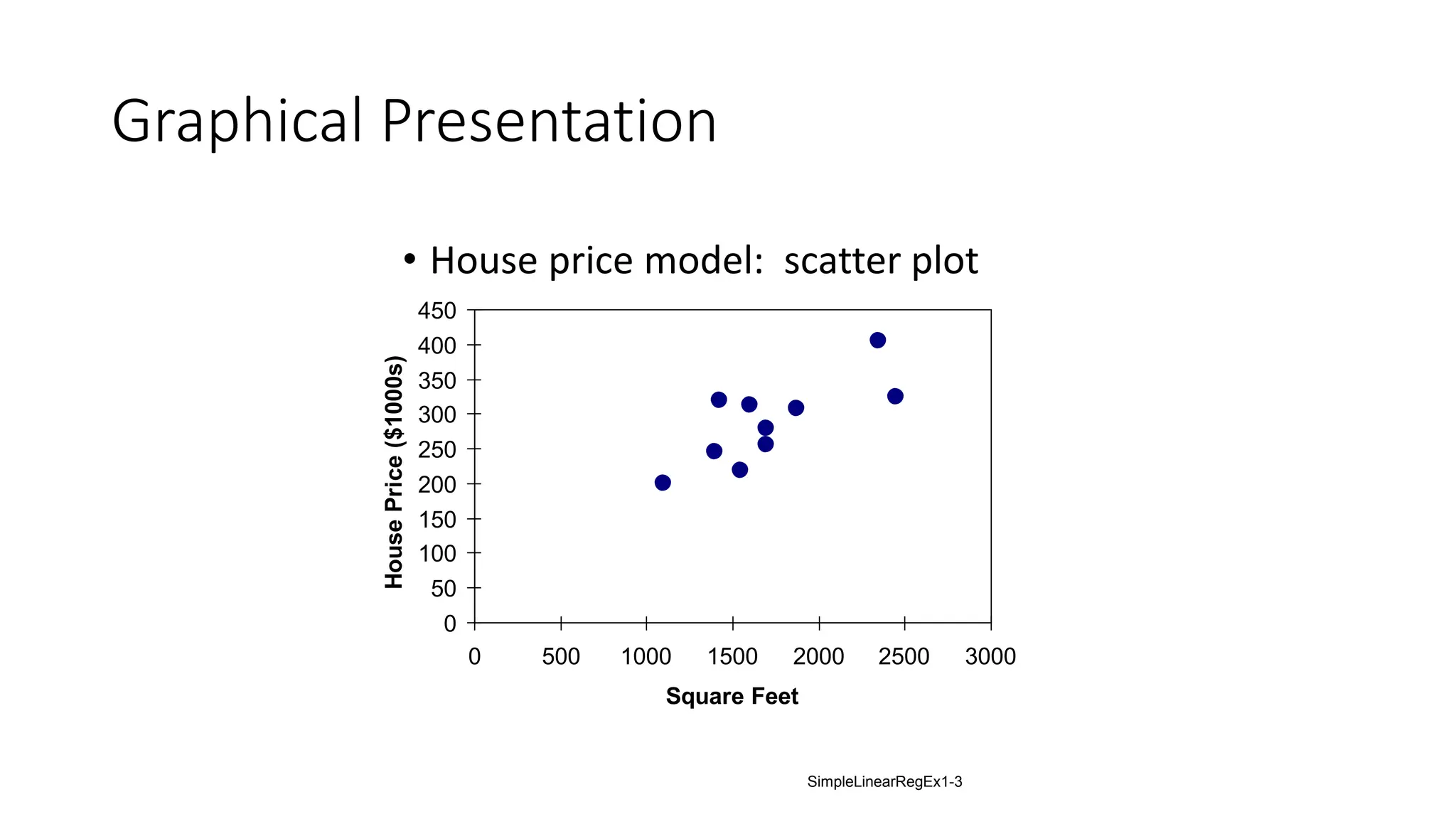

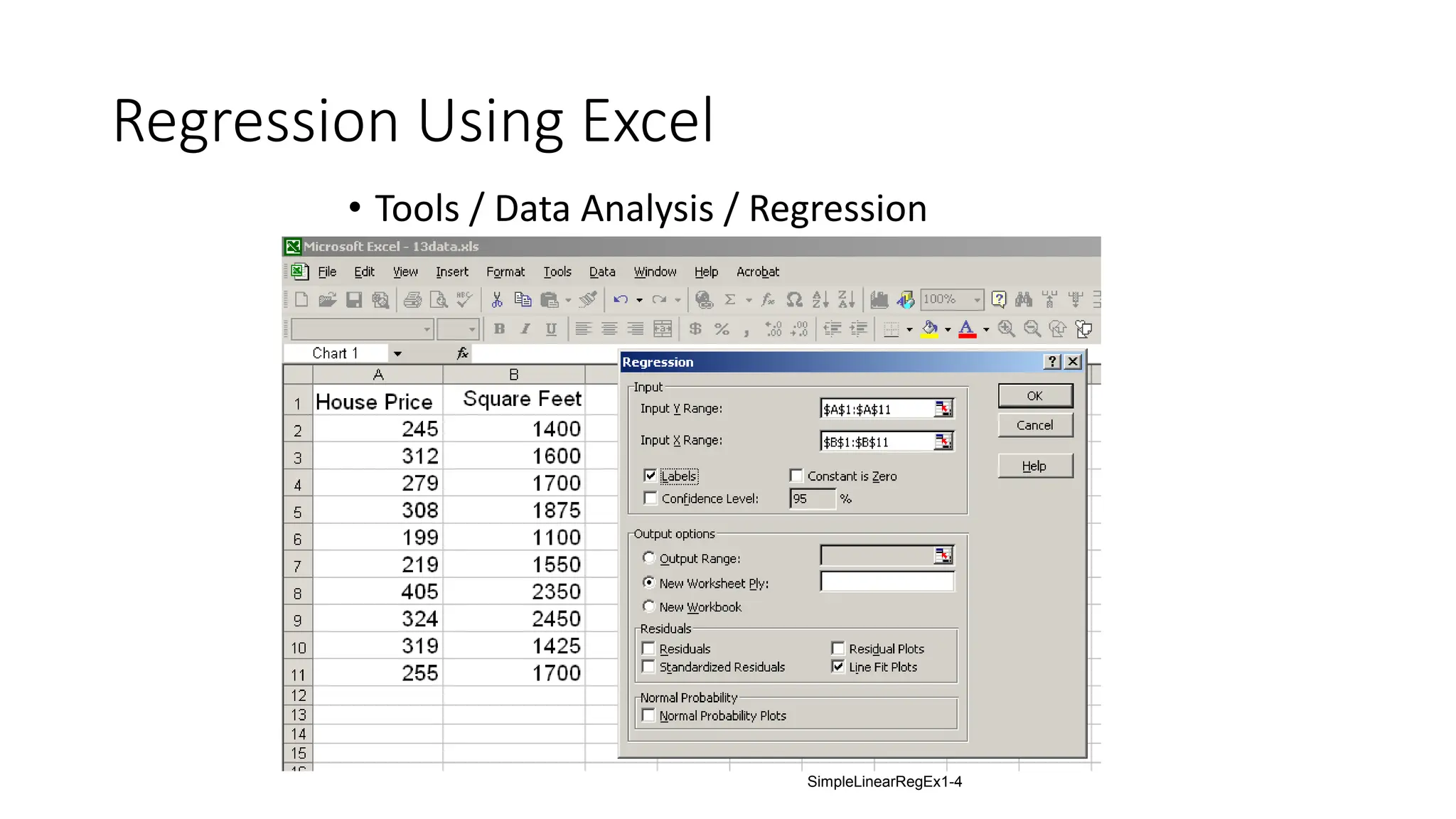

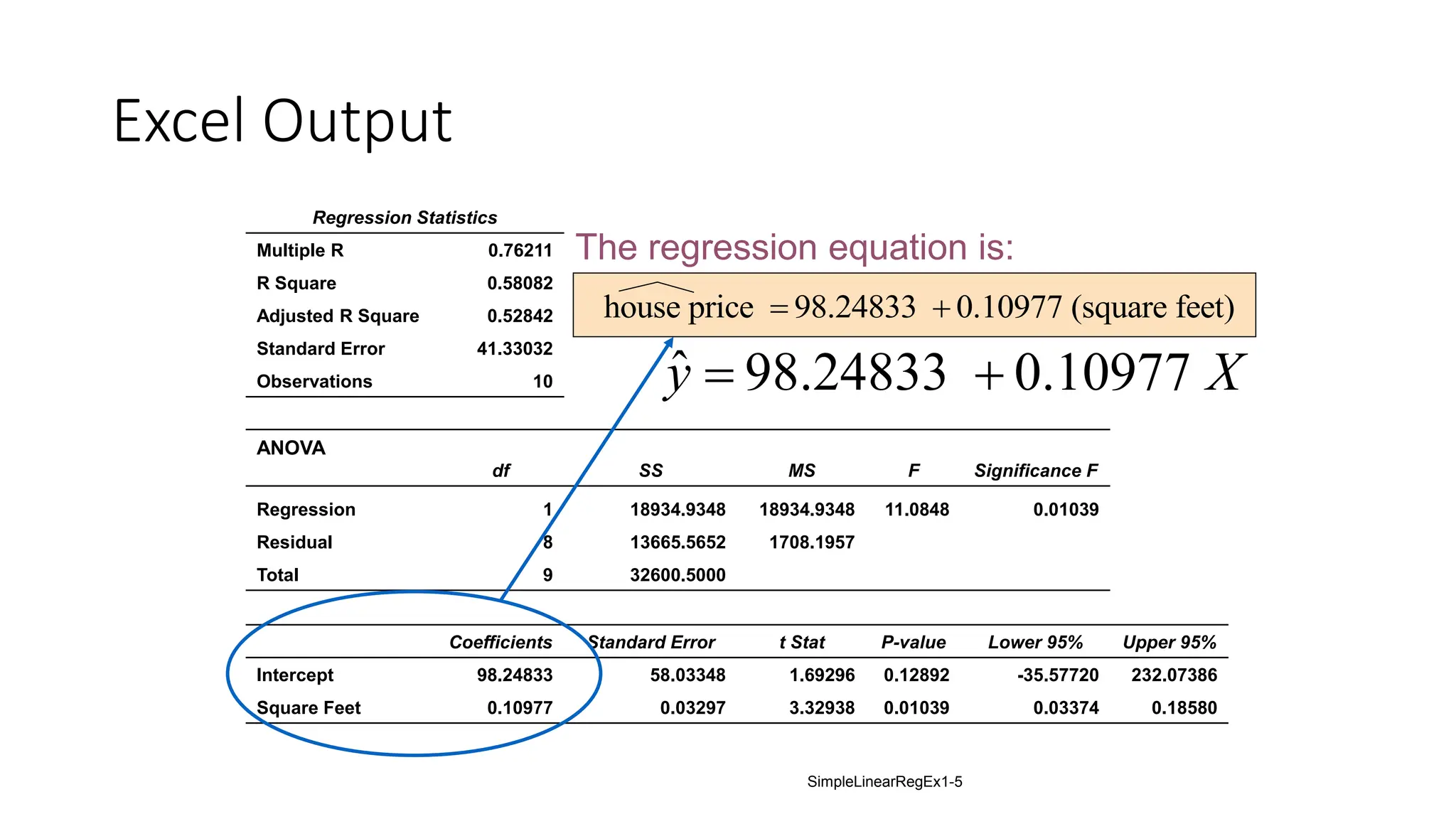

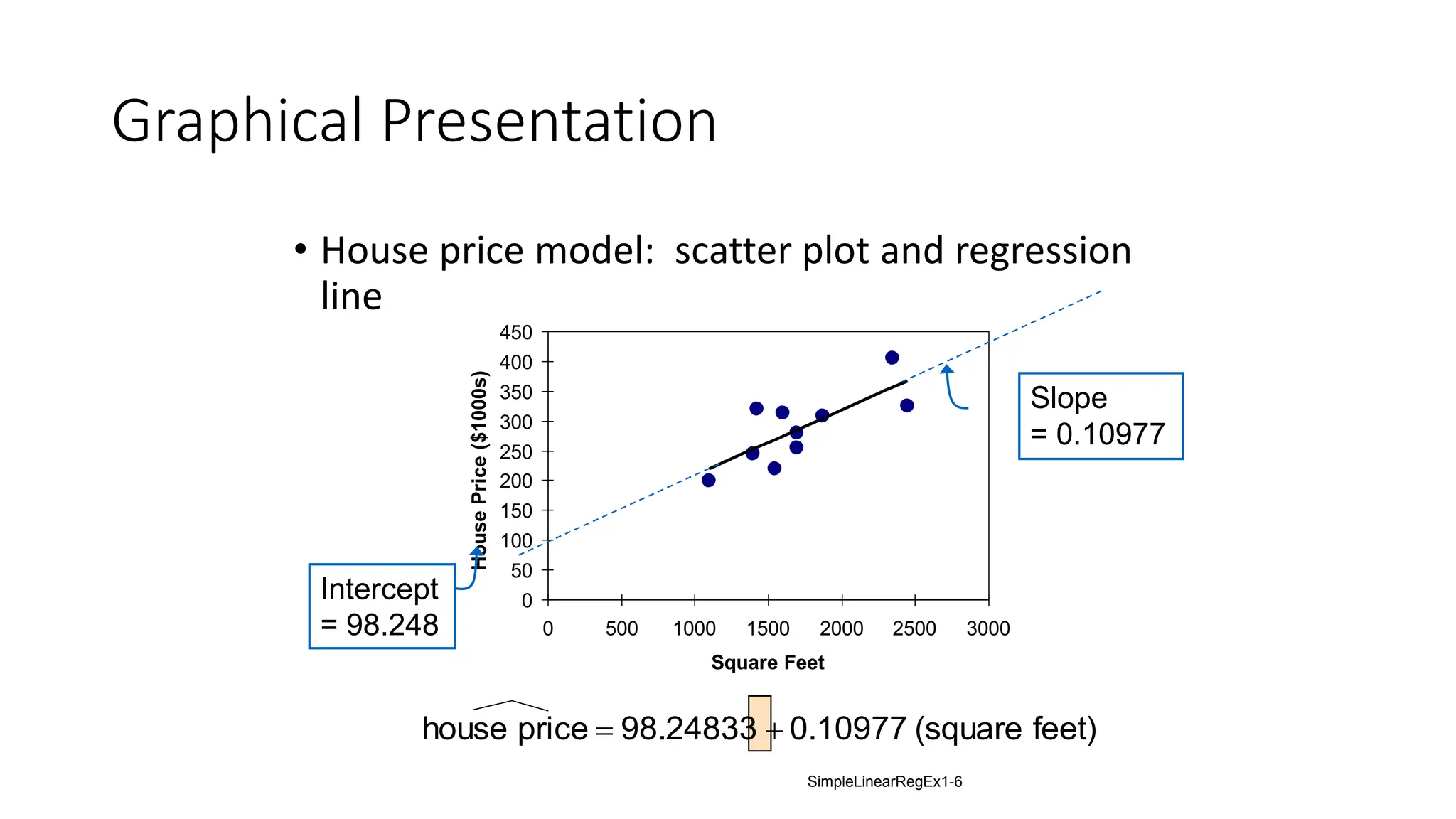

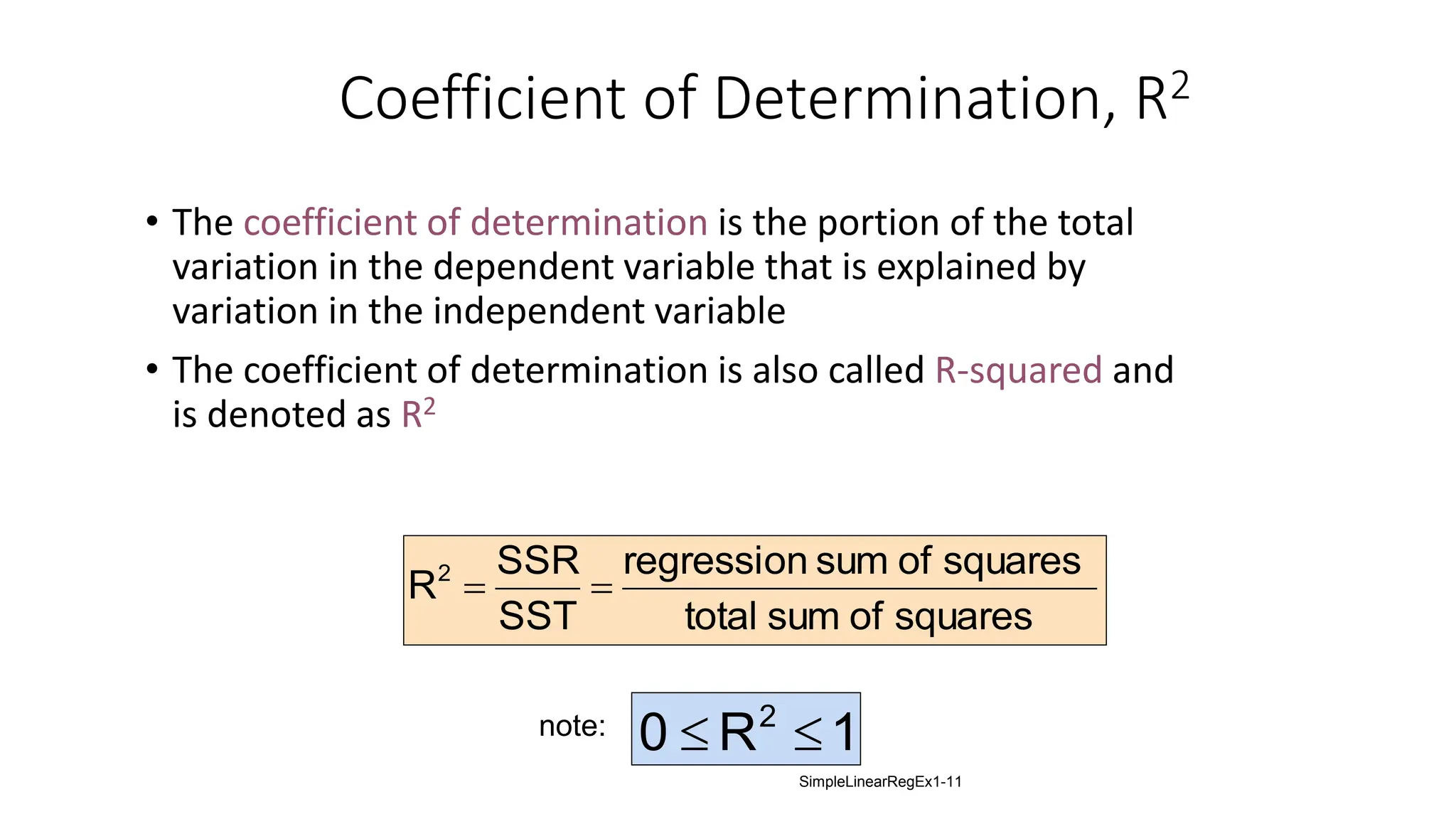

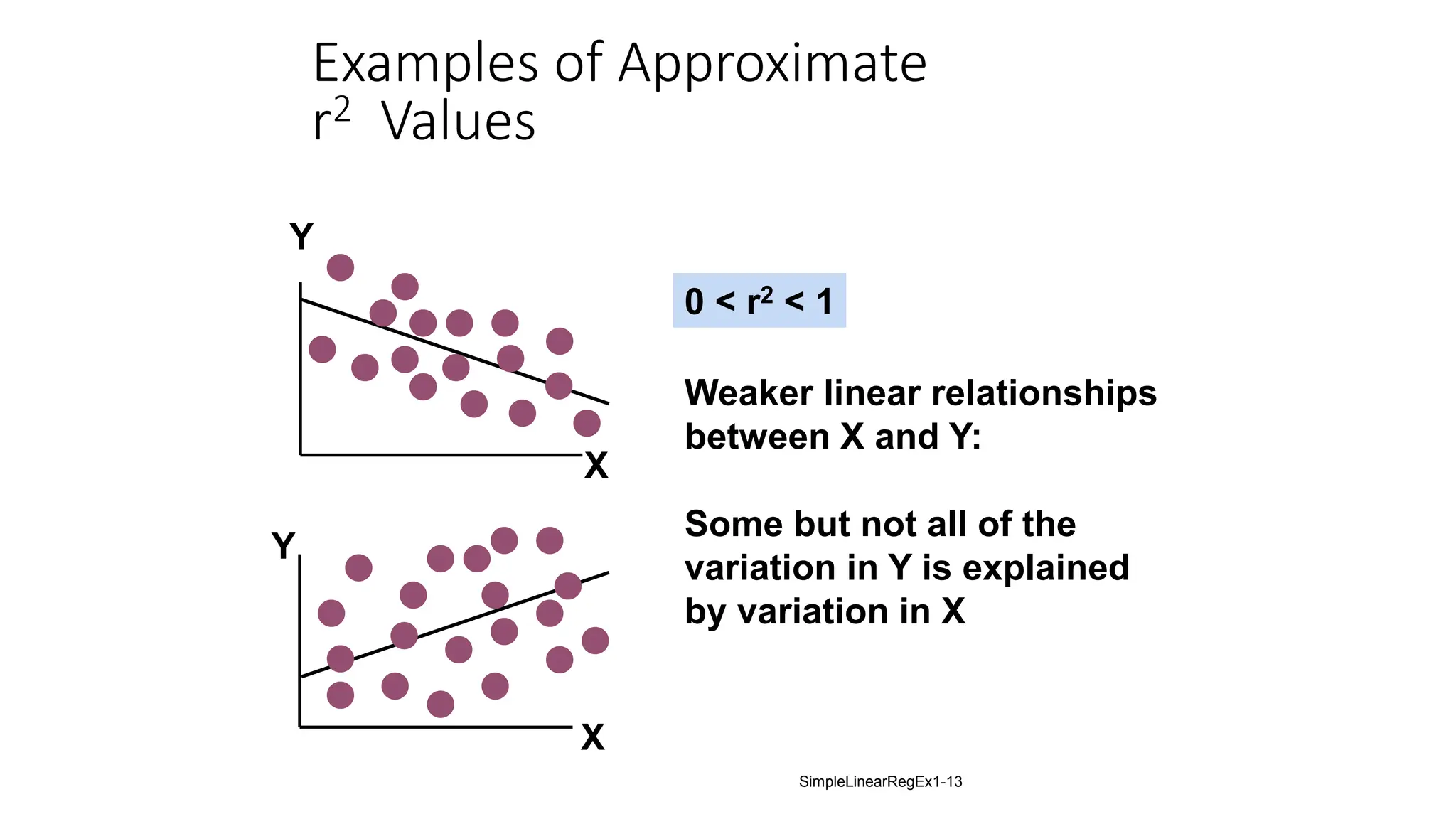

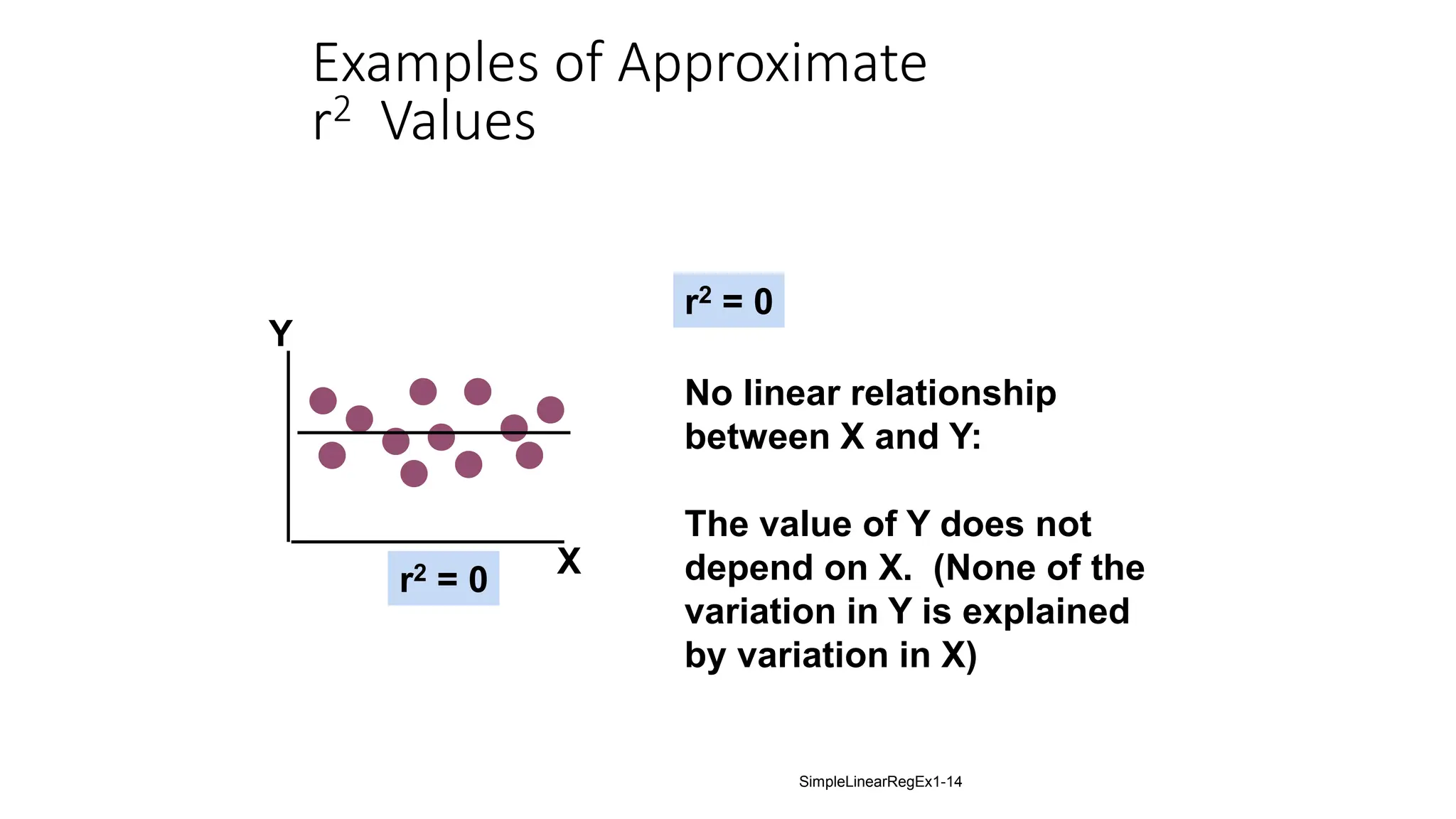

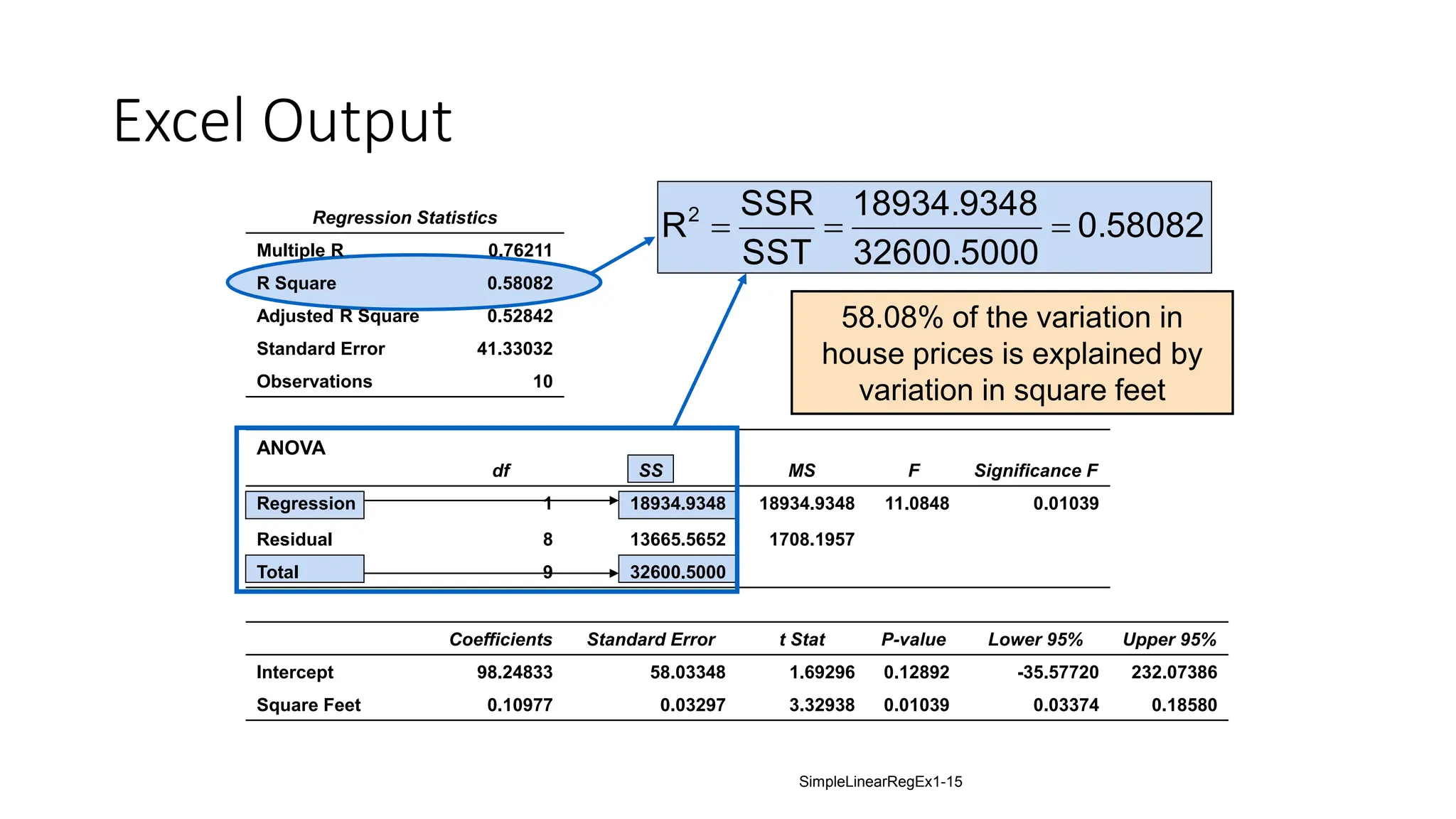

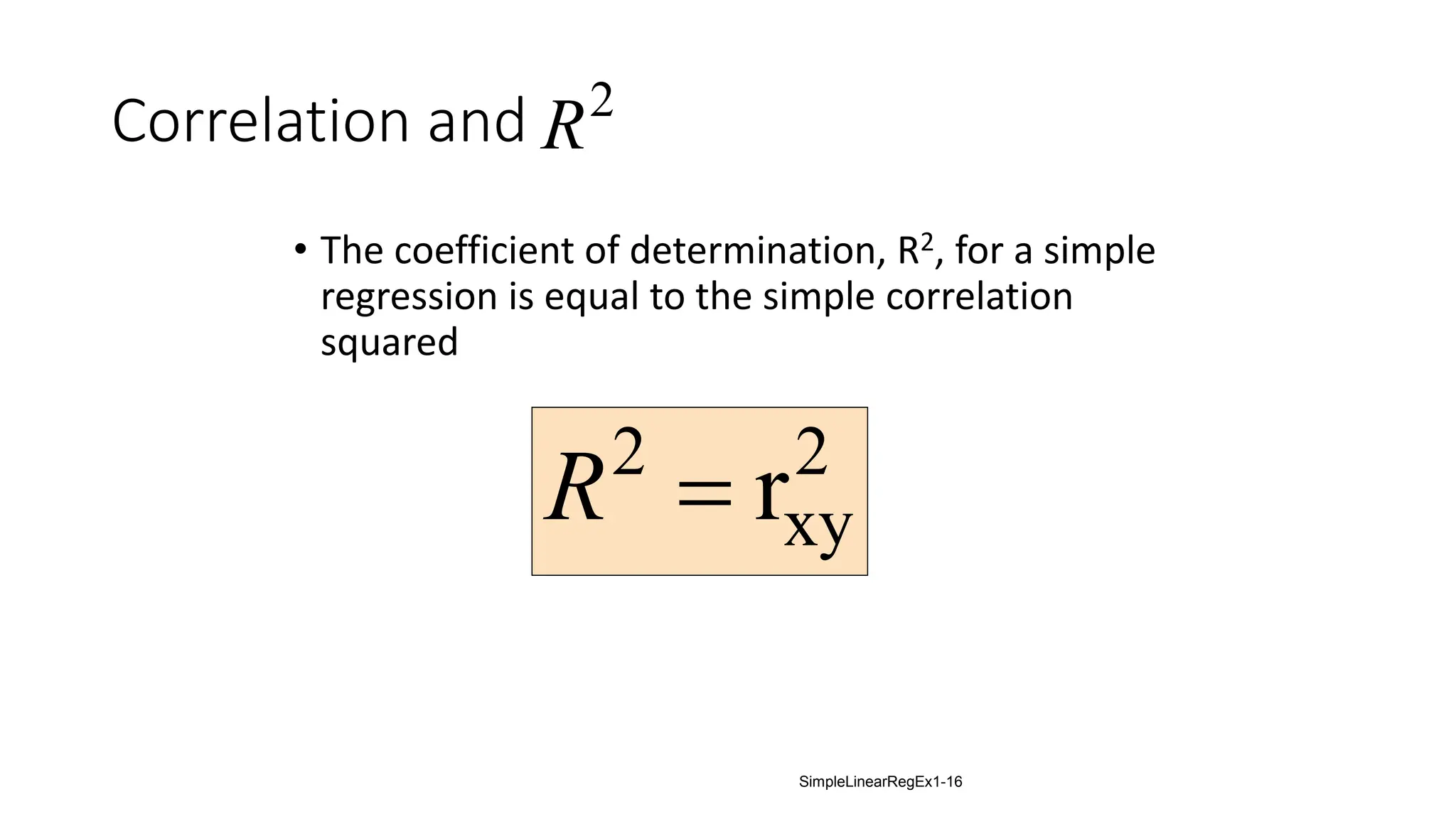

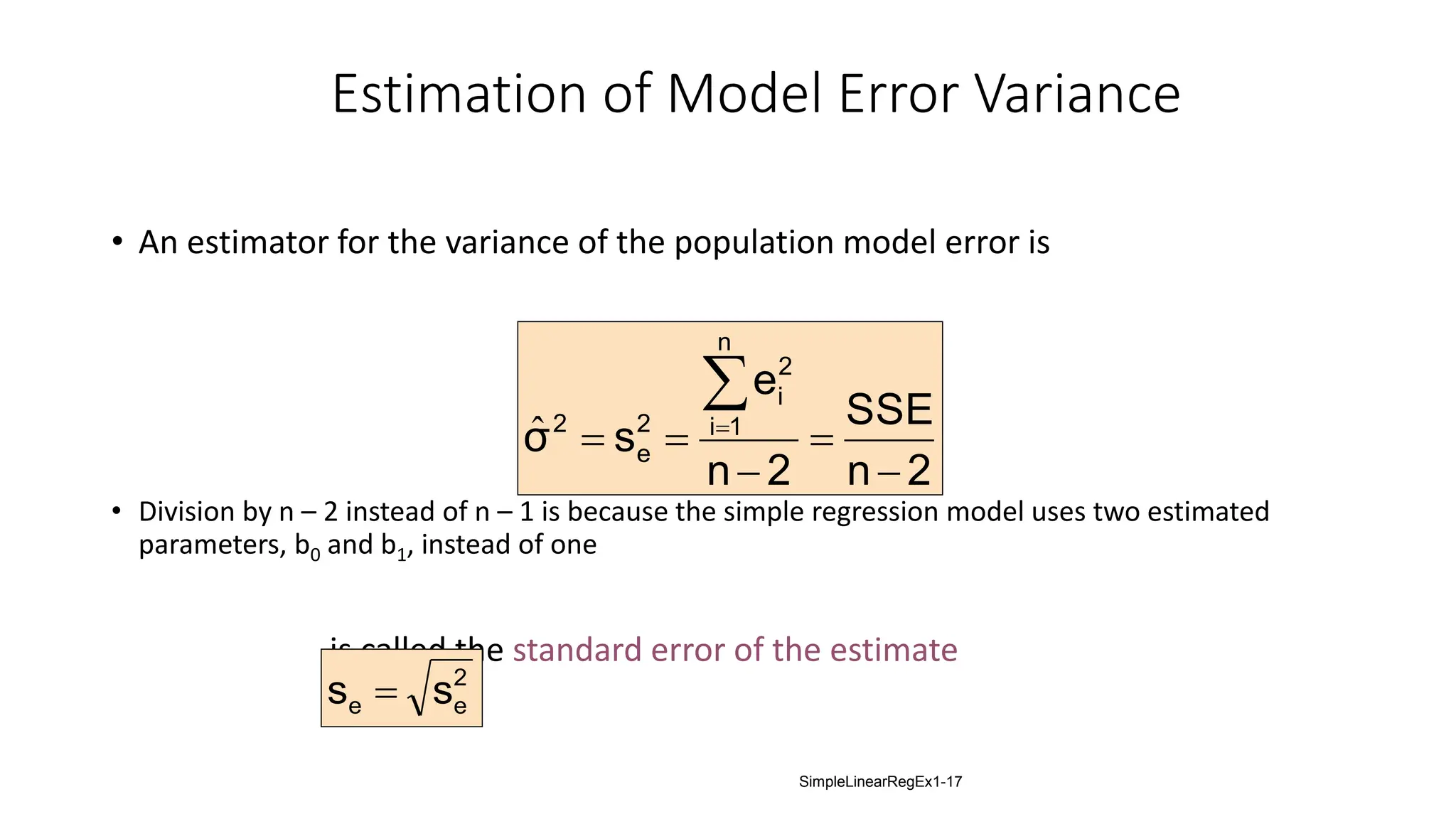

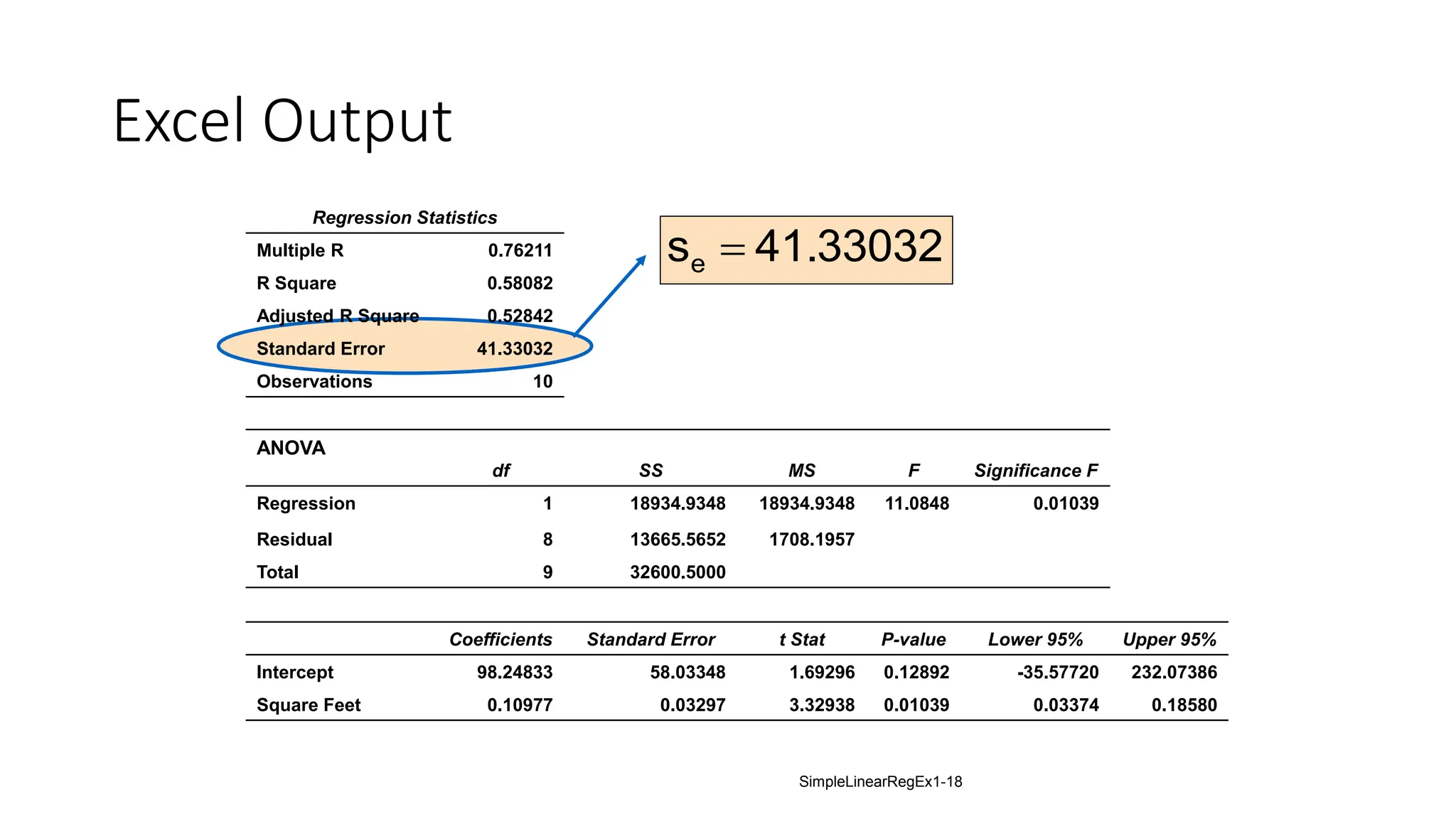

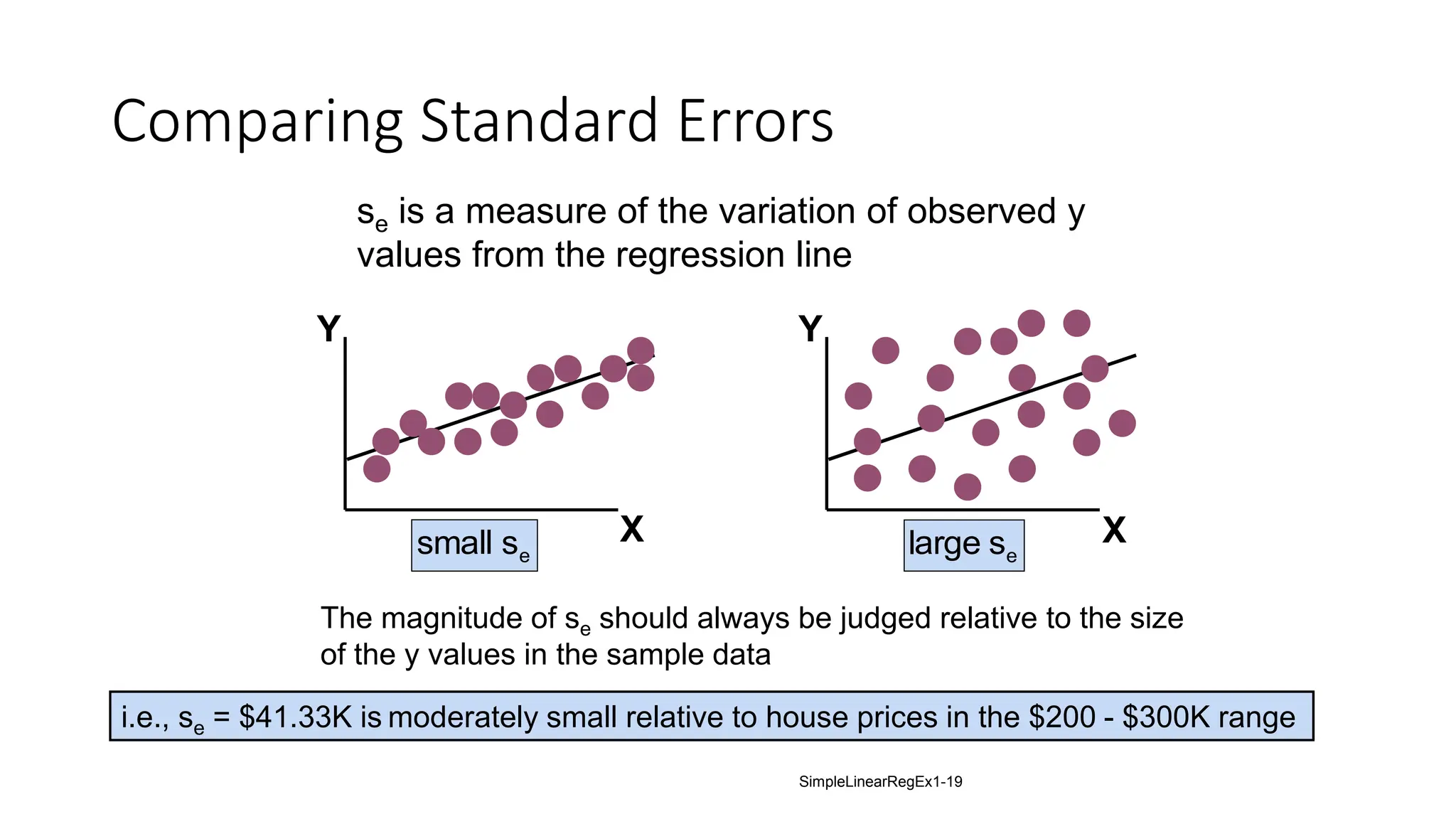

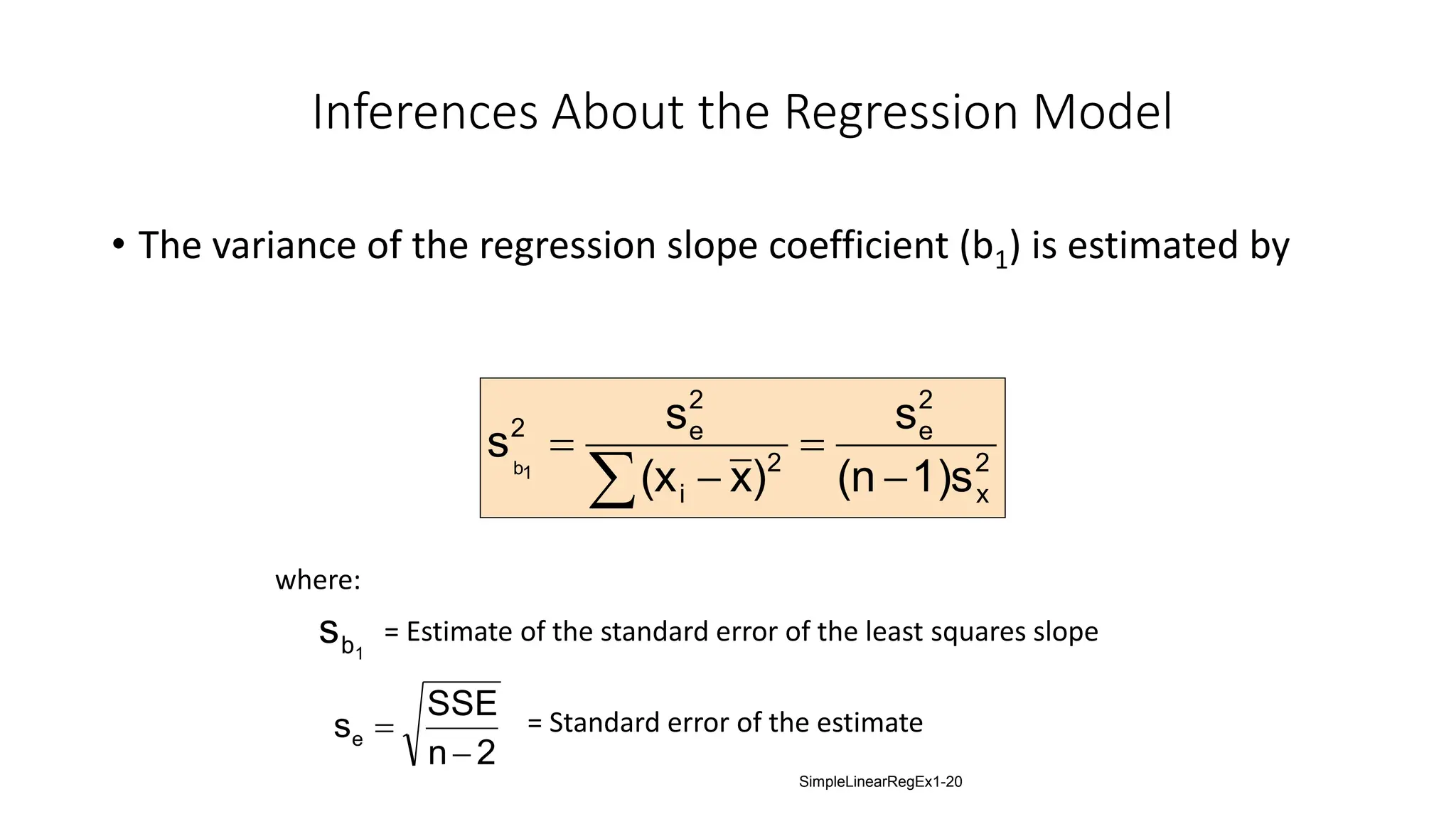

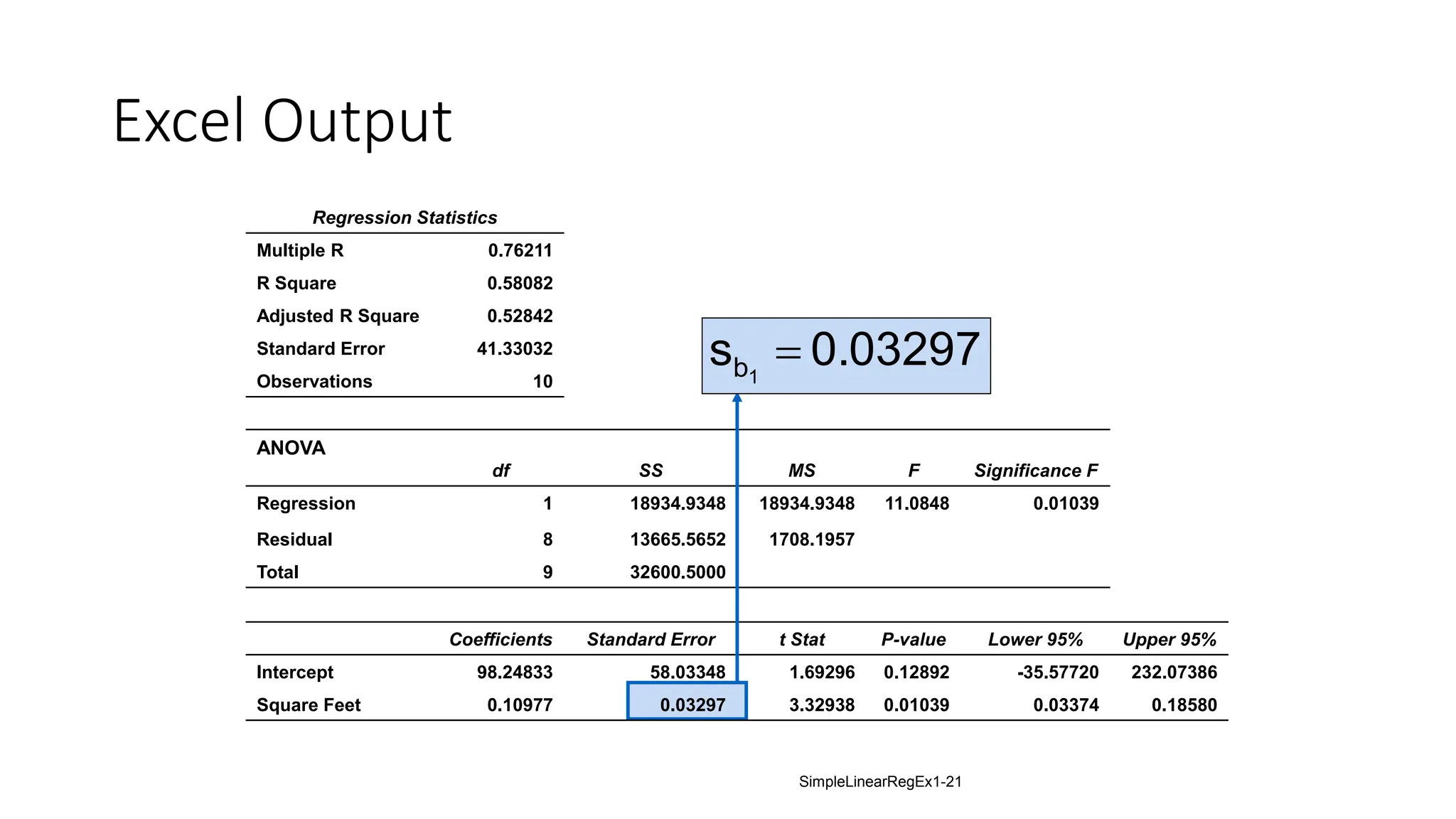

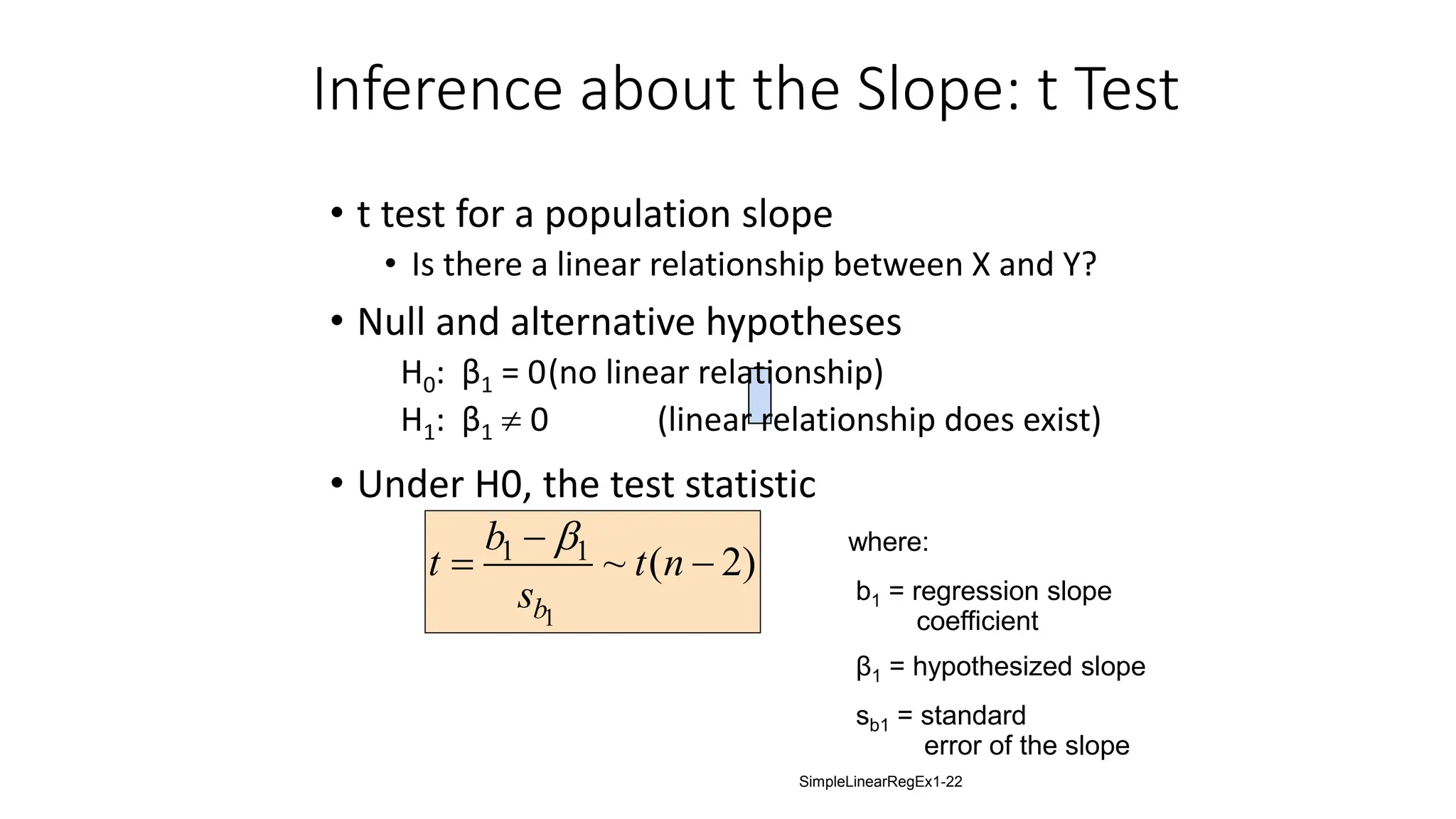

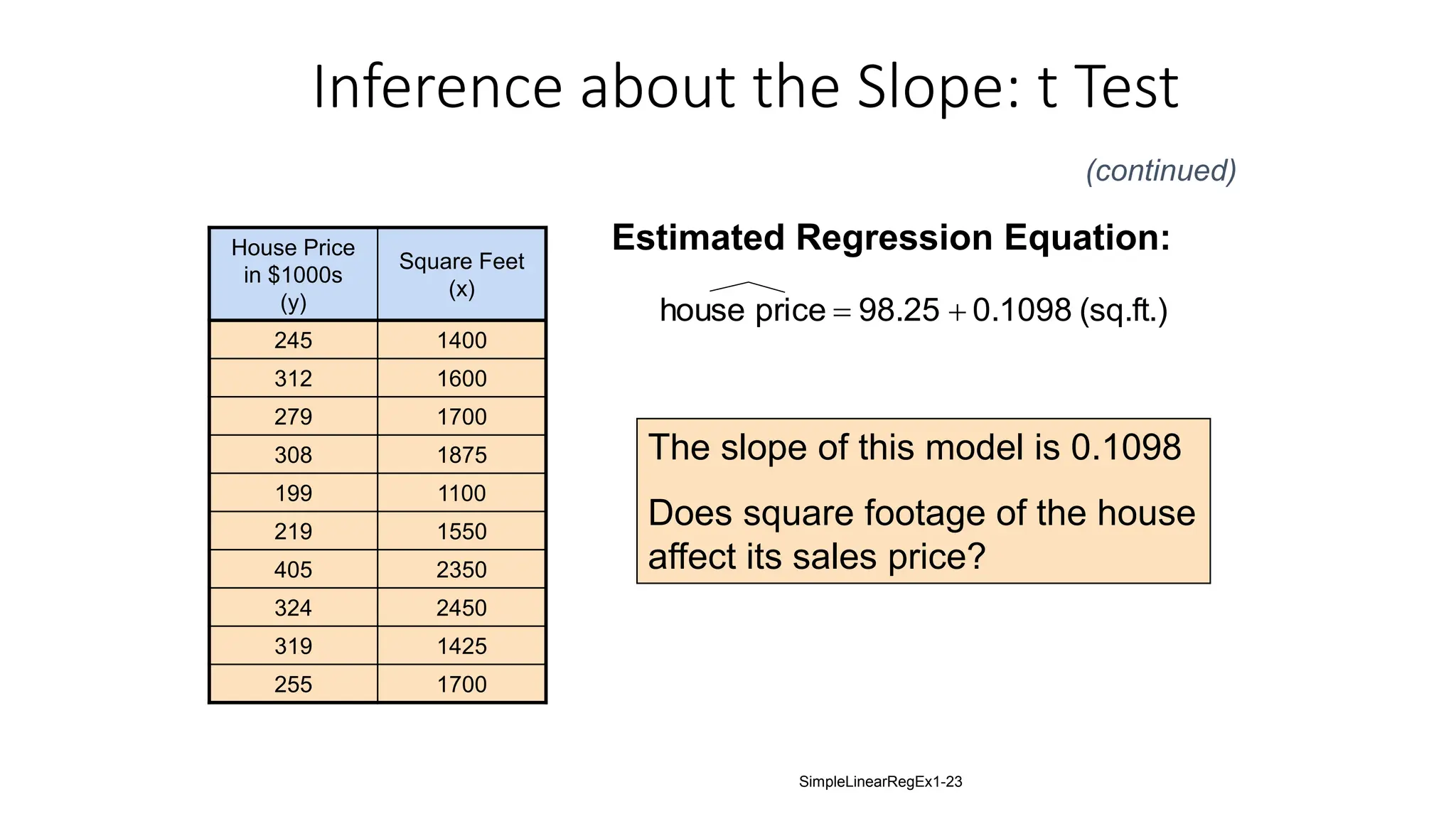

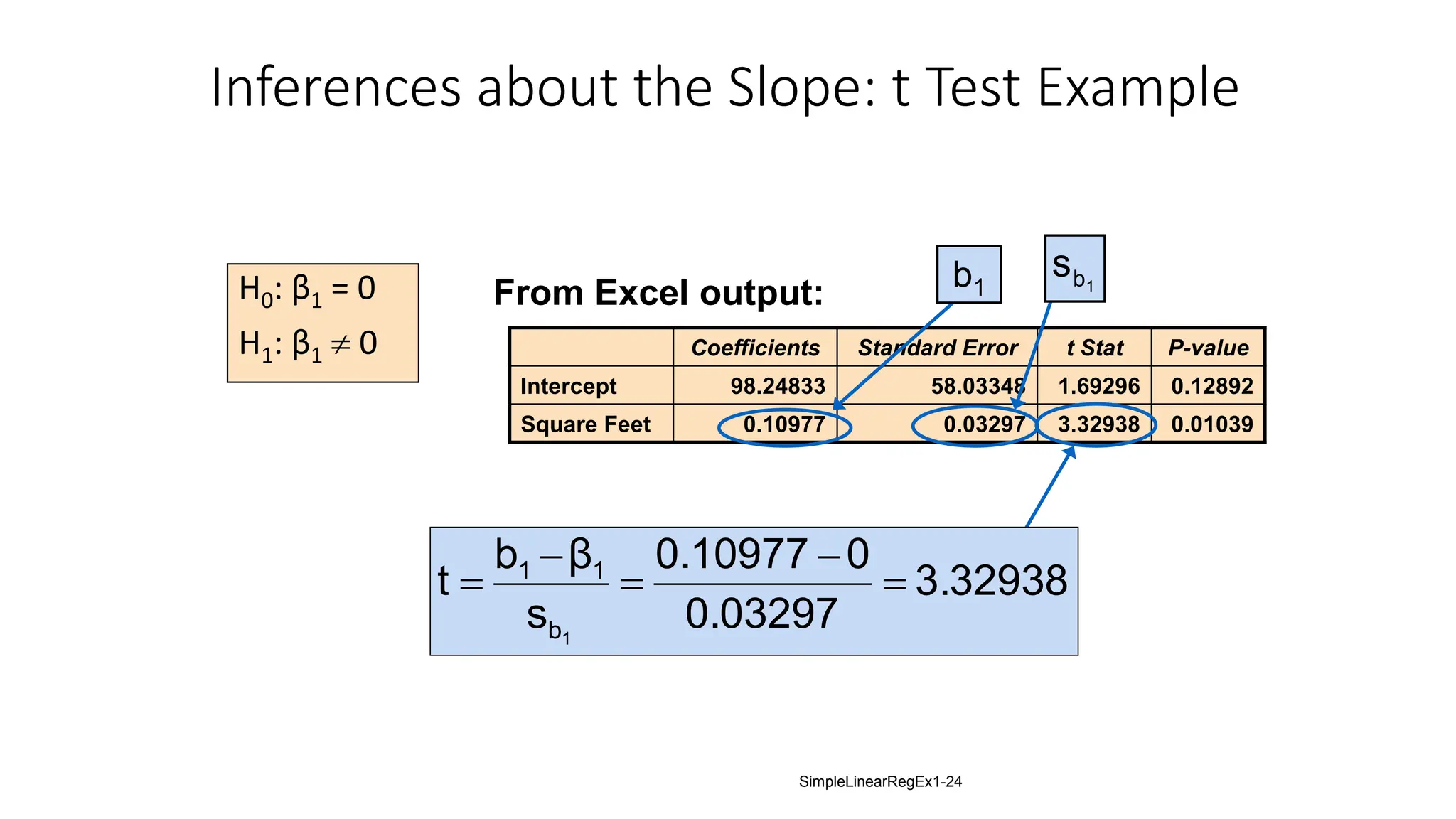

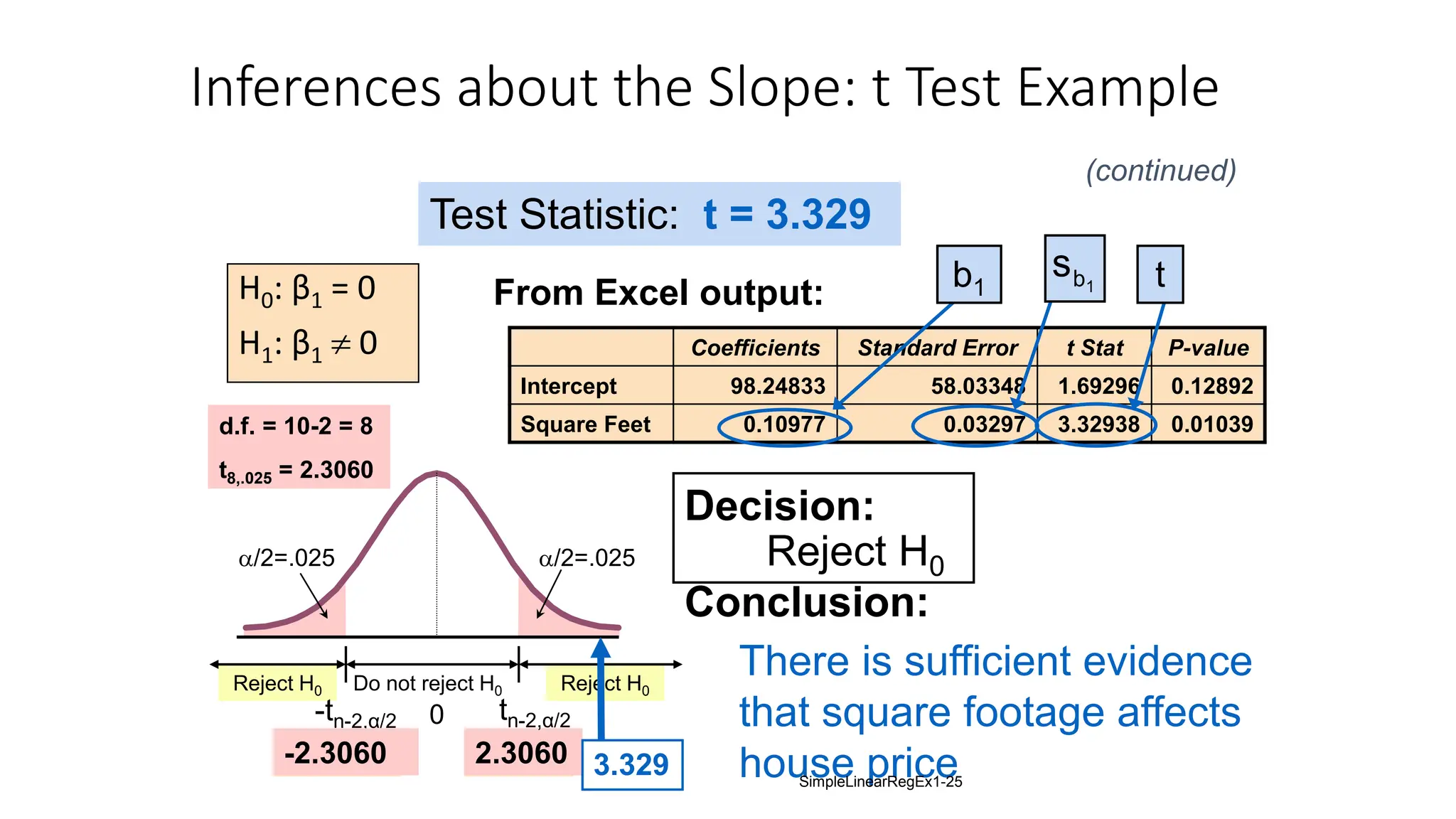

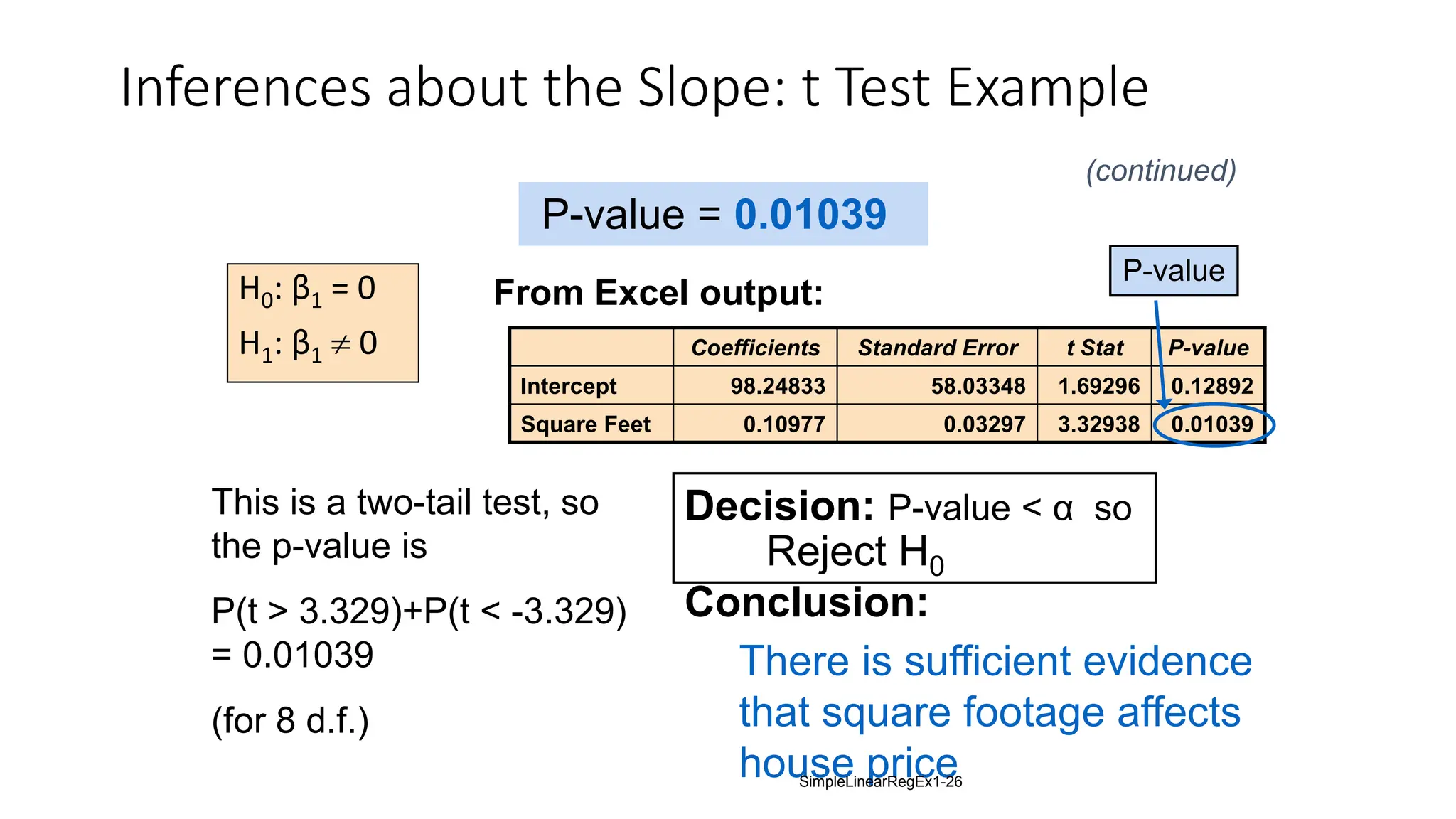

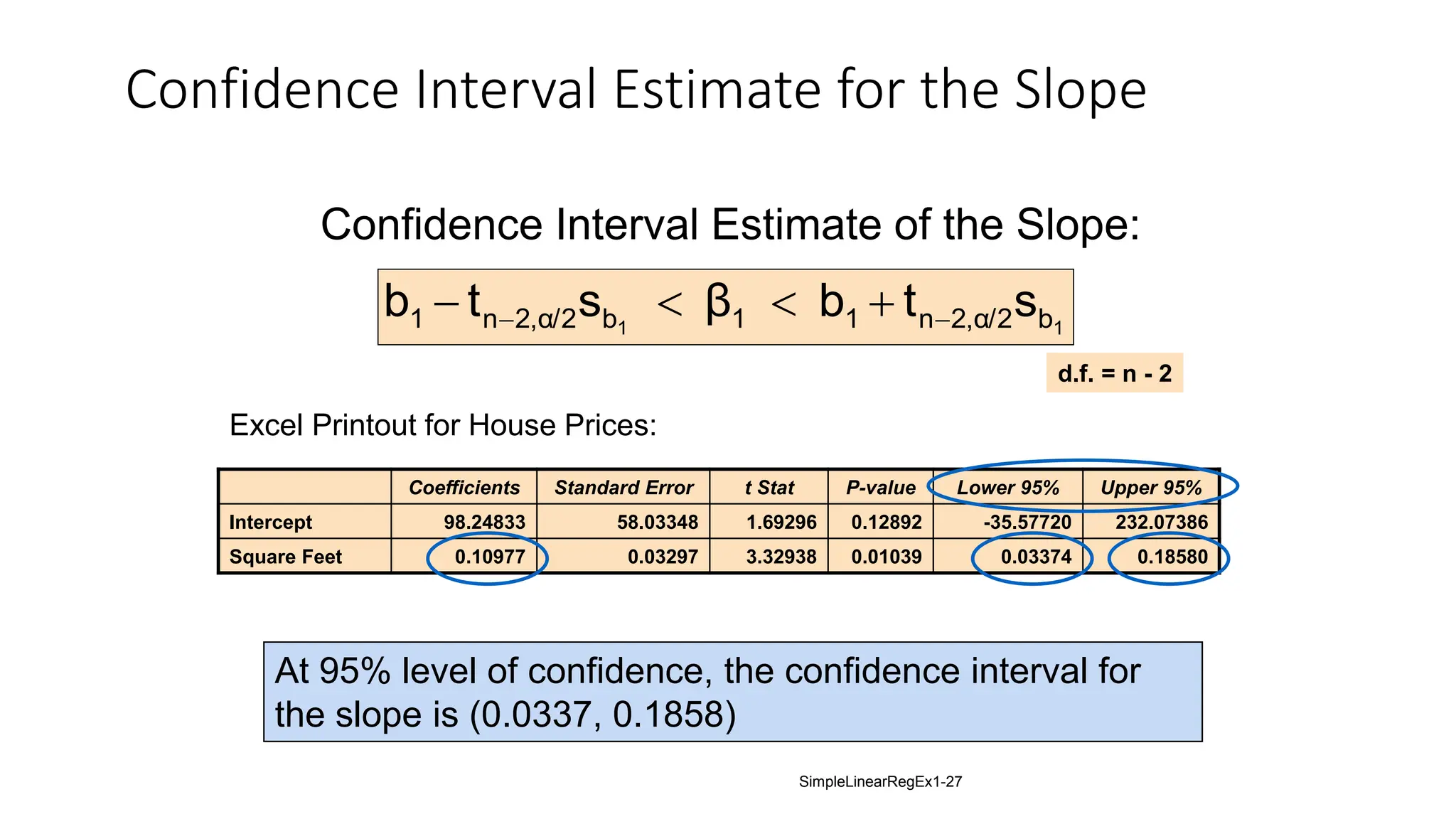

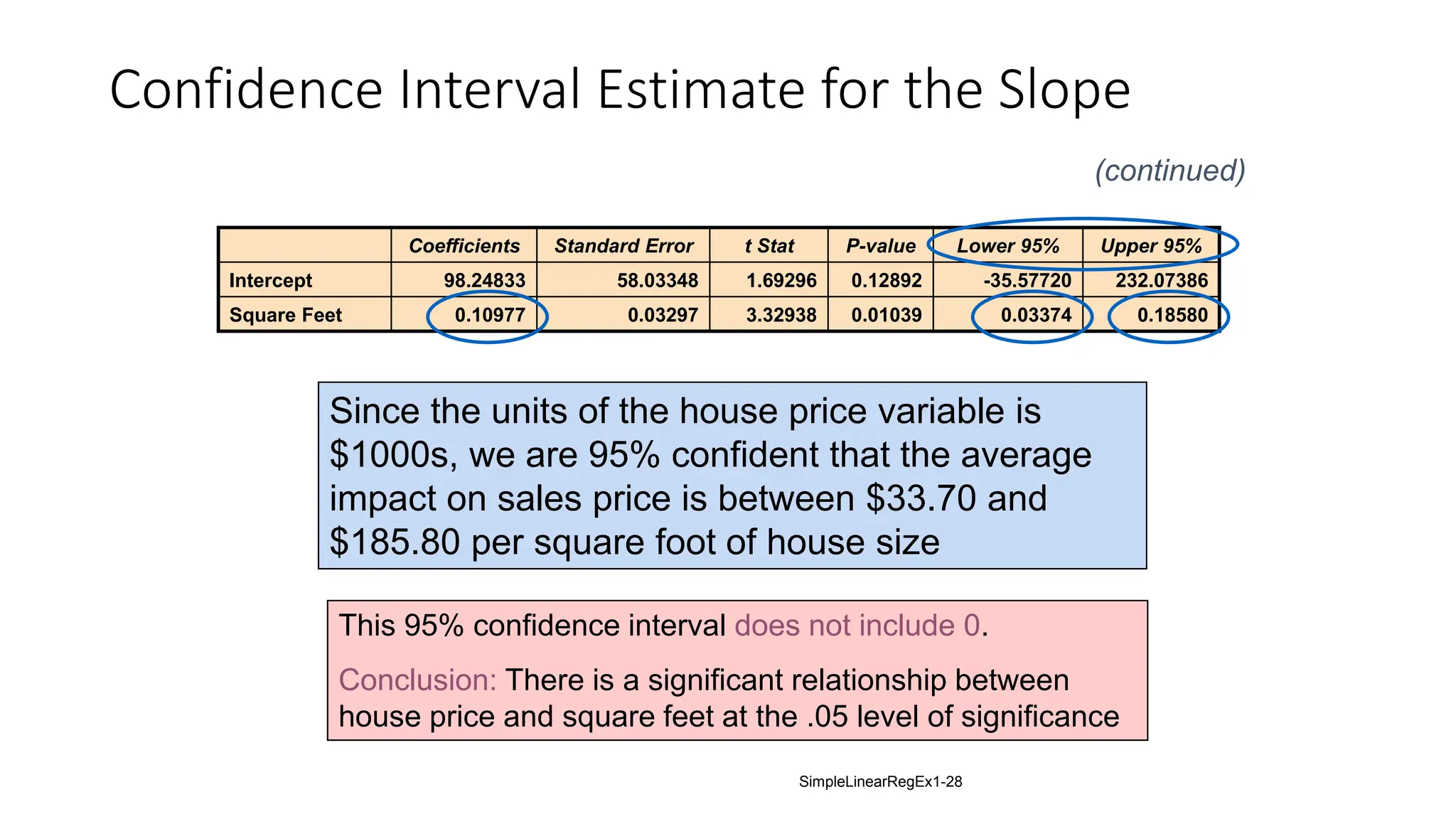

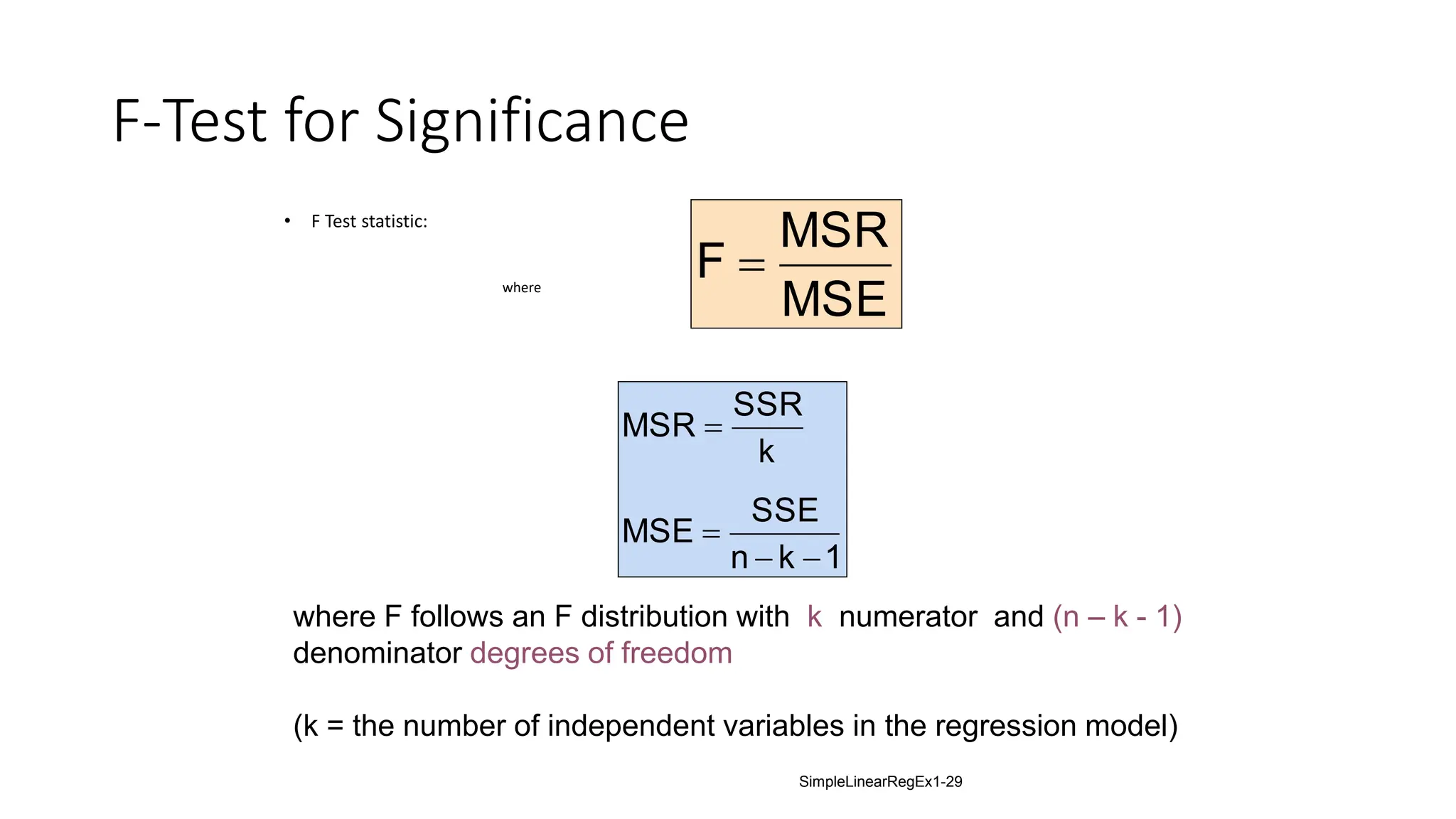

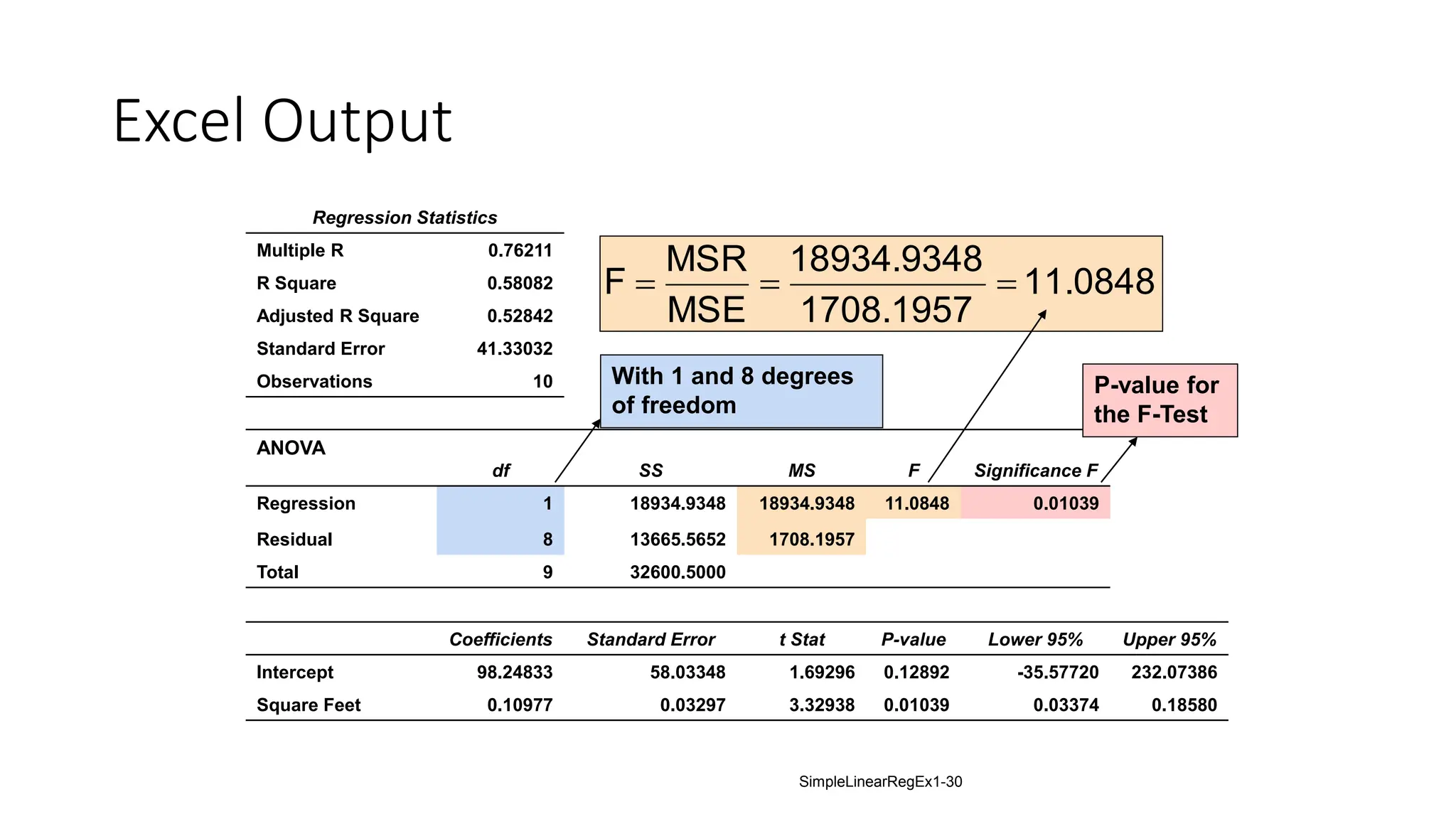

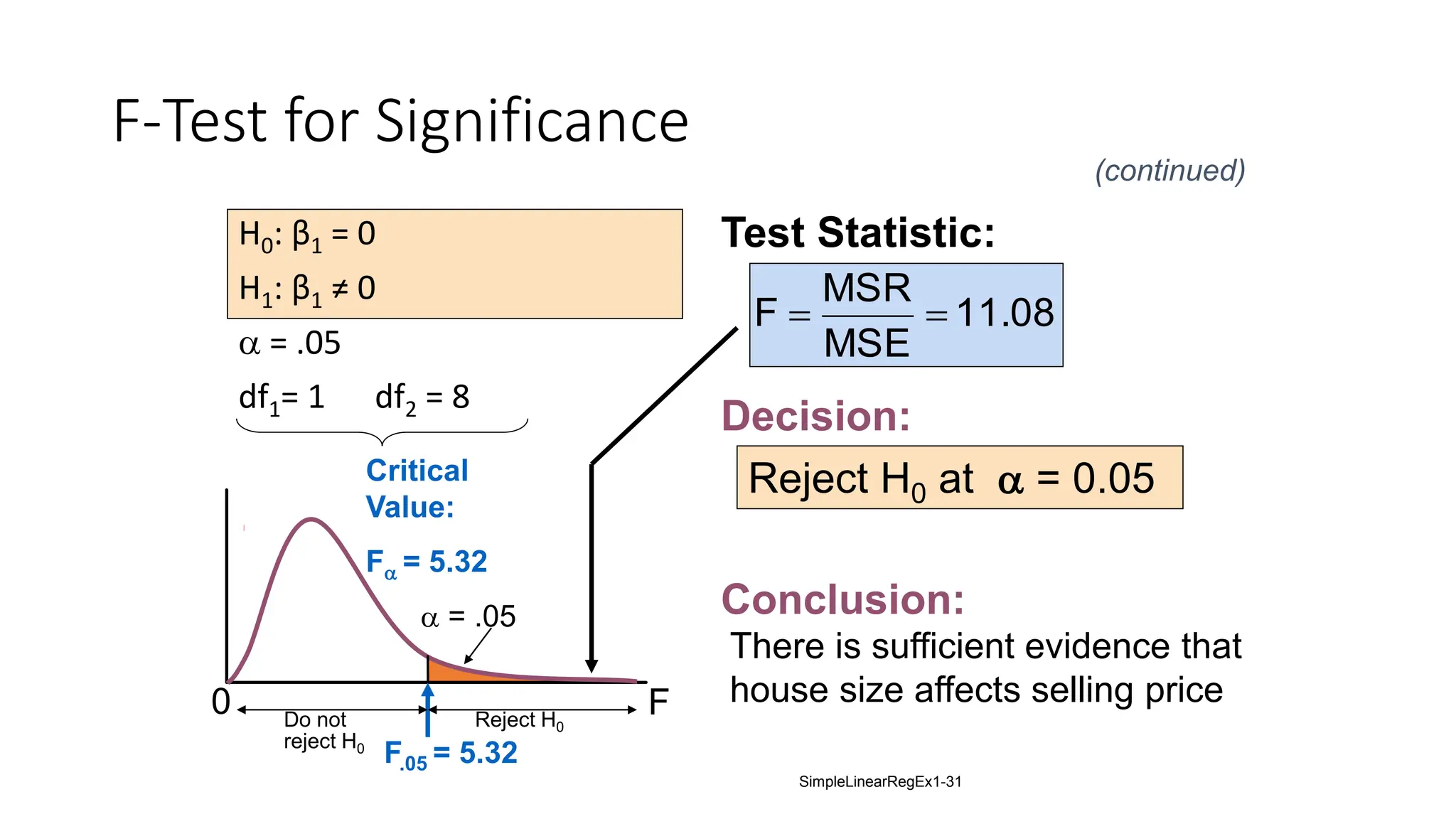

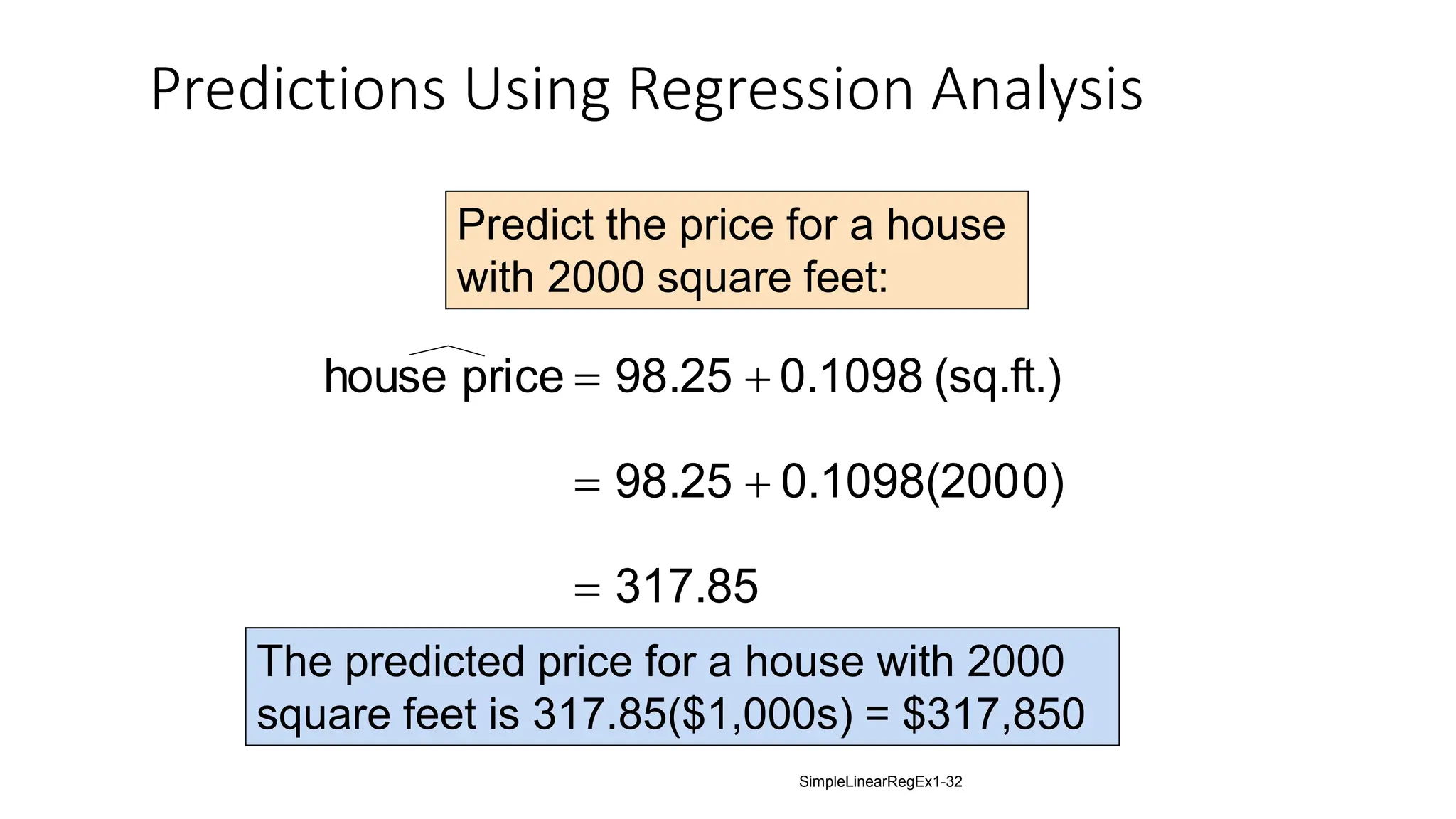

The document analyzes the relationship between house prices and house sizes using simple linear regression on a dataset of prices and square footage. It walks through the regression output from Excel and explains statistics such as the coefficient of determination (r-squared), which shows that 58.08% of the variation in house prices is explained by variation in house size. A hypothesis test then confirms that square footage has a statistically significant effect on house price.
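To make the statistics named above concrete, here is a minimal Python sketch of a simple linear regression that computes the slope, intercept, r-squared, and the t statistic for the slope. The function name `simple_linear_regression` and the sample numbers are assumptions chosen for illustration. They are not the dataset from the slides, so the printed values will not match the 58.08% figure.

```python
from math import sqrt


def simple_linear_regression(x, y):
    """Fit y = intercept + slope * x by ordinary least squares.

    Hypothetical helper for illustration; returns a dict of summary statistics.
    """
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("x and y must have equal length of at least 3")

    mean_x = sum(x) / n
    mean_y = sum(y) / n

    # Sums of squares and cross-products around the means.
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sst = sum((yi - mean_y) ** 2 for yi in y)  # total variation in y
    if sxx == 0 or sst == 0:
        raise ValueError("x and y must each contain some variation")

    slope = sxy / sxx
    intercept = mean_y - slope * mean_x

    # Unexplained variation: squared residuals around the fitted line.
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))

    # Share of the variation in y explained by x.
    r_squared = 1 - sse / sst

    # Standard error of the slope and the t statistic for H0: slope = 0,
    # to be compared against a t distribution with n - 2 degrees of freedom.
    std_err_slope = sqrt(sse / (n - 2) / sxx)
    t_stat = slope / std_err_slope if std_err_slope > 0 else float("inf")

    return {
        "slope": slope,
        "intercept": intercept,
        "r_squared": r_squared,
        "std_err_slope": std_err_slope,
        "t_stat": t_stat,
        "df": n - 2,
    }


# Made-up example data (NOT the dataset from the slides):
# house size in square feet and price in thousands of dollars.
sizes = [1100, 1400, 1600, 1900, 2300]
prices = [200, 240, 300, 290, 390]

result = simple_linear_regression(sizes, prices)
for name, value in result.items():
    print(f"{name}: {value:.4f}")
```

An r-squared of 0.5808 from such a fit is read the same way as in the summary: about 58% of the variation in price is accounted for by size. A slope t statistic that is large relative to the critical value for n - 2 degrees of freedom is what supports calling the effect of square footage statistically significant.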