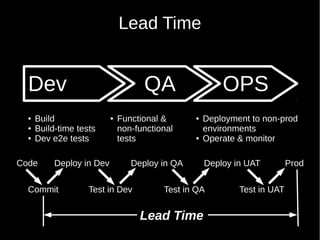

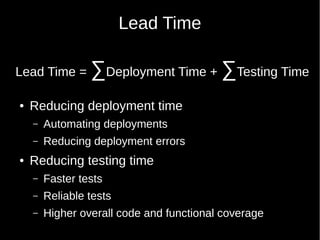

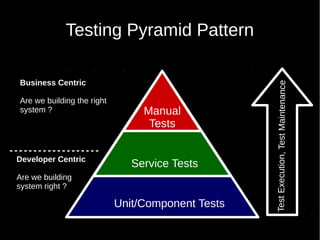

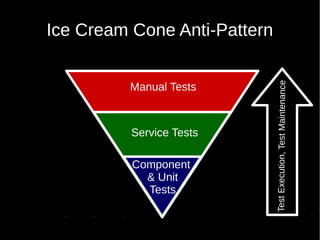

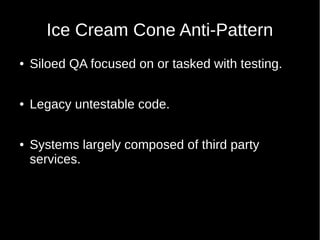

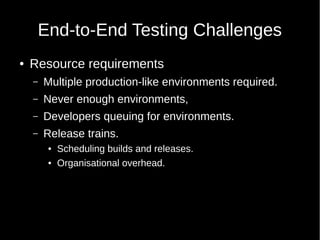

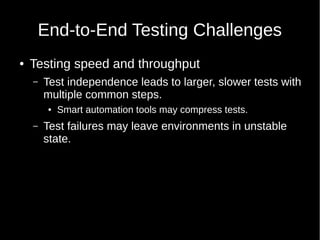

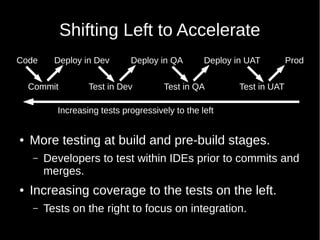

The document discusses strategies for making deployment and testing more efficient in a development environment, focusing on automating deployments and improving test reliability. It highlights the challenges of end-to-end testing and argues for shifting testing earlier in the development process to increase confidence in code quality and coverage. It also emphasizes aligning tests with acceptance criteria so that issues are detected early, and incrementally refactoring legacy code to make it easier to test.
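
As a rough illustration of the last two points, the sketch below shows one small refactoring step that makes a legacy function testable, followed by unit tests named after acceptance criteria. Everything in it is hypothetical and not taken from the document: the discount rule, the function names, and the acceptance criteria are assumptions chosen only to keep the example short.

```python
from datetime import date


# Hypothetical legacy function: it reads the system clock directly,
# so its result depends on the day the test happens to run.
def is_discount_active_legacy():
    return date.today().month == 12


# One incremental refactoring step: the date becomes an optional parameter.
# Existing callers keep working, while tests can pass a fixed date.
def is_discount_active(today=None):
    if today is None:
        today = date.today()
    return today.month == 12


# Tests named after (assumed) acceptance criteria, so a failure points
# straight at the requirement that is no longer met.
def test_discount_applies_in_december():
    assert is_discount_active(date(2024, 12, 15)) is True


def test_discount_does_not_apply_outside_december():
    assert is_discount_active(date(2024, 11, 30)) is False
```

Tests of this kind run in milliseconds without a deployed environment, which is what lets the check move earlier than an end-to-end test covering the same rule.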