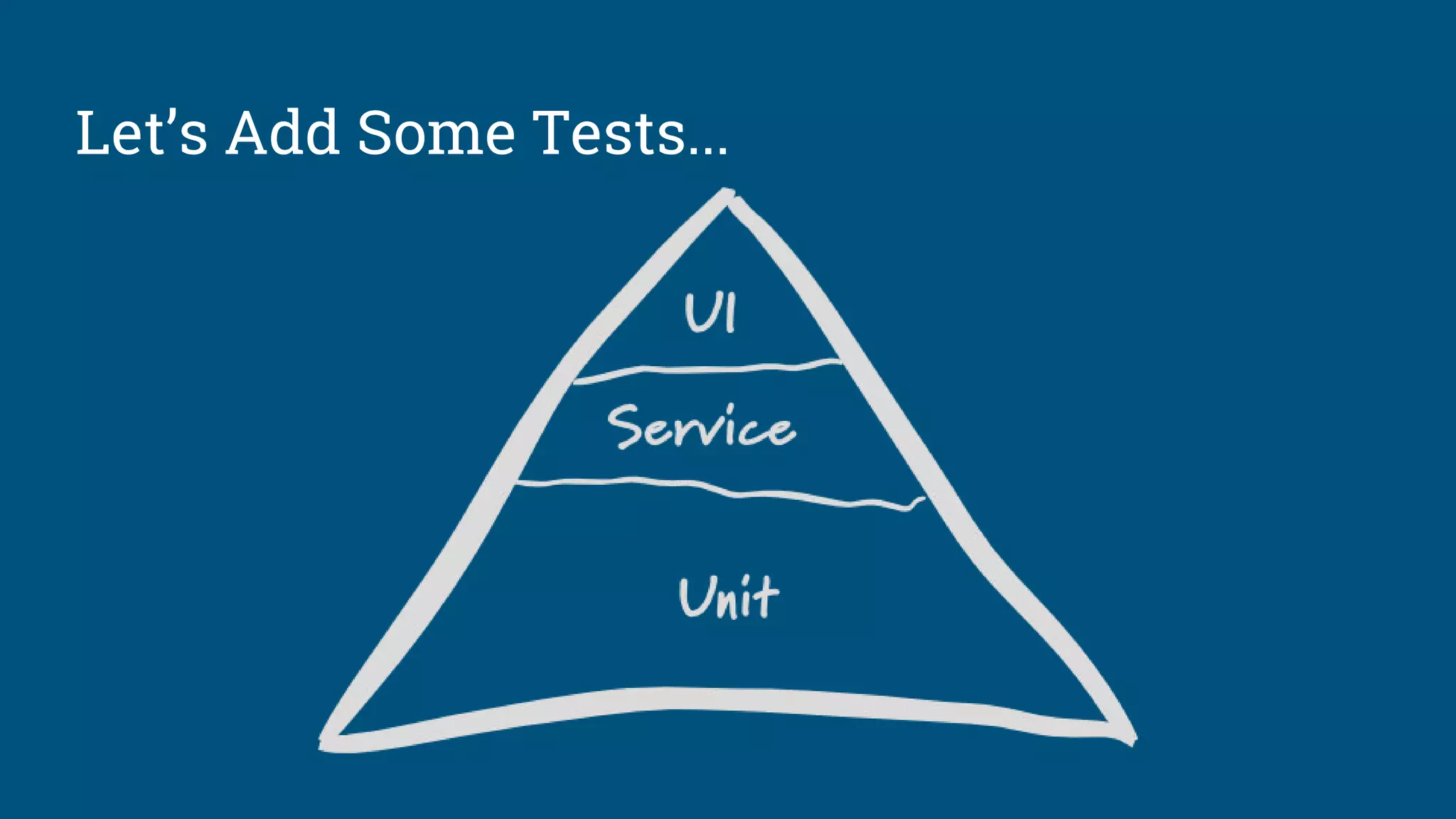

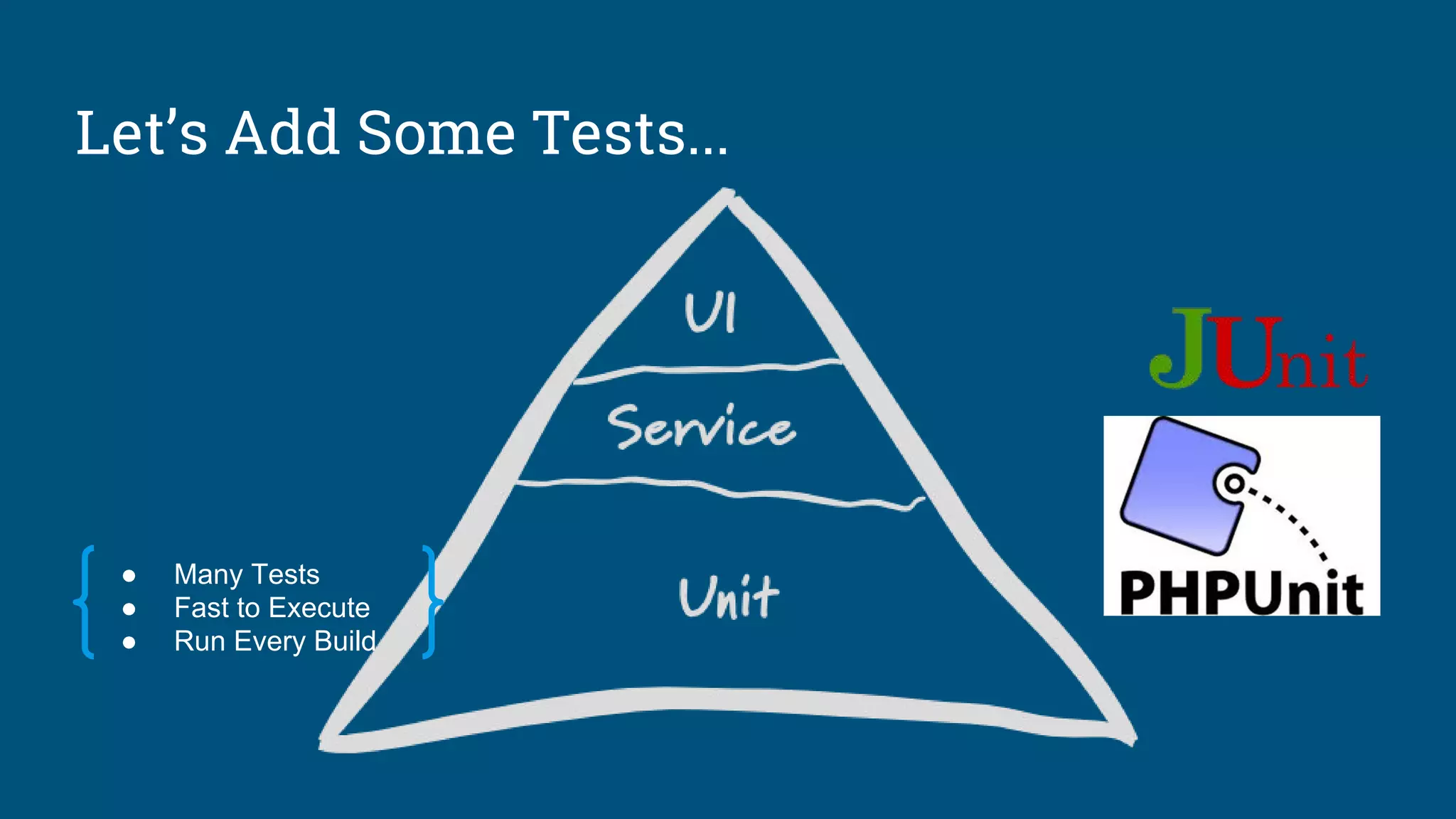

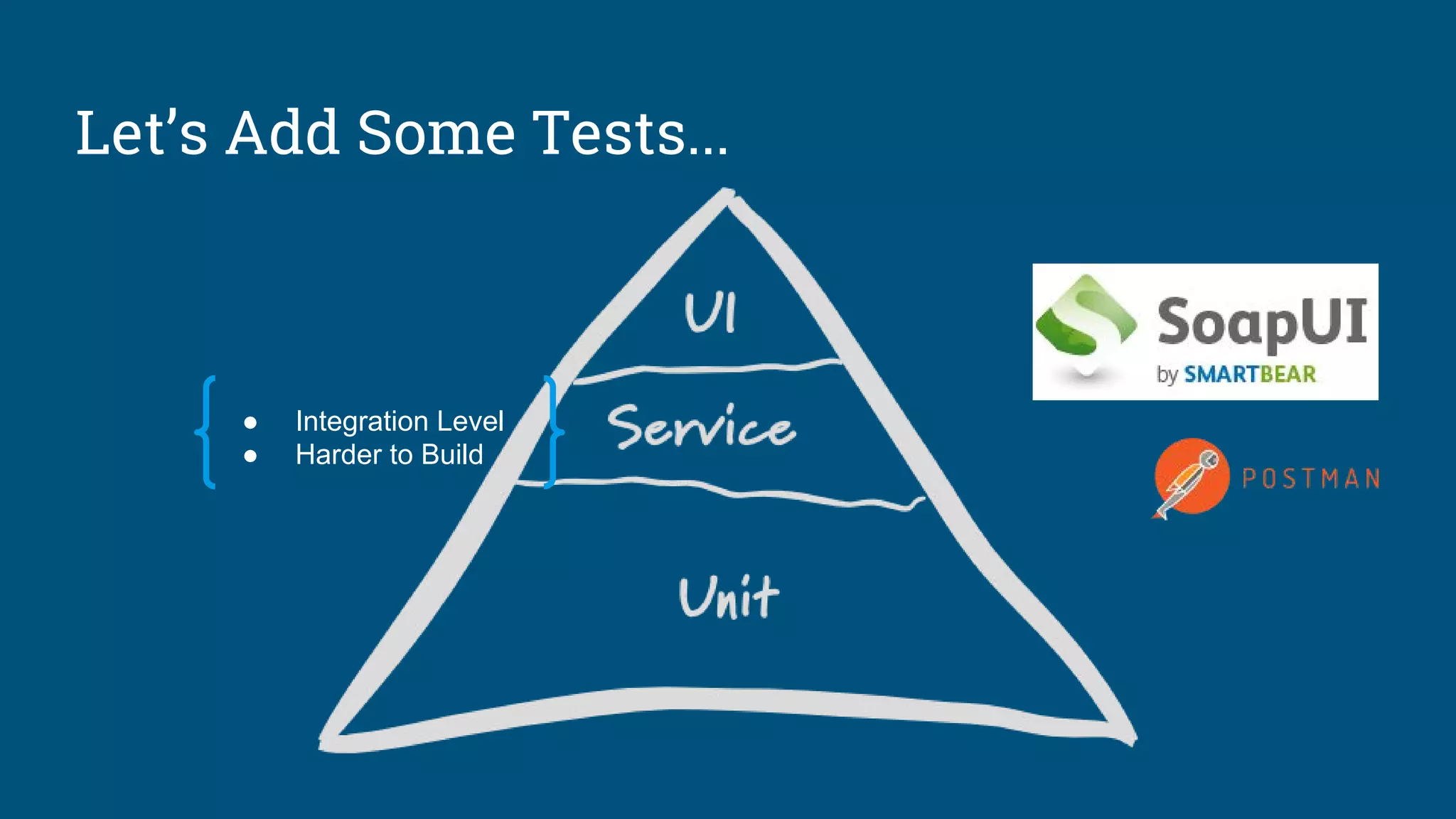

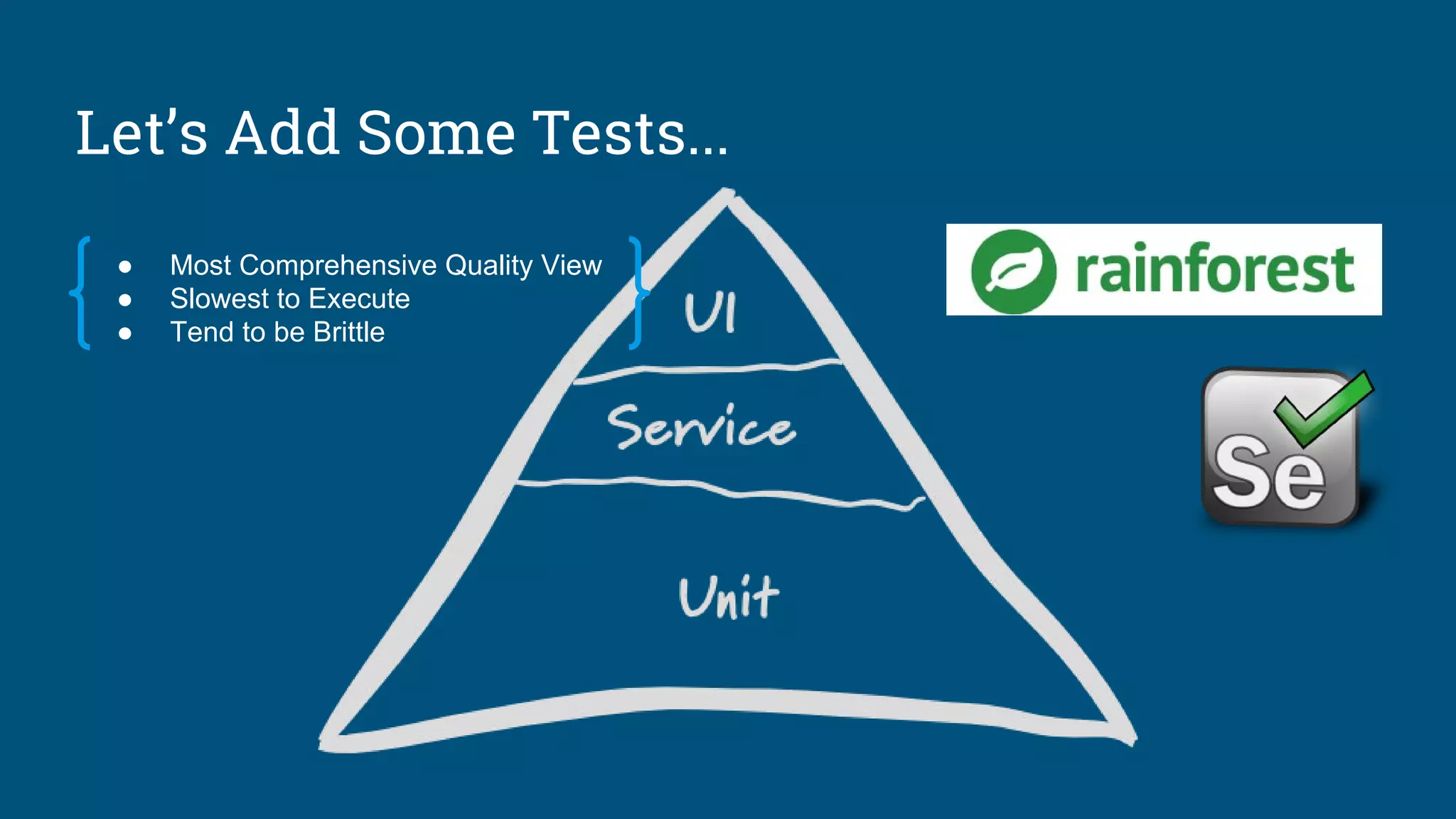

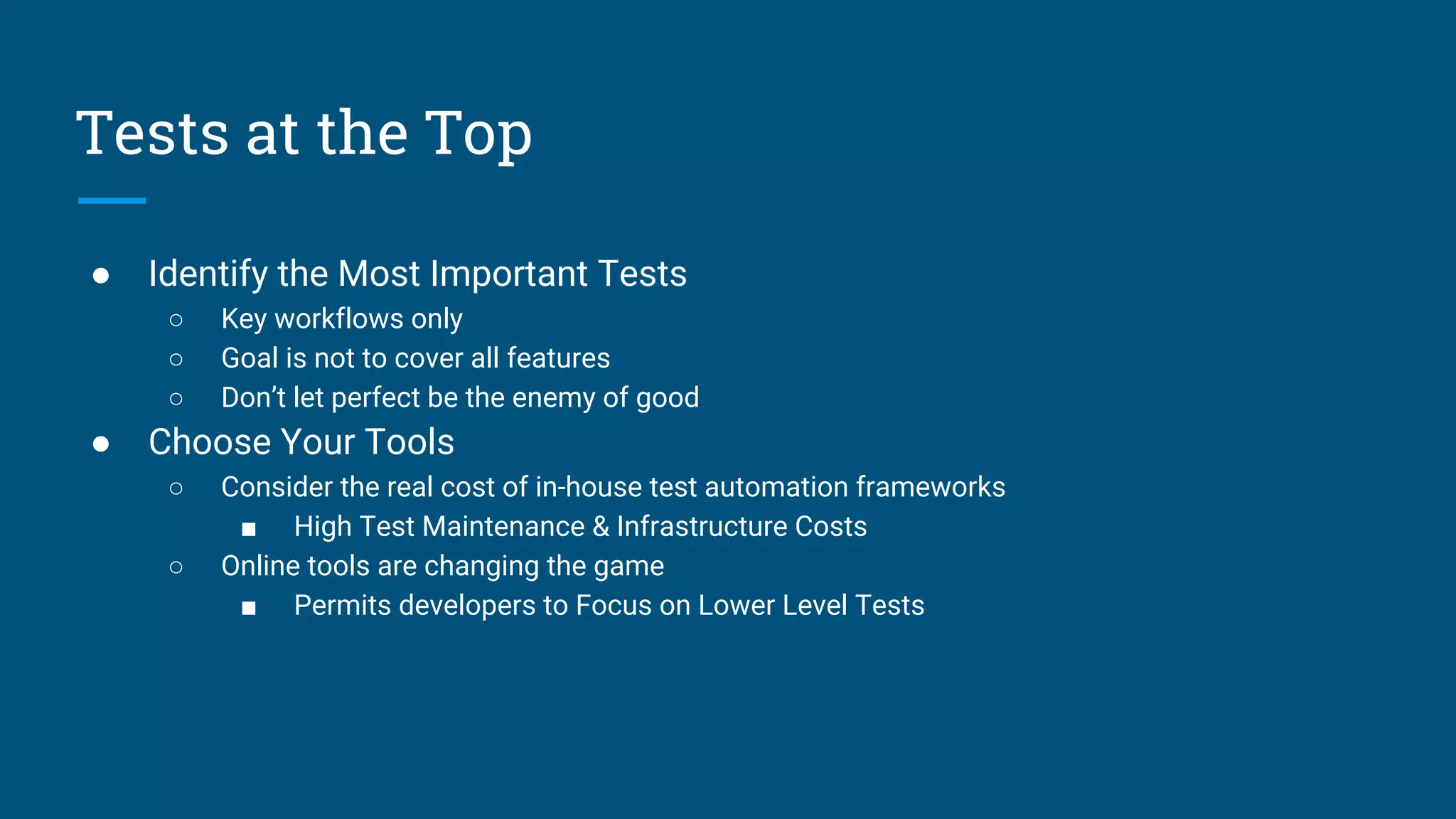

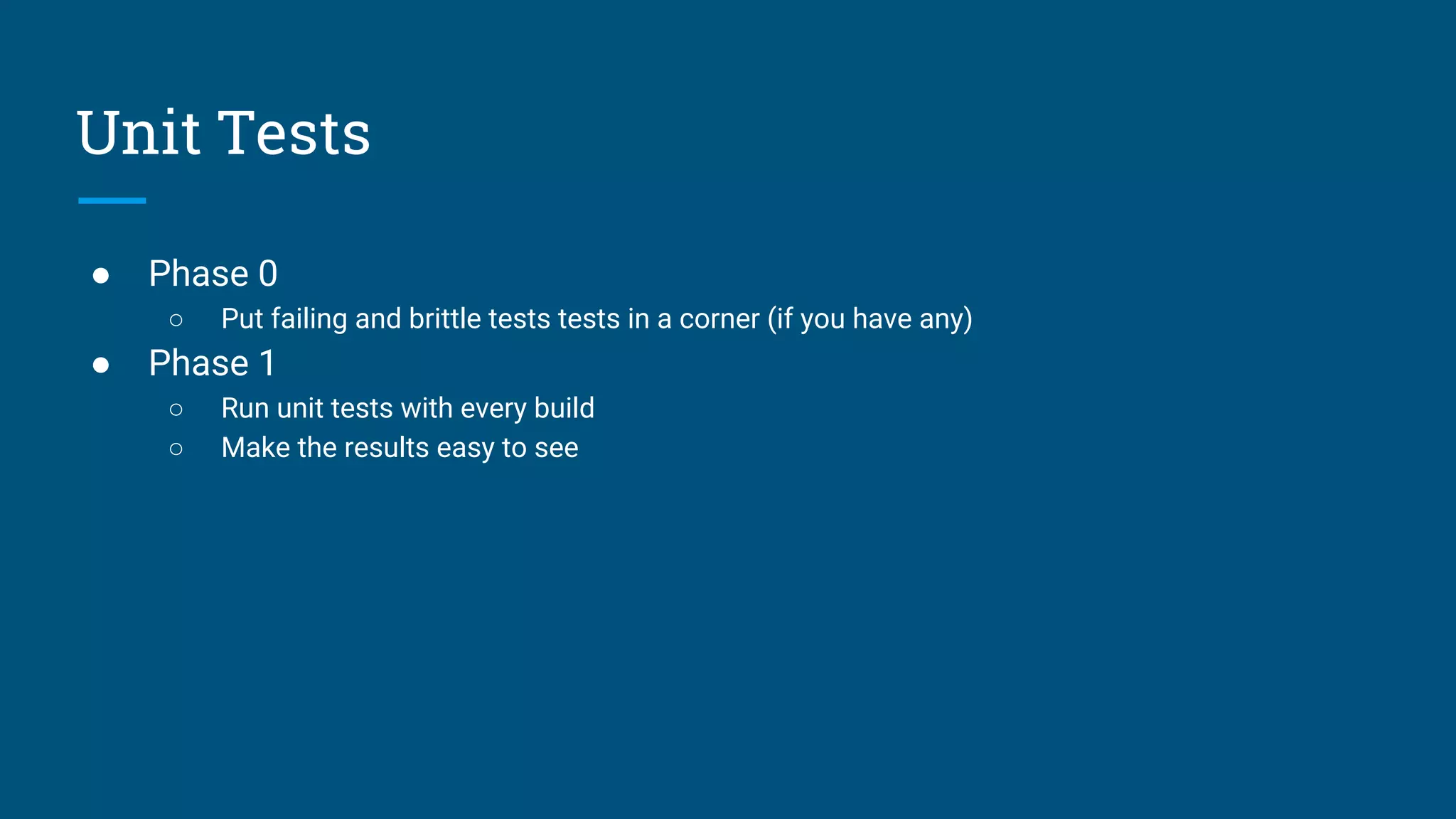

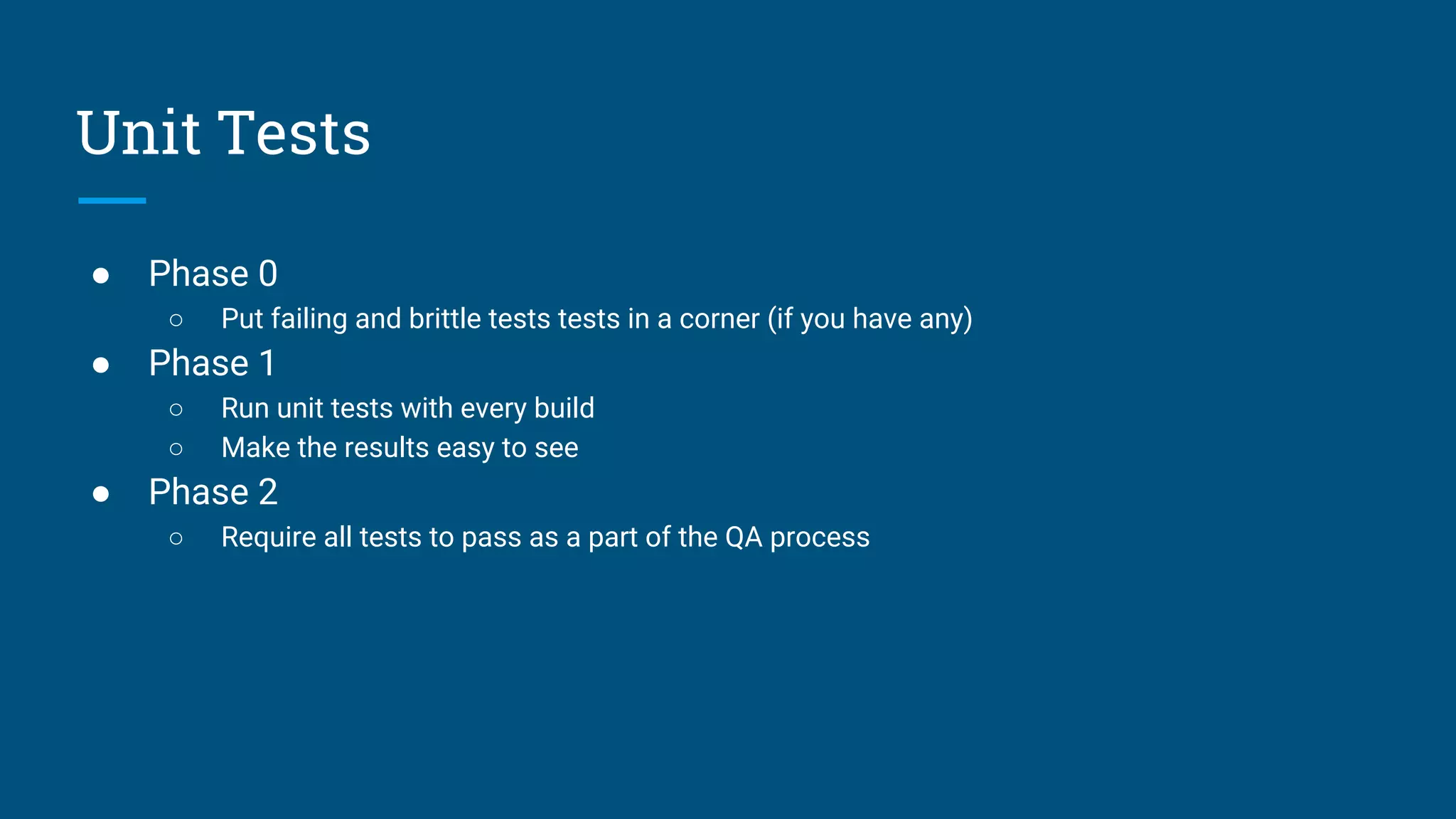

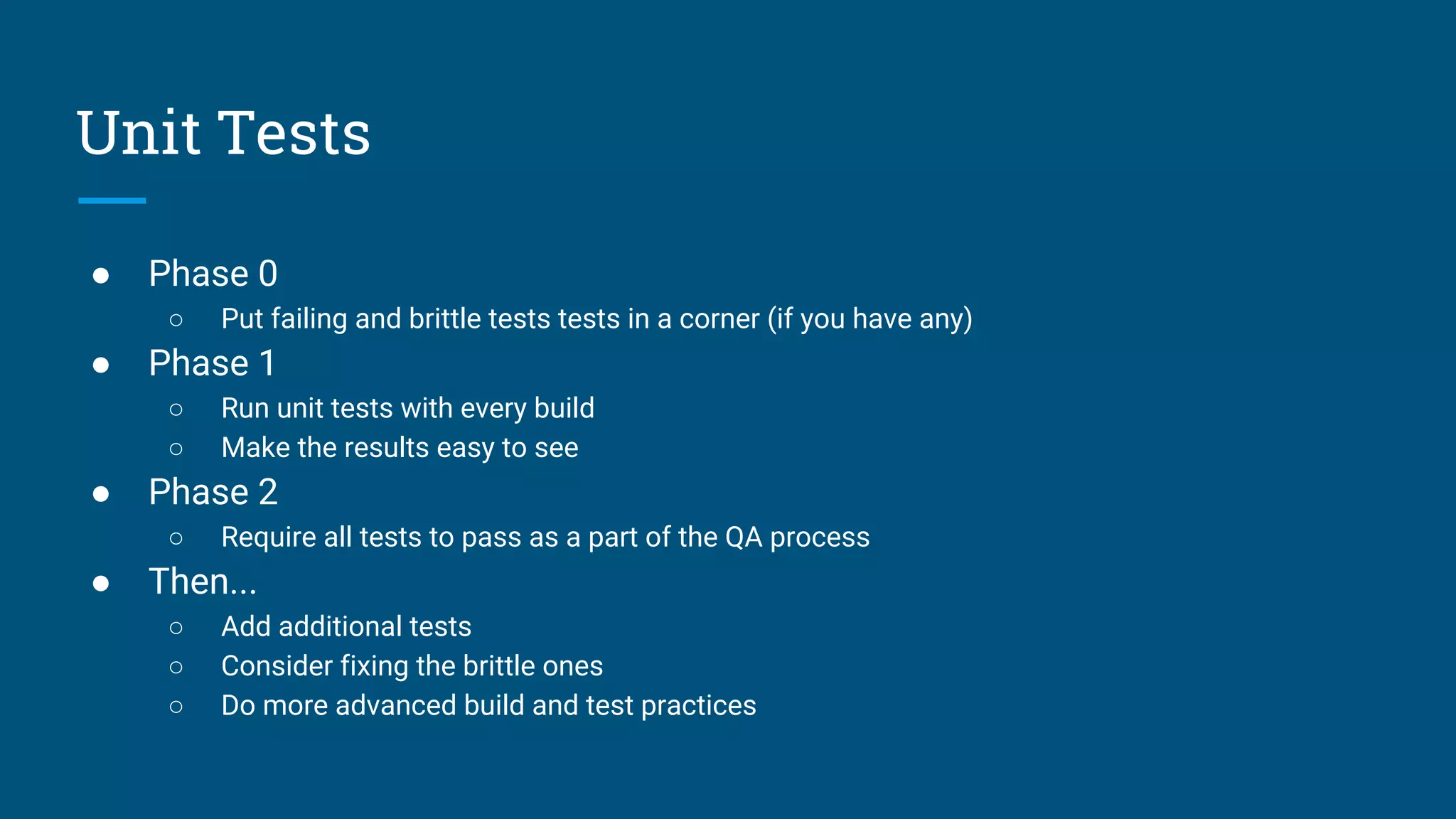

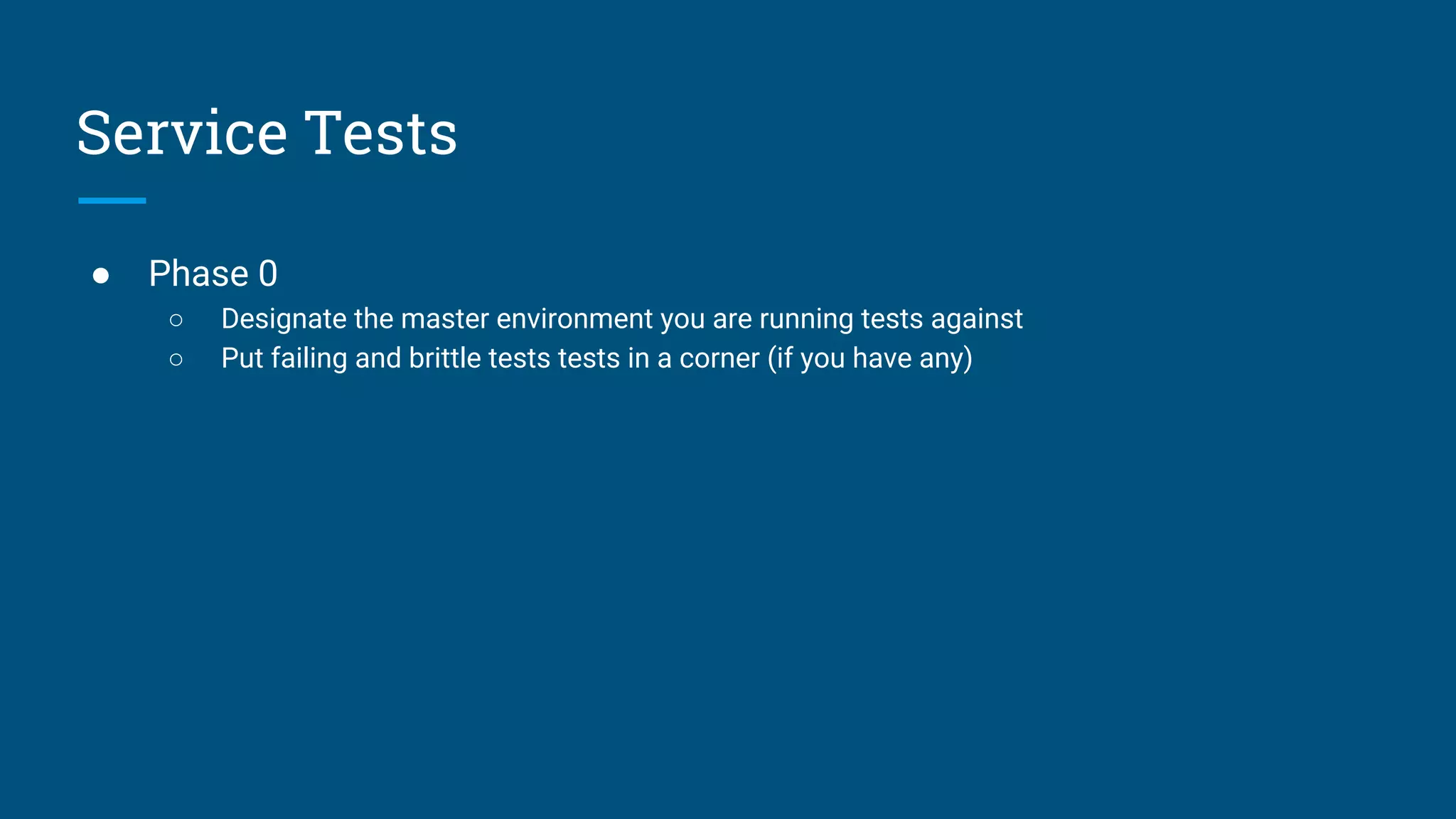

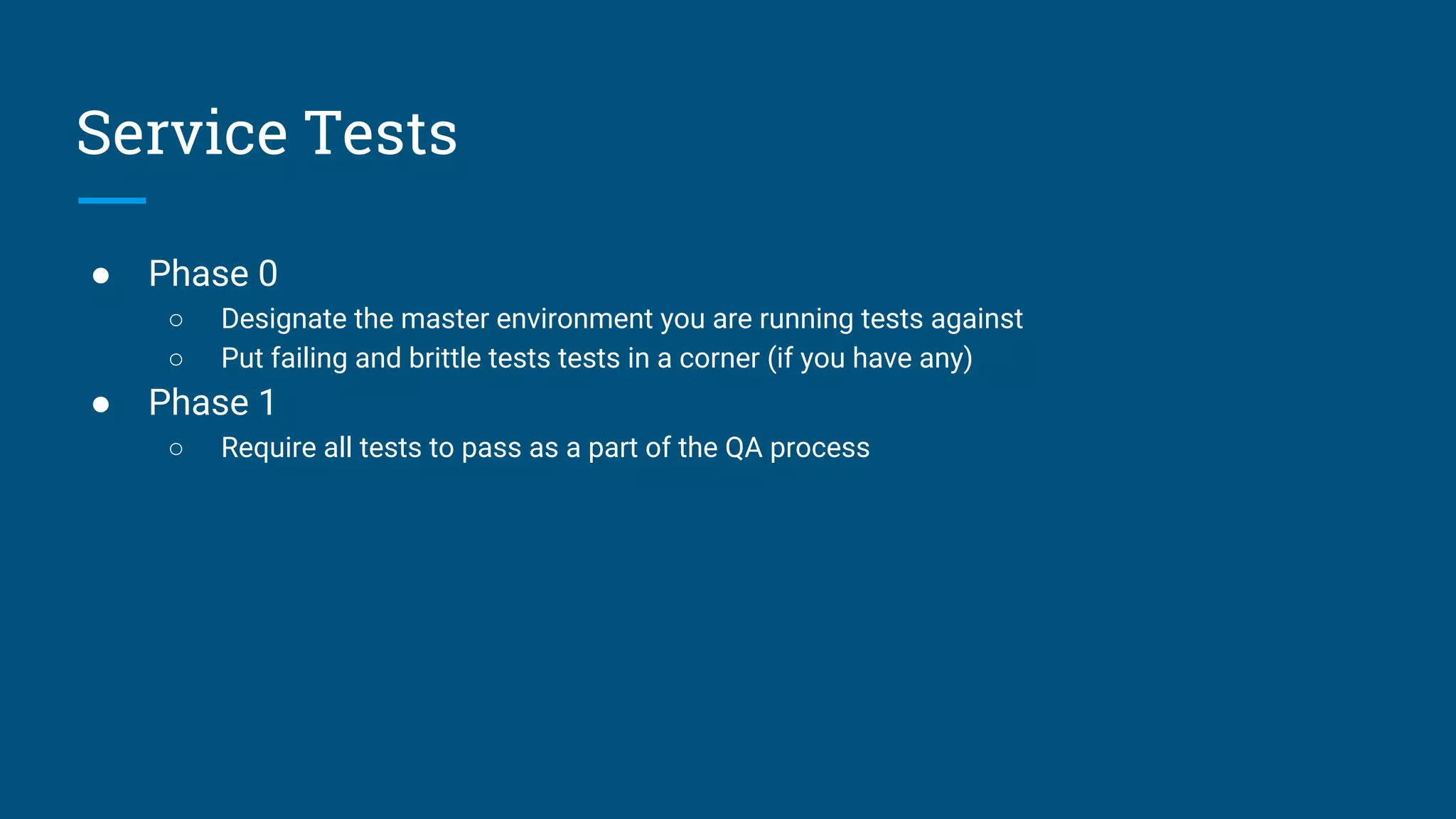

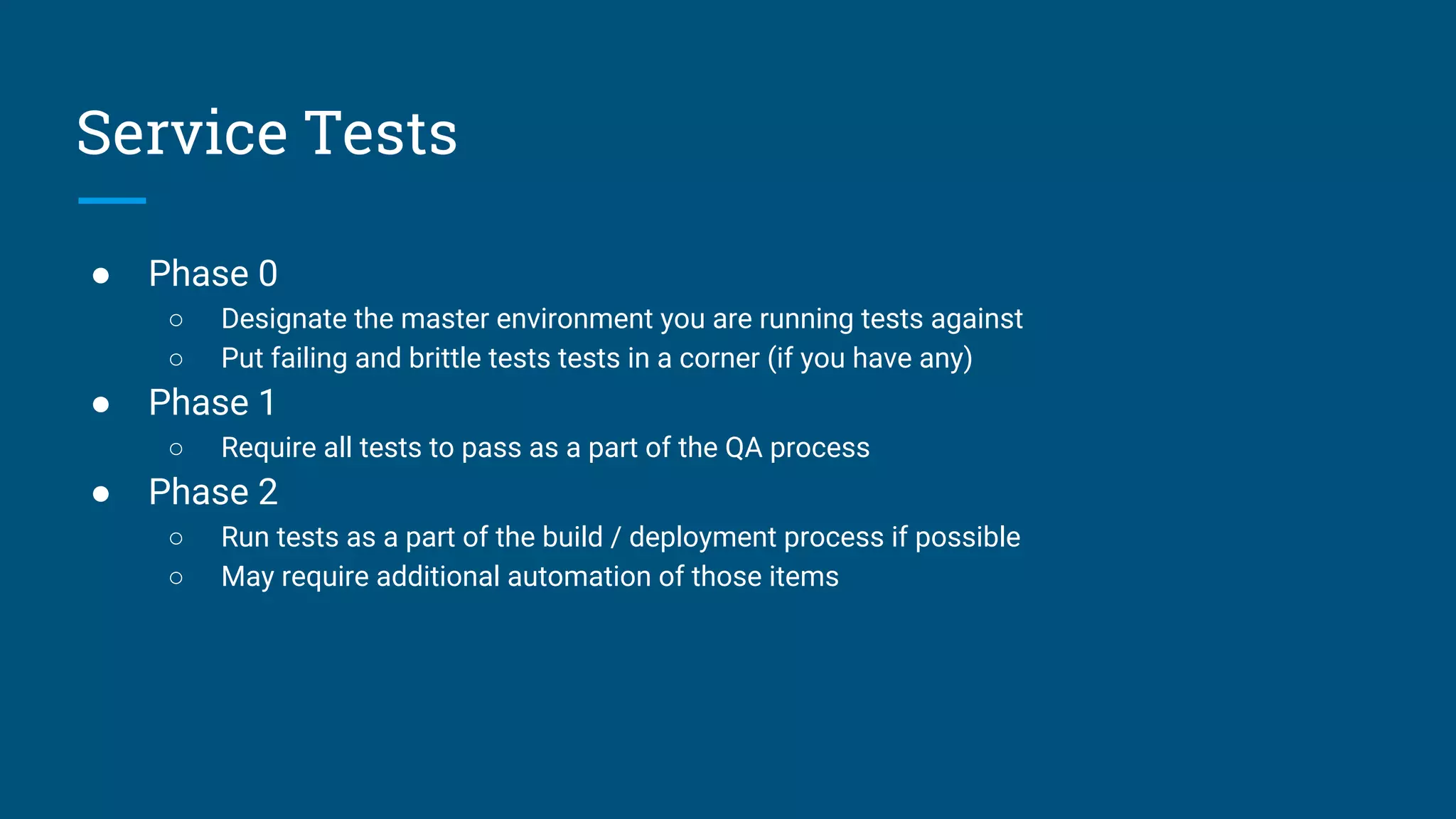

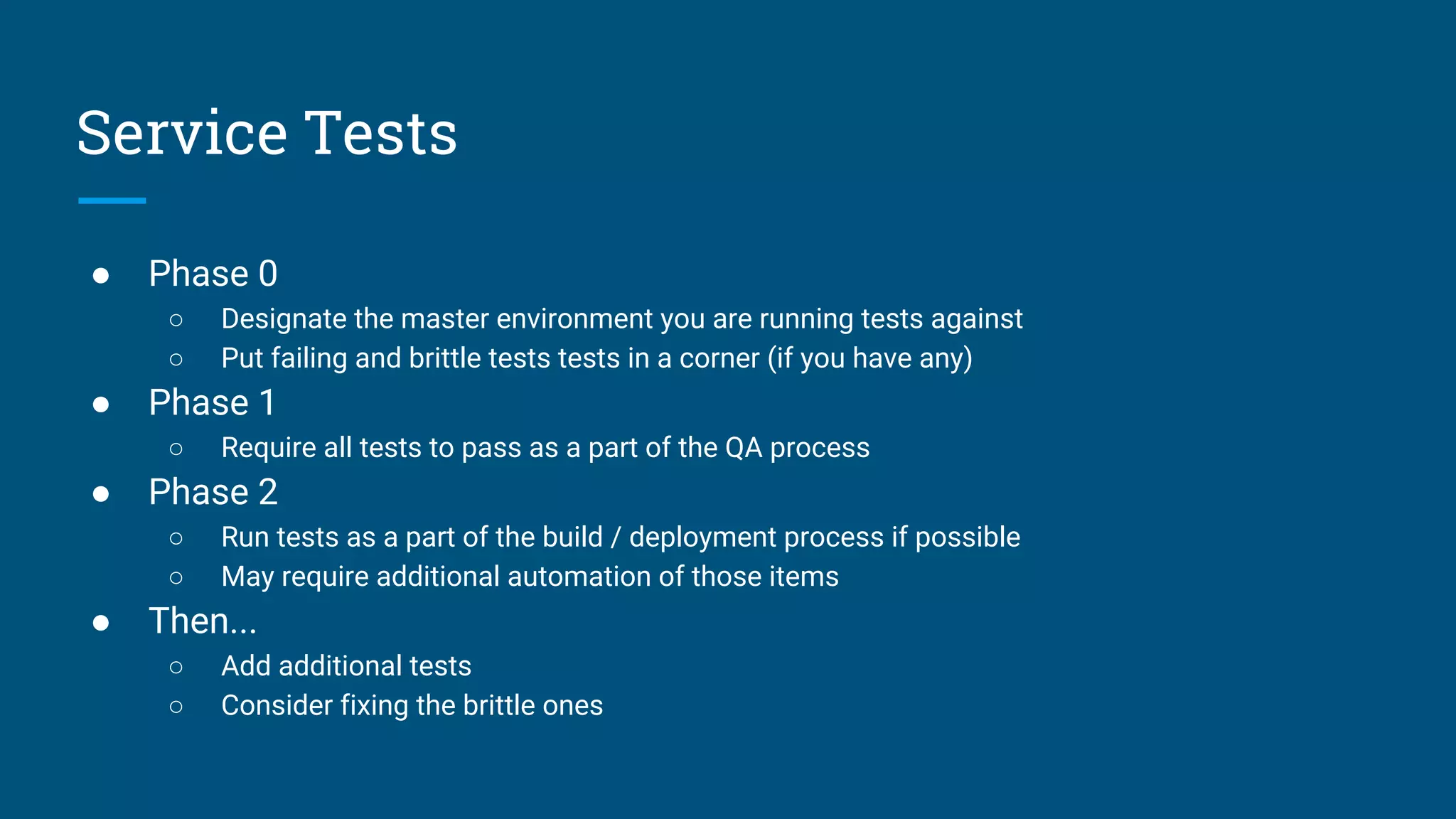

Paul Miles outlines QA strategies for testing legacy web apps, with an emphasis on introducing automated tests to applications that have none. The document advises starting with the key workflows, choosing tools that suit the application, and phasing testing in gradually, with unit and service tests built into the build process. It also stresses static quality analysis, running tests early, right after each commit, and close collaboration within the team to build an effective testing infrastructure.
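As a rough illustration of the "start with key workflows" advice, a first automated test for untested legacy code can pin down what the code does today, so later changes that alter that behaviour fail the build. The sketch below uses Python's standard `unittest` module. The function `legacy_order_total`, the `SAVE10` discount code, and the `run_suite` helper are hypothetical stand-ins invented for this example and do not come from the talk.

```python
import unittest


def legacy_order_total(prices, discount_code=None):
    """Hypothetical stand-in for untested legacy logic behind a key workflow."""
    total = sum(prices)
    if discount_code == "SAVE10":  # assumed discount rule, for illustration only
        total = total * 0.9
    return round(total, 2)


class OrderTotalCharacterizationTest(unittest.TestCase):
    """Pins the behaviour the code has today, before anyone refactors it."""

    def test_total_without_discount(self):
        self.assertEqual(legacy_order_total([10.0, 5.5]), 15.5)

    def test_total_with_known_discount(self):
        self.assertEqual(legacy_order_total([100.0], "SAVE10"), 90.0)

    def test_unknown_discount_is_ignored(self):
        self.assertEqual(legacy_order_total([20.0], "BOGUS"), 20.0)


def run_suite():
    """Run the tests the way a build step might, returning True on success."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(
        OrderTotalCharacterizationTest
    )
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()


if __name__ == "__main__":
    # A non-zero exit code lets a build server fail the build on a broken test.
    raise SystemExit(0 if run_suite() else 1)
```

Running the file as a script after each commit is one simple way to get the early feedback the summary describes. Service-level tests could be added in a later phase following the same pattern.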