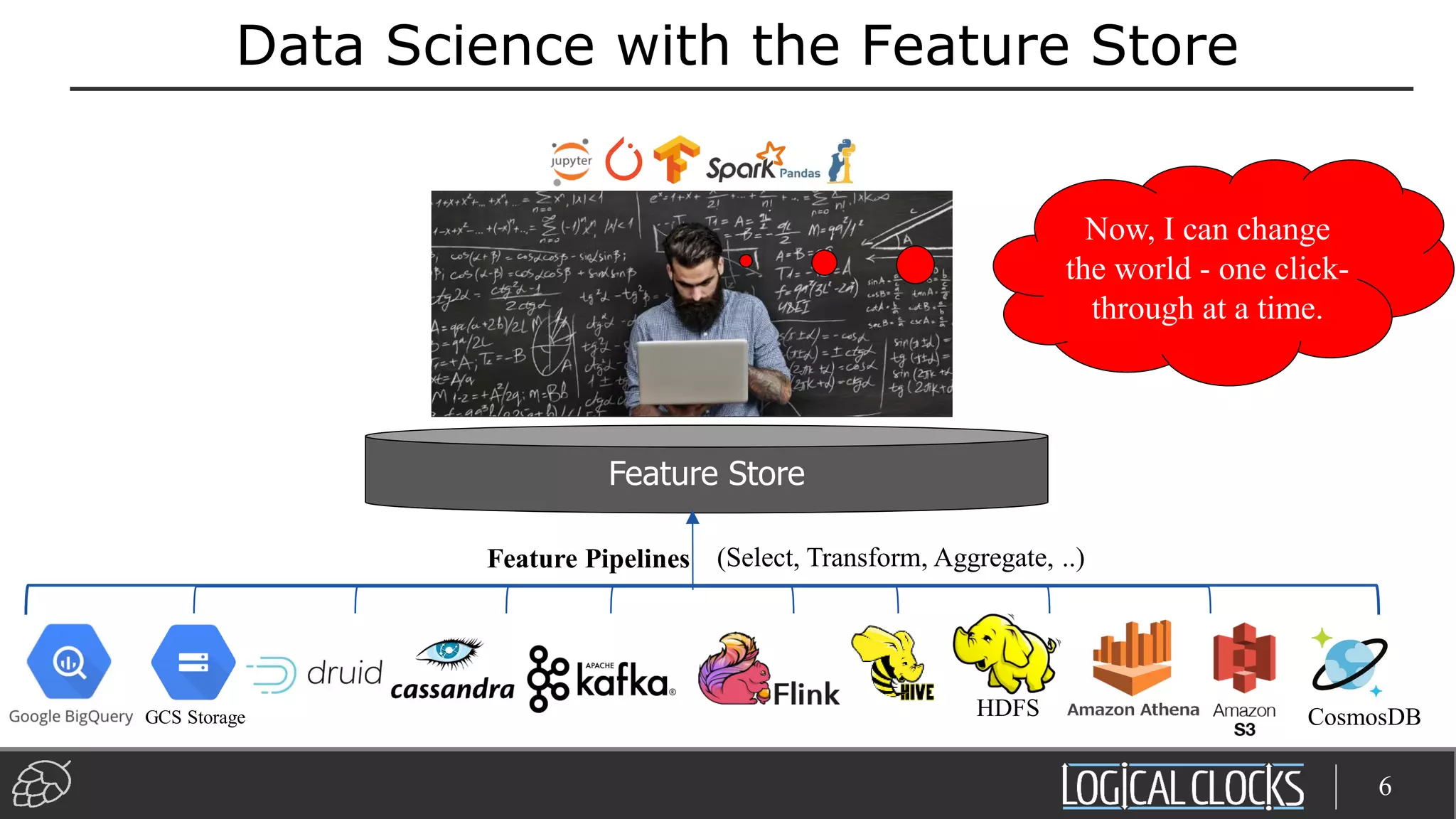

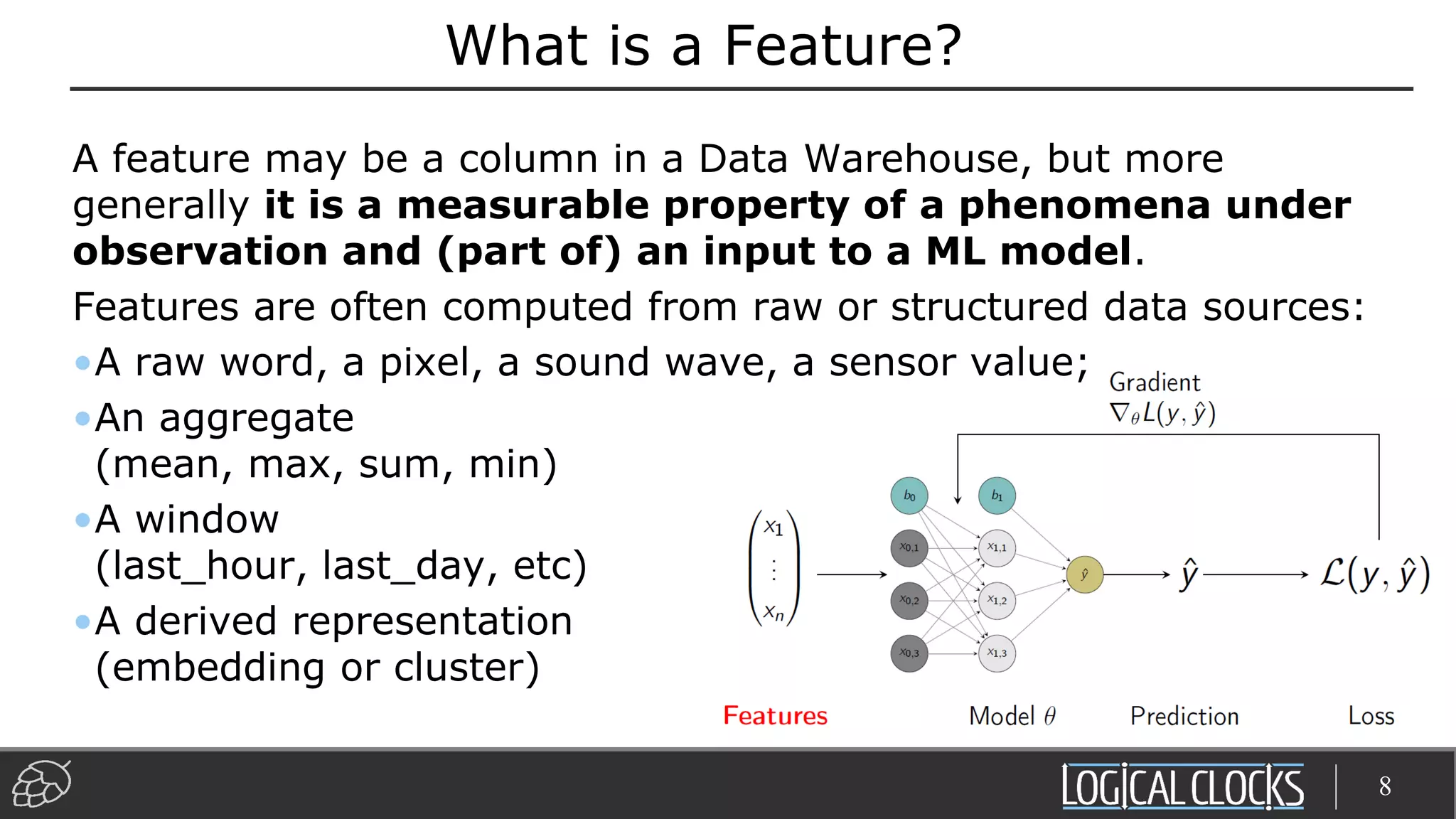

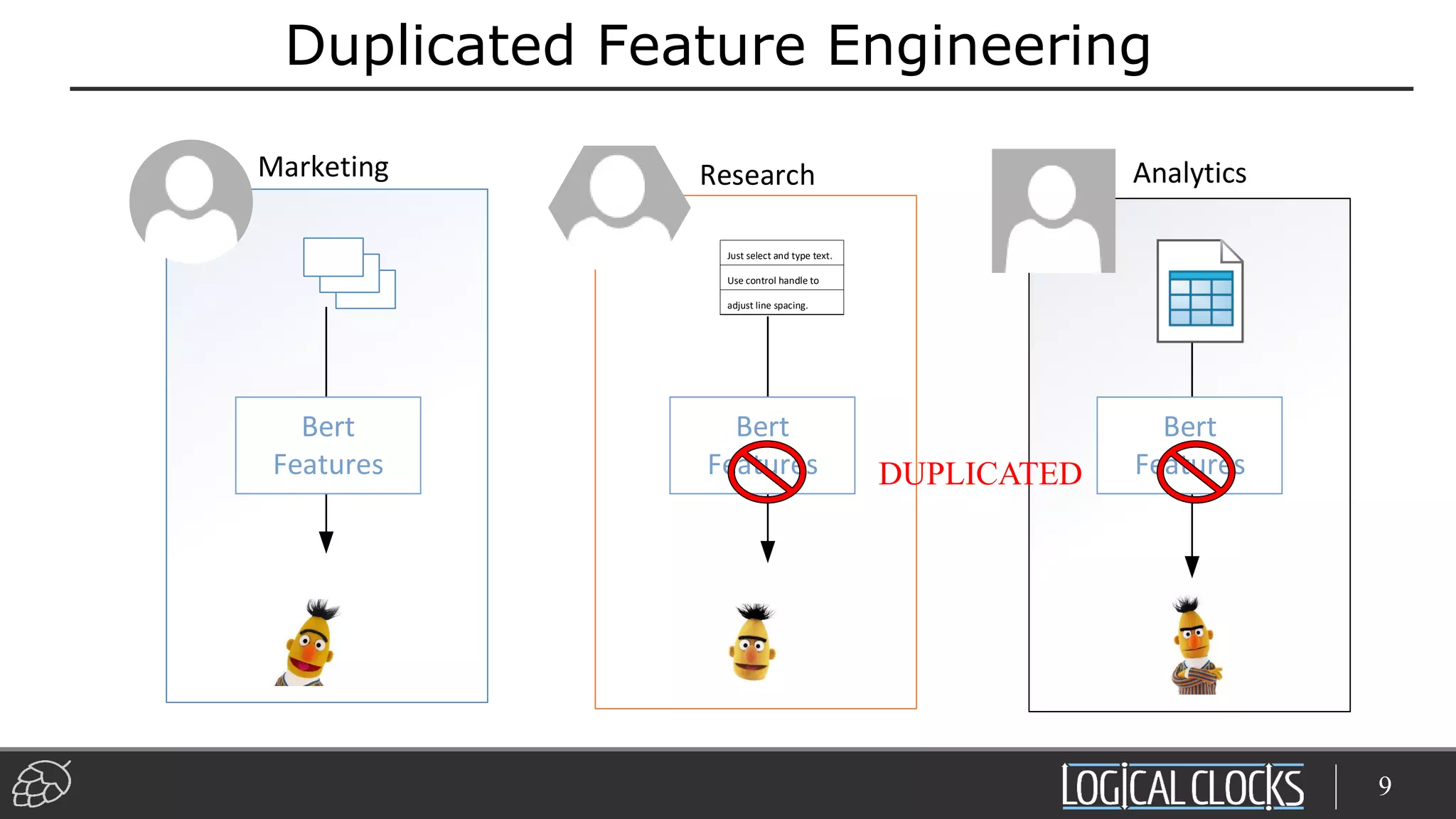

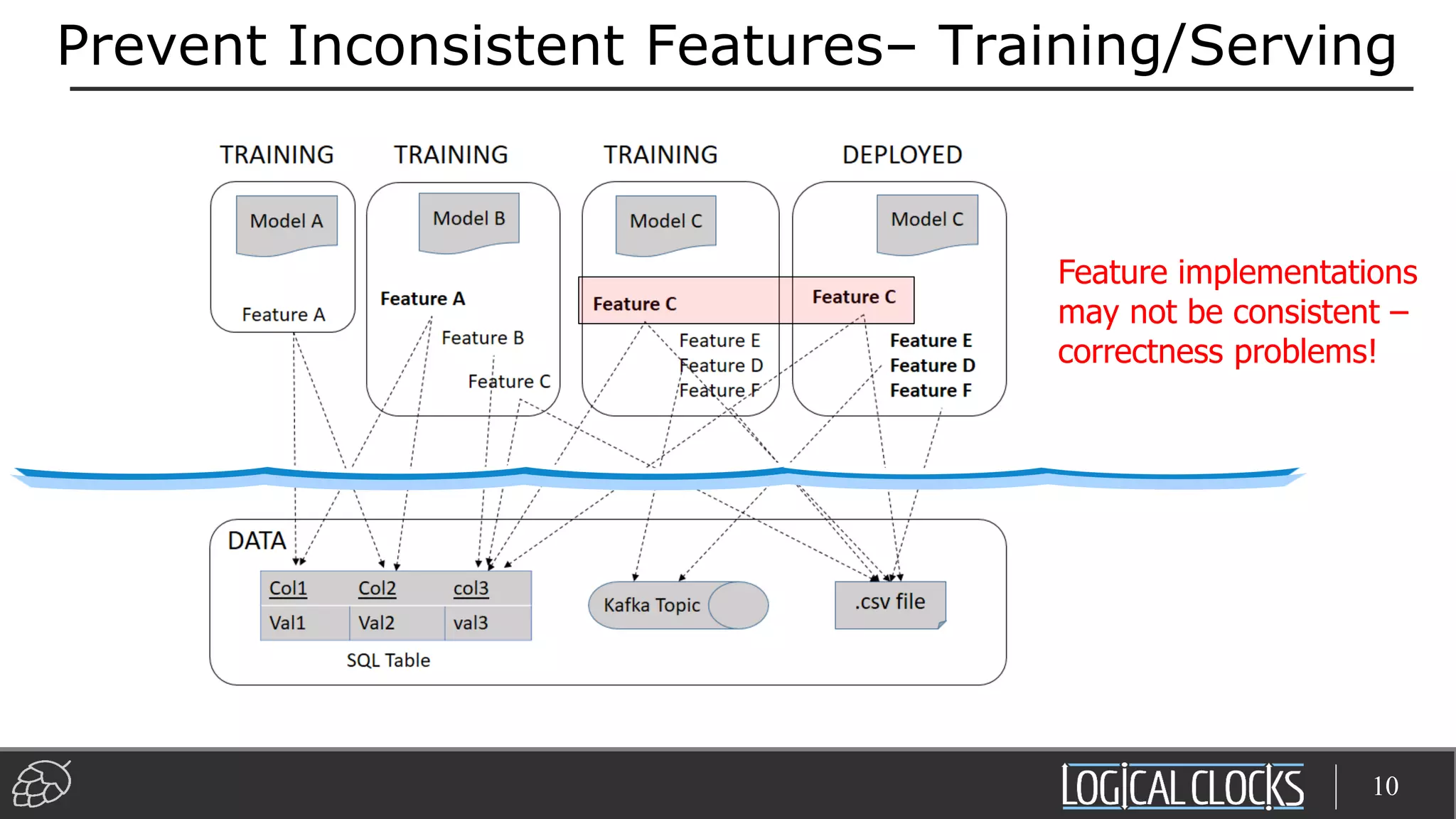

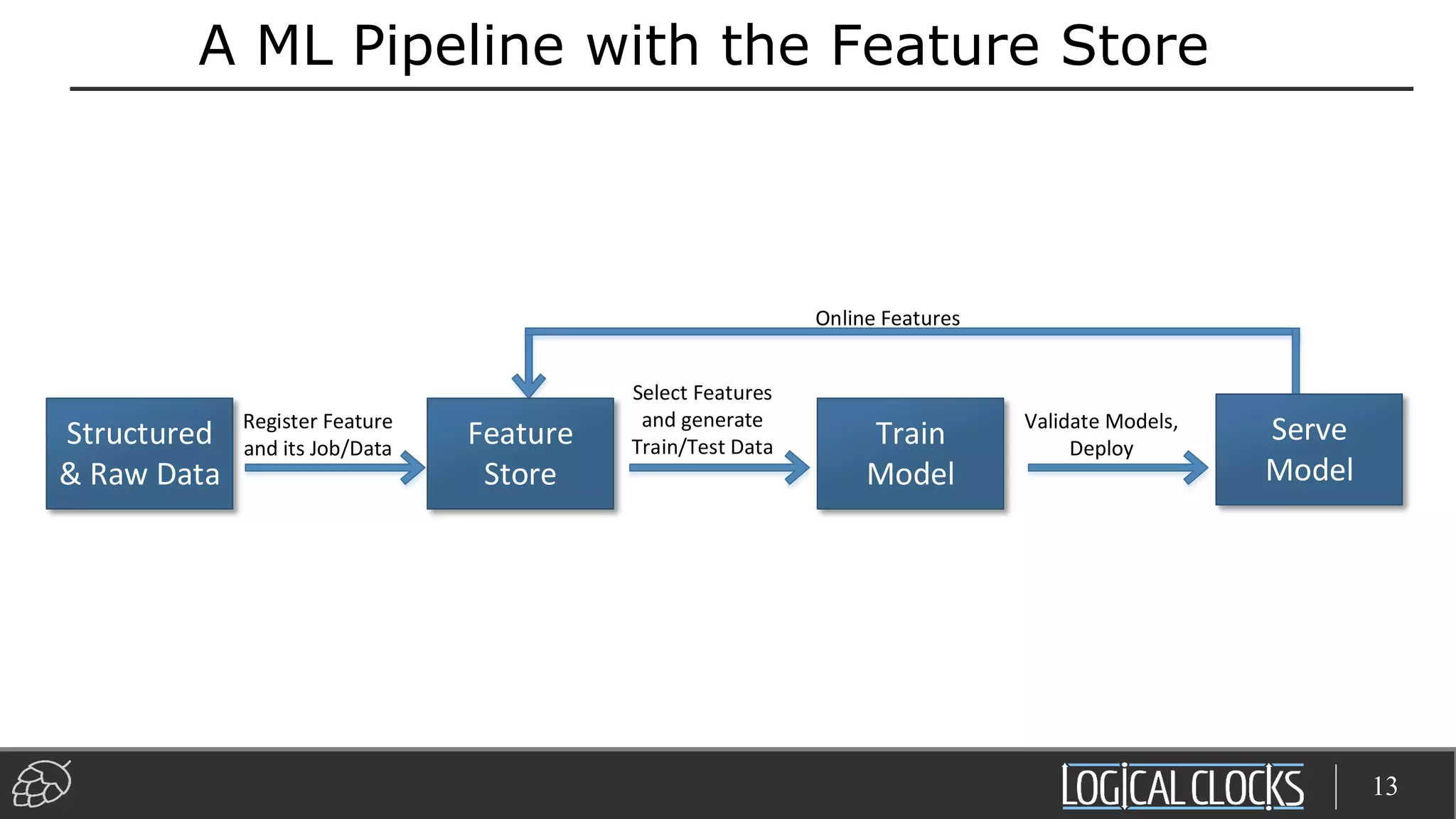

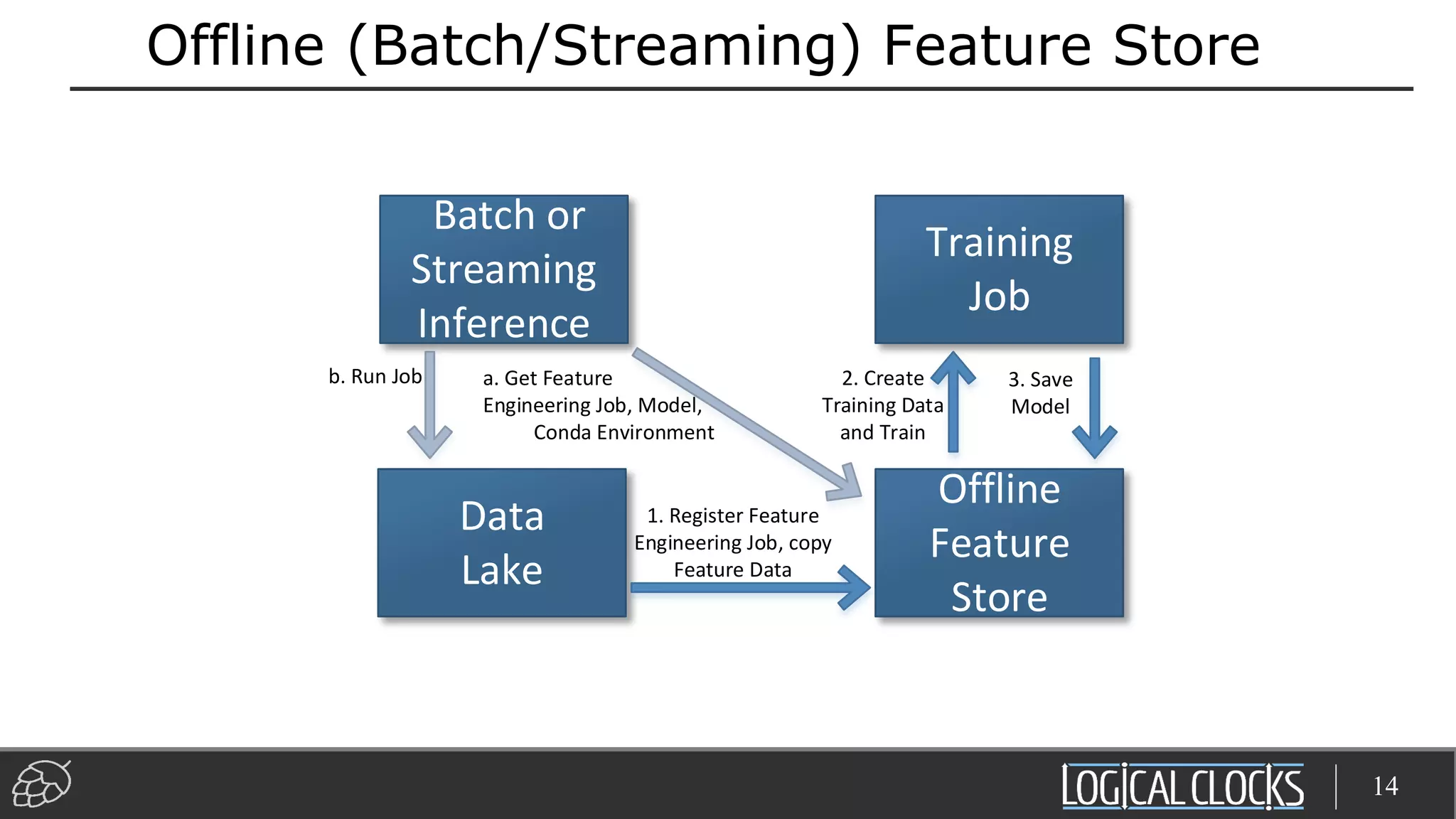

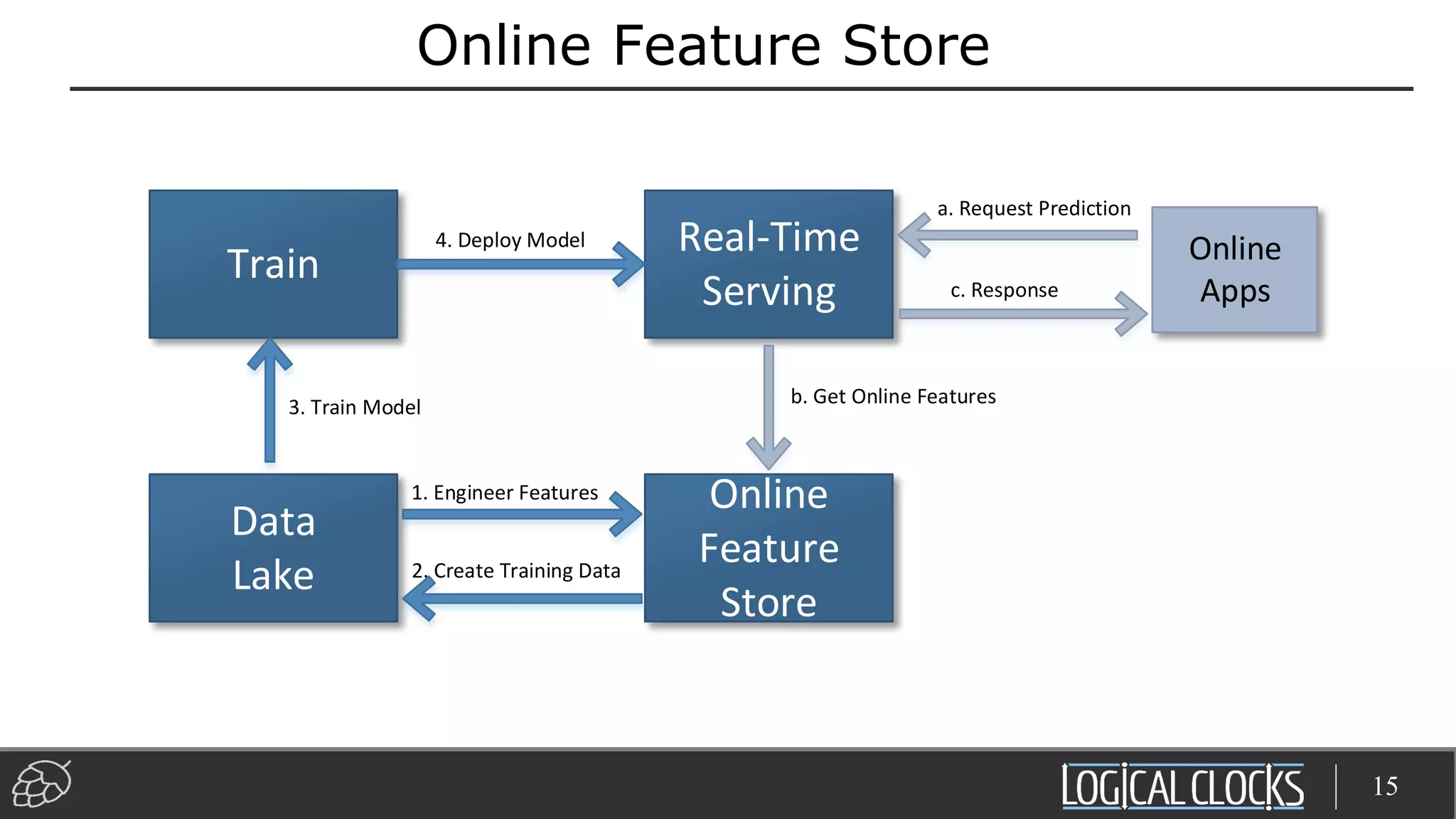

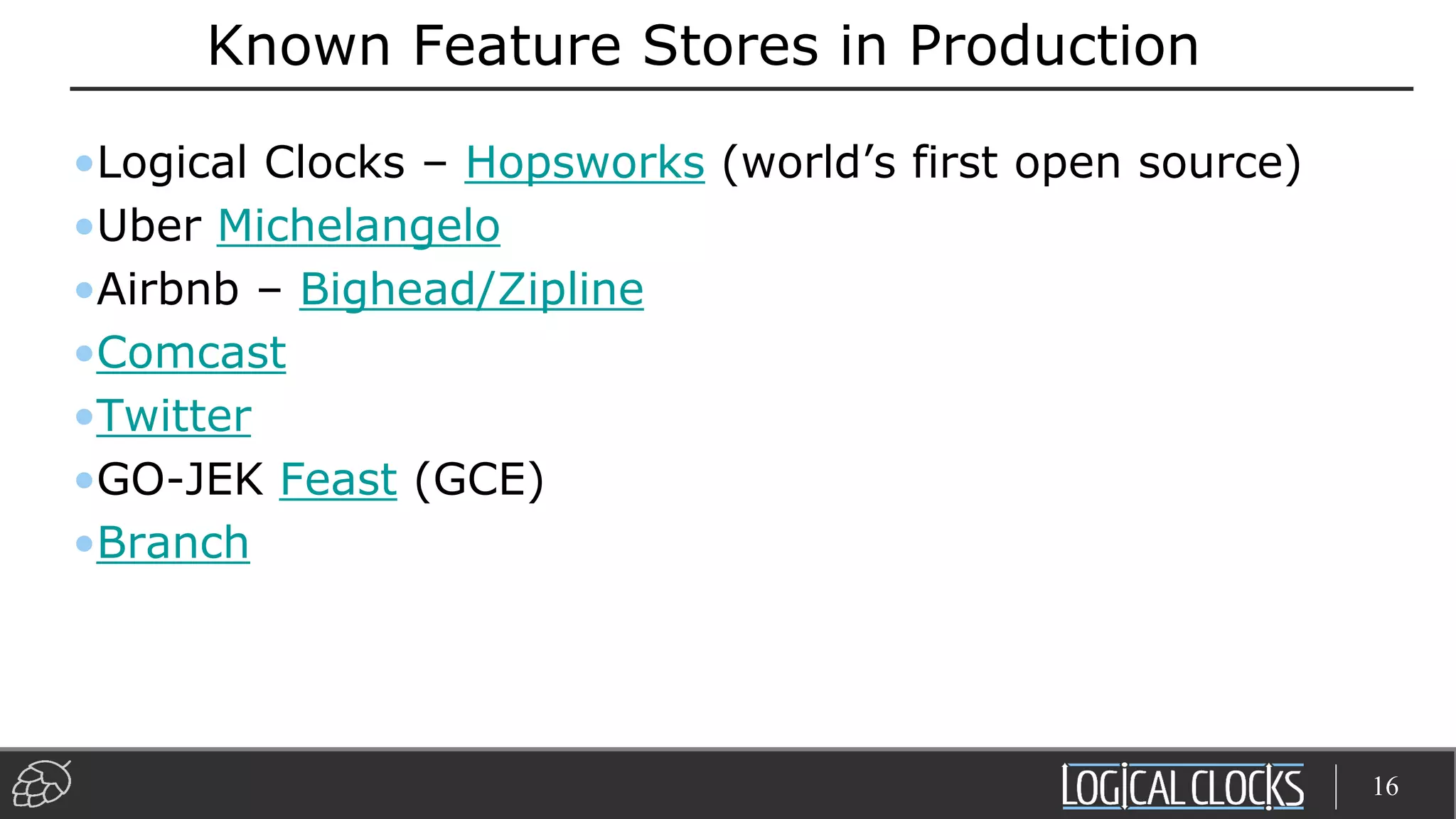

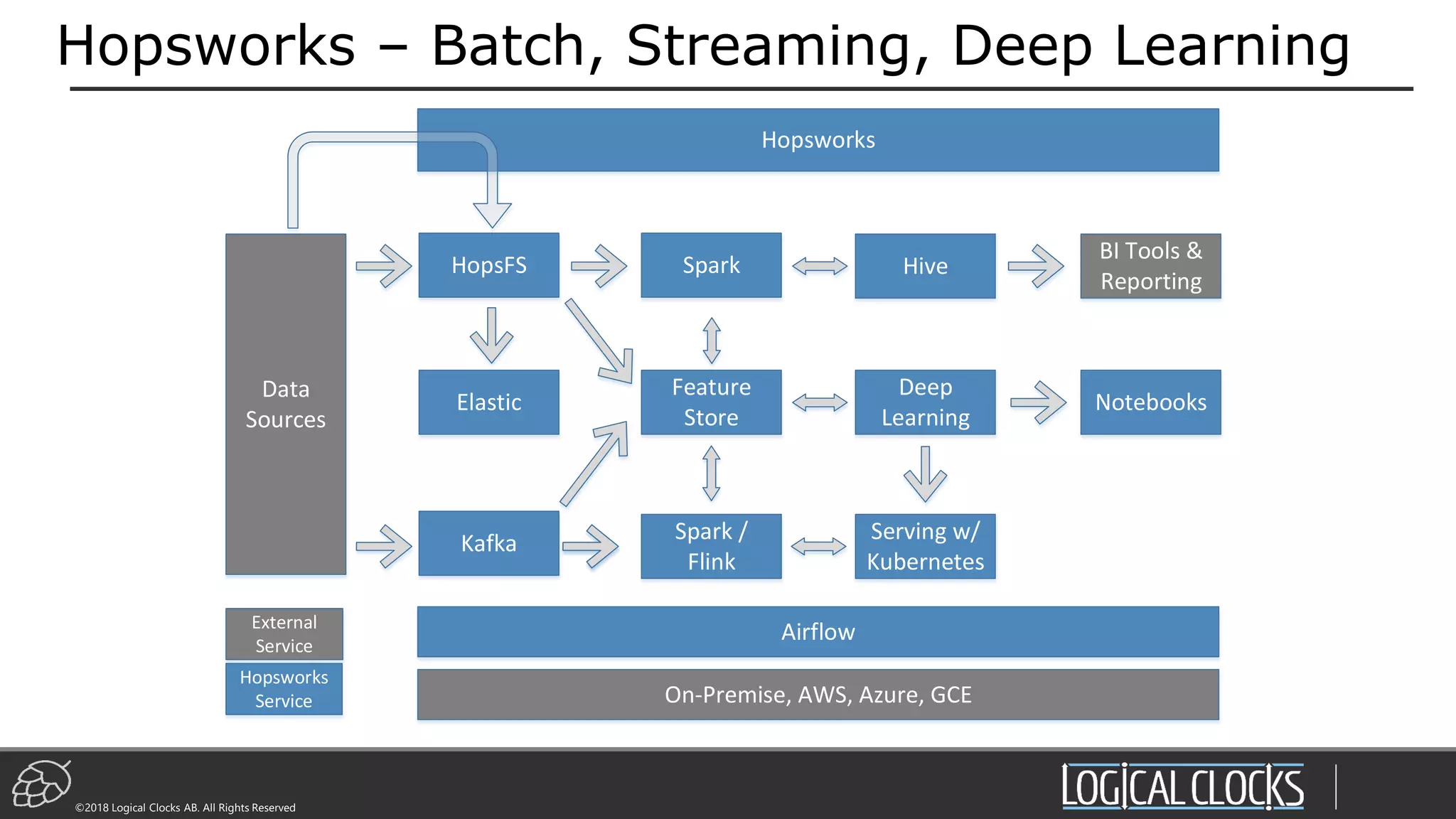

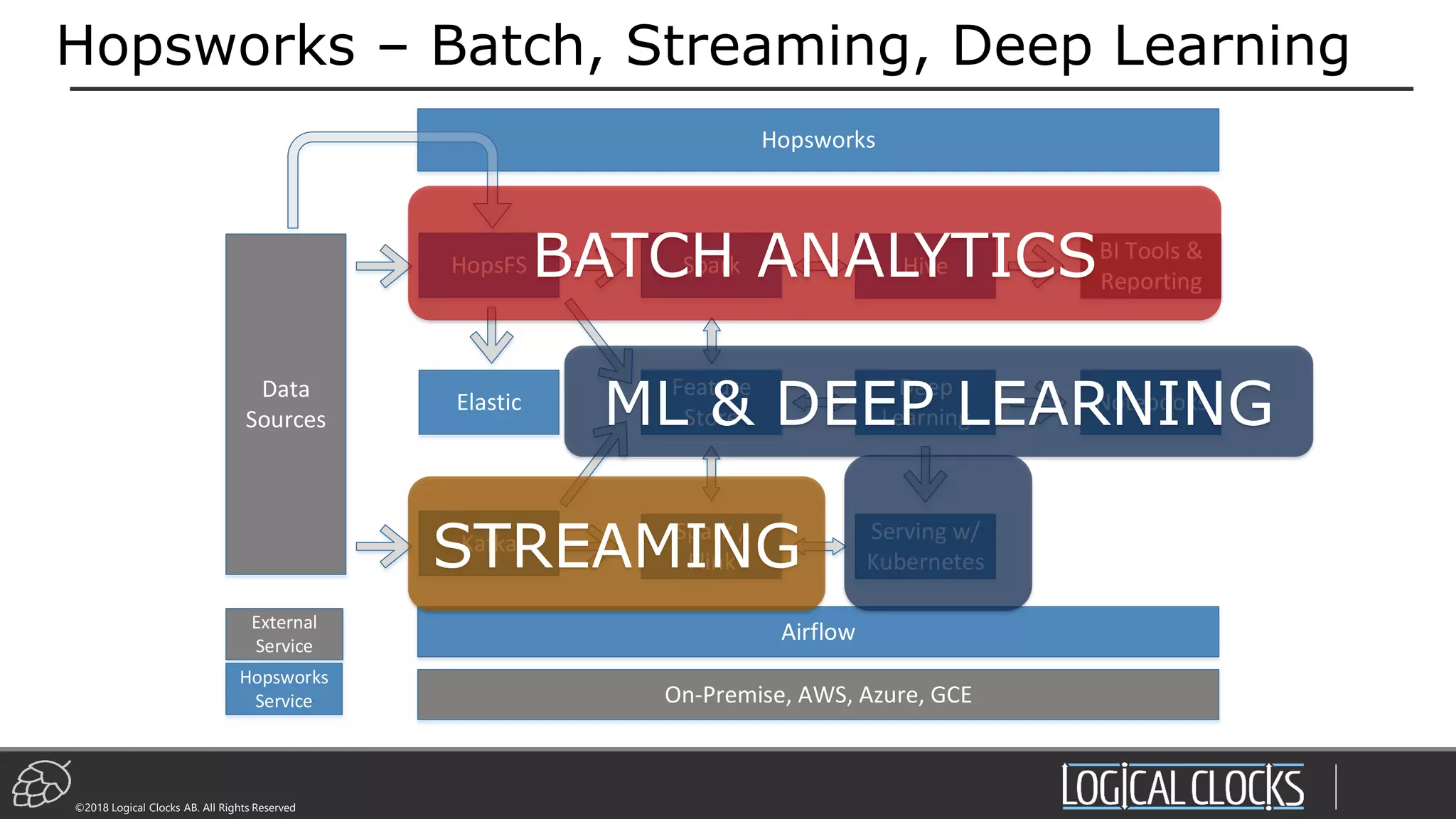

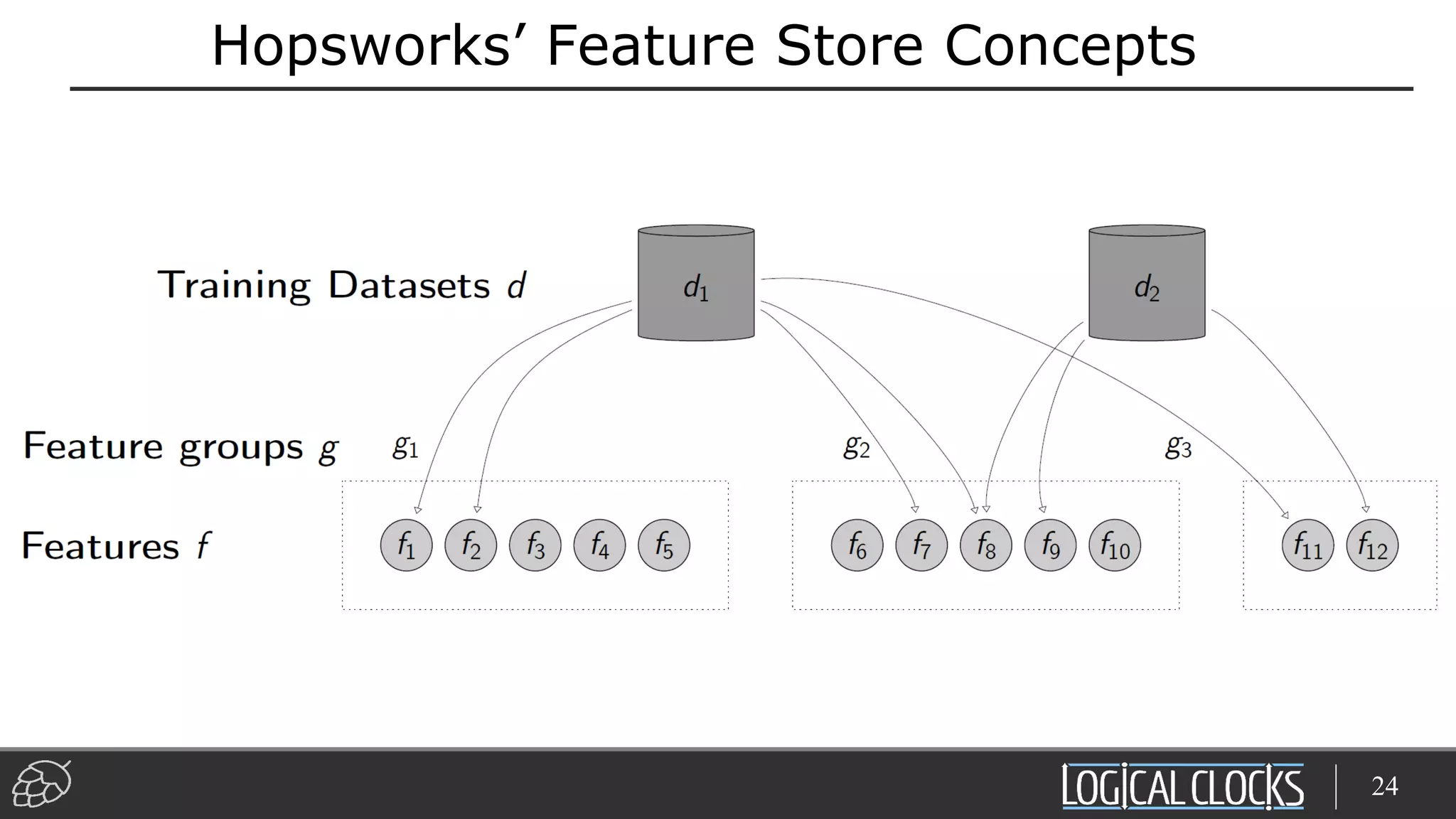

The document discusses the Feature Store, which is a system for managing features used in machine learning models. It allows data scientists to browse, select, and retrieve features to build training datasets without having to handle data engineering tasks. The Feature Store is integrated into the Hopsworks platform to support end-to-end machine learning pipelines for batch and streaming analytics, deep learning, and model serving. Features can be computed offline from raw data and automatically backfilled. The Feature Store enables reproducibility, reuse of features, and avoids duplicating feature engineering work.

![©2018 Logical Clocks AB. All Rights Reserved

Writing to the Feature Store (Data Engineer)

from hops import featurestore

raw_data = spark.read.parquet(filename)

polynomial_features = raw_data.map(lambda x: x**2)

featurestore.insert_into_featuregroup(polynomial_features, "polynomial_featuregroup")

Reading from the Feature Store (Data Scientist)

from hops import featurestore

df = featurestore.get_features(["average_attendance", "average_player_age"])

featurestore.create_training_dataset(df, "players_td")

Training dataset formats: tfrecords, numpy, petastorm, hdf5, csv](https://image.slidesharecdn.com/pydata-meetup-london-may-2019-dowling-logical-clocks-190508103446/75/PyData-Meetup-Feature-Store-for-Hopsworks-and-ML-Pipelines-7-2048.jpg)
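The write-then-read workflow on the slide above can be illustrated without a Hopsworks cluster. The following is a minimal in-memory sketch, not the `hops` library: the `ToyFeatureStore` class, the `team_id` join key, the feature group names, and all values are assumptions made up for illustration. It shows the core idea that a data scientist asks for features by name and the store works out which feature groups to join.

```python
import csv
import io


class ToyFeatureStore:
    """In-memory stand-in for a feature store (hypothetical, not the hops API)."""

    def __init__(self):
        # feature group name -> {entity key -> {feature name -> value}}
        self.featuregroups = {}

    def insert_into_featuregroup(self, rows, name, key="team_id"):
        """Add rows (dicts) to a feature group, indexed by the join key."""
        group = self.featuregroups.setdefault(name, {})
        for row in rows:
            features = {k: v for k, v in row.items() if k != key}
            group.setdefault(row[key], {}).update(features)

    def get_features(self, names, key="team_id"):
        """Locate each requested feature in whichever group holds it, then join on the key."""
        joined = {}
        for feature in names:
            for group in self.featuregroups.values():
                for entity, features in group.items():
                    if feature in features:
                        joined.setdefault(entity, {key: entity})[feature] = features[feature]
        # Inner join: keep only entities that have every requested feature.
        return [row for _, row in sorted(joined.items()) if all(n in row for n in names)]


def create_training_dataset(rows, columns):
    """Serialize joined rows as CSV text (csv is one of the formats named on the slide)."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


store = ToyFeatureStore()

# Data engineer side: write two feature groups (values are invented).
store.insert_into_featuregroup(
    [{"team_id": 1, "average_attendance": 30000.0},
     {"team_id": 2, "average_attendance": 12500.0}],
    "attendance_featuregroup",
)
store.insert_into_featuregroup(
    [{"team_id": 1, "average_player_age": 26.5},
     {"team_id": 2, "average_player_age": 24.0}],
    "players_featuregroup",
)

# Data scientist side: request features by name only.
df = store.get_features(["average_attendance", "average_player_age"])
players_td = create_training_dataset(
    df, ["team_id", "average_attendance", "average_player_age"]
)
print(players_td)
```

The reader never names a feature group. That lookup is what lets feature engineering and model training be owned by different people.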

![Features as first-class entities

• Features should be discoverable and reused.

• Features should be access controlled, versioned, and governed.

- Enable reproducibility.

• Ability to pre-compute and automatically backfill features.

- Aggregates, embeddings - avoid expensive re-computation.

- On-demand computation of features should also be possible.

• The Feature Store should help “solve the data problem, so that Data Scientists don’t have to.” [uber]](https://image.slidesharecdn.com/pydata-meetup-london-may-2019-dowling-logical-clocks-190508103446/75/PyData-Meetup-Feature-Store-for-Hopsworks-and-ML-Pipelines-11-2048.jpg)
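Versioning and backfilling, as listed on the slide above, can be made concrete with a small sketch. This is not how Hopsworks implements either one: the `VersionedFeature` class, its method names, and the sample data are all hypothetical. It shows two properties: a backfill precomputes a feature for past days and skips days already computed, and a new version of a feature leaves the old version's values untouched, which is what keeps earlier training runs reproducible.

```python
from datetime import date, timedelta


def daterange(start, end):
    """Yield each day from start to end, inclusive."""
    day = start
    while day <= end:
        yield day
        day += timedelta(days=1)


class VersionedFeature:
    """A feature with several definitions and precomputed values per (version, day)."""

    def __init__(self, name):
        self.name = name
        self.definitions = {}  # version -> function(raw values) -> feature value
        self.values = {}       # (version, day) -> precomputed feature value

    def register(self, version, fn):
        """Attach the computation used for a given version of the feature."""
        self.definitions[version] = fn

    def backfill(self, version, raw_by_day, start, end):
        """Compute missing values for each day in [start, end]; return how many were filled."""
        fn = self.definitions[version]
        filled = 0
        for day in daterange(start, end):
            if (version, day) not in self.values and day in raw_by_day:
                self.values[(version, day)] = fn(raw_by_day[day])
                filled += 1
        return filled

    def get(self, version, day):
        """Read a precomputed value; no recomputation happens here."""
        return self.values[(version, day)]


# Invented raw data: attendance counts observed on each day.
raw_attendance = {
    date(2019, 5, 1): [10, 20],
    date(2019, 5, 2): [30, 50],
    date(2019, 5, 3): [5],
}

feature = VersionedFeature("average_attendance")
feature.register(1, lambda xs: sum(xs) / len(xs))  # version 1: mean
feature.register(2, max)                           # version 2: a changed definition

start, end = date(2019, 5, 1), date(2019, 5, 3)
first_run = feature.backfill(1, raw_attendance, start, end)   # fills all three days
second_run = feature.backfill(1, raw_attendance, start, end)  # nothing left to fill
feature.backfill(2, raw_attendance, start, end)

print(first_run, second_run)
print(feature.get(1, date(2019, 5, 2)), feature.get(2, date(2019, 5, 2)))
```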

![ML Infrastructure in Hopsworks

MODEL TRAINING

Feature Store

HopsML API & Airflow

[Diagram adapted from “technical debt of machine learning”]](https://image.slidesharecdn.com/pydata-meetup-london-may-2019-dowling-logical-clocks-190508103446/75/PyData-Meetup-Feature-Store-for-Hopsworks-and-ML-Pipelines-20-2048.jpg)

![Hyperparameter Optimization

# RUNS ON THE EXECUTORS
def train(lr, dropout):
    def input_fn(): # return dataset
    optimizer = …
    model = …
    model.add(Conv2D(…))
    model.compile(…)
    model.fit(…)
    model.evaluate(…)

# RUNS ON THE DRIVER
hparams = {'lr': [0.001, 0.0001],
           'dropout': [0.25, 0.5, 0.75]}
experiment.grid_search(train, hparams)

https://github.com/logicalclocks/hops-examples

Diagram: a Driver and Executors 1 to N, each with its own conda_env, on top of HopsFS (HDFS), which holds TensorBoard, Models, Experiments, Training Data, and Logs](https://image.slidesharecdn.com/pydata-meetup-london-may-2019-dowling-logical-clocks-190508103446/75/PyData-Meetup-Feature-Store-for-Hopsworks-and-ML-Pipelines-22-2048.jpg)
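The slide above passes a dictionary of hyperparameter lists to a grid search. The sketch below shows what that expansion amounts to, run locally in one process rather than on Spark executors. It is not the `hops` implementation: `expand_grid`, this `grid_search`, and the scoring formula inside `train` are assumptions for illustration only. The score is an arbitrary stand-in for the `model.fit` and `model.evaluate` calls the slide elides.

```python
from itertools import product


def expand_grid(hparams):
    """Turn {'lr': [...], 'dropout': [...]} into one kwargs dict per combination."""
    names = list(hparams)
    return [dict(zip(names, combo)) for combo in product(*(hparams[n] for n in names))]


def grid_search(train_fn, hparams):
    """Call train_fn once per combination; return (best_params, best_metric, all_results)."""
    results = [(params, train_fn(**params)) for params in expand_grid(hparams)]
    best_params, best_metric = max(results, key=lambda item: item[1])
    return best_params, best_metric, results


def train(lr, dropout):
    # Made-up score standing in for real training and evaluation.
    return 1.0 - abs(lr - 0.001) * 100 - abs(dropout - 0.5)


hparams = {"lr": [0.001, 0.0001], "dropout": [0.25, 0.5, 0.75]}
best_params, best_metric, results = grid_search(train, hparams)

print(len(results), "trials; best:", best_params)
```

Two learning rates and three dropout values give six independent trials. Because no trial depends on another, each can be handed to a separate executor, which is the split between driver and executors that the slide draws.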

![ML Pipelines of Jupyter Notebooks

Interactive: PySpark Kernel, Livy Server, .ipynb (HDFS contents); materialize certs, ENV variables

Jobs Service: Convert .ipynb to .py; Run .py or .jar; Schedule using REST API or UI; materialize certs, ENV variables

[logs, results]

Experiments & Tensorboard: View Old Notebooks, Experiments and Visualizations

HopsYARN, HopsFS](https://image.slidesharecdn.com/pydata-meetup-london-may-2019-dowling-logical-clocks-190508103446/75/PyData-Meetup-Feature-Store-for-Hopsworks-and-ML-Pipelines-33-2048.jpg)
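The "Convert .ipynb to .py" step on the slide above can be sketched with the standard library alone. A notebook file is a JSON document, so the code cells can be pulled out and concatenated. The slides do not say how Hopsworks performs this conversion, so `notebook_to_script` is a hypothetical helper, and the example notebook is invented. Dropping every line that starts with `%` or `!` is a simplification that could also remove a legitimate continuation line.

```python
import json


def notebook_to_script(notebook_json):
    """Concatenate the code cells of an nbformat-4 notebook into one Python script."""
    notebook = json.loads(notebook_json)
    chunks = []
    for cell in notebook.get("cells", []):
        if cell.get("cell_type") != "code":
            continue  # skip markdown and raw cells
        source = cell.get("source", "")
        # nbformat allows the source to be a single string or a list of lines.
        text = source if isinstance(source, str) else "".join(source)
        # Drop IPython magics and shell escapes, which are not valid Python.
        lines = [ln for ln in text.splitlines()
                 if not ln.lstrip().startswith(("%", "!"))]
        if lines:
            chunks.append("\n".join(lines))
    return "\n\n".join(chunks) + "\n"


# An invented three-cell notebook: one markdown cell and two code cells.
example_notebook = json.dumps({
    "nbformat": 4,
    "nbformat_minor": 2,
    "metadata": {},
    "cells": [
        {"cell_type": "markdown", "metadata": {},
         "source": ["# Feature engineering"]},
        {"cell_type": "code", "metadata": {}, "outputs": [], "execution_count": None,
         "source": ["%matplotlib inline\n", "x = 2\n", "y = x ** 2"]},
        {"cell_type": "code", "metadata": {}, "outputs": [], "execution_count": None,
         "source": "print(y)"},
    ],
})

script = notebook_to_script(example_notebook)
print(script)
```

Once a notebook is a plain script, it can be run and scheduled like any other job, which is the role the slide gives to the Jobs Service.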