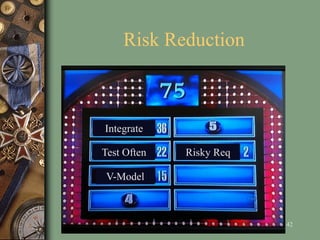

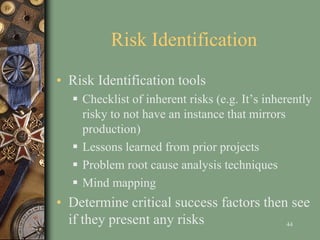

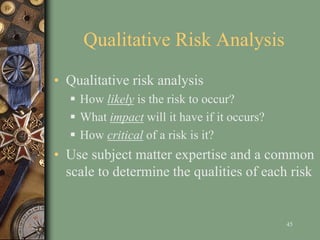

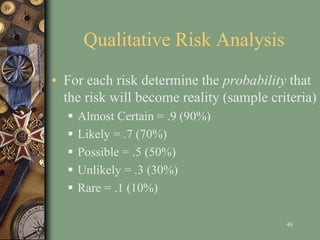

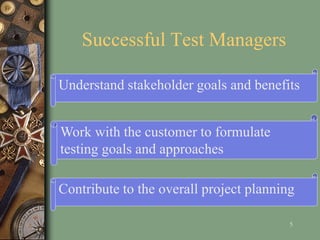

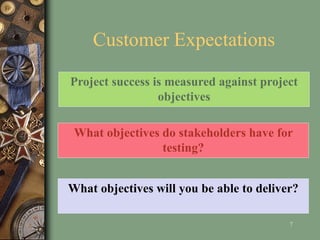

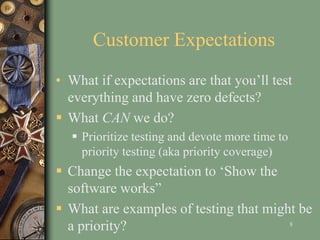

This document outlines project management tips for improving test planning, treating testing as a sub-project of the larger development effort. Key aspects include understanding stakeholder expectations, defining the test scope, prioritizing testing goals, managing risks, and involving QA early in the project. Applying project management processes to testing helps stakeholders keep it aligned with project objectives and improves overall quality.

![Planning

• Human Resource planning

What skills and knowledge do you need to

perform the tests you identified?

Who will be assigned to the project?

What is their availability

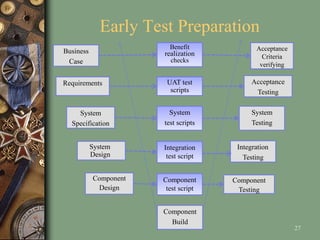

• Write test cases as soon as possible in the

project [See V-model]

26](https://image.slidesharecdn.com/t19-151213234334/85/Project-Management-Tips-to-Improve-Test-Planning-26-320.jpg)