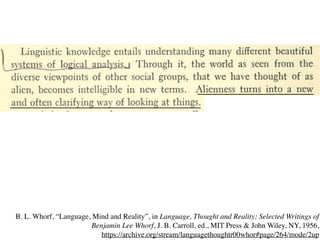

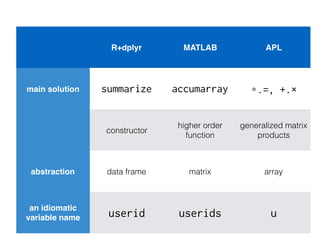

R solution: Uses dplyr functions to group the data by user ID, then calculates the average rating for each user using summarize() and mean(). Returns a tibble with user ID and average rating.

MATLAB solution: Uses accumarray() to bin the ratings by user ID, then applies mean() within each bin. Returns a sparse vector, indexed by user ID, holding the average rating for each unique user ID.

APL solution: Defines vectors for user IDs and ratings. Finds the unique user IDs and the indexes that bin the ratings by user. Calculates the average rating within each bin by summing the product of ratings and indexes, then dividing by the sum of the indexes. Returns vectors of average ratings and unique user IDs.

![A MATLAB solution](https://image.slidesharecdn.com/manchester-160920143101/85/Programming-languages-history-relativity-and-design-32-320.jpg)

```matlab
>> userids = [381 1291 3992 193942 9493 381 3992 381 3992 193942];
>> ratings = [5 4 4 4 5 5 5 3 5 4];
>> accumarray(userids',ratings',[],@mean,[],true)

ans =

     (381,1)       4.3333
    (1291,1)       4.0000
    (3992,1)       4.6667
    (9493,1)       5.0000
  (193942,1)       4.0000
```

![A C solution](https://image.slidesharecdn.com/manchester-160920143101/85/Programming-languages-history-relativity-and-design-36-320.jpg)

```c
#include <stdio.h>

int main(void)
{
    int userids[10] = {381, 1291, 3992, 193942, 9494, 381,
                       3992, 381, 3992, 193942};
    int ratings[10] = {5,4,4,4,5,5,5,3,5,4};
    int nuniq = 0;
    int useridsuniq[10];
    int counts[10];
    float avgratings[10];
    int isunique;
    int i, j;

    for(i=0; i<10; i++)
    {
        /*Have we seen this userid?*/
        isunique = 1;
        for(j=0; j<nuniq; j++)
        {
            if(userids[i] == useridsuniq[j])
            {
                isunique = 0;
                /*Update accumulators*/
                counts[j]++;
                avgratings[j] += ratings[i];
            }
        }

        /* New entry*/
        if(isunique)
        {
            useridsuniq[nuniq] = userids[i];
            counts[nuniq] = 1;
            avgratings[nuniq++] = ratings[i];
        }
    }

    /* Now divide through */
    for(j=0; j<nuniq; j++)
        avgratings[j] /= counts[j];

    /* Now print*/
    for(j=0; j<nuniq; j++)
        printf("%d\t%f\n", useridsuniq[j],
               avgratings[j]);

    return 0;
}
```

![A C solution](https://image.slidesharecdn.com/manchester-160920143101/85/Programming-languages-history-relativity-and-design-37-320.jpg)

The same C solution, annotated with:

- boilerplate code
- manual memory management
- small standard library

![Another Julia solution](https://image.slidesharecdn.com/manchester-160920143101/85/Programming-languages-history-relativity-and-design-38-320.jpg)

```julia
userids=[381, 1291, 3992, 193942, 9494, 381, 3992, 381, 3992, 193942];
ratings=[5,4,4,4,5,5,5,3,5,4];
counts = [];
uuserids=[];
uratings=[];
for i=1:length(userids)
    j = findfirst(uuserids, userids[i])
    if j==0 #not found
        push!(uuserids, userids[i])
        push!(uratings, ratings[i])
        push!(counts, 1)
    else #already seen
        uratings[j] += ratings[i]
        counts[j] += 1
    end
end
[uuserids uratings./counts]
```

```
5x2 Array{Any,2}:
    381  4.33333
   1291  4.0
   3992  4.66667
 193942  4.0
   9494  5.0
```

![Another Julia solution](https://image.slidesharecdn.com/manchester-160920143101/85/Programming-languages-history-relativity-and-design-39-320.jpg)

The same Julia solution, annotated with:

- fast loops
- vectorized operations
- mix and match what you want
- other solutions: DataFrames, functional…