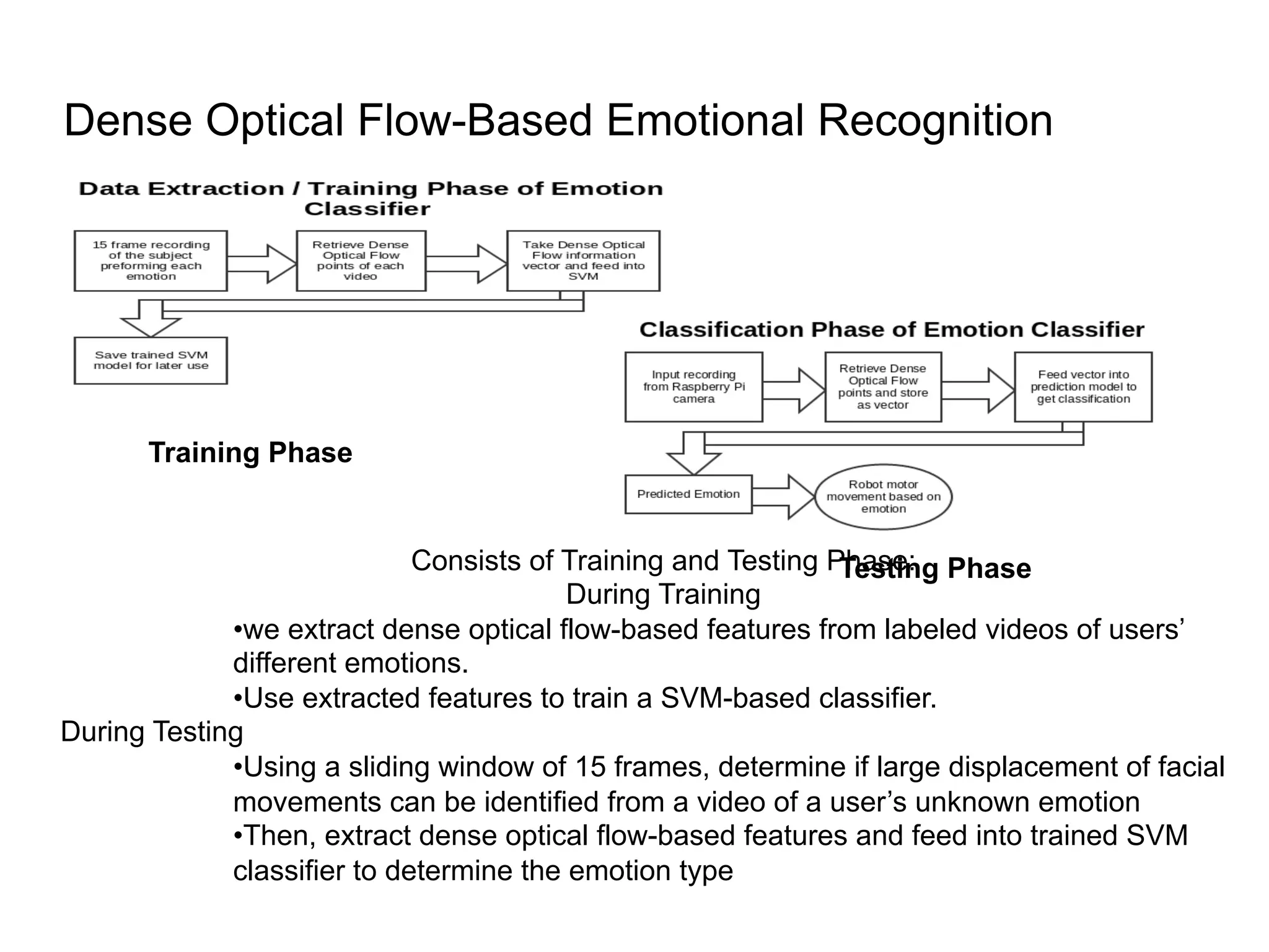

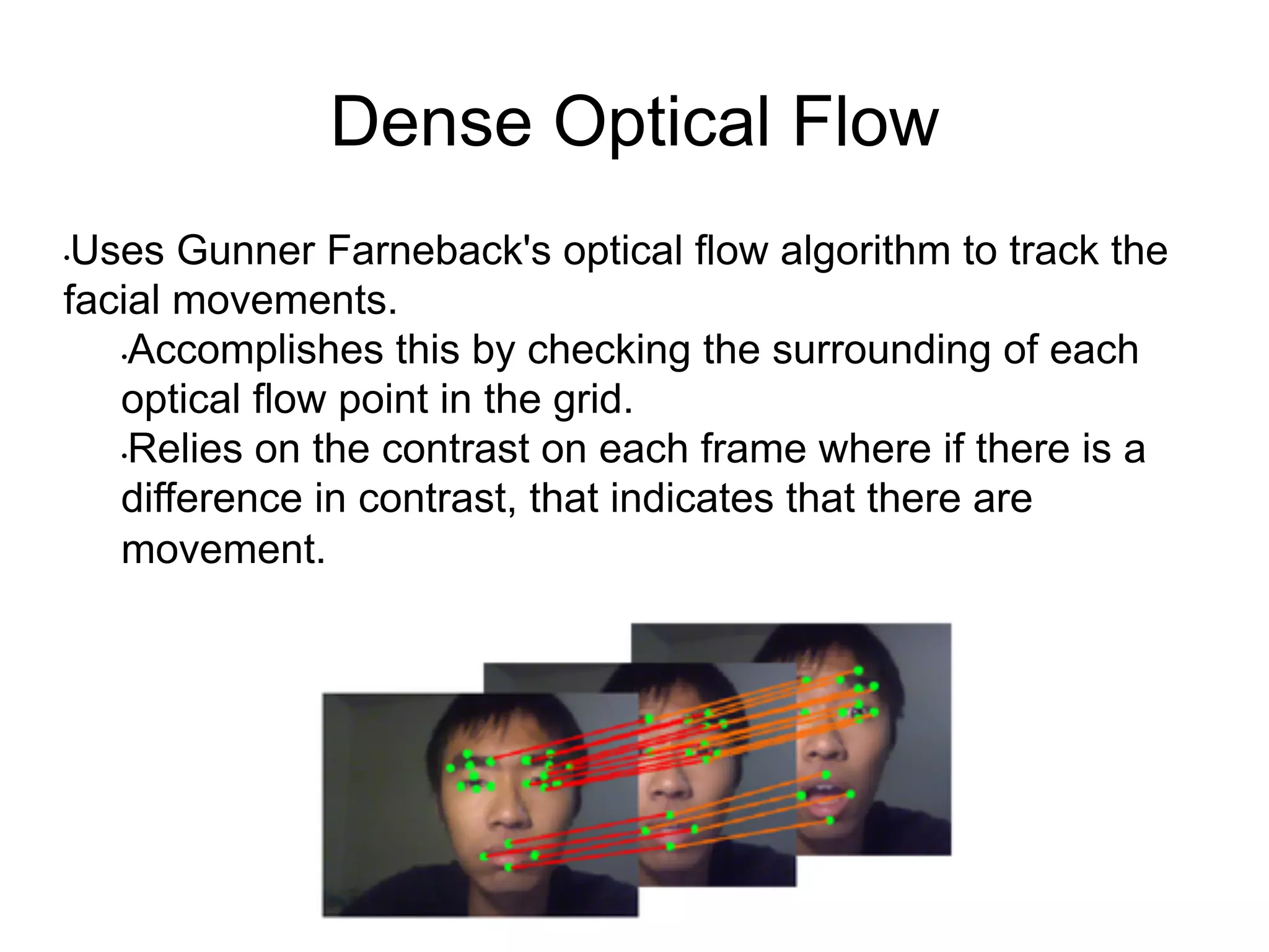

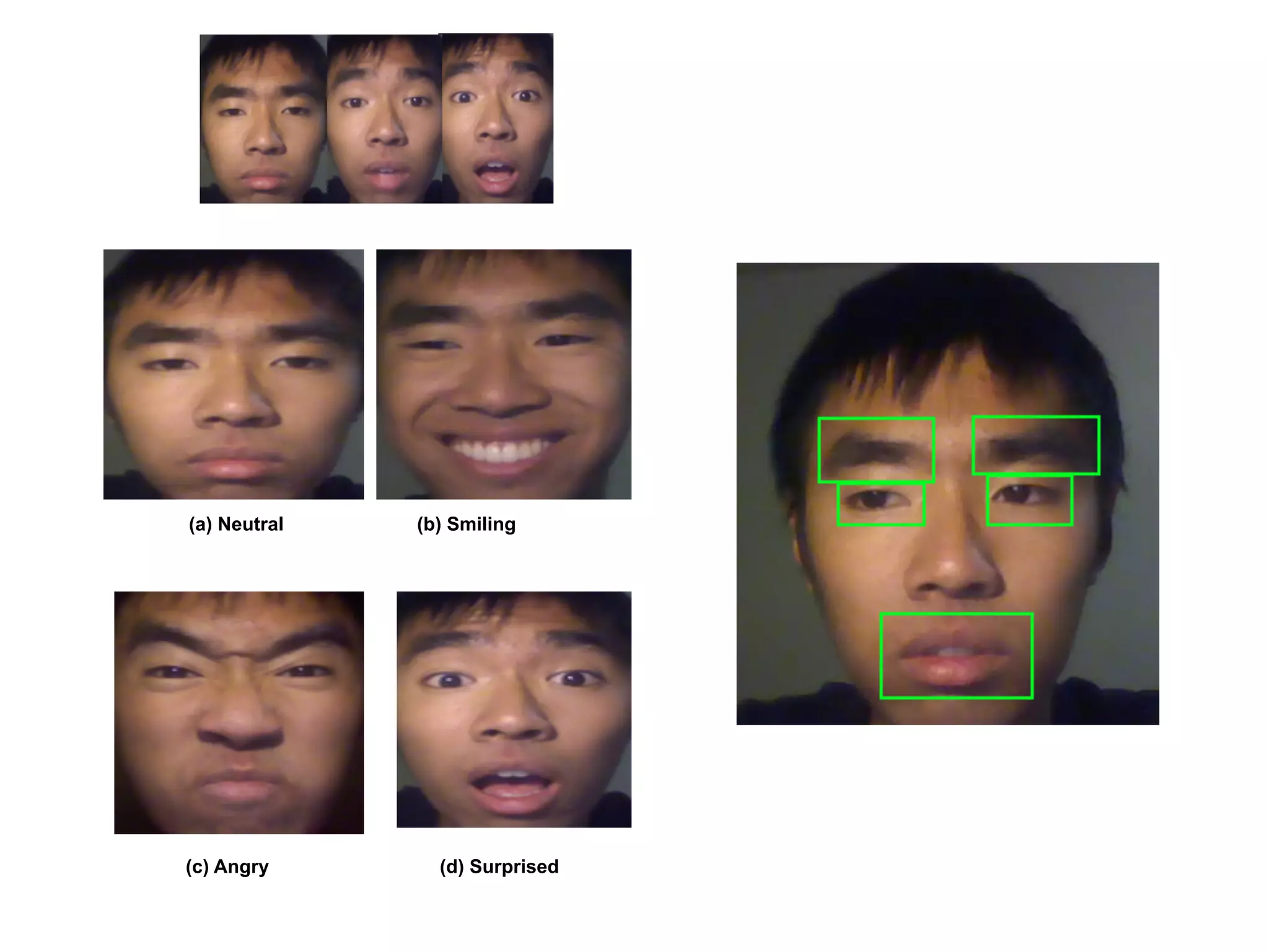

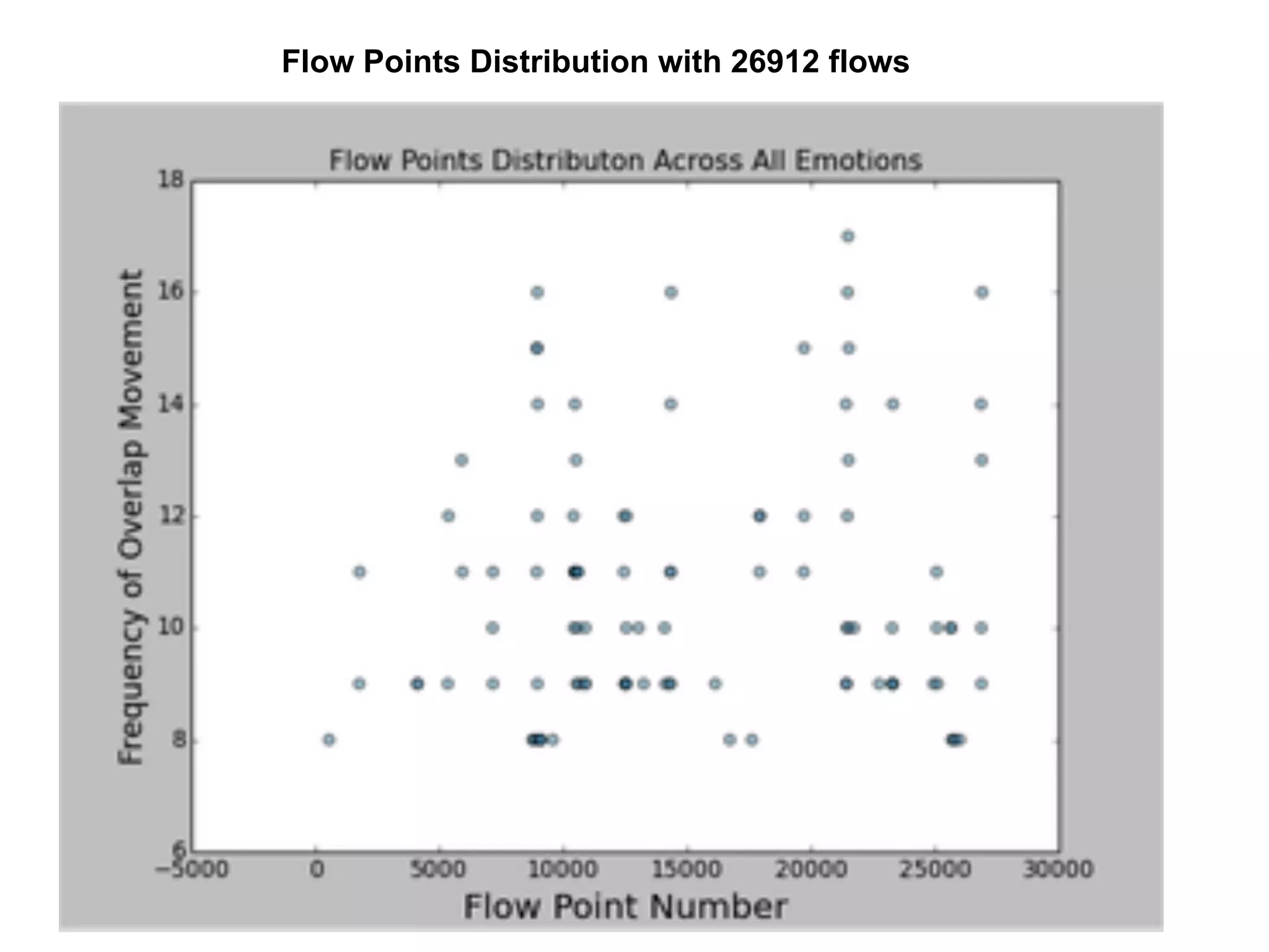

The document proposes an approach to emotion recognition from video based on dense optical flow. Dense optical flow features extracted from labeled training videos are used to train an SVM classifier. At test time, facial movements in unlabeled videos are estimated with optical flow, and the trained classifier assigns an emotion. The approach achieved 82-90% accuracy on a dataset of 372 videos of 6 people expressing 4 emotions. Future work includes combining classifiers that focus on different facial regions and testing robustness to distance and camera angle.
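
The summary does not say how the flow fields are turned into feature vectors for the SVM. One common option, shown here purely as an assumption and not as the authors' method, is a magnitude-weighted histogram of flow orientations, averaged over the frames of a video. The function names, the 8-bin default, and the input layout (rows of `(dx, dy)` pairs per pixel) are all hypothetical choices made for this sketch.

```python
import math


def flow_orientation_histogram(flow, bins=8, min_magnitude=1e-6):
    """Summarise one dense flow field as an orientation histogram.

    `flow` is a list of rows; each row is a list of (dx, dy) displacements,
    one per pixel (assumed layout). Each pixel votes for its direction bin
    with a weight equal to its motion magnitude. The result has `bins`
    entries that sum to 1, or all zeros if nothing moved.
    """
    hist = [0.0] * bins
    for row in flow:
        for dx, dy in row:
            magnitude = math.hypot(dx, dy)
            if magnitude < min_magnitude:
                continue  # ignore static pixels
            # Direction in [0, 2*pi), measured in image coordinates.
            angle = math.atan2(dy, dx) % (2 * math.pi)
            index = min(int(angle / (2 * math.pi) * bins), bins - 1)
            hist[index] += magnitude
    total = sum(hist)
    return [h / total for h in hist] if total > 0 else hist


def video_descriptor(flows, bins=8):
    """Average the per-frame histograms into one fixed-length vector."""
    hists = [flow_orientation_histogram(f, bins) for f in flows]
    if not hists:
        return [0.0] * bins
    return [sum(column) / len(hists) for column in zip(*hists)]


# Toy 2x2 flow field: three moving pixels and one static pixel.
example_flow = [
    [(2.0, 1.0), (0.0, 0.0)],
    [(-1.0, 2.0), (2.0, 1.0)],
]
example_hist = flow_orientation_histogram(example_flow)
example_video = video_descriptor([example_flow, example_flow])
```

Each video then yields one fixed-length vector regardless of its duration, which is the shape of input an SVM expects. In a real pipeline the flow fields would come from a dense optical flow estimator and the vectors would go to an SVM implementation; neither is specified in the summary.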