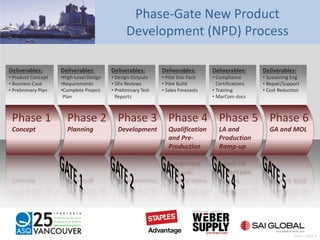

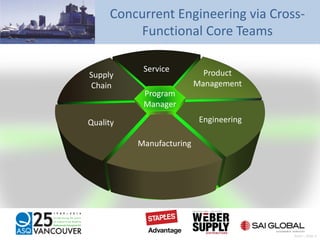

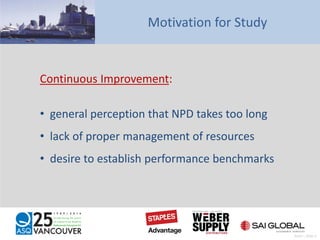

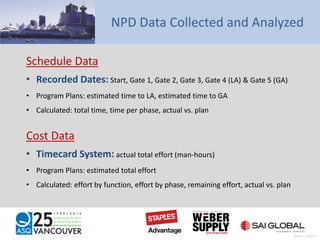

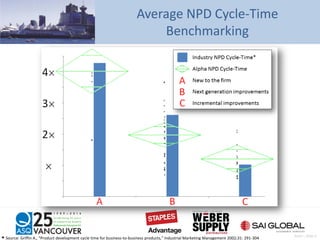

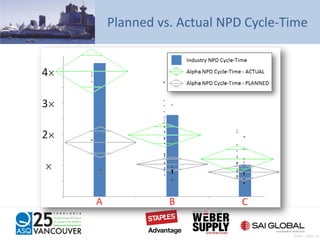

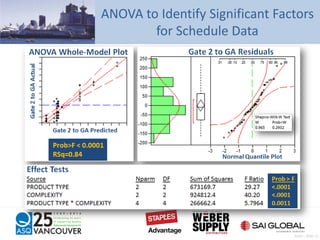

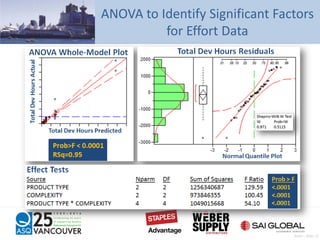

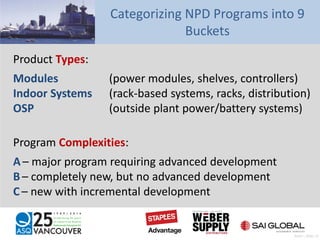

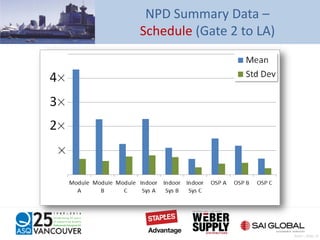

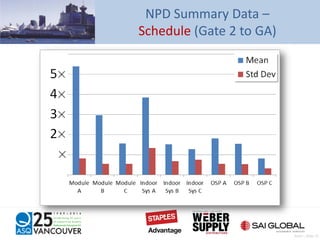

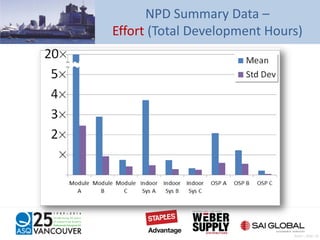

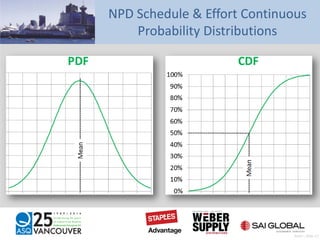

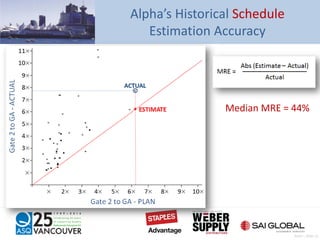

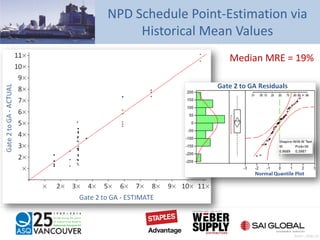

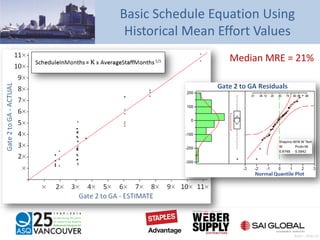

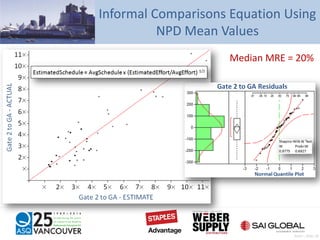

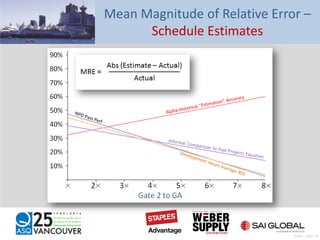

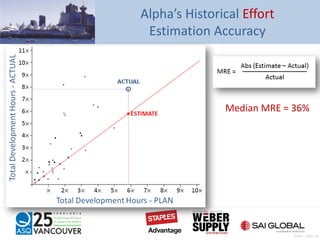

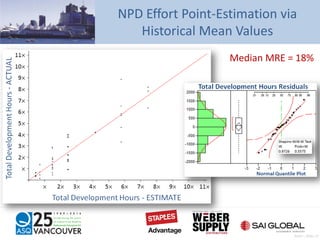

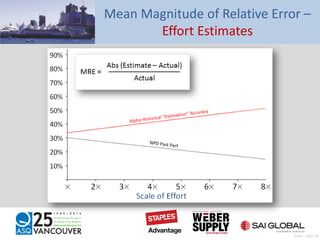

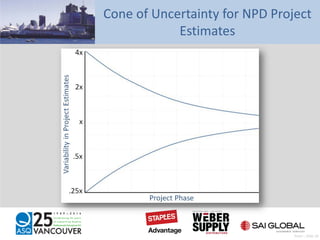

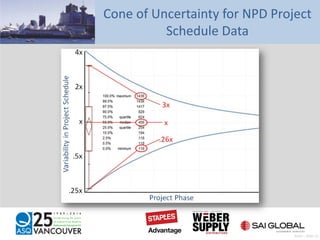

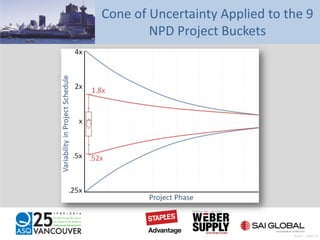

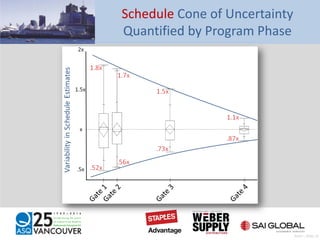

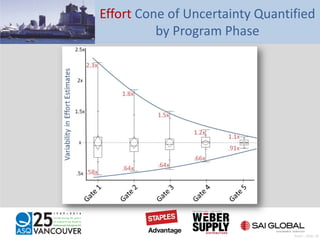

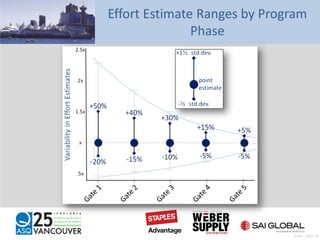

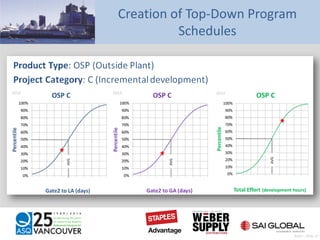

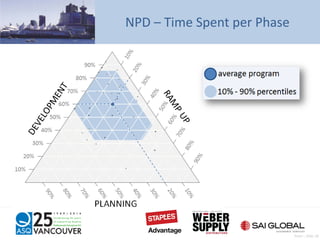

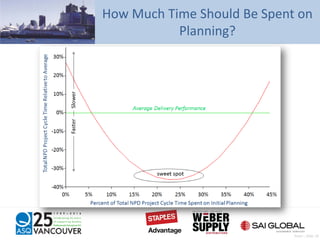

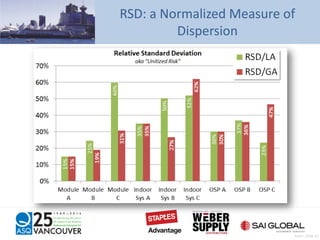

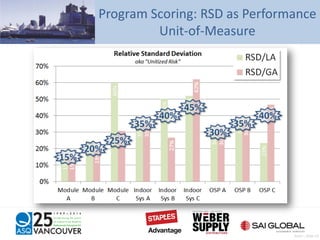

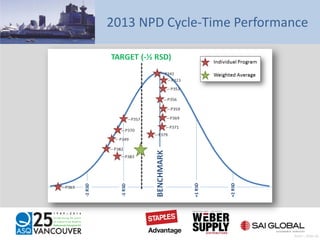

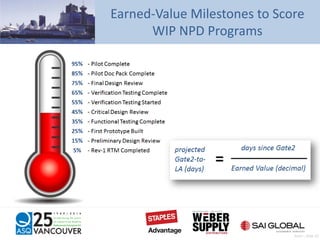

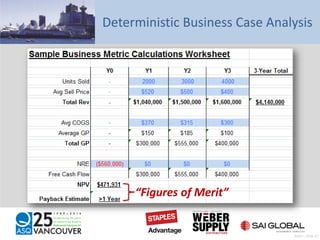

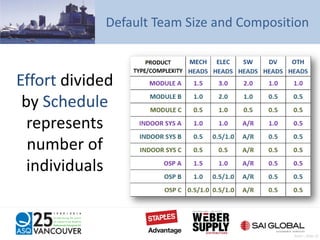

This document analyzes new product development (NPD) cycle time at Alpha Technologies, focusing on performance benchmarks and ways to improve efficiency. It describes the phase-gate NPD process, the data collected, and the tools used, including statistical analysis to identify the factors that affect schedule and effort. The findings point to the need for better estimation practices and resource management to improve NPD cycle time and overall project performance.