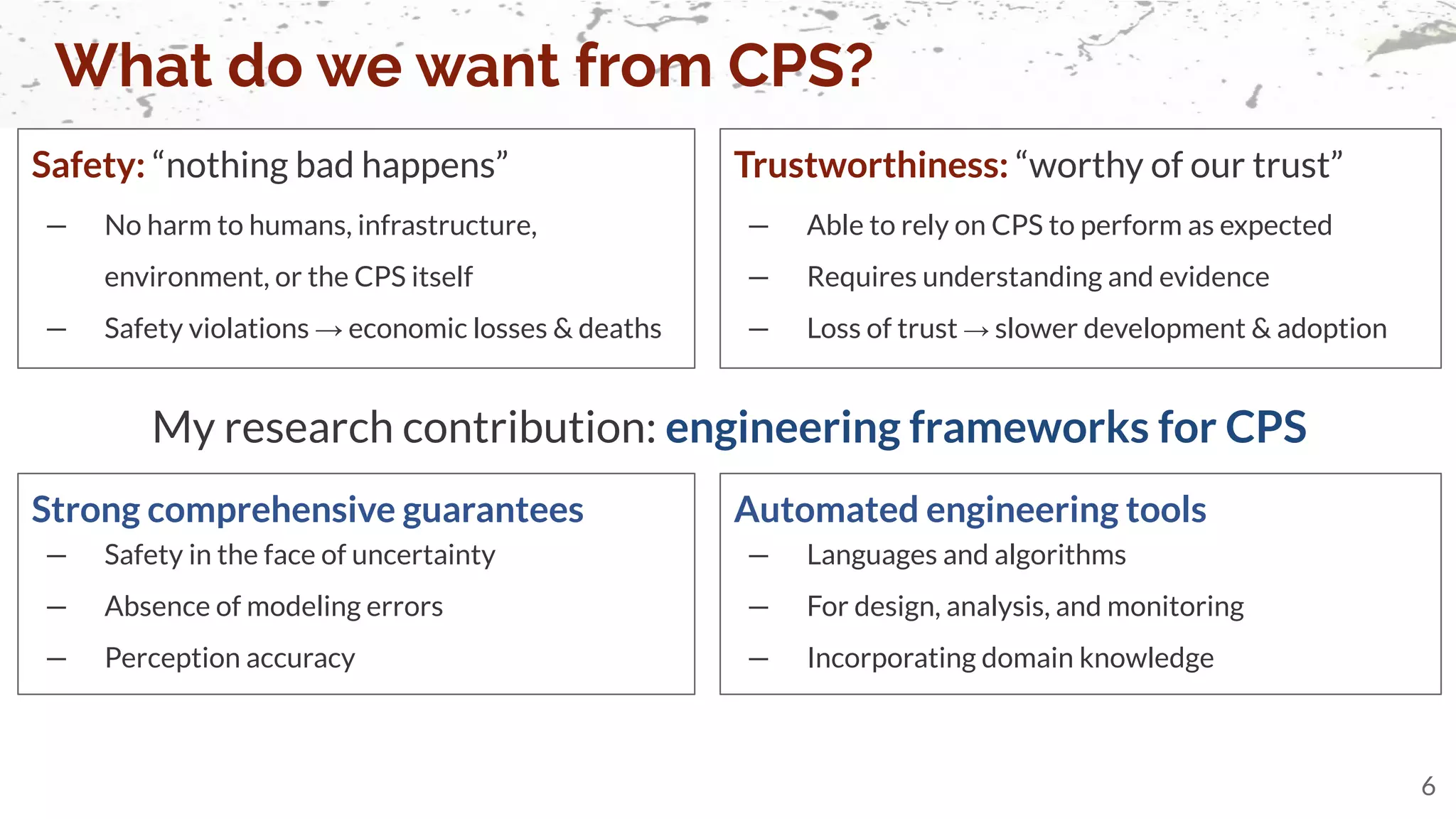

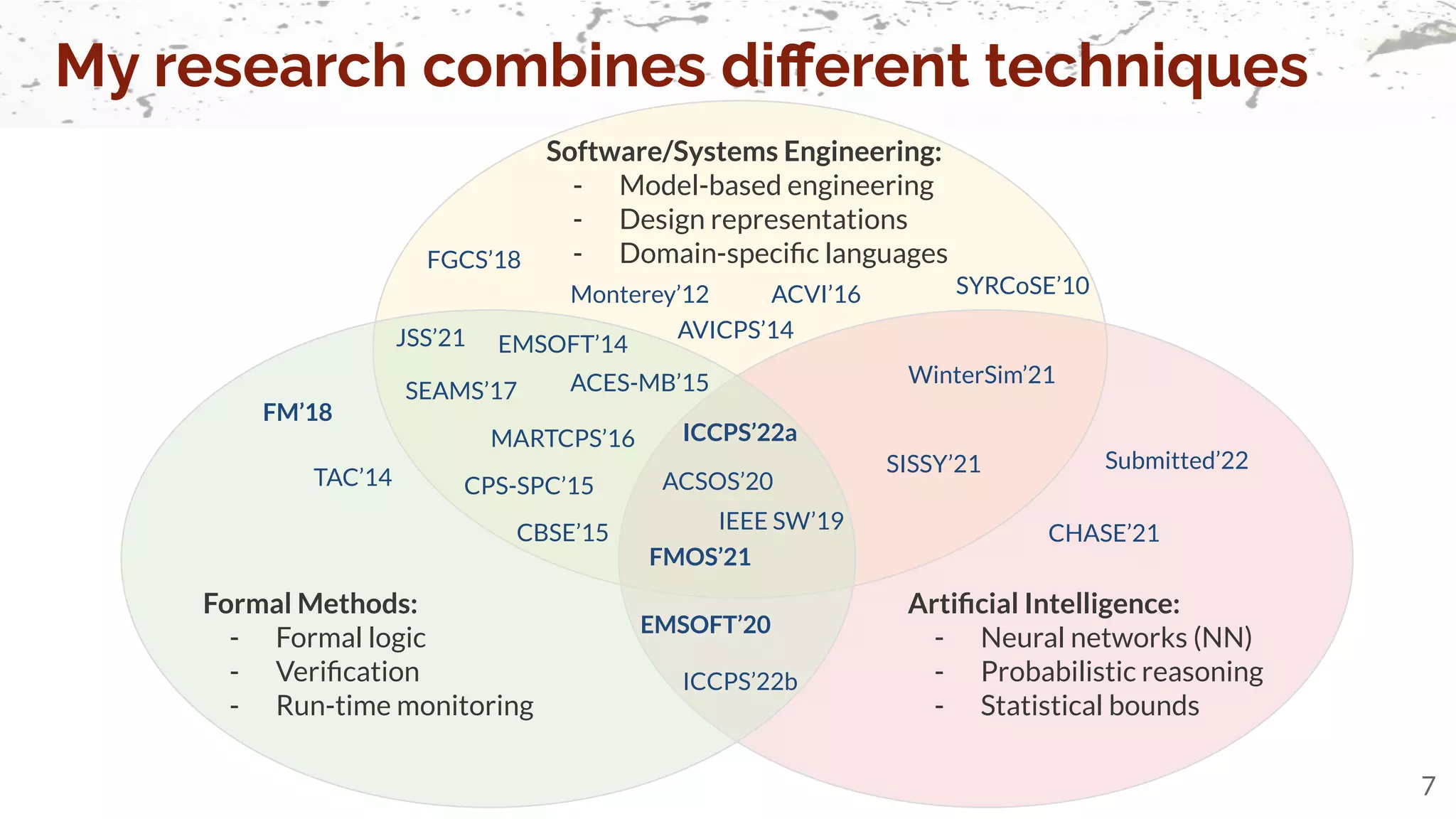

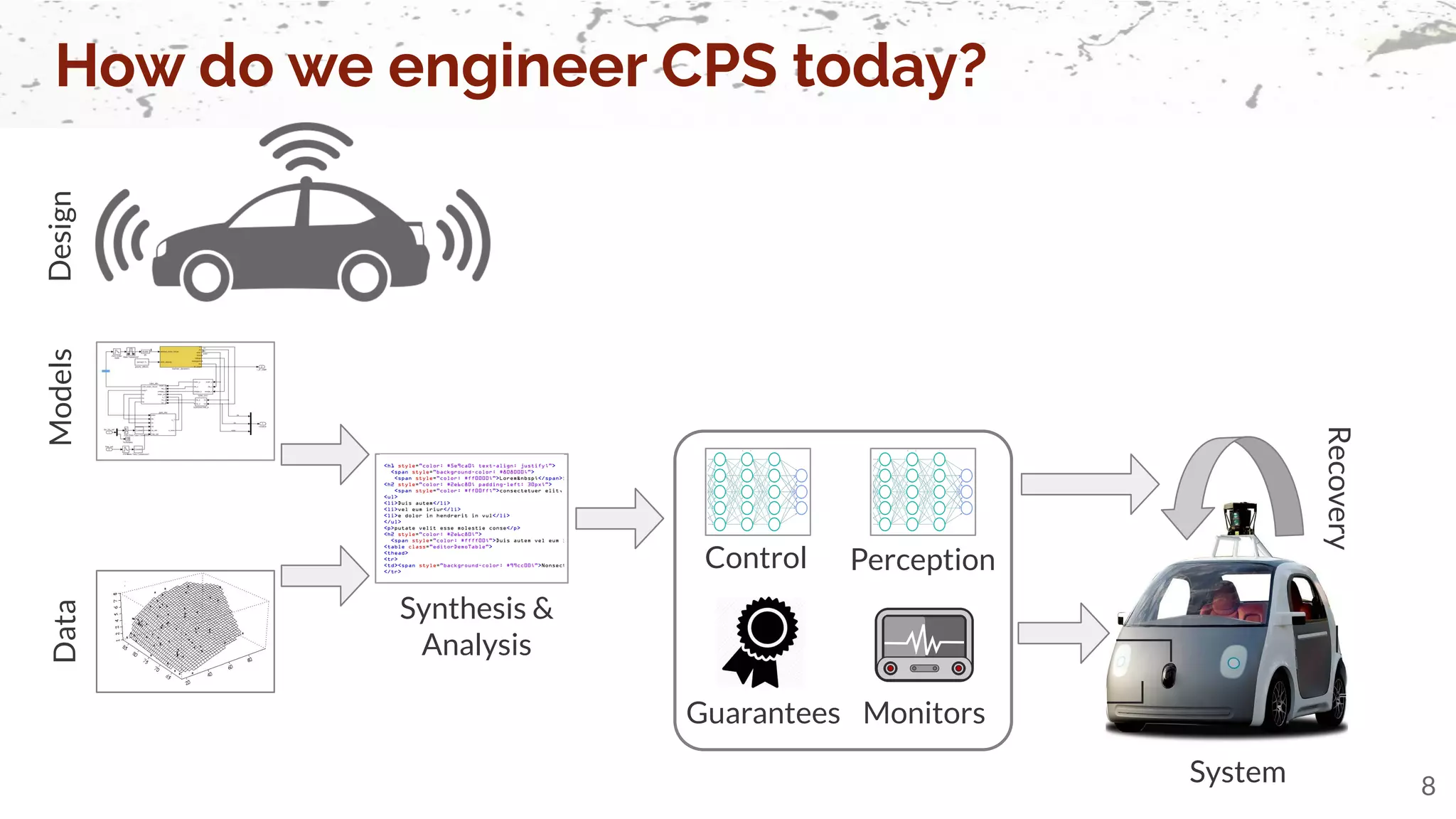

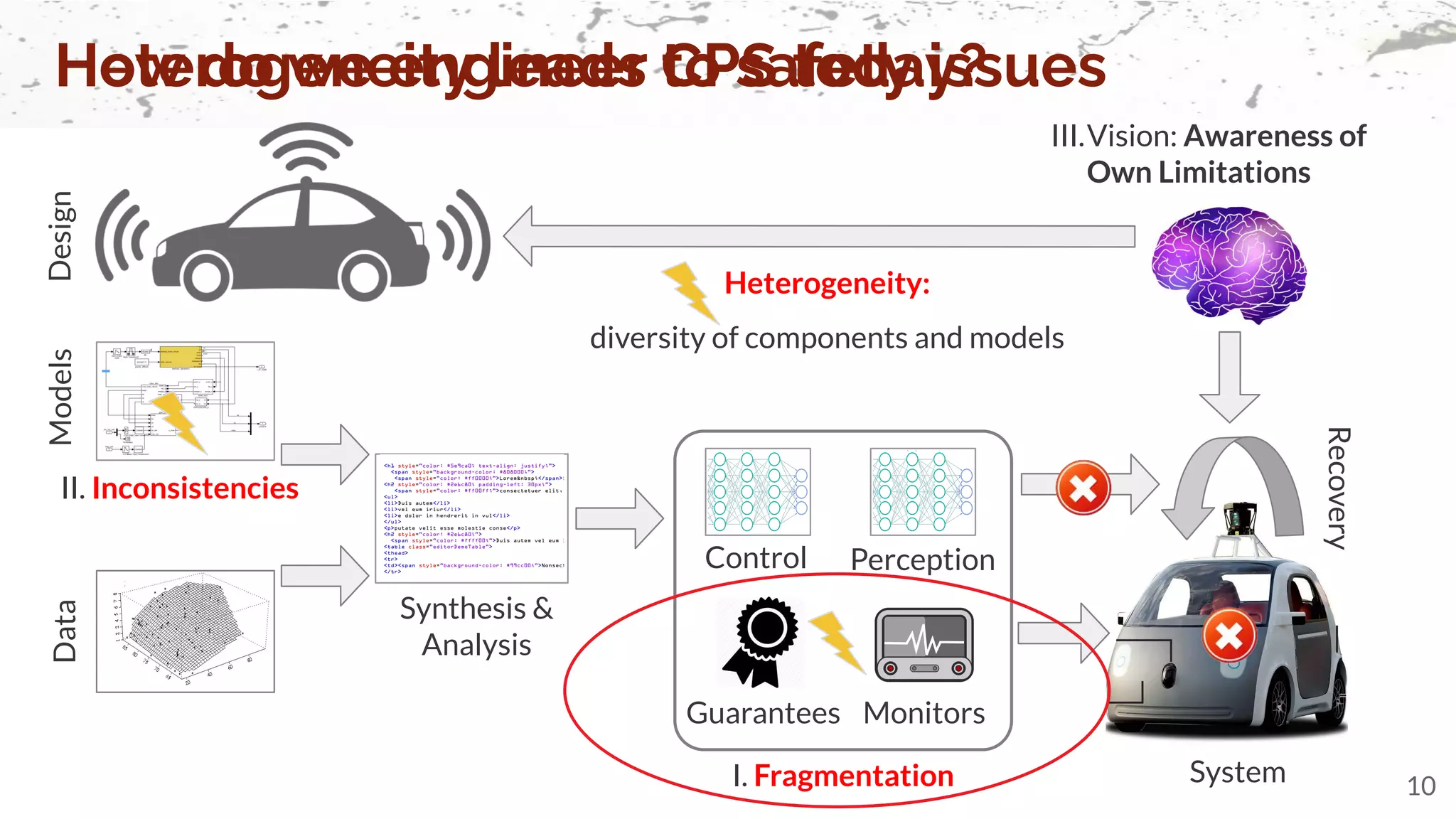

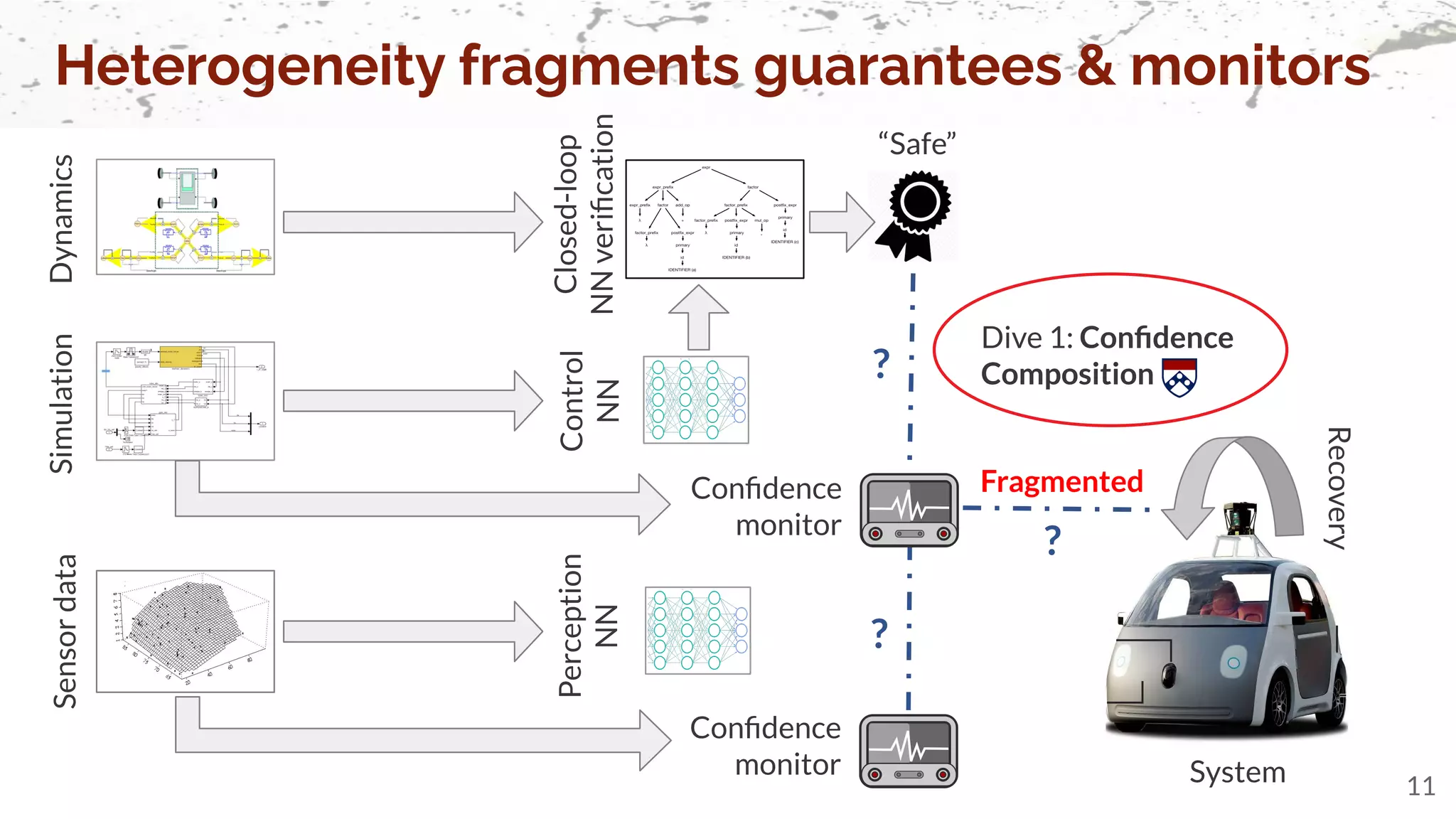

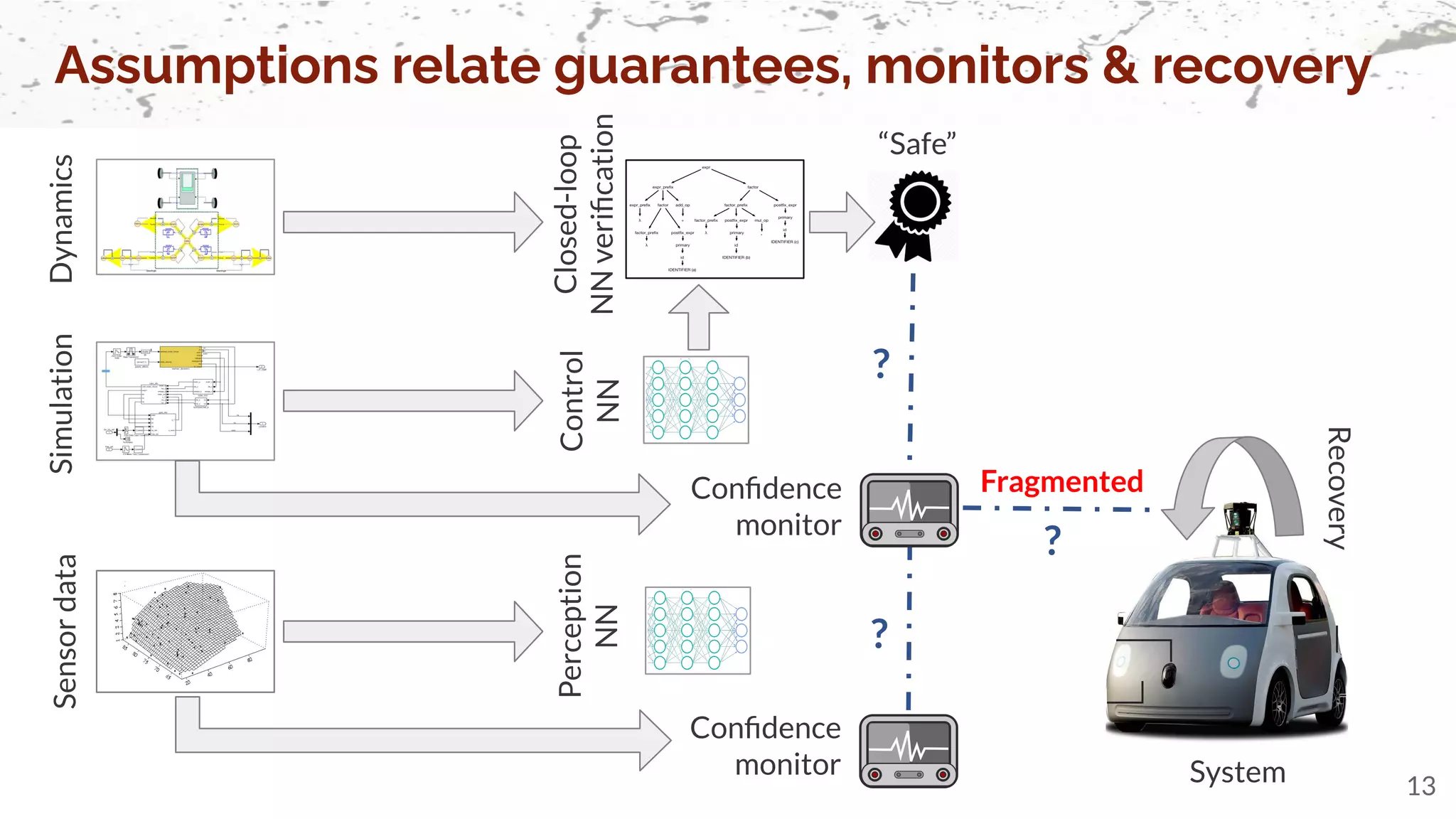

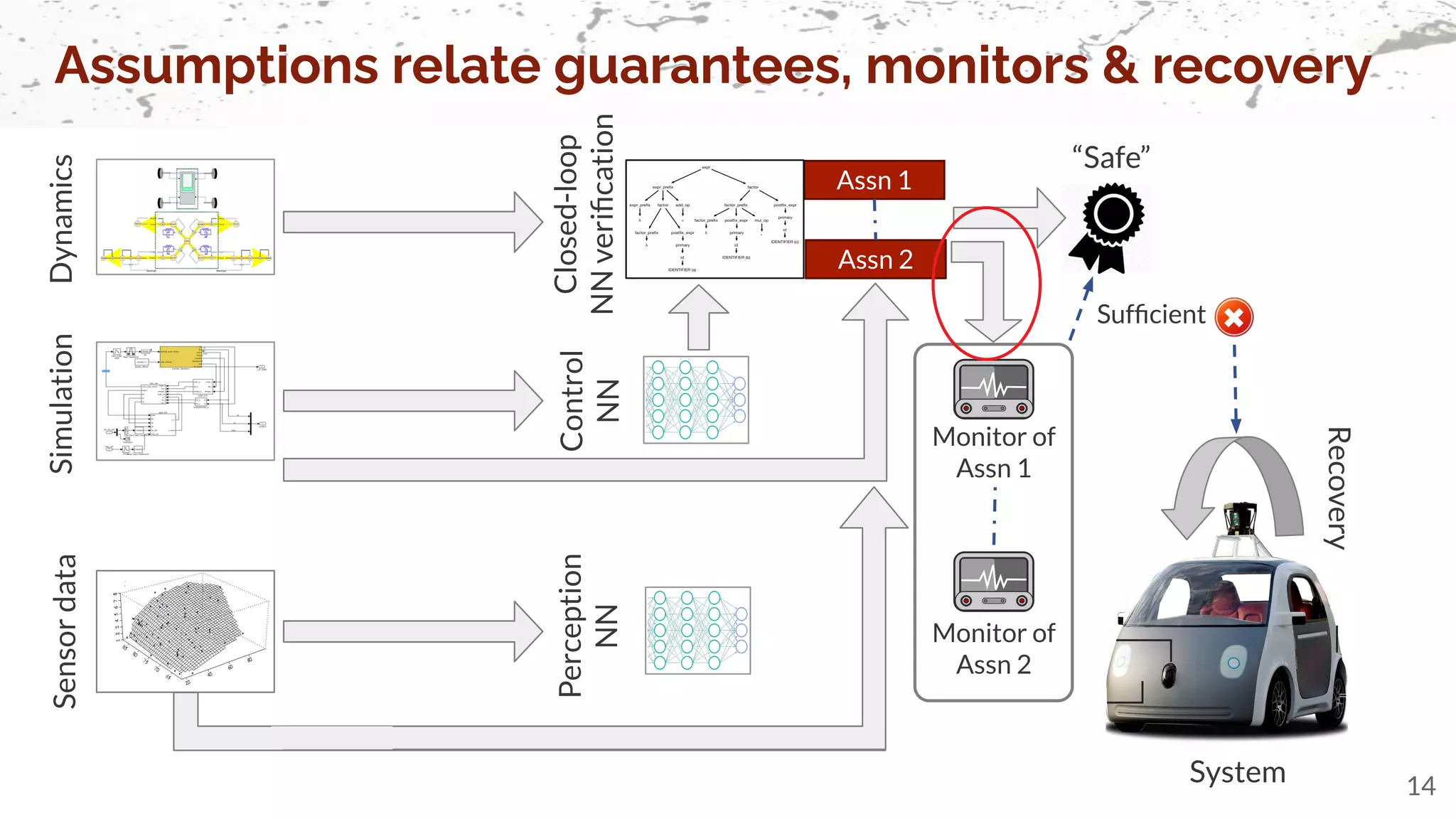

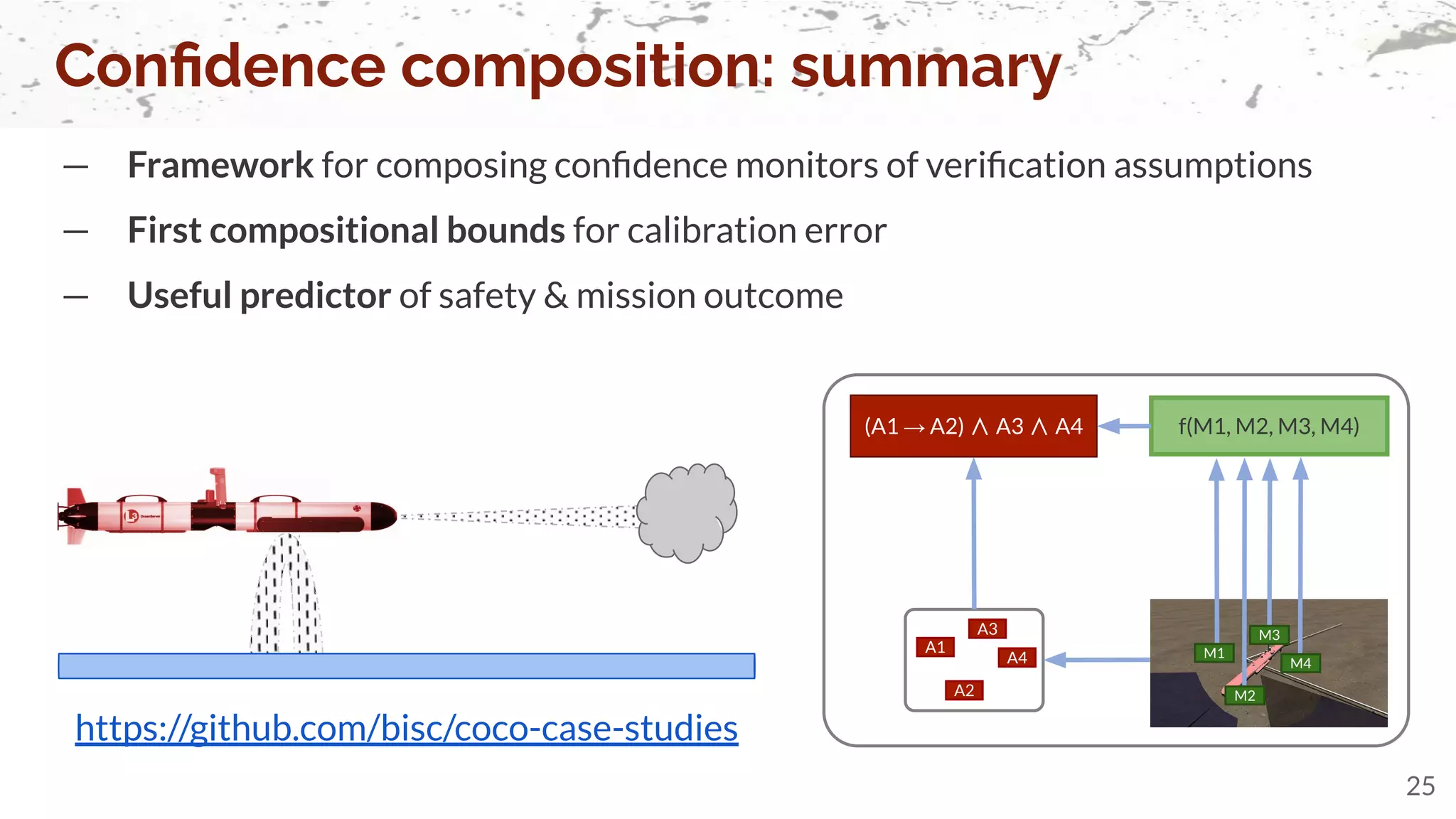

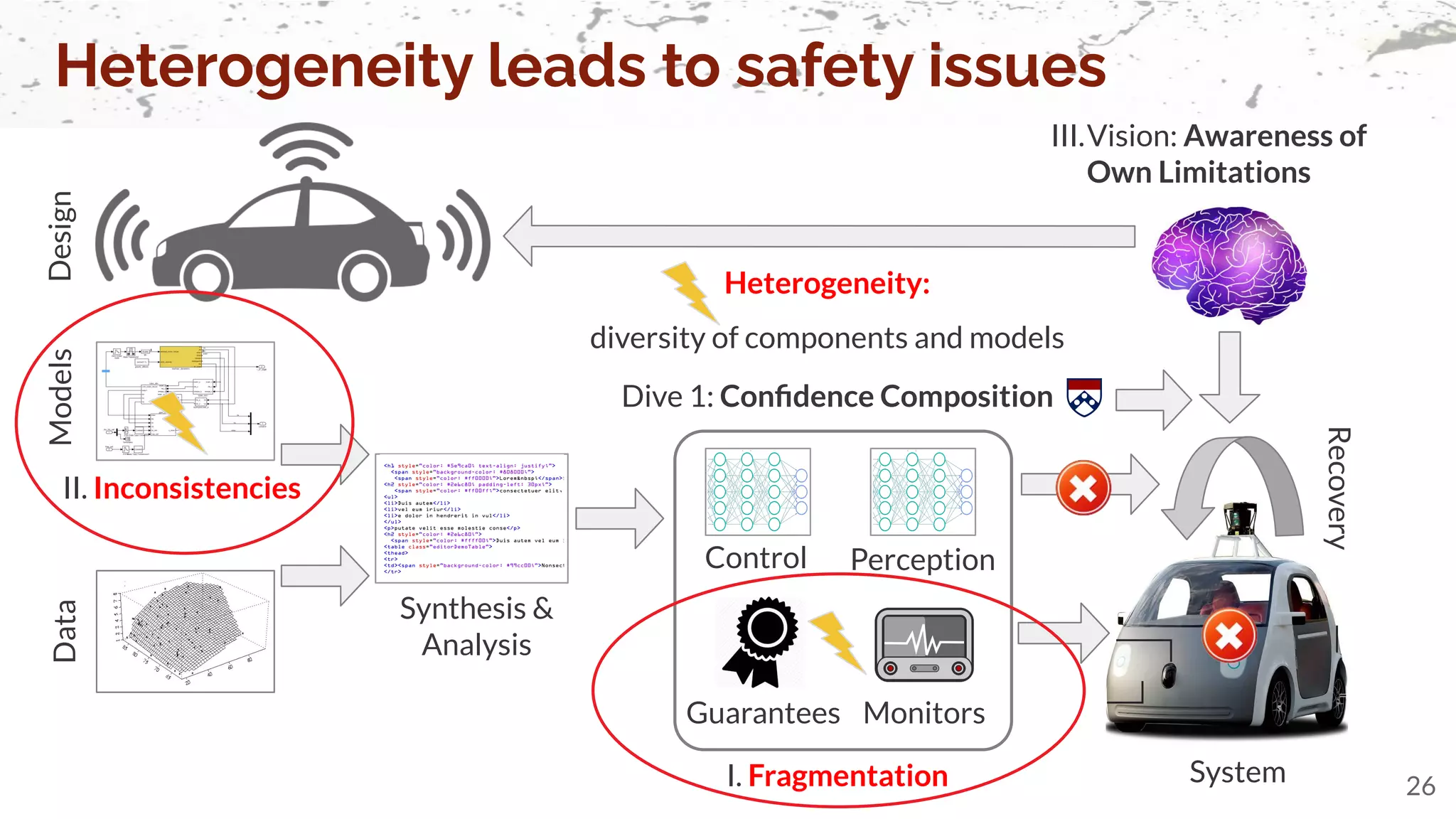

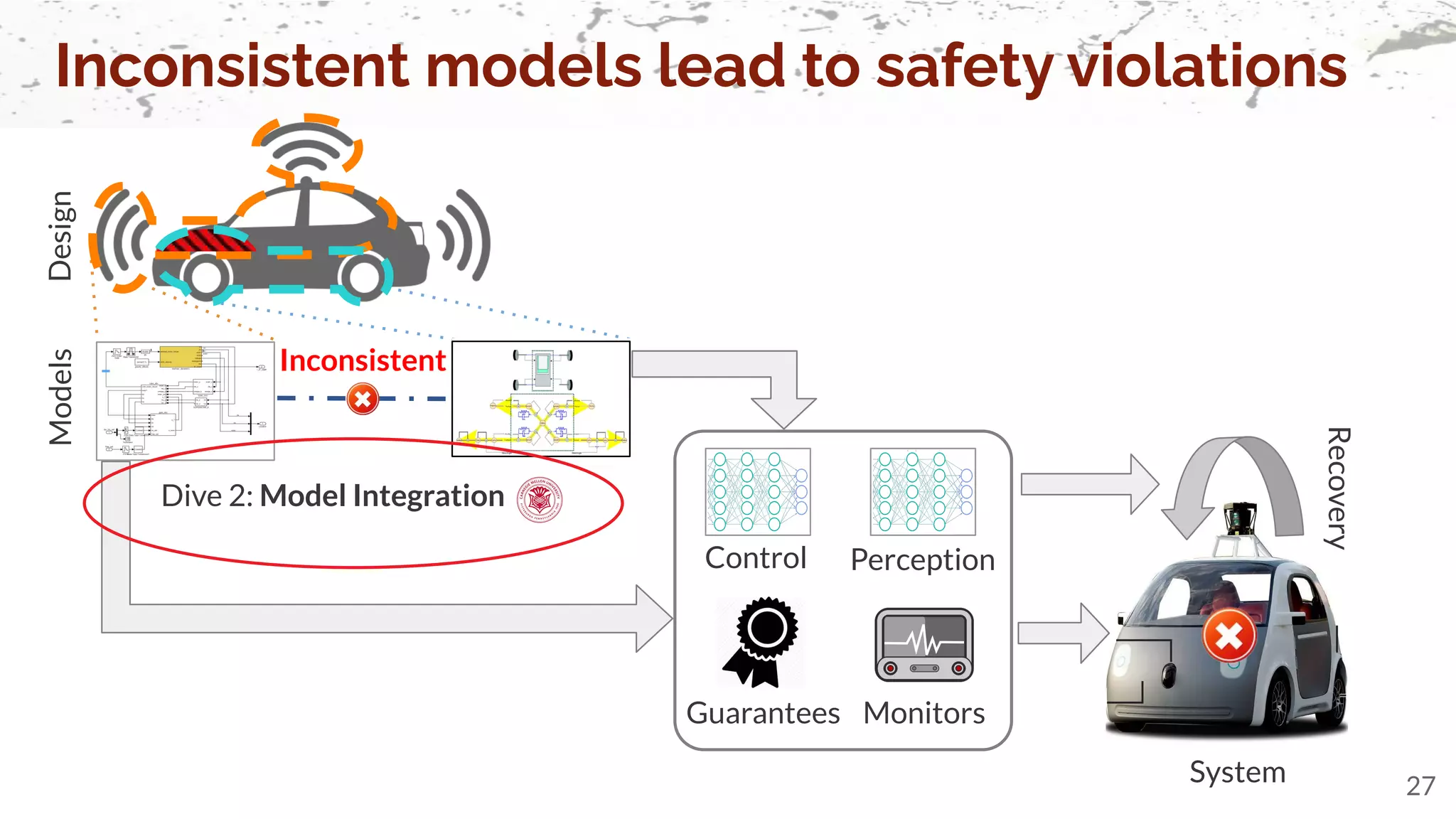

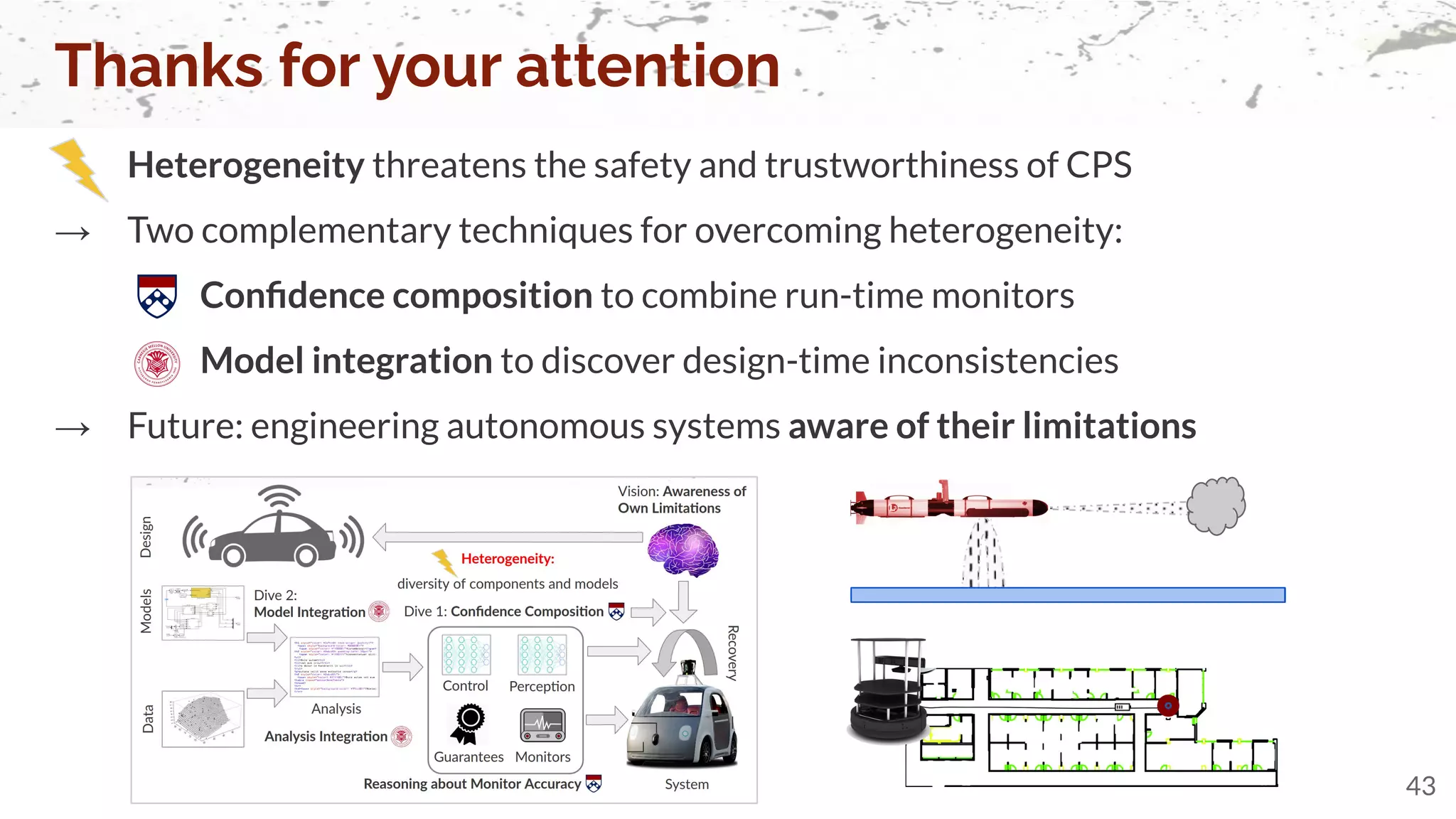

Ivan Ruchkin is a postdoctoral researcher at the University of Pennsylvania specializing in the safety and reliability of cyber-physical systems (CPS). His research focuses on integrating formal methods, machine learning, and software engineering to overcome challenges related to system heterogeneity and uncertainty. Key contributions include frameworks for confidence composition and model integration aimed at improving trustworthiness and safety in autonomous systems.

![What to monitor at run time?

- Correct operation of a component
  ○ E.g., confidence in NN outputs [Guo’17]
  ○ Difficult to connect to system-level safety
- Satisfaction of requirements (including safety)
  ○ E.g., temporal specification monitoring [Deshmukh’17]
  ○ Violations detected too late to recover
- Future violations of requirements
  ○ E.g., Simplex architecture [Sha’01]
  ○ Key idea: assumption monitoring, e.g., of proof premises [Mitsch’14]
](https://image.slidesharecdn.com/genericjobtalkspring2022nobackup-220429172101/75/Overcoming-Heterogeneity-in-Autonomous-Cyber-Physical-Systems-12-2048.jpg)

![How to monitor assumptions?

- Discrete-output monitors: “Yes”, “No”, “Maybe” via sequential detection [Scharf’91, Poor’13]
- Limitations of discrete assumption monitoring:
  ○ Too coarse for highly uncertain assumptions → uninformative
  ○ Errors accumulate combinatorially → decreased performance [EMSOFT’20]
    ▹ M1 ∧ M2 ∧ M3: 10% monitor FPR* → 27% composition FPR
- Key idea: Confidence monitoring instead of discrete monitoring [FMOS’21, ICCPS’22a]
  ○ Confidence C in assumption A is an estimate of Pr(A)
  ○ Expected calibration error (ECE) for confidence C predicting satisfaction of A:
    ▹ ECE(C, A) = E[| Pr(A | C) - C |]

* FPR = false positive rate
](https://image.slidesharecdn.com/genericjobtalkspring2022nobackup-220429172101/75/Overcoming-Heterogeneity-in-Autonomous-Cyber-Physical-Systems-15-2048.jpg)
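The two quantities on this slide can be illustrated with a small sketch. It rests on assumptions the slide does not state: the 27% figure is reproduced by treating the three monitors' false alarms as independent and raising a composed alarm when any monitor alarms, and ECE is estimated with equal-width binning in the style of [Guo’17]. The names `composed_fpr` and `binned_ece` are hypothetical and do not come from the talk.

```python
from typing import Sequence


def composed_fpr(monitor_fprs: Sequence[float]) -> float:
    """False positive rate of a composition that alarms when ANY monitor alarms.

    Assumption (not from the slide): false alarms are statistically independent.
    """
    no_false_alarm = 1.0
    for fpr in monitor_fprs:
        no_false_alarm *= 1.0 - fpr
    return 1.0 - no_false_alarm


def binned_ece(confidences: Sequence[float],
               outcomes: Sequence[bool],
               n_bins: int = 10) -> float:
    """Equal-width binned estimate of ECE(C, A) = E[|Pr(A | C) - C|]."""
    if not confidences or len(confidences) != len(outcomes):
        raise ValueError("need equally many confidences and outcomes, at least one")
    if any(c < 0.0 or c > 1.0 for c in confidences):
        raise ValueError("confidences must lie in [0, 1]")

    bins = [[] for _ in range(n_bins)]
    for c, a in zip(confidences, outcomes):
        index = min(int(c * n_bins), n_bins - 1)  # c == 1.0 goes to the last bin
        bins[index].append((c, a))

    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_confidence = sum(c for c, _ in members) / len(members)
        satisfied_rate = sum(1 for _, a in members if a) / len(members)
        # Weight each bin's calibration gap by the share of samples it holds.
        ece += len(members) / total * abs(satisfied_rate - mean_confidence)
    return ece


if __name__ == "__main__":
    # Three monitors at 10% FPR each: 1 - 0.9**3, about 27%.
    print(f"{composed_fpr([0.1, 0.1, 0.1]):.1%}")
    # Confidence 0.8 on an assumption that always held: calibration gap of 0.2.
    print(round(binned_ece([0.8, 0.8], [True, True]), 3))
```

With dependent monitors the composed FPR can be lower or higher than this independent-case value, so the sketch only explains the slide's arithmetic.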

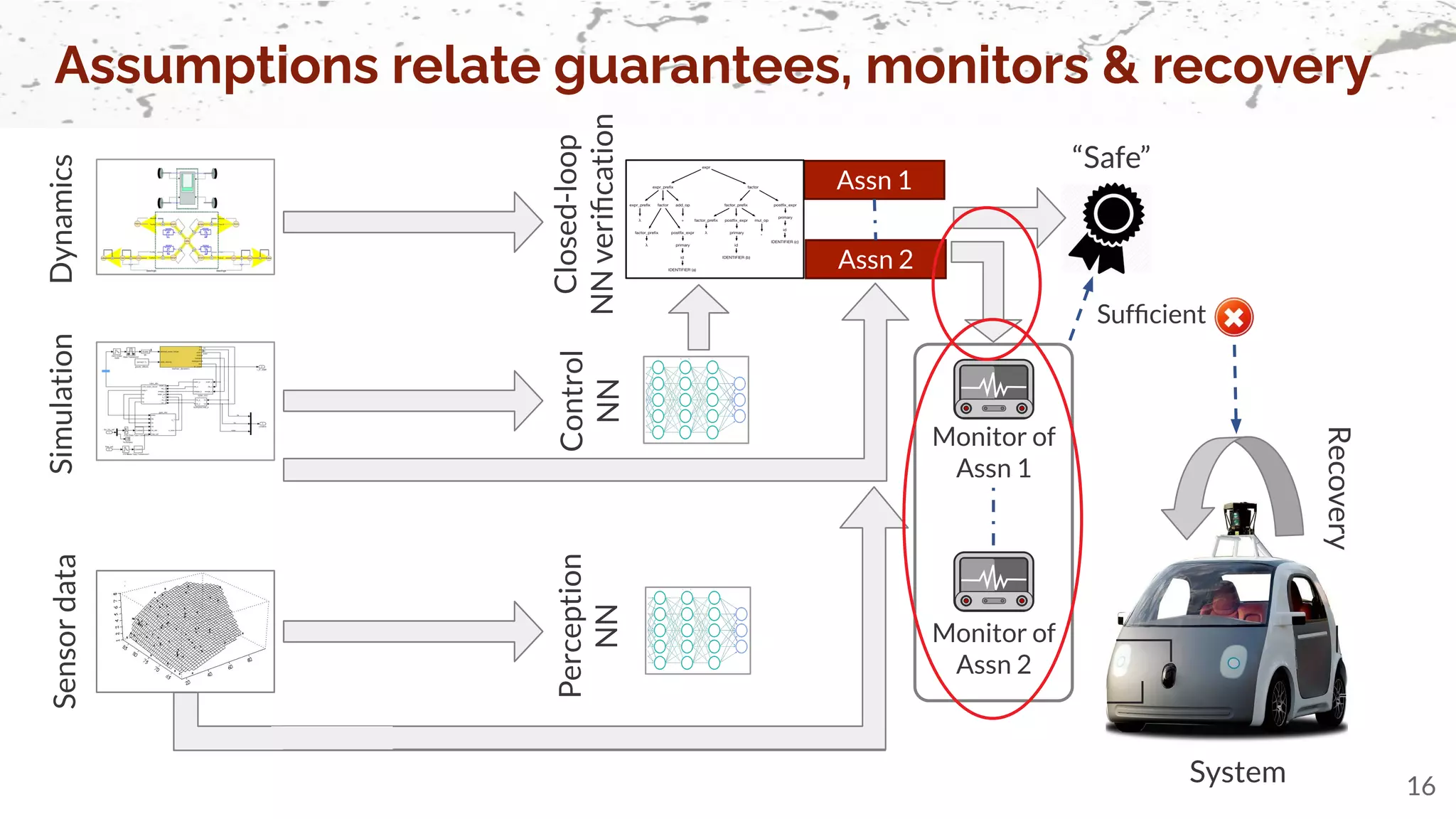

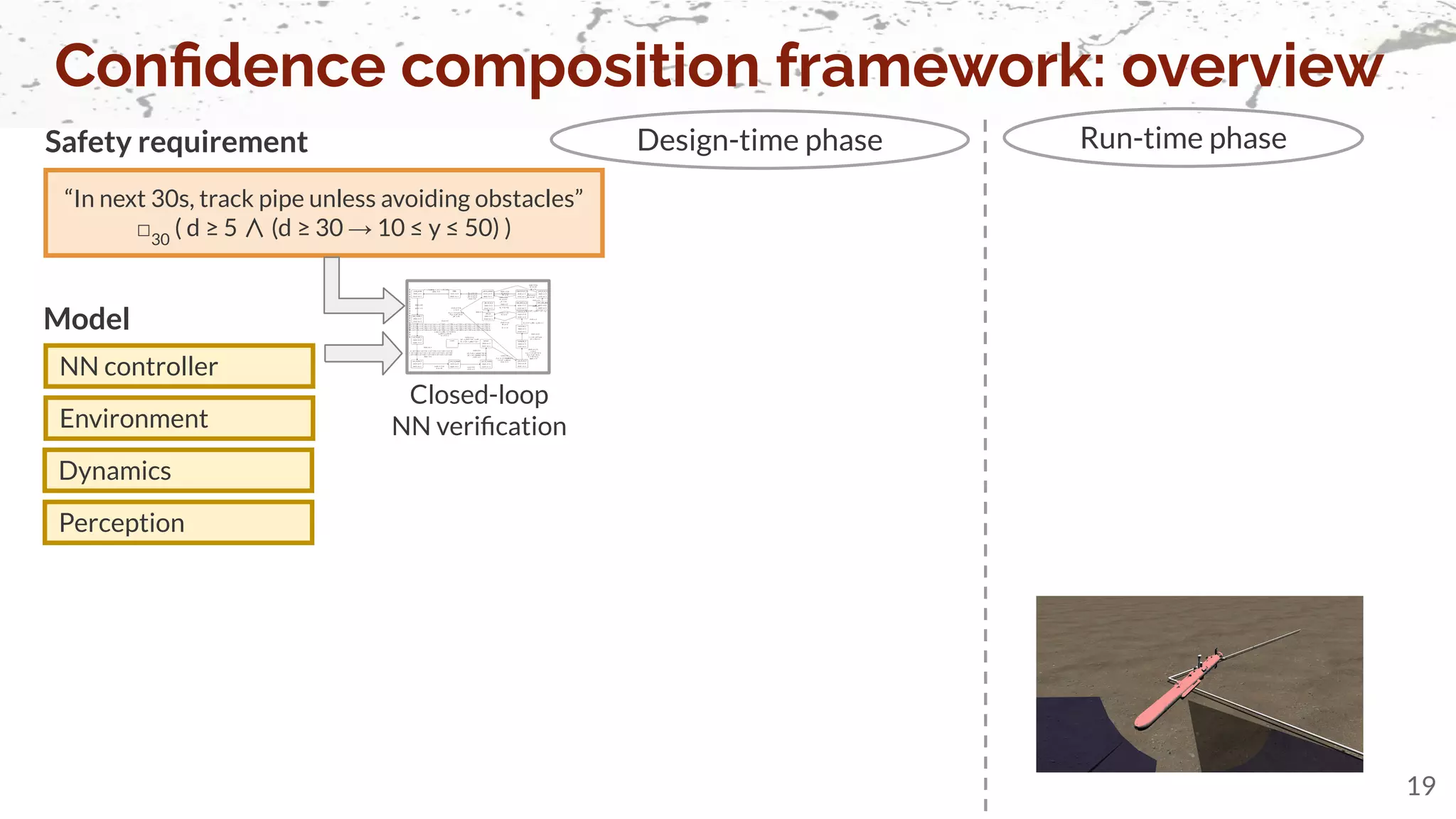

![— Build a comprehensive model

○ Probabilistic graphical models: Bayesian/Markov networks [Pearl’88, Koller’09]

○ Copulas: joint distributions for given marginals[Nelsen’06]

Our goal: combine the confidences into a predictive probability of safety

Existing work:

— Aggregate predictions of the same phenomenon

○ Forecast combination and ensemble learning[Ranjan’10, Sagi’18]

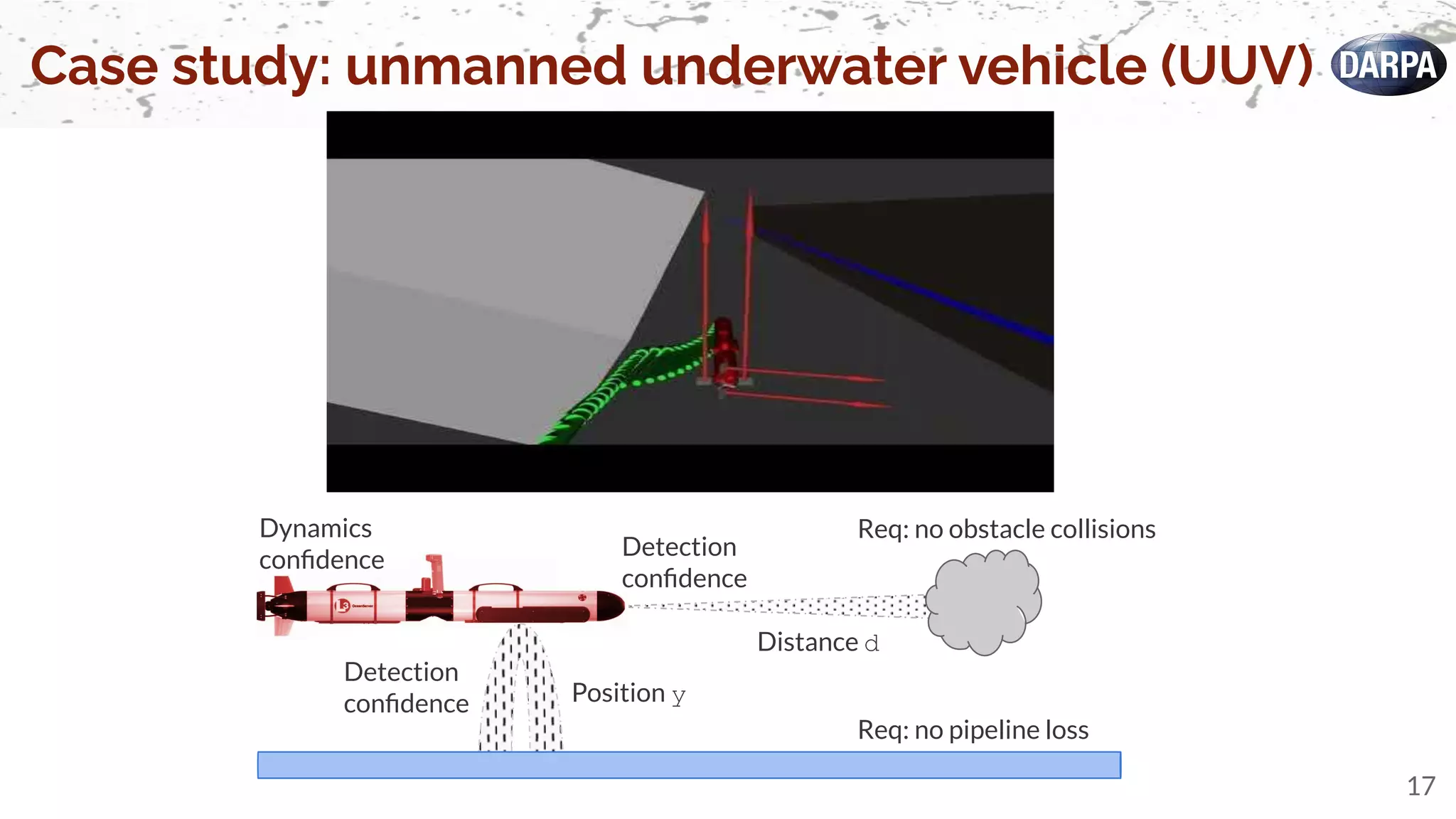

Case study: unmanned underwater vehicle (UUV)

18

Req: no obstacle collisions

Req: no pipeline loss

Detection

confidence

Distance d

Position y

Dynamics

confidence Detection

confidence

→ but we have different assumptions

→ but requires dependencies between confidences](https://image.slidesharecdn.com/genericjobtalkspring2022nobackup-220429172101/75/Overcoming-Heterogeneity-in-Autonomous-Cyber-Physical-Systems-18-2048.jpg)

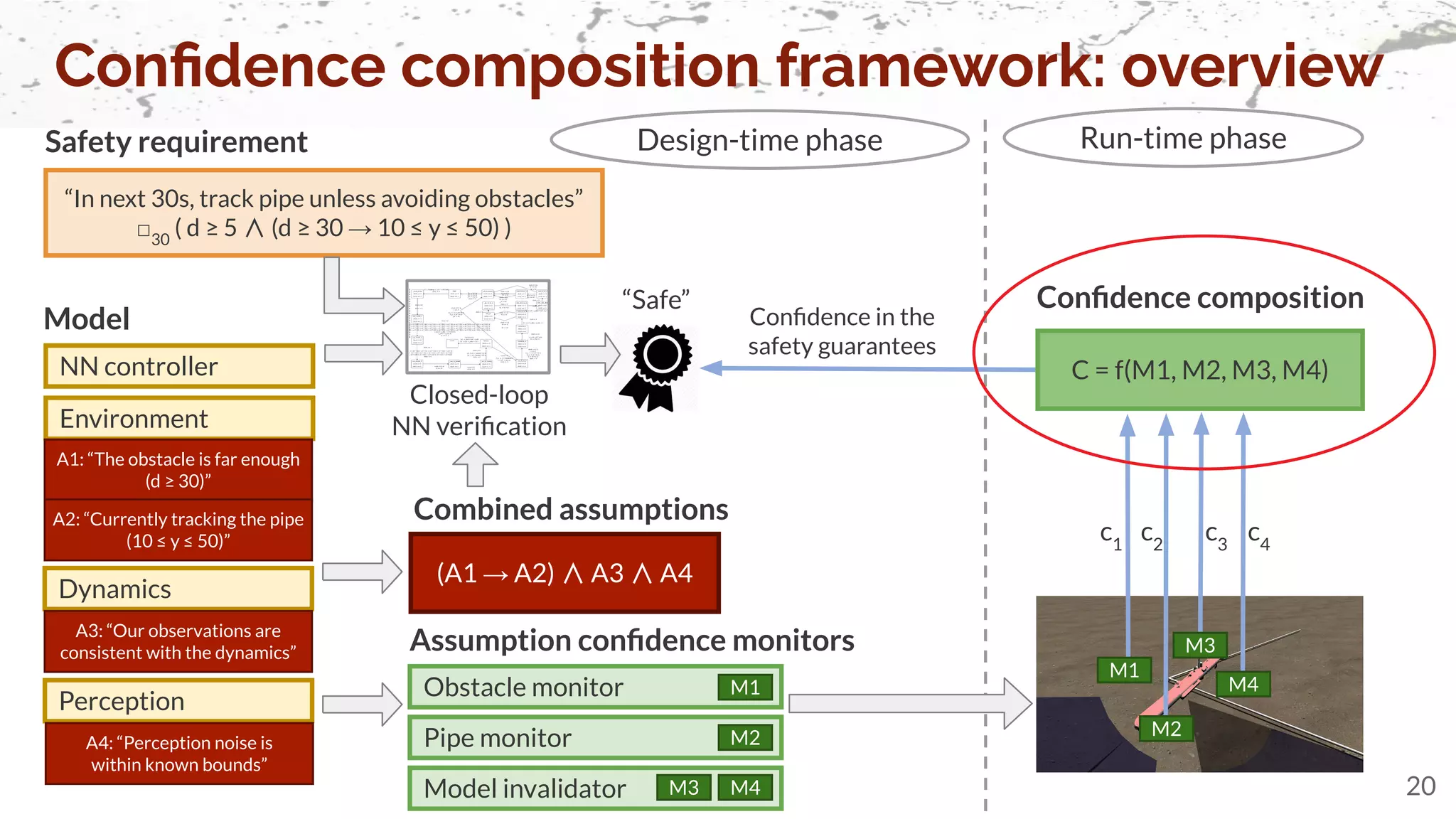

![How to compose confidences?

[Diagram: monitors M1, M2, M3, M4 of assumptions A1, A2, A3, A4]

Combined assumptions: A = (A1 → A2) ∧ A3 ∧ A4

Composed confidence: C = f(M1, M2, M3, M4)

Monitors have
- Unknown dependencies
- Idiosyncratic inaccuracies

Given: calibrated monitors, ECE(M1, A1) ≤ e1, …

Verification

Safety outcome: S ∈ {safe, unsafe}

Goal: calibrate C to S, ECE(C, S) ≤ e

[ICCPS’22a] Theorem: the Goal is achieved if C is calibrated to A: ECE(C, A) ≤ g(e)

[ICCPS’22a] Ruchkin, Cleaveland, Ivanov, Lu, Carpenter, Sokolsky, Lee. Confidence Composition for Monitors of Verification Assumptions. To appear in the Int’l Conf. on Cyber-Physical Systems (ICCPS), May 2022
](https://image.slidesharecdn.com/genericjobtalkspring2022nobackup-220429172101/75/Overcoming-Heterogeneity-in-Autonomous-Cyber-Physical-Systems-21-2048.jpg)
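To make the shape of a composition function f concrete, here is a minimal sketch for the slide's combined assumption A = (A1 → A2) ∧ A3 ∧ A4. It assumes the four assumptions are statistically independent, which the slide explicitly does not grant (it lists unknown dependencies), so this is only a baseline and not the composition proposed in [ICCPS’22a]. The function name `compose_confidence` is hypothetical.

```python
def compose_confidence(m1: float, m2: float, m3: float, m4: float) -> float:
    """Estimate Pr((A1 -> A2) and A3 and A4) from monitor confidences m1..m4.

    Assumption (not from the talk): A1..A4 are statistically independent
    and each m_i is a well-calibrated estimate of Pr(A_i).
    """
    for m in (m1, m2, m3, m4):
        if not 0.0 <= m <= 1.0:
            raise ValueError("confidences must lie in [0, 1]")
    # A1 -> A2 fails only when A1 holds and A2 does not.
    implication = 1.0 - m1 * (1.0 - m2)
    return implication * m3 * m4


if __name__ == "__main__":
    print(compose_confidence(0.5, 0.5, 0.8, 0.9))
```

When the independence assumption fails, the value returned here can be far from Pr(A), which is why the talk asks for calibration bounds on the composed confidence.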

![How to compose confidences?

Pr(safe) = ?

Problem: conjunctive composition of ECEs [ICCPS’22a]

Given: ECE(M1, A1) ≤ e1, ECE(M2, A2) ≤ e2

Find: f, ef s.t. ECE(f(M1, M2), A1 ∧ A2) ≤ ef

Our solution:

ECE(M1 * M2, A1 ∧ A2) ≤ max[4 * e1 * e2, (Var[M1] * Var[M2])^0.5 + e1 + e2 + e1 * e2]

ECE(w1 * M1 + w2 * M2, A1 ∧ A2) ≤ max[e1 + e2 + e1 * e2, max[w1, w2] + e1 + e2 − e1 * e2]
](https://image.slidesharecdn.com/genericjobtalkspring2022nobackup-220429172101/75/Overcoming-Heterogeneity-in-Autonomous-Cyber-Physical-Systems-22-2048.jpg)
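The two bounds stated on this slide are simple enough to evaluate numerically. The sketch below transcribes them as read from the slide text: the product bound max[4·e1·e2, sqrt(Var[M1]·Var[M2]) + e1 + e2 + e1·e2] and the weighted-average bound max[e1 + e2 + e1·e2, max[w1, w2] + e1 + e2 − e1·e2]. The function names and the sample numbers are hypothetical; consult [ICCPS’22a] for the exact theorem conditions.

```python
import math


def product_bound(e1: float, e2: float, var_m1: float, var_m2: float) -> float:
    """Upper bound on ECE(M1 * M2, A1 and A2), transcribed from the slide."""
    return max(4.0 * e1 * e2,
               math.sqrt(var_m1 * var_m2) + e1 + e2 + e1 * e2)


def weighted_average_bound(e1: float, e2: float, w1: float, w2: float) -> float:
    """Upper bound on ECE(w1 * M1 + w2 * M2, A1 and A2), transcribed from the slide."""
    return max(e1 + e2 + e1 * e2,
               max(w1, w2) + e1 + e2 - e1 * e2)


if __name__ == "__main__":
    # Hypothetical inputs: both monitors have ECE at most 0.05.
    print(product_bound(0.05, 0.05, var_m1=0.04, var_m2=0.04))
    print(weighted_average_bound(0.05, 0.05, w1=0.5, w2=0.5))
```

For these inputs the product bound is driven by the variance term, which suggests that low-variance monitors benefit most from product composition under this bound.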

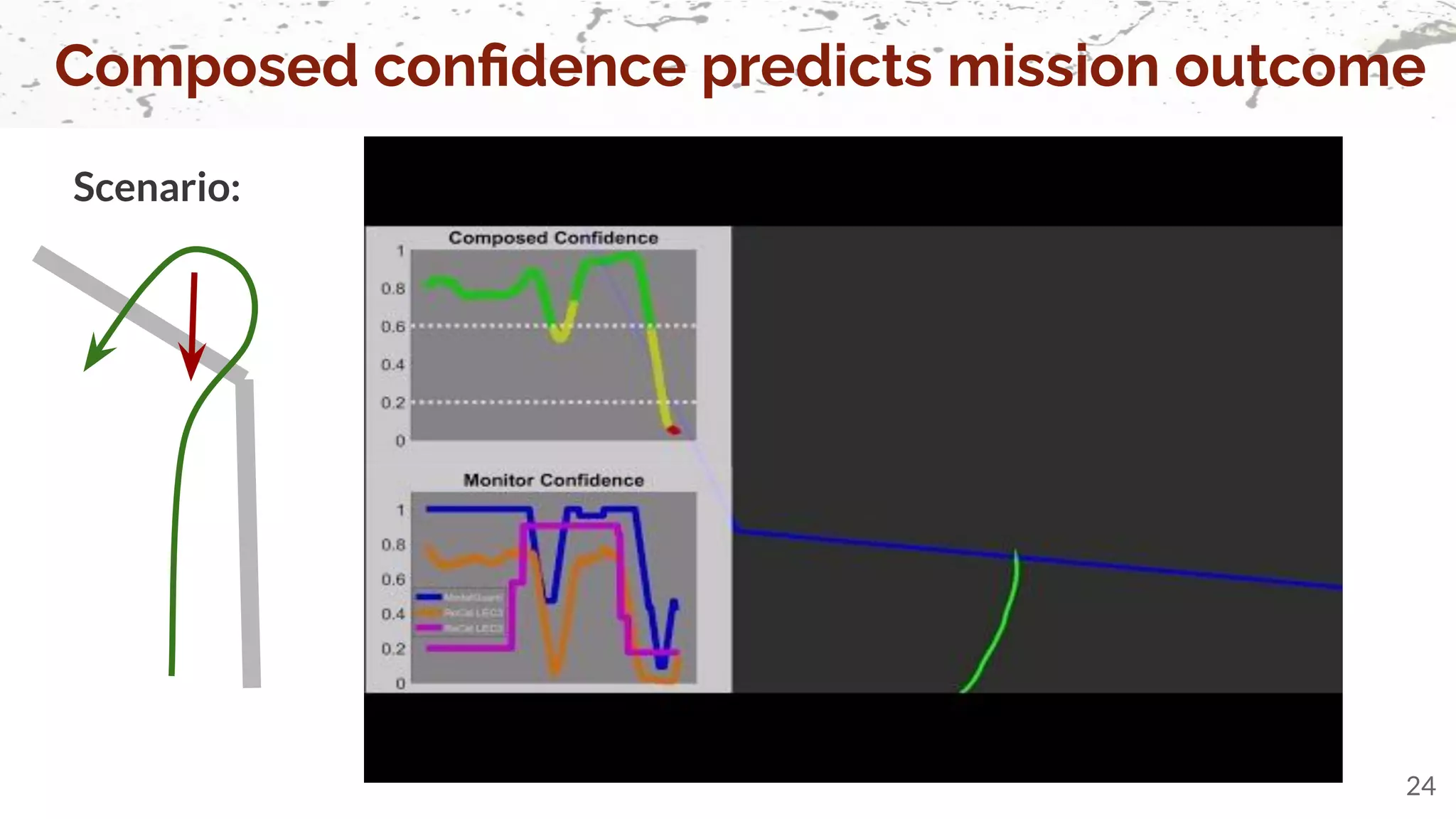

![Confidence composition is useful in practice [ICCPS’22a]

- Case study setup: 194 simulated UUV executions
  ○ Random independent violations of assumptions
- Composed confidence
  ○ Improves calibration to safety over individual monitors
  ○ Predicts mission outcome
](https://image.slidesharecdn.com/genericjobtalkspring2022nobackup-220429172101/75/Overcoming-Heterogeneity-in-Autonomous-Cyber-Physical-Systems-23-2048.jpg)

![Relating model structures and behaviors is hard

[Diagram labels: map & power models; planning model; tasks & their energies; mission plans; mode, speed, power; the relation between the models is marked “?”]

- Structural integration → inexpressive [Sztipanovits’14, Marinescu’16]
- Logic combinations → intractable [Gabbay’96, Barbosa’16]
- Behavioral integration → impractical [Girard’11, Rajhans’13]
- Hybrid simulation → no guarantees [Lee’14, Combemale’14]
](https://image.slidesharecdn.com/genericjobtalkspring2022nobackup-220429172101/75/Overcoming-Heterogeneity-in-Autonomous-Cyber-Physical-Systems-31-2048.jpg)

![Integration properties co-constrain models

Integration Property Language (IPL) [FM’18]

Integration property: “For any valid sequence of tasks on a map, its total energy matches the energy in the corresponding mission plan.”

- Objects, types, attributes, relations → first-order logic
- Sets of traces, past/future/chance → modal logic

[Diagram labels: map & power models; planning model; tasks & their energies; mission plans; mode, speed, power]

[FM’18] Ruchkin, Sunshine, Iraci, Schmerl, Garlan. IPL: An Integration Property Language for Multi-Model Cyber-Physical Systems. In the Int’l Symp. on Formal Methods (FM), 2018
](https://image.slidesharecdn.com/genericjobtalkspring2022nobackup-220429172101/75/Overcoming-Heterogeneity-in-Autonomous-Cyber-Physical-Systems-32-2048.jpg)

![Integration properties can be verified [FM’18]

Integration property: “For any valid sequence of tasks on a map, its total energy matches the energy in the corresponding mission plan.”

Verification algorithm: SMT solver*, model checker

Soundness theorem: Verification returns an answer → it is correct

Termination theorem: Verification always terminates on finite structures

Implementation: https://github.com/bisc/IPL

* SMT = Satisfiability Modulo Theories

[Diagram labels: map & power models; planning model; tasks & their energies; mission plans; mode, speed, power]
](https://image.slidesharecdn.com/genericjobtalkspring2022nobackup-220429172101/75/Overcoming-Heterogeneity-in-Autonomous-Cyber-Physical-Systems-33-2048.jpg)
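To illustrate what the quoted integration property asks for, the toy sketch below checks it by brute force over small, finite, made-up data. It is not IPL syntax and does not reproduce the SMT-plus-model-checking algorithm from [FM’18]. The task names, energy values, and the function `find_energy_inconsistencies` are all hypothetical.

```python
from typing import Dict, List, Tuple

Plan = Tuple[str, ...]

# Hypothetical "map & power model": energy cost of each task.
TASK_ENERGY: Dict[str, float] = {
    "move_a_b": 5.0,
    "move_b_c": 7.5,
    "rotate": 1.0,
}

# Hypothetical "planning model": energy each mission plan expects to spend.
MISSION_PLANS: Dict[Plan, float] = {
    ("move_a_b", "rotate", "move_b_c"): 13.5,
    ("rotate", "move_a_b"): 6.0,
}


def find_energy_inconsistencies(task_energy: Dict[str, float],
                                mission_plans: Dict[Plan, float],
                                tolerance: float = 1e-9
                                ) -> List[Tuple[Plan, float, float]]:
    """Return (plan, summed task energy, planned energy) for every mismatch."""
    inconsistencies = []
    for tasks, planned_energy in mission_plans.items():
        total = sum(task_energy[task] for task in tasks)
        if abs(total - planned_energy) > tolerance:
            inconsistencies.append((tasks, total, planned_energy))
    return inconsistencies


if __name__ == "__main__":
    print(find_energy_inconsistencies(TASK_ENERGY, MISSION_PLANS))
```

Enumeration like this only works because the structures are finite and tiny, which echoes the finite-structure condition in the slide's termination theorem without reproducing it.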

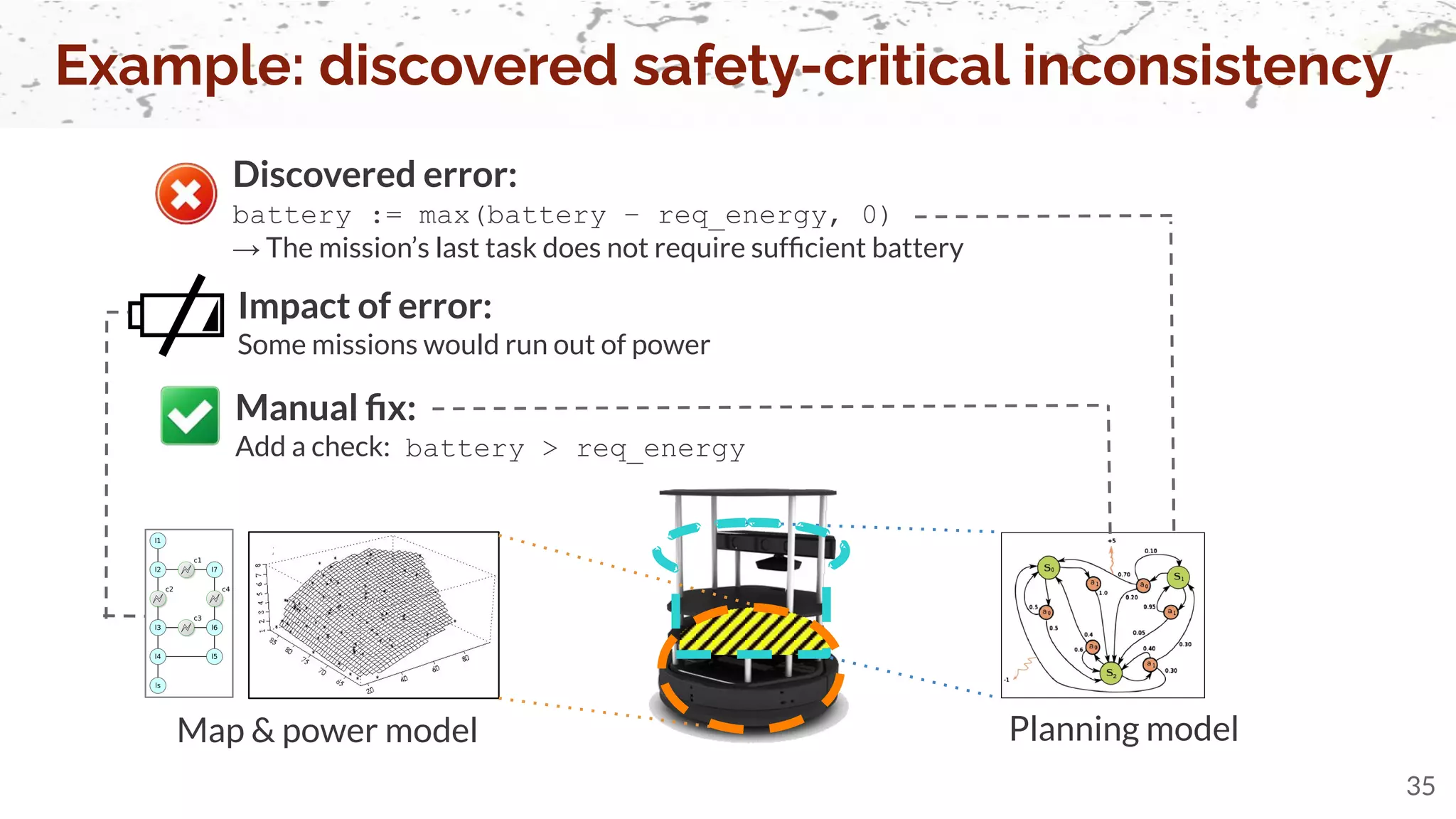

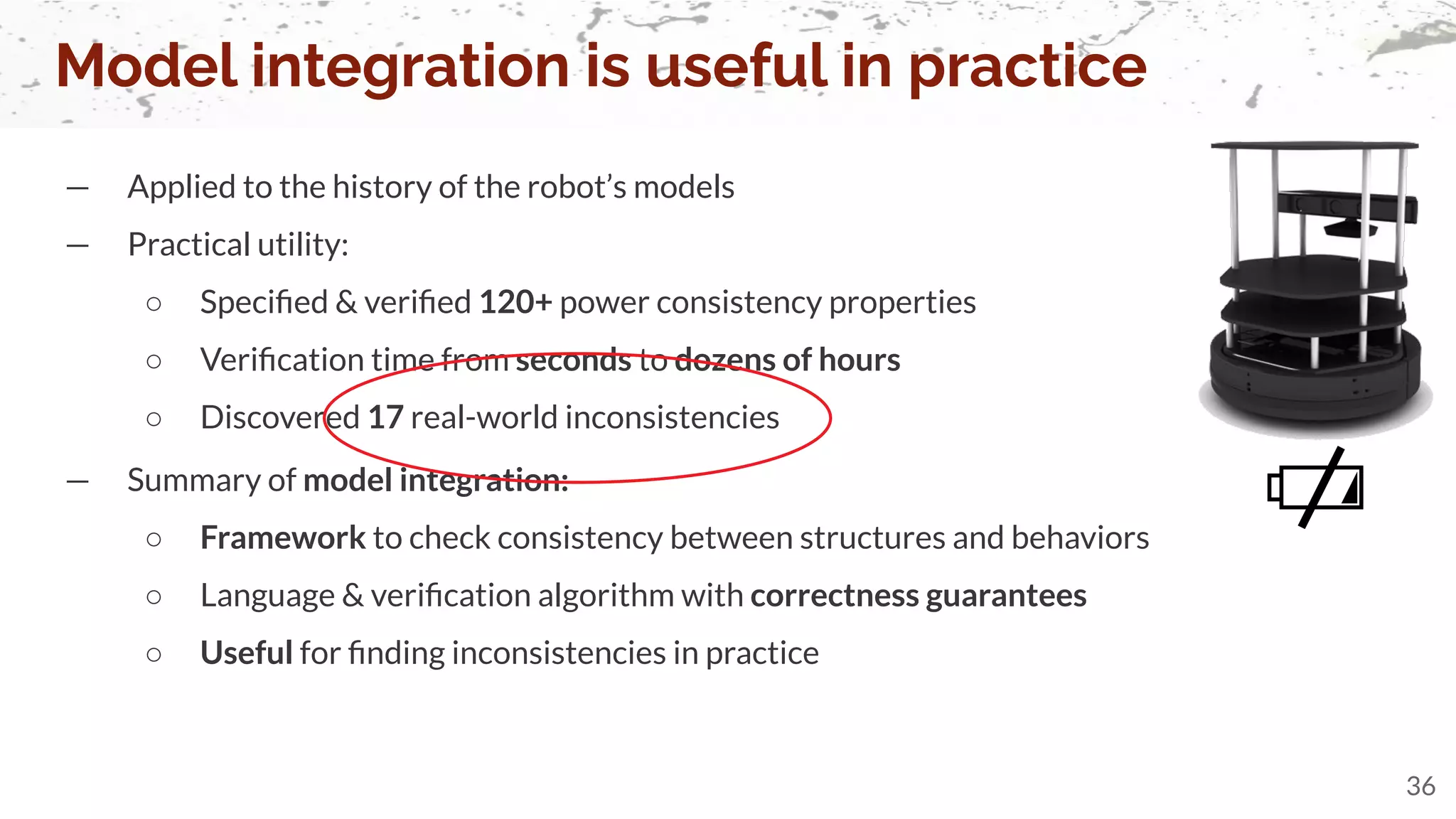

![Model integration is useful in practice [FM’18]

- Applied to the history of the robot’s models
- Practical utility:
  ○ Specified & verified 120+ power consistency properties
  ○ Verification time from seconds to dozens of hours
  ○ Discovered 17 real-world inconsistencies
](https://image.slidesharecdn.com/genericjobtalkspring2022nobackup-220429172101/75/Overcoming-Heterogeneity-in-Autonomous-Cyber-Physical-Systems-34-2048.jpg)

![Heterogeneity leads to safety issues

Heterogeneity: diversity of components and models

[Diagram labels: Data, Models, Design, System, Control, Perception, Monitors, Guarantees, Recovery]

II. Inconsistencies

Dive 1: Confidence Composition

Dive 2: Model Integration

Reasoning about Monitor Accuracy

Analysis Integration

Synthesis & Analysis

III.

[EMSOFT’14, AVICPS’14, CPS-SPC’15, ACES-MB’15]

[EMSOFT’20, CHASE’21, ICCPS’22b, Submitted’22]

Vision: Awareness of Own Limitations
](https://image.slidesharecdn.com/genericjobtalkspring2022nobackup-220429172101/75/Overcoming-Heterogeneity-in-Autonomous-Cyber-Physical-Systems-37-2048.jpg)

![References: my work in the talk

- [ICCPS’22a] Ruchkin, Cleaveland, Ivanov, Lu, Carpenter, Sokolsky, Lee. Confidence Composition for Monitors of Verification Assumptions. In Proceedings of the International Conference on Cyber-Physical Systems (ICCPS), 2022.
- [FMOS’21] Ruchkin, Cleaveland, Sokolsky, Lee. Confidence Monitoring and Composition for Dynamic Assurance of Learning-Enabled Autonomous Systems. In Formal Methods in Outer Space (FMOS): Essays Dedicated to Klaus Havelund on the Occasion of His 65th Birthday, 2021.
- [EMSOFT’20] Ruchkin, Sokolsky, Weimer, Hedaoo, Lee. Compositional Probabilistic Analysis of Temporal Properties Over Stochastic Detectors. In Proceedings of the International Conference on Embedded Software (EMSOFT), 2020.
- [FM’18] Ruchkin, Sunshine, Iraci, Schmerl, Garlan. IPL: An Integration Property Language for Multi-Model Cyber-Physical Systems. In Proceedings of the International Symposium on Formal Methods (FM), 2018.
](https://image.slidesharecdn.com/genericjobtalkspring2022nobackup-220429172101/75/Overcoming-Heterogeneity-in-Autonomous-Cyber-Physical-Systems-44-2048.jpg)

![References: related work p.1

- [Pearl’88] Pearl. Probabilistic Reasoning in Intelligent Systems: Networks of Plausible Inference. Morgan Kaufmann, 1988.
- [Scharf’91] Scharf. Statistical Signal Processing. Pearson, 1991.
- [Gabbay’96] Gabbay. Fibred Semantics and the Weaving of Logics Part 1: Modal and Intuitionistic Logics. In the Journal of Symbolic Logic, 1996.
- [Sha’01] Sha. Using simplicity to control complexity. In IEEE Software, 2001.
- [Nelsen’06] Nelsen. An Introduction to Copulas. Springer-Verlag, 2006.
- [Koller’09] Koller, Friedman, Bach. Probabilistic Graphical Models: Principles and Techniques. MIT Press, 2009.
- [Ranjan’10] Ranjan, Gneiting. Combining probability forecasts. In the Journal of the Royal Statistical Society: Series B (Statistical Methodology), 2010.
- [Girard’11] Girard, Pappas. Approximate Bisimulation: A Bridge Between Computer Science and Control Theory. In the European Journal of Control, 2011.
](https://image.slidesharecdn.com/genericjobtalkspring2022nobackup-220429172101/75/Overcoming-Heterogeneity-in-Autonomous-Cyber-Physical-Systems-45-2048.jpg)

![References: related work p.2

- [Poor’13] Poor. An Introduction to Signal Detection and Estimation. Springer, 2013.
- [Rajhans’13] Rajhans, Krogh. Compositional Heterogeneous Abstraction. In Proceedings of the International Conference on Hybrid Systems: Computation and Control (HSCC), 2013.
- [Mitsch’14] Mitsch, Platzer. ModelPlex: Verified Runtime Validation of Verified Cyber-Physical System Models. In Proceedings of the International Conference on Runtime Verification (RV), 2014.
- [Sztipanovits’14] Sztipanovits, Bapty, Neema, Howard, Jackson. OpenMETA: A Model and Component-Based Design Tool Chain for Cyber-Physical Systems. In From Programs to Systems - The Systems Perspective in Computing, 2014.
- [Lee’14] Lee, Neuendorffer, Zhou. System Design, Modeling, and Simulation using Ptolemy II. Ptolemy.org, 2014.
- [Combemale’14] Combemale, Deantoni, Baudry, France, Jezequel, Gray. Globalizing Modeling Languages. In IEEE Computer, 2014.
](https://image.slidesharecdn.com/genericjobtalkspring2022nobackup-220429172101/75/Overcoming-Heterogeneity-in-Autonomous-Cyber-Physical-Systems-46-2048.jpg)

![References: related work p.3

- [Marinescu’16] Marinescu. Model-driven Analysis and Verification of Automotive Embedded Systems. PhD Thesis, Malardalen University, 2016.
- [Barbosa’16] Barbosa, Martins, Madeira, Neves. Reuse and Integration of Specification Logics: The Hybridisation Perspective. In Theoretical Information Reuse and Integration, Springer, 2016.
- [Deshmukh’17] Deshmukh, Donze, Ghosh, Jin, Juniwal, Seshia. Robust Online Monitoring of Signal Temporal Logic.
- [Guo’17] Guo, Pleiss, Sun, Weinberger. On calibration of modern neural networks. In Proceedings of the 34th International Conference on Machine Learning (ICML), 2017.
- [Sagi’18] Sagi, Rokach. Ensemble learning: A survey. In Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery, 2018.
](https://image.slidesharecdn.com/genericjobtalkspring2022nobackup-220429172101/75/Overcoming-Heterogeneity-in-Autonomous-Cyber-Physical-Systems-47-2048.jpg)