The document is a comprehensive guide on embedded systems, emphasizing a cyber-physical systems approach. It covers the modeling, design, and analysis of embedded systems, and is aimed at advanced undergraduate students, graduate students, and practicing engineers. The second edition includes new chapters, exercises, and improves upon previous material to better equip readers with the necessary skills in this field.

![on what that sound design practice is, and on how today’s

technologies both impede and

achieve it.

Lee & Seshia, Introduction to Embedded Systems xiii

http://LeeSeshia.org

PREFACE

Stankovic et al. (2005) support this view, stating that “existing

technology for RTES [real-

time embedded systems] design does not effectively support the

development of reliable

and robust embedded systems.” They cite a need to “raise the

level of programming

abstraction.” We argue that raising the level of abstraction is

insufficient. We also have

to fundamentally change the abstractions that are used. Timing

properties of software,

for example, cannot be effectively introduced at higher levels of

abstraction if they are

entirely absent from the lower levels of abstraction on which

these are built.

We require robust and predictable designs with repeatable

temporal dynamics (Lee, 2009a).

We must do this by building abstractions that appropriately

reflect the realities of cyber-

physical systems. The result will be CPS designs that can be

much more sophisticated,

including more adaptive control logic, evolvability over time,

and improved safety and re-

liability, all without suffering from the brittleness of today’s

designs, where small changes](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-23-320.jpg)

![In addition, we have provided an extensive index, with more

than 2,000 entries.

There are typographic conventions worth noting. When a term is

being defined, it will ap-

pear in bold face, and the corresponding index entry will also be

in bold face. Hyperlinks

are shown in blue in the electronic version. The notation used in

diagrams, such as those

for finite-state machines, is intended to be familiar, but not to

conform with any particular

programming or modeling language.

Intended Audience

This book is intended for students at the advanced

undergraduate level or introductory

graduate level, and for practicing engineers and computer

scientists who wish to under-

stand the engineering principles of embedded systems. We

assume that the reader has

some exposure to machine structures (e.g., should know what an

ALU is), computer pro-

gramming (we use C throughout the text), basic discrete

mathematics and algorithms, and

at least an appreciation for signals and systems (what it means

to sample a continuous-

time signal, for example).

Reporting Errors

If you find errors or typos in this book, or if you have

suggestions for improvements or

other comments, please send email to:

[email protected]](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-28-320.jpg)

![Please include the version number of the book, whether it is the

electronic or the hard-

copy distribution, and the relevant page numbers. Thank you!

Lee & Seshia, Introduction to Embedded Systems xvii

mailto:[email protected]

http://LeeSeshia.org

PREFACE

Acknowledgments

The authors gratefully acknowledge contributions and helpful

suggestions from Murat

Arcak, Dai Bui, Janette Cardoso, Gage Eads, Stephen Edwards,

Suhaib Fahmy, Shanna-

Shaye Forbes, Daniel Holcomb, Jeff C. Jensen, Garvit Juniwal,

Hokeun Kim, Jonathan

Kotker, Wenchao Li, Isaac Liu, Slobodan Matic, Mayeul

Marcadella, Le Ngoc Minh,

Christian Motika, Chris Myers, Steve Neuendorffer, David

Olsen, Minxue Pan, Hiren Pa-

tel, Jan Reineke, Rhonda Righter, Alberto Sangiovanni-

Vincentelli, Chris Shaver, Shih-

Kai Su (together with students in CSE 522, lectured by Dr.

Georgios E. Fainekos at

Arizona State University), Stavros Tripakis, Pravin Varaiya,

Reinhard von Hanxleden,

Armin Wasicek, Kevin Weekly, Maarten Wiggers, Qi Zhu, and

the students in UC Berke-

ley’s EECS 149 class over the past years, particularly Ned Bass

and Dan Lynch. The

authors are especially grateful to Elaine Cheong, who carefully

read most chapters and](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-29-320.jpg)

![http://leeseshia.org

In addition, a solutions manual and other instructional material

are available to qualified

instructors at bona fide teaching institutions. See

http://chess.eecs.berkeley.edu/instructors/

or contact [email protected]

xx Lee & Seshia, Introduction to Embedded Systems

http://leeseshia.org

http://chess.eecs.berkeley.edu/instructors/

mailto:[email protected]

http://LeeSeshia.org

1

Introduction

1.1 Applications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

Sidebar: About the Term “Cyber-Physical Systems” . . . . . . . . .

. 5

1.2 Motivating Example . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.3 The Design Process . . . . . . . . . . . . . . . . . . . . . . . . . . 9

1.3.1 Modeling . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

1.3.2 Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

1.3.3 Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

1.4 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

A cyber-physical system (CPS) is an integration of computation

with physical processes](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-33-320.jpg)

![where F is the force vector in three directions, M is the mass of

the object, and ẍ is the

second derivative of x with respect to time (i.e., the

acceleration). Velocity is the integral

1If the notation is unfamiliar, see Appendix A.

2The domain of a continuous-time signal may be restricted to a

connected subset of R, such as R+, the

non-negative reals, or [0, 1], the interval between zero and one,

inclusive. The codomain may be an arbitrary

set, though when representing physical quantities, real numbers

are most useful.

20 Lee & Seshia, Introduction to Embedded Systems

http://LeeSeshia.org

2. CONTINUOUS DYNAMICS

of acceleration, given by

∀ t > 0, ẋ(t) = ẋ(0) +

t∫

0

ẍ(τ)dτ

where ẋ(0) is the initial velocity in three directions. Using

(2.1), this becomes

∀ t > 0, ẋ(t) = ẋ(0) + 1

M](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-67-320.jpg)

![state variables of the free mode are

s(t) =

[

y(t)

ẏ(t)

]

with the initial conditions y(0) = h0 and ẏ(0) = 0. It is then a

simple matter to

rewrite (4.4) as a first-order differential equation,

ṡ(t) = f(s(t)) (4.5)

for a suitably chosen function f .

At the time t = t1 when the ball first hits the ground, the guard

y(t) = 0

is satisfied, and the self-loop transition is taken. The output

bump is produced,

and the set action ẏ(t) := −aẏ(t) changes ẏ(t1) to have value

−aẏ(t1). Then

(4.4) is followed again until the guard becomes true again.

92 Lee & Seshia, Introduction to Embedded Systems

http://LeeSeshia.org

4. HYBRID SYSTEMS

t1 t2](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-176-320.jpg)

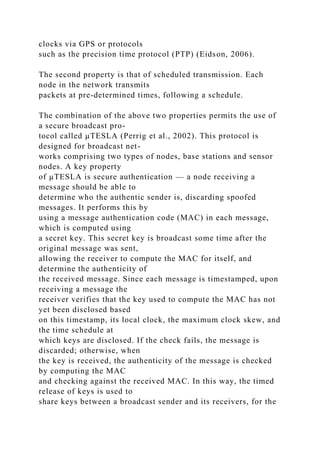

![Let (x(t), y(t)) ∈ R2 be the position relative to some fixed

coordinate frame and

θ(t) ∈ (−π, π] be the angle (in radians) of the vehicle at time t,

as shown in

Figure 4.12. In terms of this coordinate frame, the motion of the

vehicle is given

94 Lee & Seshia, Introduction to Embedded Systems

http://LeeSeshia.org

4. HYBRID SYSTEMS

track

AG

V

global

coordinate

frame

Figure 4.12: Illustration of the automated guided vehicle of

Example 4.8. The

vehicle is following a curved painted track, and has deviated

from the track by a

distance e(t). The coordinates of the vehicle at time t with

respect to the global

coordinate frame are (x(t), y(t), θ(t)).

by a system of three differential equations,

ẋ(t) = u(t) cos θ(t),](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-180-320.jpg)

![programmer needs to be

very careful with dynamic memory allocation, particularly for

embedded systems that are

expected to run for a very long time. Exhausting the available

memory can cause system

crashes or other undesired behavior.

256 Lee & Seshia, Introduction to Embedded Systems

http://LeeSeshia.org

9. MEMORY ARCHITECTURES

Exercises

1. Consider the function compute variance listed below, which

computes the

variance of integer numbers stored in the array data.

1 int data[N];

2

3 int compute_variance() {

4 int sum1 = 0, sum2 = 0, result;

5 int i;

6

7 for(i=0; i < N; i++) {

8 sum1 += data[i];

9 }

10 sum1 /= N;

11

12 for(i=0; i < N; i++) {](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-441-320.jpg)

![13 sum2 += data[i] * data[i];

14 }

15 sum2 /= N;

16

17 result = (sum2 - sum1*sum1);

18

19 return result;

20 }

Suppose this program is executing on a 32-bit processor with a

direct-mapped cache

with parameters (m,S,E,B) = (32, 8, 1, 8). We make the

following additional

assumptions:

• An int is 4 bytes wide.

• sum1, sum2, result, and i are all stored in registers.

• data is stored in memory starting at address 0x0.

Answer the following questions:

(a) Consider the case where N is 16. How many cache misses

will there be?

(b) Now suppose that N is 32. Recompute the number of cache

misses.

(c) Now consider executing for N = 16 on a 2-way set-

associative cache with

parameters (m,S,E,B) = (32, 8, 2, 4). In other words, the block

size is

halved, while there are two cache lines per set. How many cache

misses

would the code suffer?](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-442-320.jpg)

![3 UDR0 = x[i];

268 Lee & Seshia, Introduction to Embedded Systems

http://LeeSeshia.org

10. INPUT AND OUTPUT

4 }

How long would it take to execute this code? Suppose that the

serial port is set

to operate at 57600 baud, or bits per second (this is quite fast

for an RS-232

interface). Then after loading UDR0 with an 8-bit value, it will

take 8/57600

seconds or about 139 microseconds for the 8-bit value to be

sent. Suppose that

the frequency of the processor is operating at 18 MHz

(relatively slow for a mi-

crocontroller). Then except for the first time through the for

loop, each while

loop will need to consume approximately 2500 cycles, during

which time the

processor is doing no useful work.

To receive a byte over the serial port, a programmer may use

the following C

code:

1 while(!(UCSR0A & 0x80));

2 return UDR0;

In this case, the while loop waits until the UART has received

an incoming](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-461-320.jpg)

![The emphasis is on un-

derstanding the principles behind the mechanisms, with a

particular focus on the bridging

between the sequential world of software and the parallel

physical world.

Lee & Seshia, Introduction to Embedded Systems 283

http://LeeSeshia.org

EXERCISES

Exercises

1. Similar to Example 10.6, consider a C program for an Atmel

AVR that uses a UART

to send 8 bytes to an RS-232 serial interface, as follows:

1 for(i = 0; i < 8; i++) {

2 while(!(UCSR0A & 0x20));

3 UDR0 = x[i];

4 }

Assume the processor runs at 50 MHz; also assume that initially

the UART is idle,

so when the code begins executing, UCSR0A & 0x20 == 0x20 is

true; further,

assume that the serial port is operating at 19,200 baud. How

many cycles are re-

quired to execute the above code? You may assume that the for

statement executes

in three cycles (one to increment i, one to compare it to 8, and

one to perform the

conditional branch); the while statement executes in 2 cycles

(one to compute](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-485-320.jpg)

![6 desired_climb

7 = pre_climb + altitude_pgain * err;

8 if (desired_climb < -CLIMB_MAX) {

9 desired_climb = -CLIMB_MAX;

10 }

11 if (desired_climb > CLIMB_MAX) {

12 desired_climb = CLIMB_MAX;

13 }

14 }

15 }

16 }

For this example, it is required that the value of the desired

climb vari-

able at the end of altitude control task remains within the range

[-

CLIMB MAX, CLIMB MAX]. This is an example of a special

kind of invariant, a

postcondition, that must be maintained every time altitude

control task

returns. Determining whether this is the case requires analyzing

the control flow

of the program.

Lee & Seshia, Introduction to Embedded Systems 361

http://LeeSeshia.org

13.2. LINEAR TEMPORAL LOGIC

13.2 Linear Temporal Logic

We now give a formal description of temporal logic and

illustrate with examples of how](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-620-320.jpg)

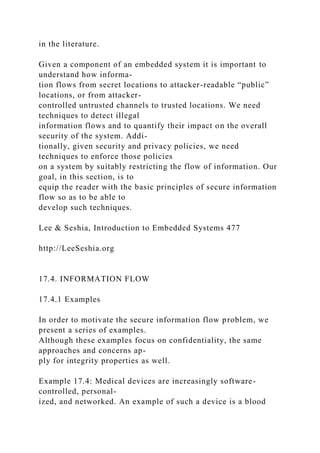

![Compose Verify

Property

System

Environment

YES

[proof]

NO

counterexample

M

Figure 15.2: Formal verification procedure.

• The property to be verified Φ.

The verifier generates as output a YES/NO answer, indicating

whether or not S satisfies

the property Φ in environment E. Typically, a NO output is

accompanied by a counterex-

ample, also called an error trace, which is a trace of the system

that indicates how Φ is

violated. Counterexamples are very useful aids in the debugging

process. Some formal

verification tools also include a proof or certificate of

correctness with a YES answer;

such an output can be useful for certification of system

correctness.

The form of composition used to combine system model S with

environment model E](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-686-320.jpg)

![termination – by defining a progress measure or ranking

function that maps each state

of the program to a mathematical structure called a well order.

Intuitively, a well order is

like a program that counts down to zero from some initial value

in the natural numbers.

16.3.2 Exponential Path Space

Execution time is a path property. In other words, the amount of

time taken by the pro-

gram is a function of how conditional statements in the program

evaluate to true or false.

A major source of complexity in execution time analysis (and

other program analysis

problems as well) is that the number of program paths can be

very large — exponential in

the size of the program. We illustrate this point with the

example below.

Example 16.6: Consider the function count listed below, which

runs over

a two-dimensional array, counting and accumulating non-

negative and negative

elements of the array separately.

1 #define MAXSIZE 100

2

3 int Array[MAXSIZE][MAXSIZE];

4 int Ptotal, Pcnt, Ntotal, Ncnt;

5 ...

6 void count() {

7 int Outer, Inner;

8 for (Outer = 0; Outer < MAXSIZE; Outer++) {

9 for (Inner = 0; Inner < MAXSIZE; Inner++) {](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-738-320.jpg)

![10 if (Array[Outer][Inner] >= 0) {

11 Ptotal += Array[Outer][Inner];

438 Lee & Seshia, Introduction to Embedded Systems

http://LeeSeshia.org

16. QUANTITATIVE ANALYSIS

12 Pcnt++;

13 } else {

14 Ntotal += Array[Outer][Inner];

15 Ncnt++;

16 }

17 }

18 }

19 }

The function includes a nested loop. Each loop executes

MAXSIZE (100) times.

Thus, the inner body of the loop (comprising lines 10–16) will

execute 10,000

times – as many times as the number of elements of Array. In

each iteration of

the inner body of the loop, the conditional on line 10 can either

evaluate to true or

false, thus resulting in 210000 possible ways the loop can

execute. In other words,

this program has 210000 paths.

Fortunately, as we will see in Section 16.4.1, one does not need

to explicitly enumerate

all possible program paths in order to perform execution time

analysis.](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-739-320.jpg)

![4 for(i=0; i < n; i++) {

5 result += x[i] * y[i];

6 }

7 return result;

8 }

Suppose this program is executing on a 32-bit processor with a

direct-mapped

cache. Suppose also that the cache can hold two sets, each of

which can hold 4

floats. Finally, let us suppose that x and y are stored

contiguously in memory

starting with address 0.

Let us first consider what happens if n = 2. In this case, the

entire arrays x and

y will be in the same block and thus in the same cache set.

Thus, in the very

first iteration of the loop, the first access to read x[0] will be a

cache miss, but

thereafter every read to x[i] and y[i] will be a cache hit,

yielding best case

performance for loads.

Consider next what happens when n = 8. In this case, each x[i]

and y[i] map

to the same cache set. Thus, not only will the first access to

x[0] be a miss, the

first access to y[0] will also be a miss. Moreover, the latter

access will evict

the block containing x[0]-x[3], leading to a cache miss on x[1],

x[2], and

x[3] as well. The reader can see that every access to an x[i] or

y[i] will

lead to a cache miss.](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-743-320.jpg)

![Vienna M./P. Measurement Technical University of Vienna

http://www.wcet.at/

454 Lee & Seshia, Introduction to Embedded Systems

http://www.absint.com/ait/

http://www.bound-t.com/

http://www.comp.nus.edu.sg/~rpembed/chronos/

http://www.irisa.fr/aces/work/heptane-demo/heptane.html

http://www.mrtc.mdh.se/projects/wcet/

http://www.rapitasystems.com/

http://www.ida.ing.tu-bs.de/research/projects/symtap/

http://www.wcet.at/

http://LeeSeshia.org

16. QUANTITATIVE ANALYSIS

Exercises

1. This problem studies execution time analysis. Consider the C

program listed below:

1 int arr[100];

2

3 int foo(int flag) {

4 int i;

5 int sum = 0;

6

7 if (flag) {

8 for(i=0;i<100;i++)

9 arr[i] = i;

10 }](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-767-320.jpg)

![11

12 for(i=0;i<100;i++)

13 sum += arr[i];

14

15 return sum;

16 }

Assume that this program is run on a processor with data cache

of size big enough

that the entire array arr can fit in the cache.

(a) How many paths does the function foo of this program have?

Describe what

they are.

(b) Let T denote the execution time of the second for loop in the

program. How

does executing the first for loop affect the value of T ? Justify

your answer.

2. Consider the program given below:

1 void testFn(int *x, int flag) {

2 while (flag != 1) {

3 flag = 1;

4 *x = flag;

5 }

6 if (*x > 0)

7 *x += 2;

8 }

In answering the questions below, assume that x is not NULL.

(a) Draw the control-flow graph of this program. Identify the](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-768-320.jpg)

![function. Iden-

tify the basic blocks whose execution time will be impacted by

this modified

assumption.

3. Consider the function check password given below that takes

two arguments: a

user ID uid and candidate password pwd (both modeled as ints

for simplicity).

This function checks that password against a list of user IDs and

passwords stored

in an array, returning 1 if the password matches and 0

otherwise.

1 struct entry {

2 int user;

3 int pass;

4 };

5 typedef struct entry entry_t;

6

7 entry_t all_pwds[1000];

8

9 int check_password(int uid, int pwd) {

10 int i = 0;

11 int retval = 0;

12

13 while(i < 1000) {

14 if (all_pwds[i].user == uid && all_pwds[i].pass == pwd) {

15 retval = 1;

16 break;

17 }

18 i++;

19 }](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-770-320.jpg)

![17. SECURITY AND PRIVACY

the absence of automatic bounds-checking for accessing arrays

or pointers in C programs.

More precisely, a buffer overflow is a error arising from the

absence of a bounds check

resulting in the program writing past the end of an array or

memory region. Attackers can

use this out-of-bounds write to corrupt trusted locations in a

program such as the value

of a secret variable or the return address of a function. (Tip:

material in Chapter 9 on

Memory Architectures might be worth reviewing.) Let us begin

with a simple example.

Example 17.2: The sensors in certain embedded systems use

communication

protocols where data from various on-board sensors is read as a

stream of bytes

from a designated port or network socket. The code example

below illustrates one

such scenario. Here, the programmer expects to read at most 16

bytes of sensor

data, storing them into the array sensor data.

1 char sensor_data[16];

2 int secret_key;

3

4 void read_sensor_data() {

5 int i = 0;

6

7 // more_data returns 1 if there is more data,](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-806-320.jpg)

![8 // and 0 otherwise

9 while(more_data()) {

10 sensor_data[i] = get_next_byte();

11 i++;

12 }

13

14 return;

15 }

The problem with this code is that it implicitly trusts the sensor

stream to be no

more than 16 bytes long. Suppose an attacker has control of that

stream, either

through physical access to the sensors or over the network.

Then an attacker can

provide more than 16 bytes and cause the program to write past

the end of the

array sensor data. Notice further how the variable secret key is

defined

right after sensor data, and assume that the compiler allocates

them adja-

cently. In this case, an attacker can exploit the buffer oveflow

vulnerability to

provide a stream of length 20 bytes and overwrite secret key

with a key of

his choosing. This exploit can then be used to compromise the

system in other

ways.

Lee & Seshia, Introduction to Embedded Systems 475

http://LeeSeshia.org](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-807-320.jpg)

![17.3. SOFTWARE SECURITY

The example above involves an out-of-bounds write in an array

stored in global memory.

Consider next the case when the array is stored on the stack. In

this case, a buffer overrun

vulnerability can be exploited to overwrite the return address of

the function, and cause it

to execute some code of the attacker’s choosing. This can lead

to the attacker gaining an

arbitrary level of control over the embedded system.

Example 17.3: Consider below a variant on the code in Example

17.2 where

the sensor data array is stored on the stack. As before, the

function reads

a stream of bytes and stores them into sensor data. However, in

this case,

the read sensor data is then processed within this function and

used to set certain

globally-stored flags (which can then be used to take control

decisions).

1 int sensor_flags[4];

2

3 void process_sensor_data() {

4 int i = 0;

5 char sensor_data[16];

6

7 // more_data returns 1 if there is more data,

8 // and 0 otherwise

9 while(more_data()) {

10 sensor_data[i] = get_next_byte();](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-808-320.jpg)

![4

5 float stored_readings[100];

6

7 void show_readings() {

480 Lee & Seshia, Introduction to Embedded Systems

http://LeeSeshia.org

17. SECURITY AND PRIVACY

8 int input_pwd = read_input(); // prompt user for

9 // password and read it

10 if (input_pwd == patient_pwd) // check password

11 display(&stored_readings);

12 else

13 display_error_mesg();

14

15 return;

16 }

Assuming the attacker does not know the value of patient pwd,

we can see

that the above code does not leak any of the 100 stored

readings. However, it

does leak one bit of information: whether the input password

provided by the

attacker equals the correct password or not. In practice, such a

leak is deemed

acceptable since, for a strong choice of patient password, it

would take even the](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-817-320.jpg)

![rity and side-channel attacks.

490 Lee & Seshia, Introduction to Embedded Systems

http://LeeSeshia.org

17. SECURITY AND PRIVACY

Exercises

1. Consider the buffer overflow vulnerability in Example 17.2.

Modify the code so as

to prevent the buffer overflow.

2. Suppose a system M has a secret input s, a public input x, and

a public output z.

Let all three variables be Boolean. Answer the following

TRUE/FALSE questions

with justification:

(a) Suppose M satisfies the linear temporal logic (LTL) property

G¬z. Then M

must also satisfy observational determinism.

(b) Suppose M satisfies the linear temporal logic (LTL)

property G [(s ∧ x) ⇒ z].

Then M must also satisfy observational determinism.

3. Consider the finite-state machine below with one input x and

one output z, both

taking values in {0, 1}. Both x and z are considered public

(“low”) signals from a

security viewpoint. However, the state of the FSM (i.e., “A” or

“B”) is considered

secret (“high”).](https://image.slidesharecdn.com/introductiontoembeddedsystemsacyber-phys-221018140152-214d1924/85/INTRODUCTION-TO-EMBEDDED-SYSTEMSA-CYBER-PHYS-docx-836-320.jpg)