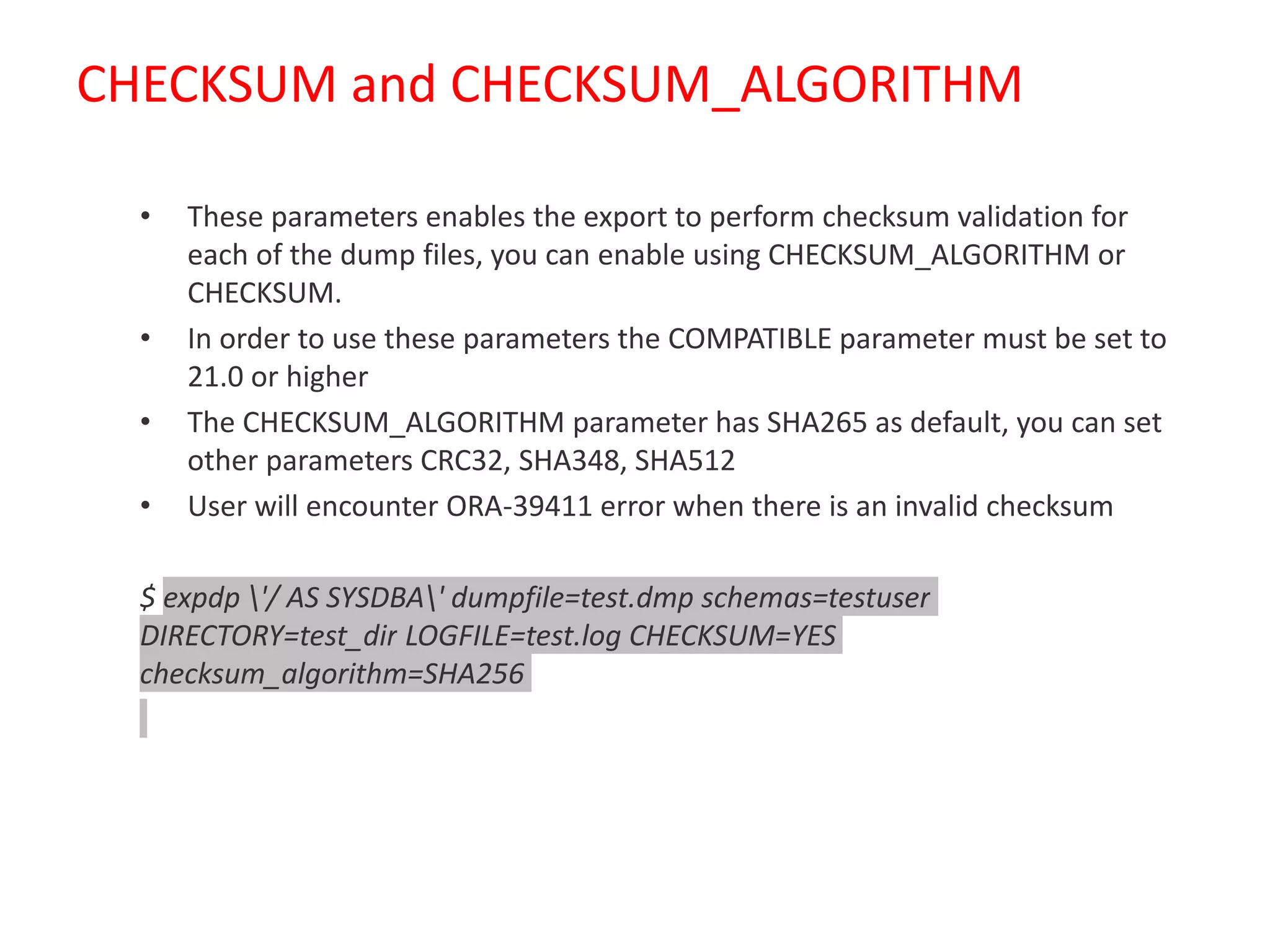

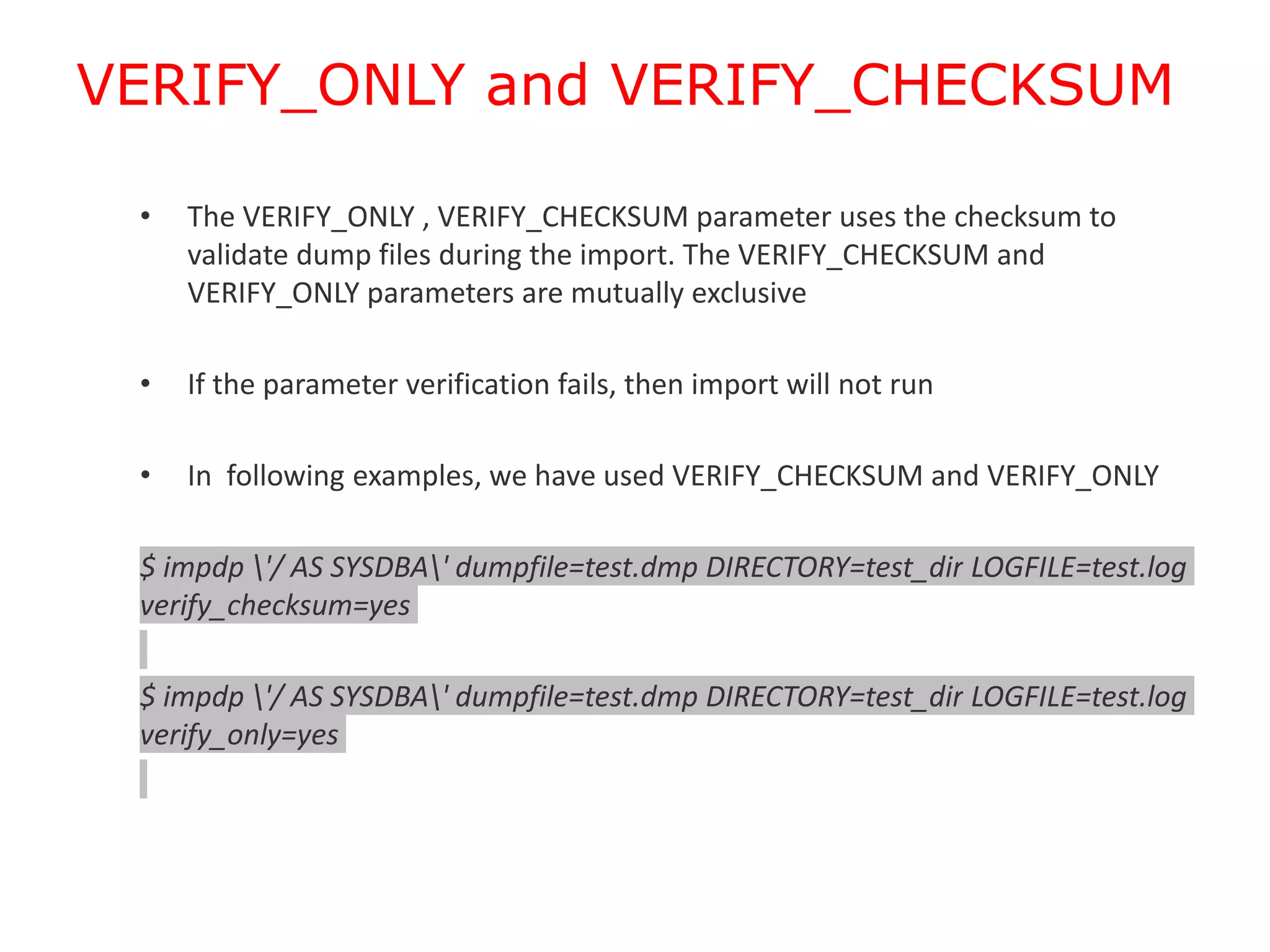

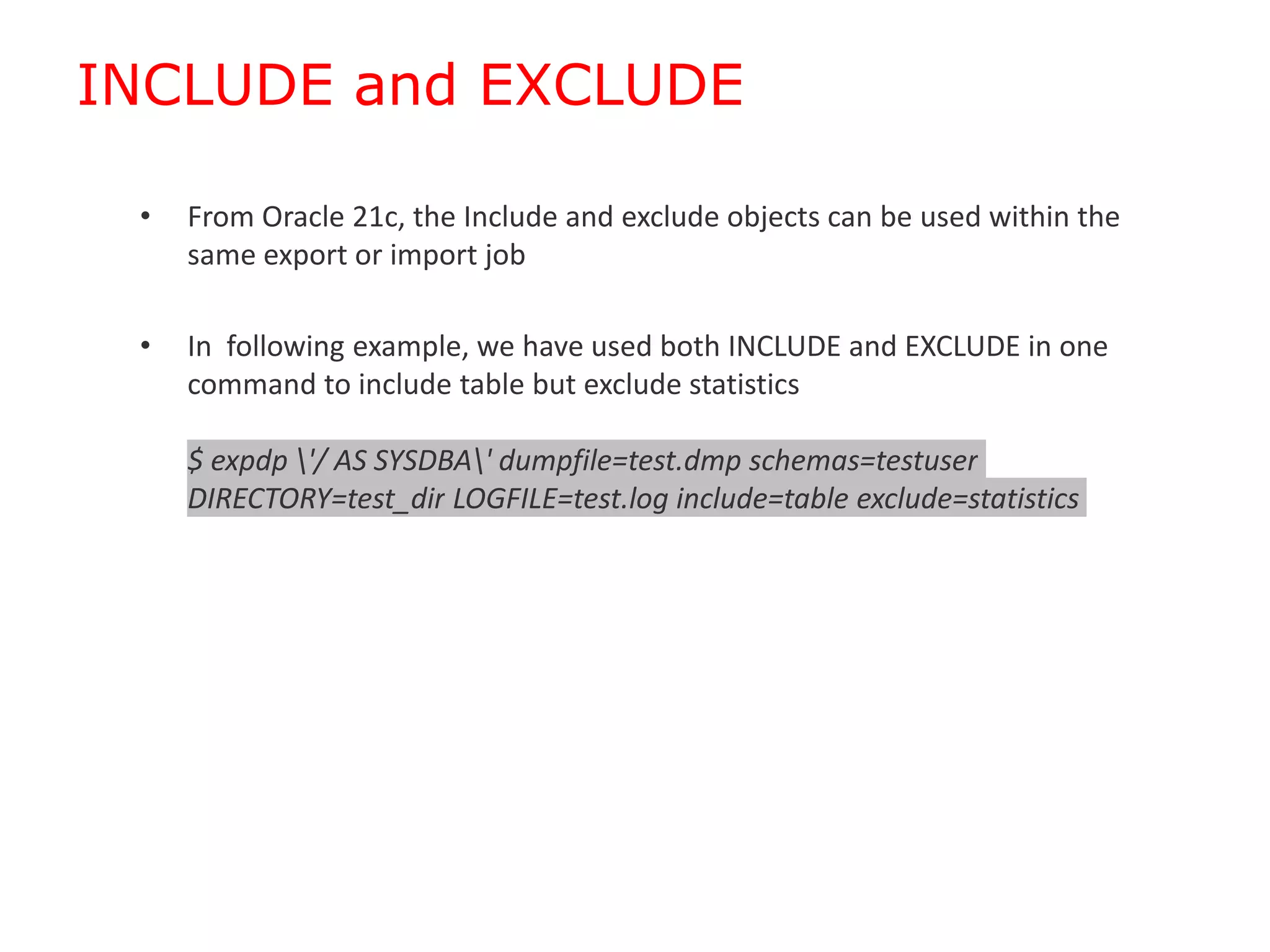

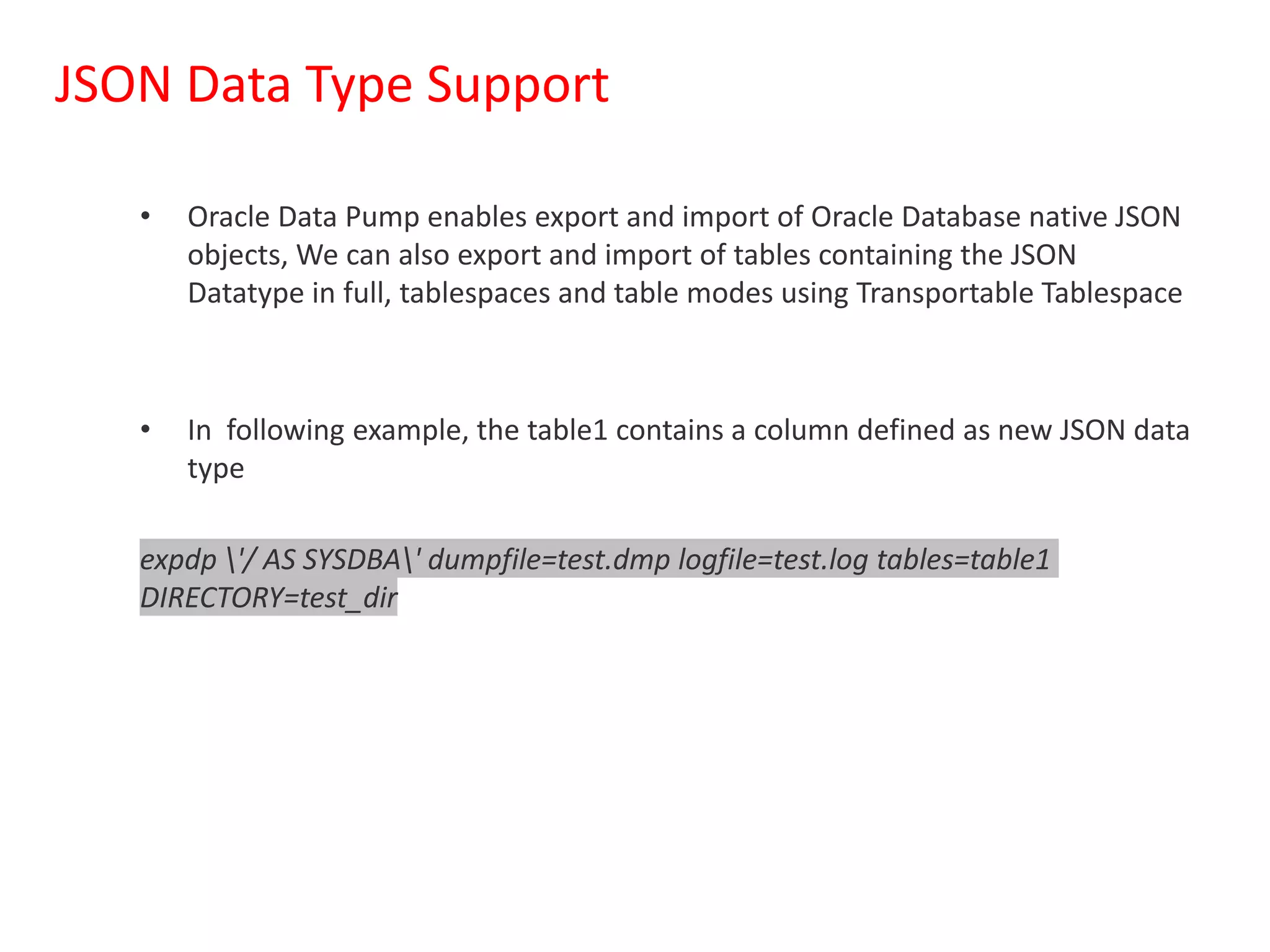

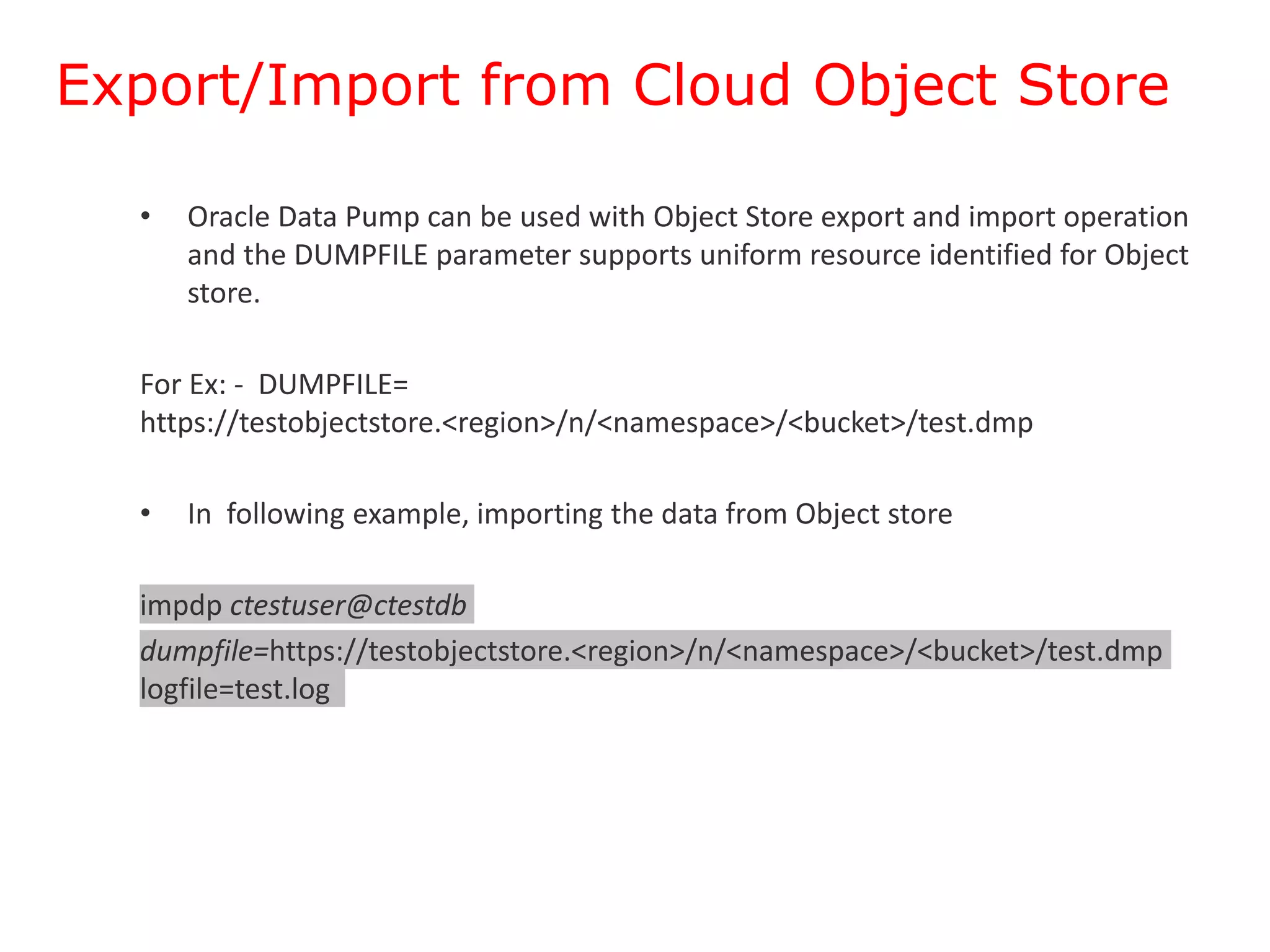

This document covers the enhancements to Oracle Data Pump in the 21c release. New features include checksum validation of dump files and the related verification parameters, support for the JSON data type, and export to and import from cloud object stores. Operational improvements include the ability to resume transportable tablespace jobs and to use include and exclude filters within the same operation. A demonstration and a Q&A session show how these features apply in practice.