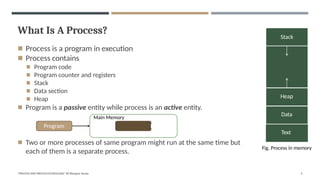

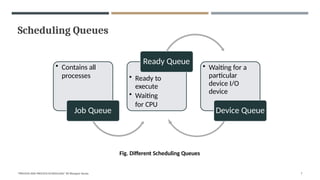

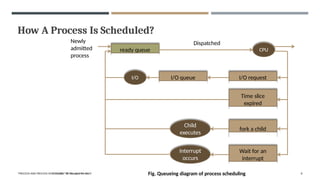

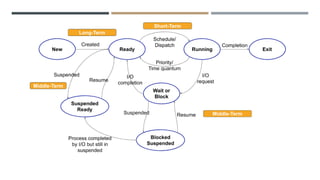

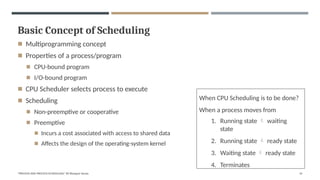

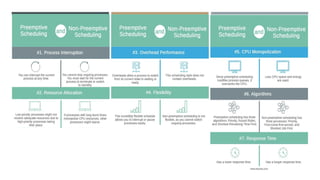

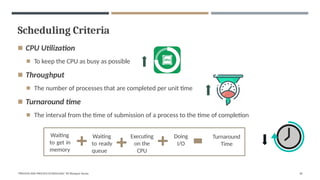

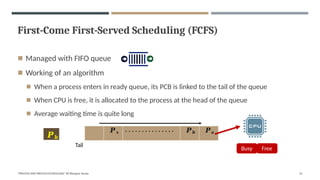

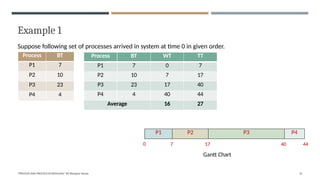

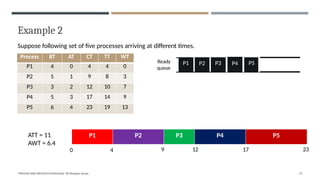

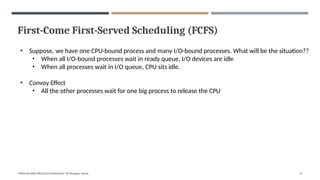

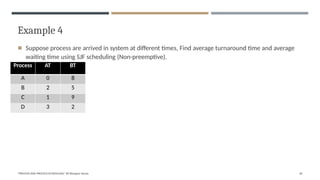

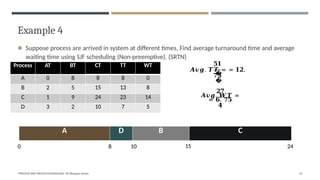

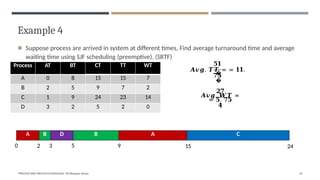

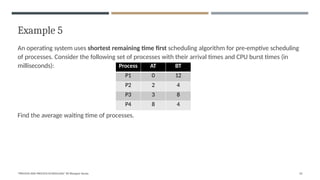

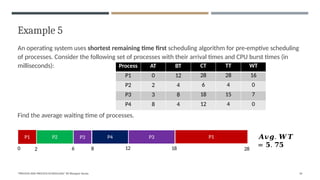

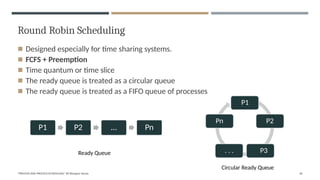

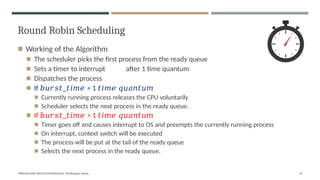

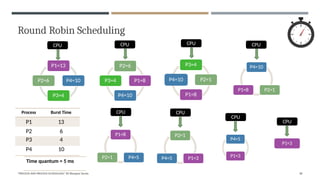

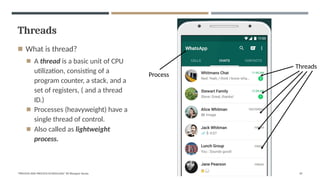

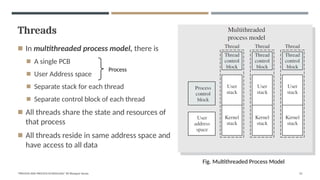

This document by Bhargavi Varala covers processes and process scheduling in operating systems. It explains what a process is, the different types of processes and of scheduling algorithms, and the responsibilities of schedulers. Key topics include context switching, scheduling criteria, and specific algorithms such as First-Come, First-Served (FCFS), Shortest Job First (SJF), and Round Robin scheduling.
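To make the three named algorithms and two common scheduling criteria concrete, here is a minimal Python sketch. It assumes a single CPU, processes that each need one CPU burst of known length, the non-preemptive form of SJF (the summary does not say whether the slides also cover the preemptive variant), and a context switch that costs no time. The `Process` class, the function names, and the burst times in the example are hypothetical and chosen only for illustration. The criteria are computed as turnaround time = completion time − arrival time and waiting time = turnaround time − burst time.

```python
from collections import deque
from dataclasses import dataclass

@dataclass
class Process:
    pid: str      # hypothetical process identifier (assumed unique)
    arrival: int  # time the process enters the ready queue
    burst: int    # CPU time the process needs

def fcfs(procs):
    """First-Come, First-Served: run processes in arrival order, non-preemptively."""
    time, completion = 0, {}
    for p in sorted(procs, key=lambda p: p.arrival):  # stable sort keeps list order on ties
        time = max(time, p.arrival)  # CPU idles until the process arrives
        time += p.burst
        completion[p.pid] = time
    return completion

def sjf(procs):
    """Non-preemptive Shortest Job First: among arrived processes, run the shortest burst."""
    remaining, time, completion = list(procs), 0, {}
    while remaining:
        arrived = [p for p in remaining if p.arrival <= time]
        if not arrived:
            time = min(p.arrival for p in remaining)  # idle until the next arrival
            continue
        p = min(arrived, key=lambda p: (p.burst, p.arrival))  # ties: earlier arrival first
        time += p.burst
        completion[p.pid] = time
        remaining.remove(p)
    return completion

def round_robin(procs, quantum):
    """Round Robin: each process runs for at most `quantum` units, then rejoins the queue."""
    pending = deque(sorted(procs, key=lambda p: p.arrival))
    ready, left = deque(), {p.pid: p.burst for p in procs}
    time, completion = 0, {}
    while pending or ready:
        while pending and pending[0].arrival <= time:  # admit arrived processes
            ready.append(pending.popleft())
        if not ready:
            time = pending[0].arrival  # idle until the next arrival
            continue
        p = ready.popleft()
        run = min(quantum, left[p.pid])
        time += run
        left[p.pid] -= run
        # Processes that arrived during this slice queue up ahead of the preempted one.
        while pending and pending[0].arrival <= time:
            ready.append(pending.popleft())
        if left[p.pid] > 0:
            ready.append(p)  # preempted: a context switch, whose cost is ignored here
        else:
            completion[p.pid] = time
    return completion

def average_times(procs, completion):
    """Return (average waiting time, average turnaround time)."""
    turnaround = [completion[p.pid] - p.arrival for p in procs]
    waiting = [t - p.burst for t, p in zip(turnaround, procs)]
    return sum(waiting) / len(procs), sum(turnaround) / len(procs)

if __name__ == "__main__":
    # Hypothetical workload: three processes, all arriving at t = 0.
    procs = [Process("P1", 0, 24), Process("P2", 0, 3), Process("P3", 0, 3)]
    for name, comp in [("FCFS", fcfs(procs)), ("SJF", sjf(procs)),
                       ("RR q=4", round_robin(procs, 4))]:
        w, t = average_times(procs, comp)
        print(f"{name}: avg waiting = {w:.2f}, avg turnaround = {t:.2f}")
    # FCFS: avg waiting = 17.00, avg turnaround = 27.00
    # SJF: avg waiting = 3.00, avg turnaround = 13.00
    # RR q=4: avg waiting = 5.67, avg turnaround = 15.67
```

With this workload, running the long job first under FCFS makes the two short jobs wait behind it. SJF gives the lowest average waiting time, consistent with the known result that non-preemptive SJF minimizes average waiting time when all jobs are available at once. Round Robin lands in between while keeping every process from waiting behind a full long burst.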