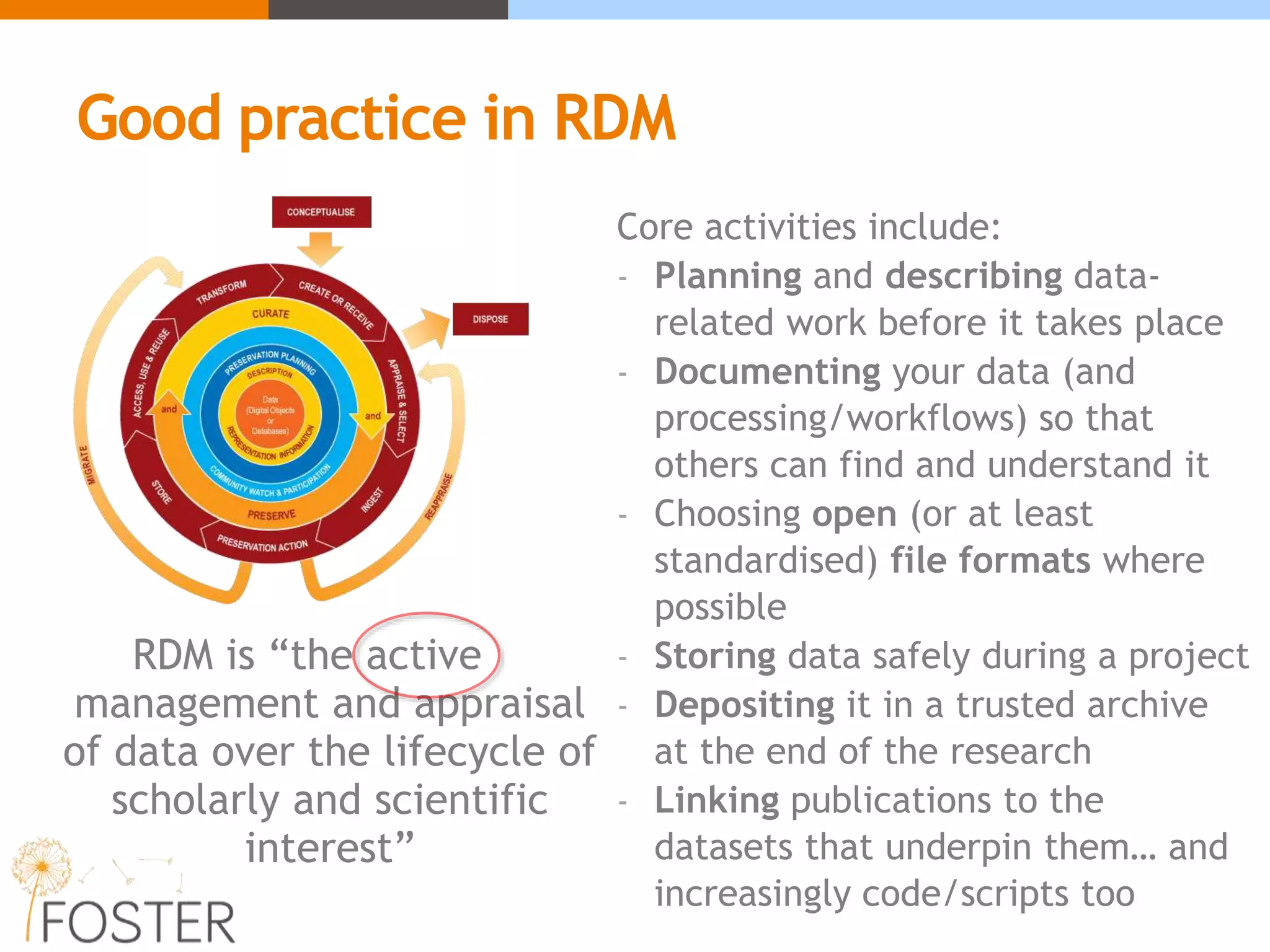

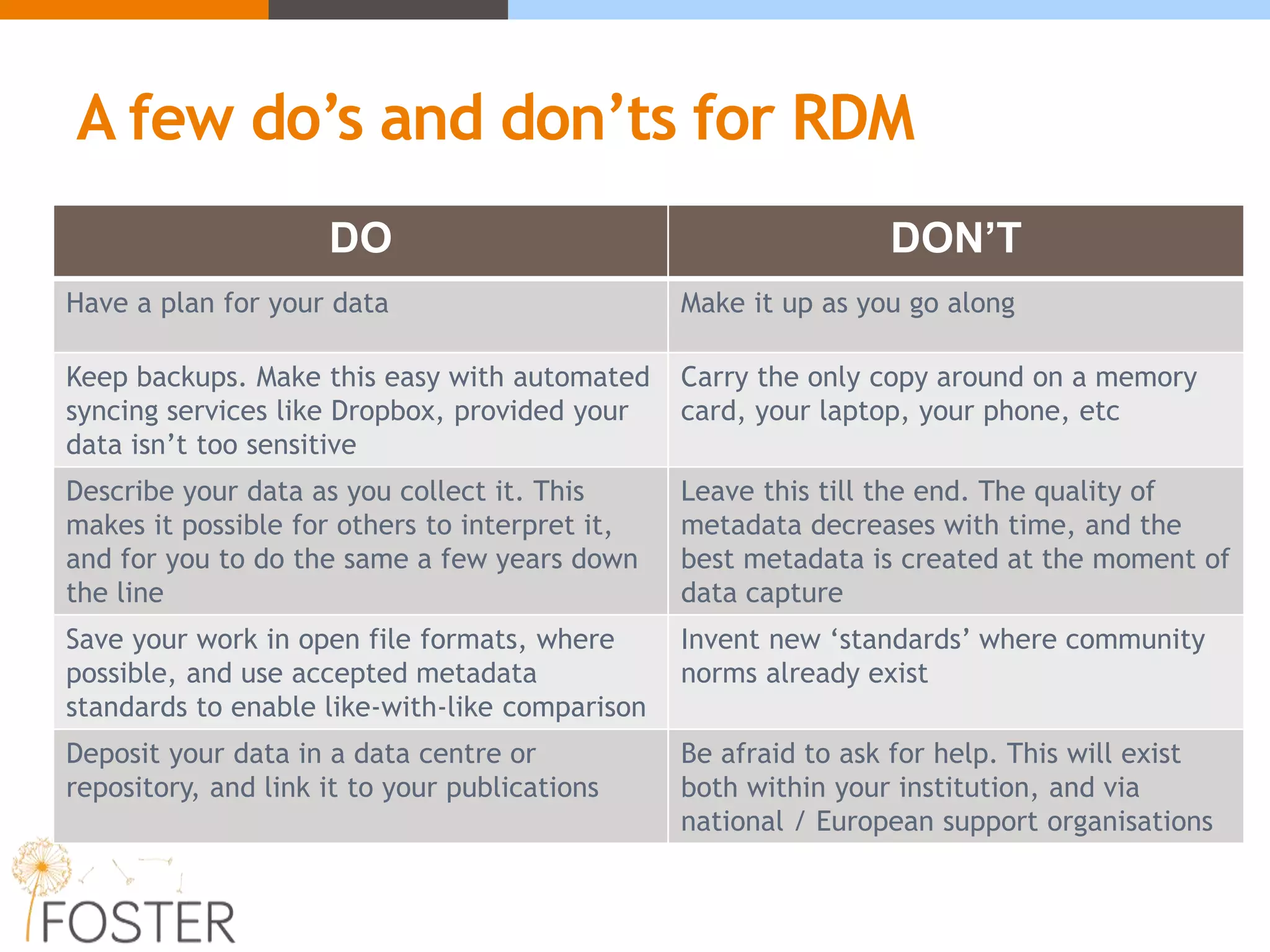

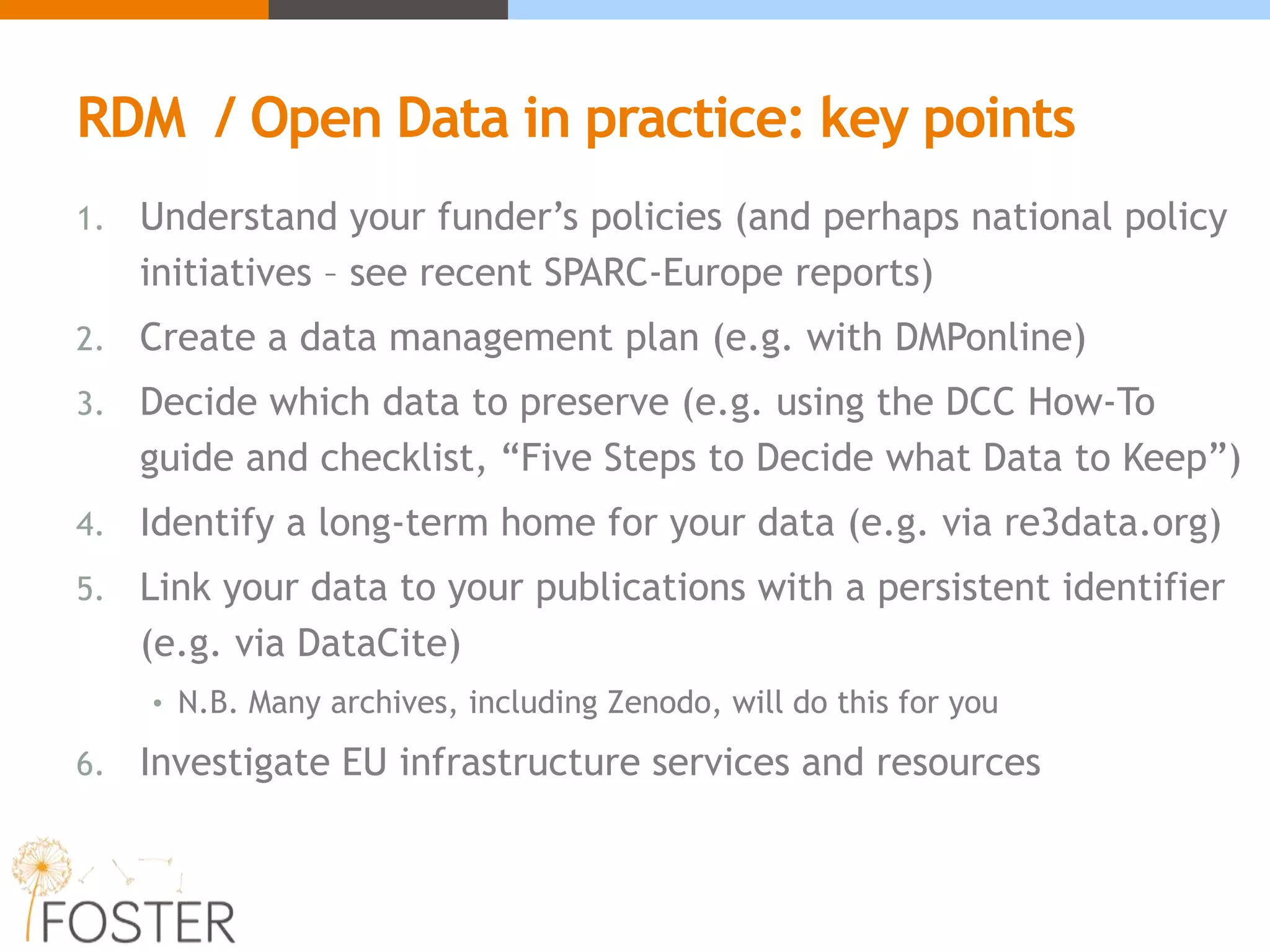

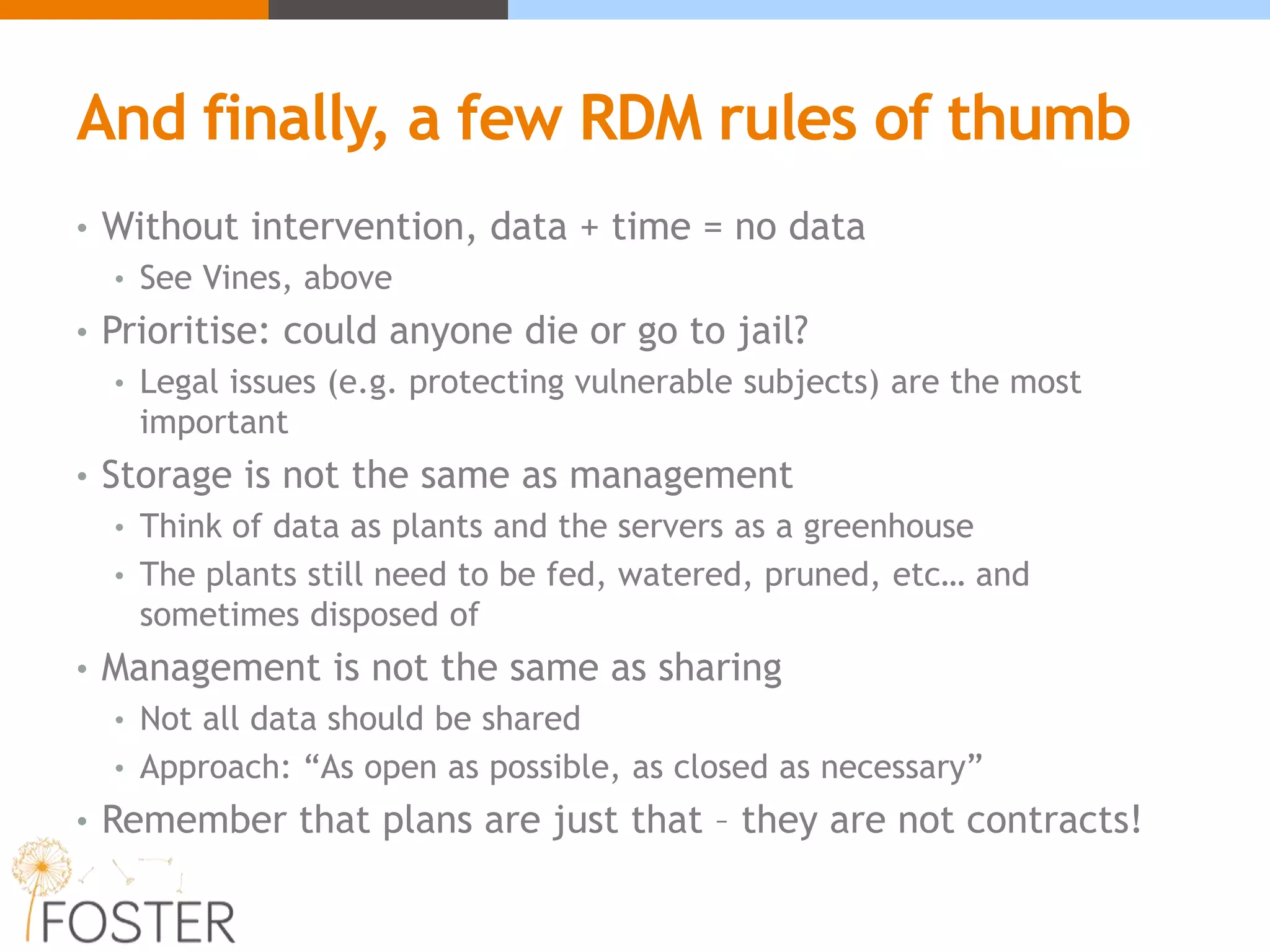

The document summarizes a presentation about facilitating open science training in Europe. It discusses the benefits of open data and open research, including greater impact, accessibility, efficiency and transparency. It also notes challenges such as privacy concerns, issues of recognition, and technical limitations. An emerging consensus supports the "FAIR" principles, under which data should be findable, accessible, interoperable and reusable. The presentation offers guidance on open data strategies: having a data management plan, describing and archiving data appropriately, and using standards. It also emphasizes communication and seeking help from research support organizations.