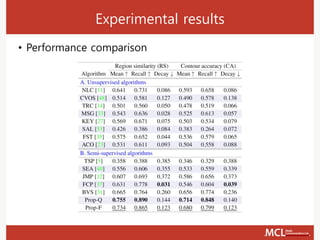

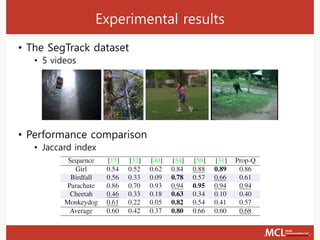

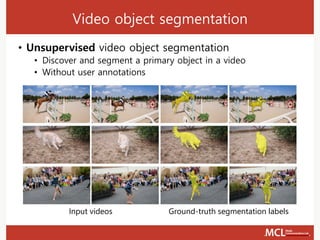

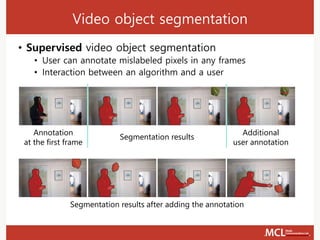

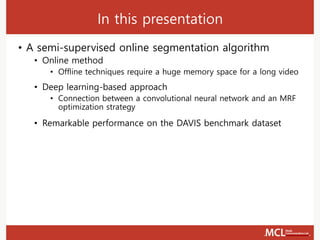

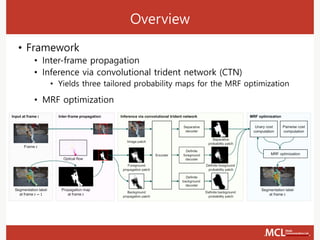

These slides present a semi-supervised online video object segmentation algorithm that uses a convolutional trident network to segment the primary object in a video with minimal user interaction. They discuss the challenges of video object segmentation and outline the network architecture, including its decoders and optimization strategies. Experimental results on multiple datasets, including the DAVIS benchmark, are reported to show improvements in both segmentation accuracy and processing speed.

![Related works: object proposal-based algorithm](https://image.slidesharecdn.com/170822-171107015546/85/Online-video-object-segmentation-via-convolutional-trident-network-8-320.jpg)

Related works

• Object proposal-based algorithm
  • Generate object proposals in each frame
  • Select object proposals that are similar to the target object

[2015][ICCV][Perazzi] Fully Connected Object Proposals for Video Segmentation

Figure labels: Generated object proposals, Segment track
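
The two steps on this slide (generate proposals in each frame, then keep those similar to the target) can be illustrated with a toy sketch. It assumes masks are stored as sets of (row, col) pixels and uses intersection-over-union with the previous frame's target mask as the similarity measure. Both choices, along with the names `iou` and `select_proposals` and the 0.5 threshold, are illustrative assumptions and not the fully connected formulation of Perazzi et al.

```python
def iou(mask_a, mask_b):
    """Intersection-over-union of two masks given as sets of (row, col) pixels."""
    union = mask_a | mask_b
    if not union:
        return 0.0
    return len(mask_a & mask_b) / len(union)


def select_proposals(proposals, target_mask, threshold=0.5):
    """Keep proposals whose IoU with the target mask reaches the threshold.

    Returns (index, score) pairs sorted from most to least similar.
    The threshold value is an assumption made for this sketch.
    """
    scored = [(i, iou(p, target_mask)) for i, p in enumerate(proposals)]
    kept = [(i, s) for i, s in scored if s >= threshold]
    return sorted(kept, key=lambda pair: pair[1], reverse=True)


# Hypothetical data: the target occupies a 2x2 block in the previous frame.
target = {(0, 0), (0, 1), (1, 0), (1, 1)}
proposals = [
    {(0, 0), (0, 1), (1, 0), (1, 1), (1, 2)},  # mostly overlaps the target
    {(5, 5), (5, 6)},                          # unrelated region
    {(0, 0), (0, 1), (1, 0), (1, 1)},          # exact match
]
selected = select_proposals(proposals, target)
print(selected)
```

Linking the selected proposals across frames would then give the segment track shown on the slide; that linking step is left out of this sketch.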

![Related works: superpixel-based algorithm](https://image.slidesharecdn.com/170822-171107015546/85/Online-video-object-segmentation-via-convolutional-trident-network-9-320.jpg)

Related works

• Superpixel-based algorithm
  • Over-segment each frame into superpixels
  • Trace the target object based on the inter-frame matching

[2015][CVPR][Wen] Joint Online Tracking and Segmentation

Figure labels: Inter-frame matching, Segment track
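
As a rough illustration of tracing a target through inter-frame matching, the sketch below assumes each superpixel has already been summarized by a small feature vector (mean colour plus centroid) and copies labels from the nearest superpixel in the previous frame. The feature layout, the Euclidean distance, and the names `nearest_superpixel` and `propagate_labels` are assumptions for this example; the method of Wen et al. is more elaborate.

```python
import math


def nearest_superpixel(feature, candidates):
    """Return the index of the candidate feature closest in Euclidean distance."""
    distances = [math.dist(feature, c) for c in candidates]
    return distances.index(min(distances))


def propagate_labels(prev_features, prev_labels, curr_features):
    """Give each current superpixel the label of its best match in the previous frame."""
    return [prev_labels[nearest_superpixel(f, prev_features)] for f in curr_features]


# Hypothetical features: (mean R, mean G, mean B, centroid x, centroid y), all in [0, 1].
prev_features = [
    (0.9, 0.1, 0.1, 0.50, 0.50),  # red object superpixel
    (0.1, 0.1, 0.8, 0.20, 0.20),  # blue background superpixel
]
prev_labels = [1, 0]  # 1 = target object, 0 = background

curr_features = [
    (0.10, 0.10, 0.80, 0.22, 0.20),  # background, slightly shifted
    (0.88, 0.12, 0.10, 0.55, 0.52),  # object, moved a little
    (0.85, 0.10, 0.15, 0.60, 0.50),  # second object superpixel
]
curr_labels = propagate_labels(prev_features, prev_labels, curr_features)
print(curr_labels)
```

The over-segmentation step itself is not shown; any superpixel algorithm could supply the features used here.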

![Network architecture: implementation issues in decoders](https://image.slidesharecdn.com/170822-171107015546/85/Online-video-object-segmentation-via-convolutional-trident-network-23-320.jpg)

Network architecture

• Implementation issues in decoders
  • Prediction layer
    • Sigmoid layer is used to yield normalized outputs within [0, 1]
    • Rectified linear unit (ReLU) + Batch normalization
  • Kernel size
    • 3 × 3 kernels are used in the prediction layers
    • 5 × 5 kernels are used in the other convolution layers
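
To make the layer choices above concrete, here is a minimal pure-Python sketch that chains a 5 × 5 convolution with ReLU and a 3 × 3 prediction convolution with a sigmoid. It is not the authors' implementation. Stride 1 and zero padding of (k - 1) // 2 are assumptions (the slide does not state them), batch normalization is omitted because it needs learned statistics, and the constant kernels, the input values, and the helper names are hypothetical.

```python
import math


def sigmoid(x):
    """Squash a real value into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def relu(x):
    """Rectified linear unit: negative values are clipped to zero."""
    return max(0.0, x)


def conv2d_same(image, kernel):
    """Stride-1 2-D cross-correlation with zero padding of (k - 1) // 2.

    The padding keeps the output the same size as the input; this is an
    assumption of the sketch, not something stated in the slides.
    """
    k = len(kernel)
    pad = (k - 1) // 2
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            total = 0.0
            for i in range(k):
                for j in range(k):
                    rr, cc = r + i - pad, c + j - pad
                    if 0 <= rr < height and 0 <= cc < width:
                        total += image[rr][cc] * kernel[i][j]
            out[r][c] = total
    return out


def apply_elementwise(fn, feature_map):
    """Apply a scalar function to every entry of a 2-D feature map."""
    return [[fn(v) for v in row] for row in feature_map]


# Hypothetical 4x4 input and constant kernels, for illustration only.
image = [
    [1.0, -2.0, 0.5, 0.0],
    [0.0, 3.0, -1.0, 2.0],
    [-0.5, 1.5, 0.0, 1.0],
    [2.0, 0.0, -3.0, 0.5],
]
hidden_kernel = [[0.04] * 5 for _ in range(5)]       # 5x5: non-prediction layer
predict_kernel = [[1.0 / 9.0] * 3 for _ in range(3)]  # 3x3: prediction layer

hidden = apply_elementwise(relu, conv2d_same(image, hidden_kernel))
prob_map = apply_elementwise(sigmoid, conv2d_same(hidden, predict_kernel))
```

Every entry of `prob_map` lies strictly between 0 and 1, which is what allows the prediction layer's output to be read as a per-pixel probability.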