For the full video of this presentation, please visit: https://www.edge-ai-vision.com/2025/06/oaax-one-standard-for-ai-vision-on-any-compute-platform-a-presentation-from-network-optix/

Robin van Emden, Senior Director of Data Science at Network Optix, presents the “OAAX: One Standard for AI Vision on Any Compute Platform” tutorial at the May 2025 Embedded Vision Summit.

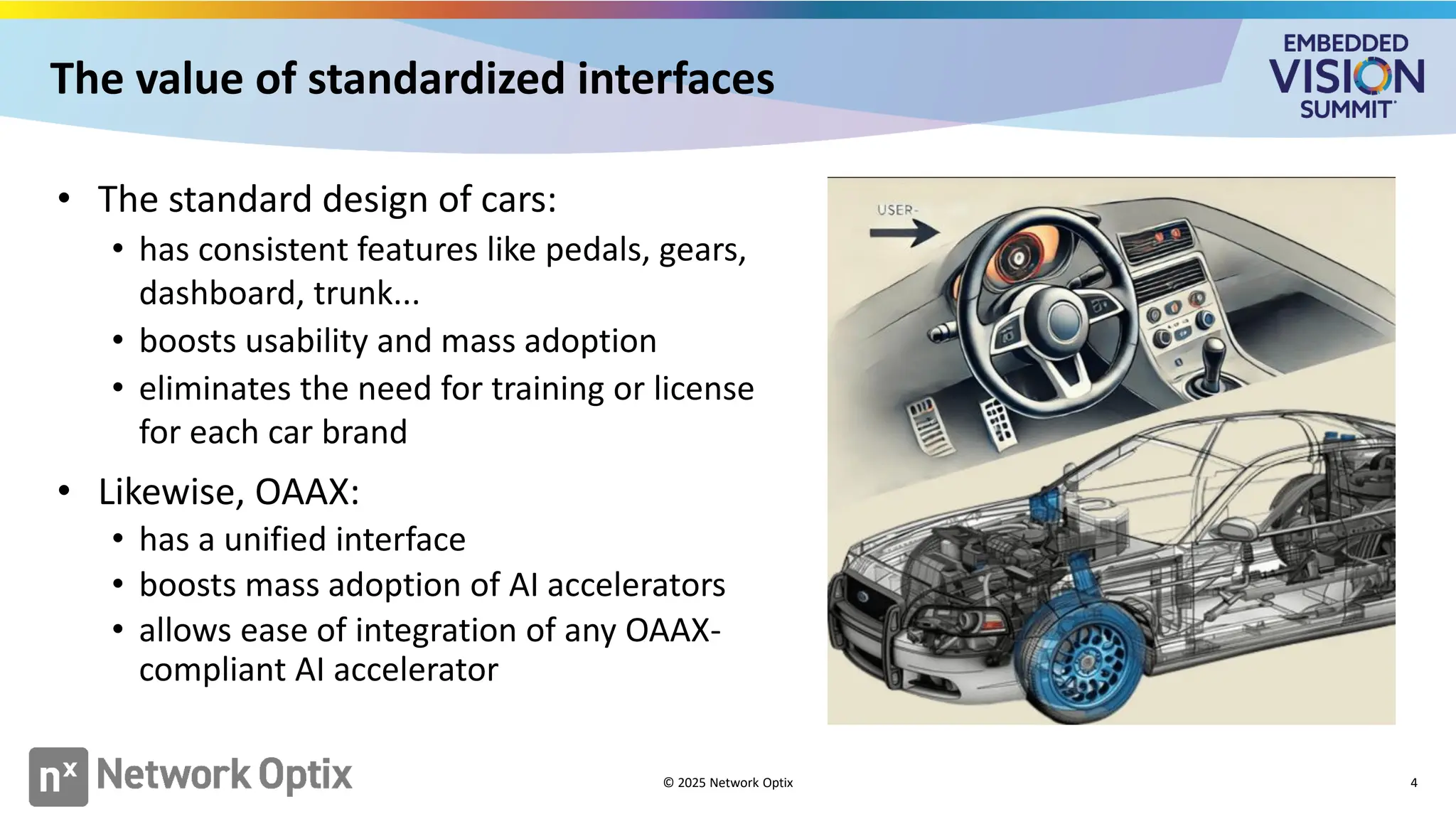

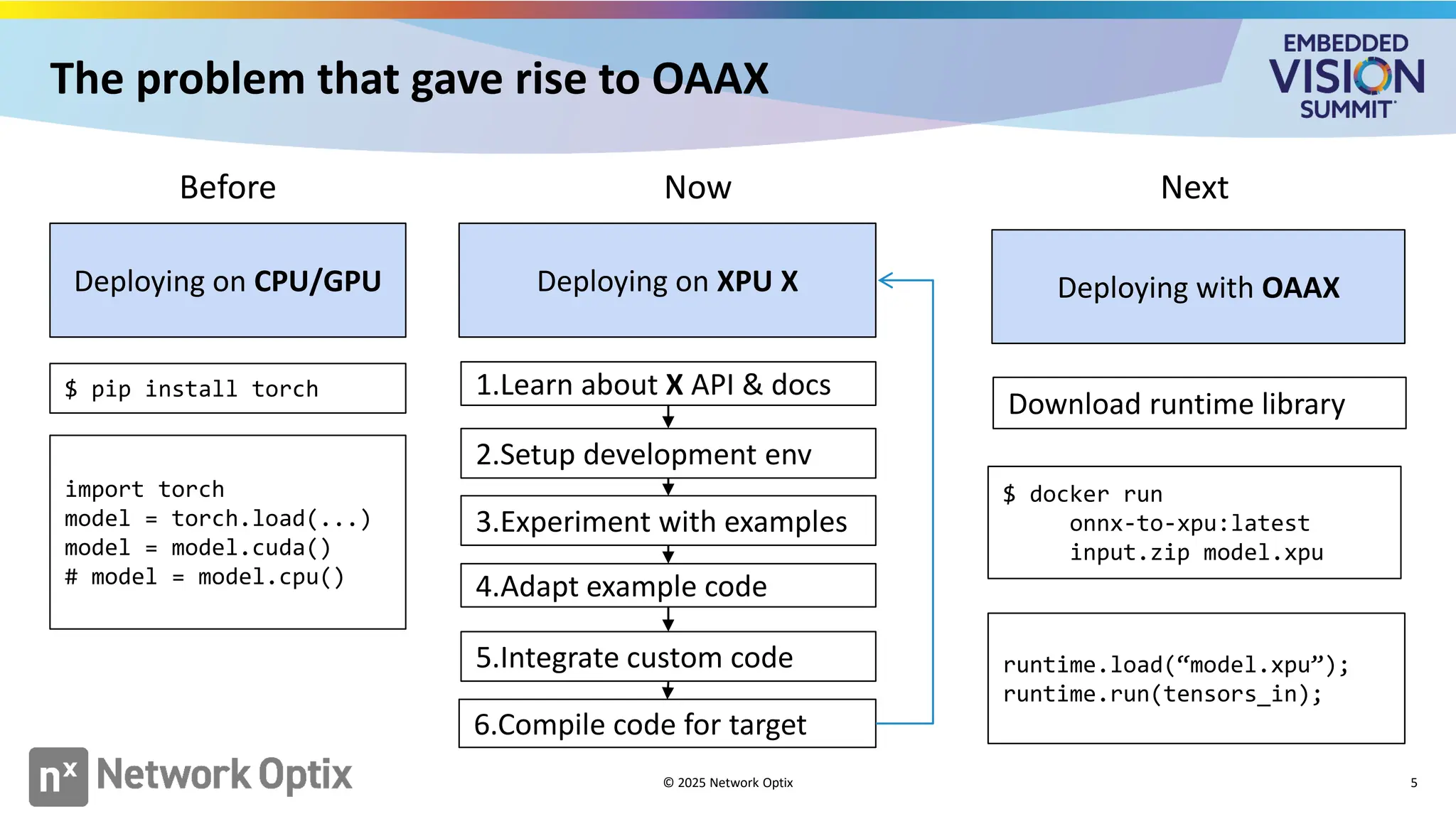

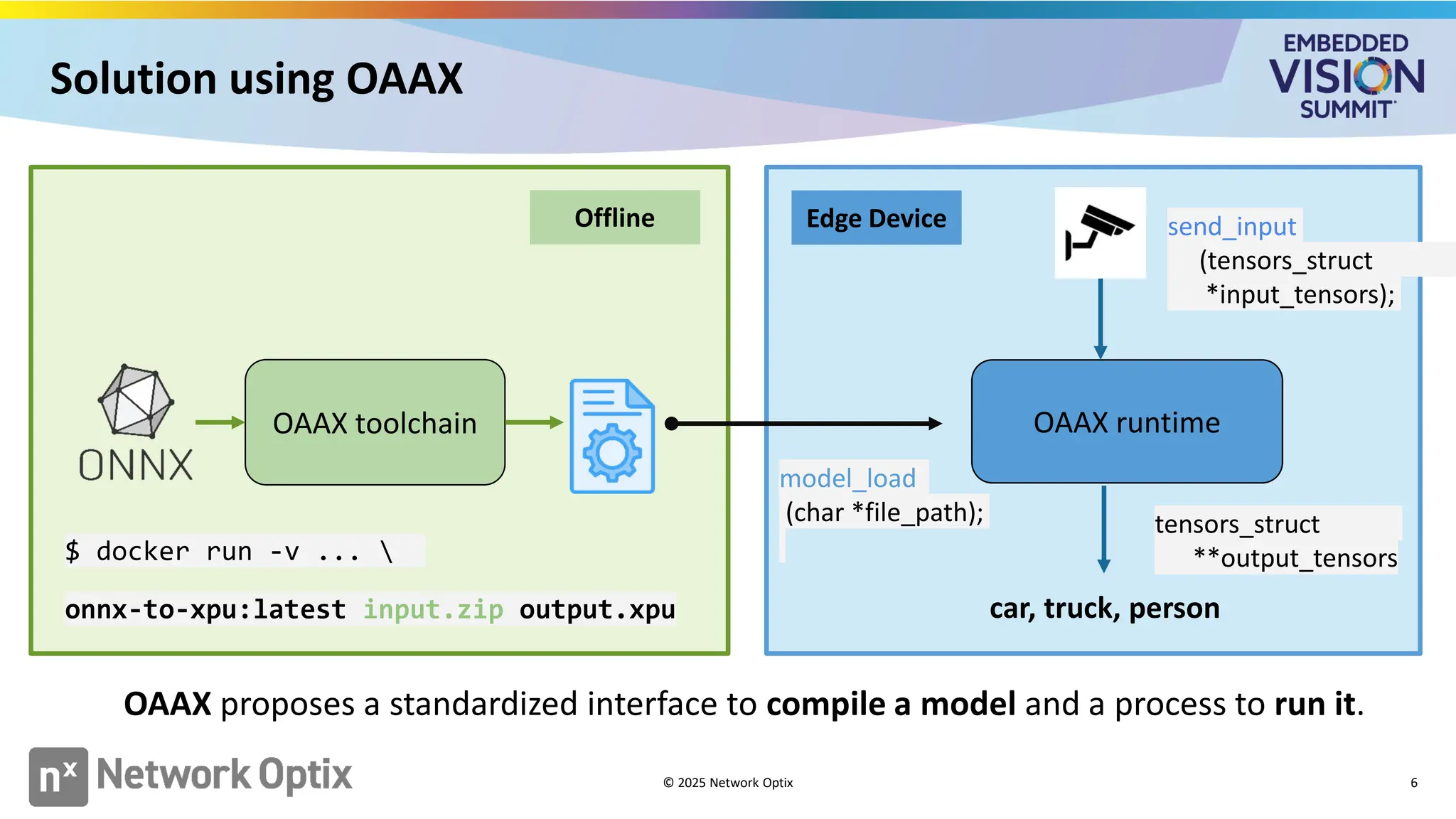

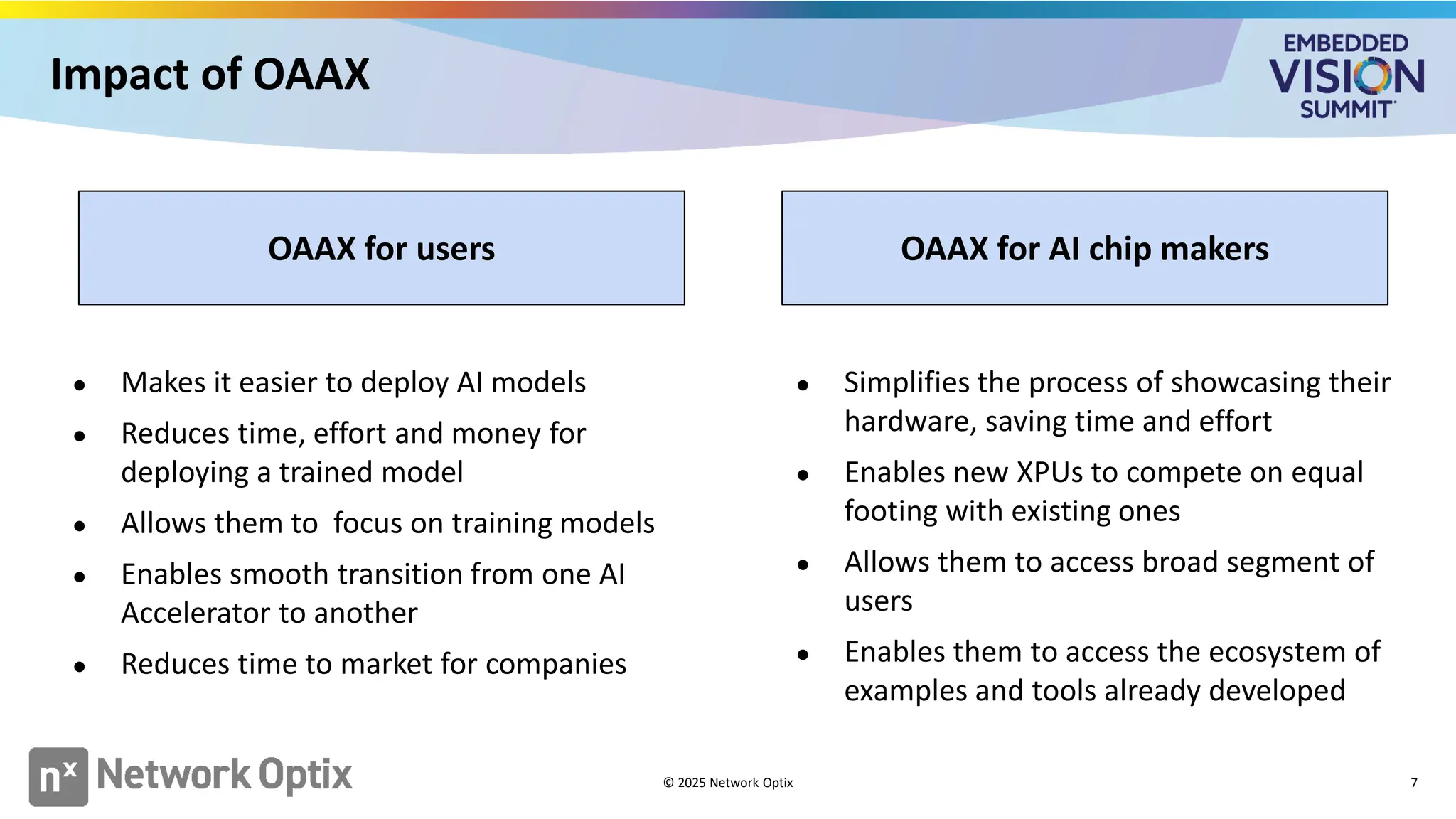

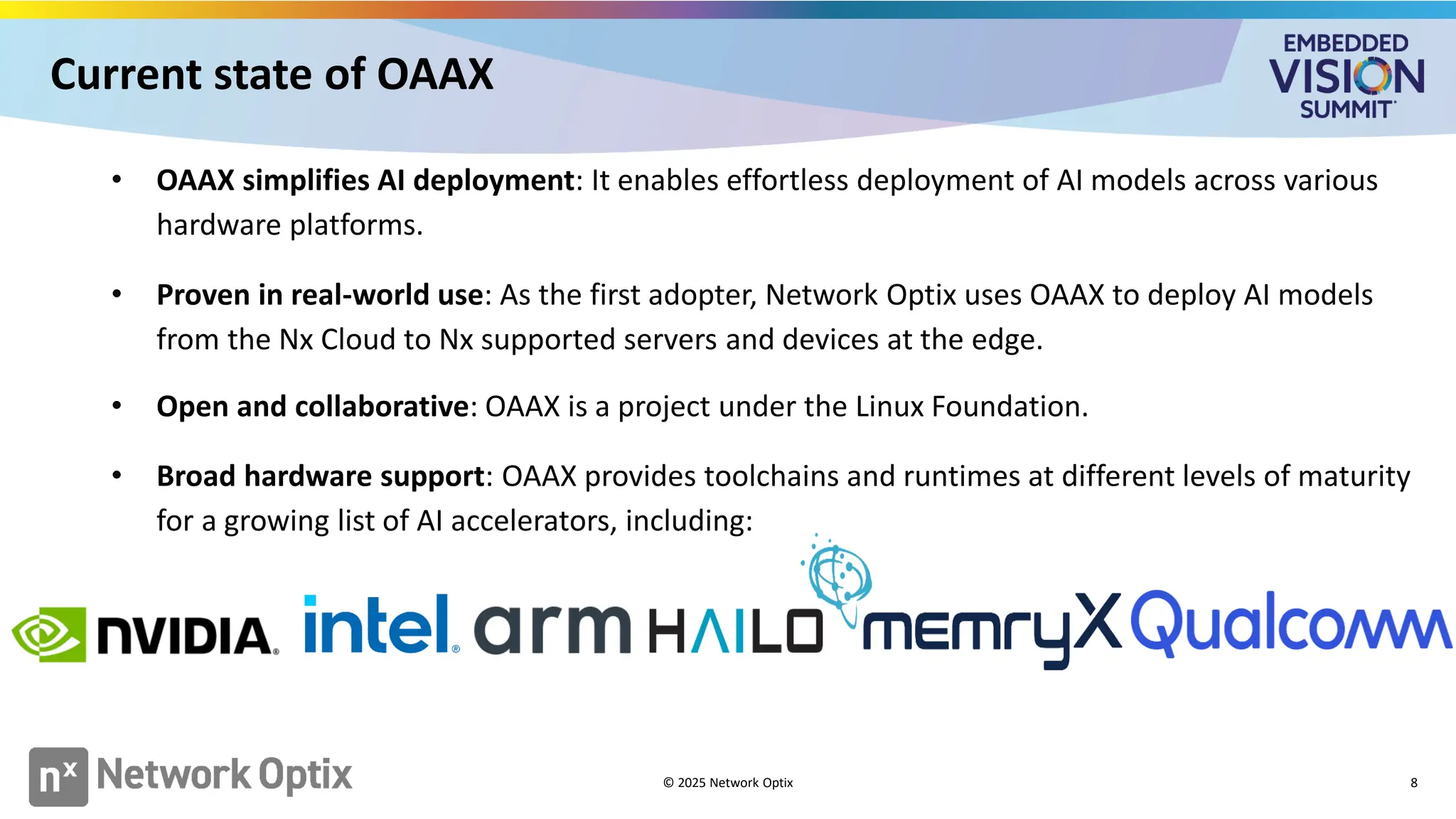

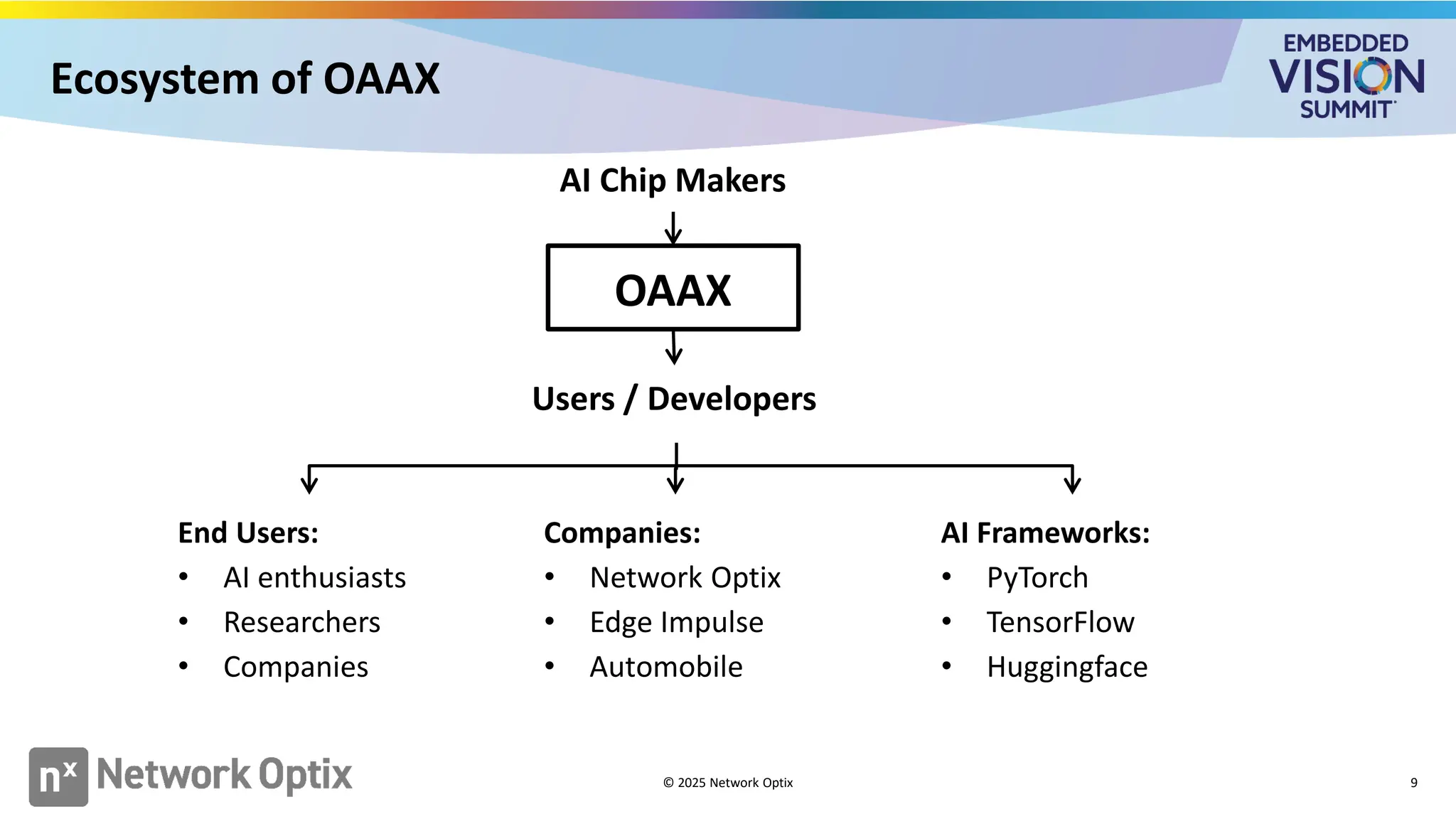

OAAX, an open accelerator compatibility standard being developed under the Linux Foundation AI & Data project, is key to making AI vision truly hardware-agnostic. In this talk, van Emden highlights recent progress and his company’s vision for seamless interoperability across CPUs, XPUs and GPUs. He also explores how developers and processor suppliers can benefit from and contribute to the standard. Most importantly, he discusses how contributors can help shape OAAX to ensure its growth and widespread adoption.

By fostering a strong community, OAAX has the potential to become a widely accepted industry standard, enabling AI models to run efficiently across diverse hardware without vendor lock-in. As AI vision continues to evolve, open collaboration is essential for driving innovation, simplifying development and expanding accessibility. Join Network Optix in shaping the future of AI acceleration.

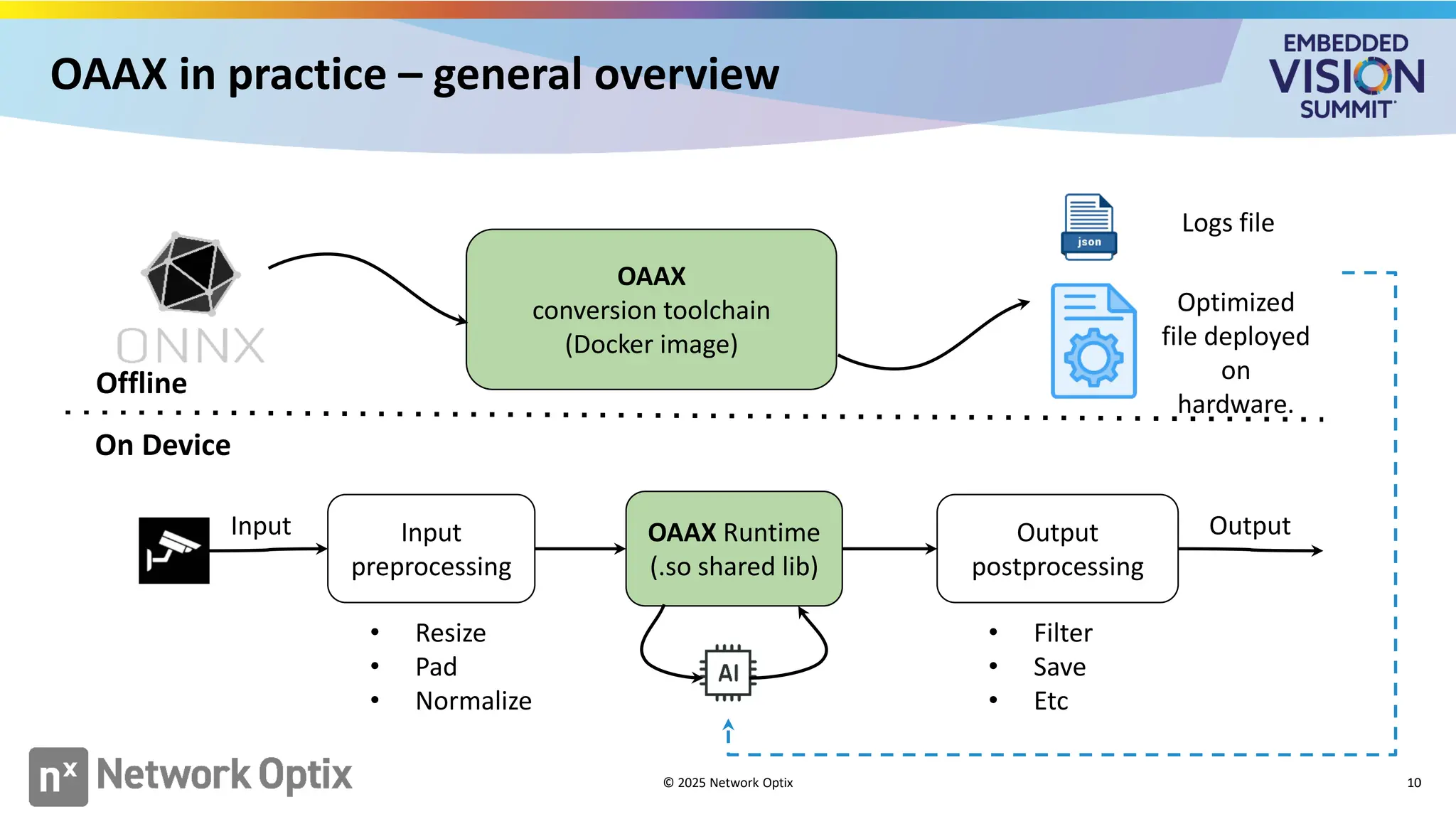

![OAAX from a user perspective (slide 11, © 2025 Network Optix)](https://image.slidesharecdn.com/e1r04vanemdennetworkoptix2025-250602130816-fa7f49bf/75/OAAX-One-Standard-for-AI-Vision-on-Any-Compute-Platform-a-Presentation-from-Network-Optix-11-2048.jpg)

User trains and exports model to ONNX:

```python
input_tensor = torch.rand((1,3,256,256));
model = MyModel();
torch.onnx.export(model, input_tensor,
                  "model.onnx", input_names=["input"])
```

Compile ONNX to the XPU format:

```shell
$ docker pull onnx-to-xpu:latest
$ docker run onnx-to-xpu:latest input.onnx model.xpu
```

Run the model on the XPU:

```cpp
int main() {
    runtime = load_runtime("./xpu.so");
    runtime.runtime_initialization();
    runtime.runtime_load("./model.xpu");
    while (true) {
        runtime.send_input(input);
        tensors_struct output;
        runtime.receive_output(output);
    }
    runtime.runtime_destruction();
    return 0;
}
```
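The run step on the slide follows a fixed call order: initialize the runtime, load the compiled model, loop over send and receive, then tear down. The sketch below mimics only that order in plain Python so it can be tried without any accelerator. `MockRuntime` and `run_frames` are hypothetical names invented for this illustration, the "inference" is a placeholder that averages the input values, and `receive_output` returns a value rather than filling an output struct as on the slide. None of this is the actual OAAX runtime API.

```python
from collections import deque


class MockRuntime:
    """Hypothetical in-memory stand-in for an accelerator runtime library."""

    def __init__(self):
        self.initialized = False
        self.model_path = None
        self._pending = deque()  # outputs waiting to be collected

    def runtime_initialization(self):
        self.initialized = True

    def runtime_load(self, model_path):
        if not self.initialized:
            raise RuntimeError("initialize the runtime before loading a model")
        self.model_path = model_path

    def send_input(self, tensor):
        if self.model_path is None:
            raise RuntimeError("load a model before sending input")
        # Placeholder "inference" (an assumption): the mean of the input values.
        self._pending.append({"mean": sum(tensor) / len(tensor)})

    def receive_output(self):
        # Returns the oldest pending output, or None when nothing is ready.
        return self._pending.popleft() if self._pending else None

    def runtime_destruction(self):
        self._pending.clear()
        self.model_path = None
        self.initialized = False


def run_frames(runtime, model_path, frames):
    """Drive the runtime through the same call order as the slide."""
    runtime.runtime_initialization()
    runtime.runtime_load(model_path)
    results = []
    try:
        for frame in frames:
            runtime.send_input(frame)
            output = runtime.receive_output()
            if output is not None:
                results.append(output)
    finally:
        # Release the runtime even if a frame raises.
        runtime.runtime_destruction()
    return results


if __name__ == "__main__":
    print(run_frames(MockRuntime(), "./model.xpu", [[0.0, 1.0], [2.0, 4.0]]))
```

Because the application code only touches the five lifecycle calls, swapping the mock for a different backend would not change `run_frames`, which is the kind of hardware independence the slide is illustrating.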