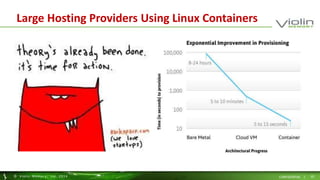

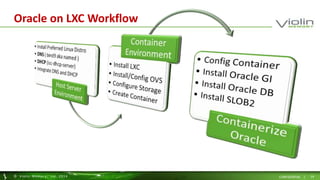

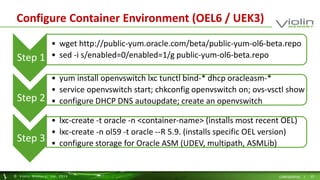

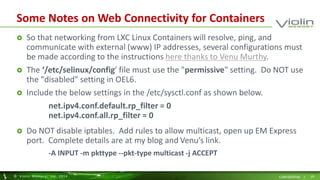

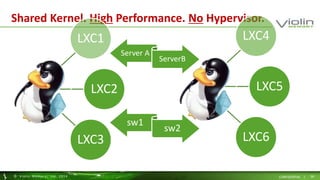

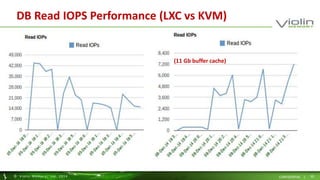

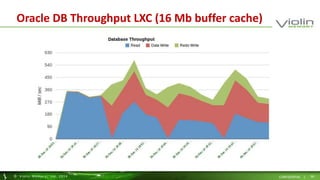

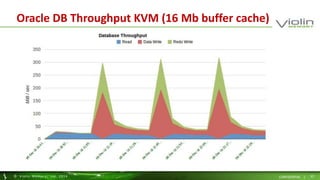

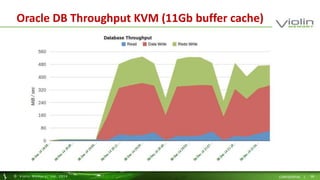

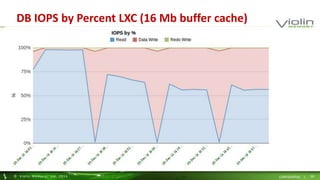

The document discusses building Oracle databases on Linux Containers (LXC). It provides instructions for setting up an LXC environment on Oracle Linux 6.5, configuring Open vSwitch networking and containers, and deploying Oracle databases within the containers. Performance tests show that Oracle databases in LXC containers achieve performance comparable to virtual machines while using fewer resources and being easier to migrate. Large companies have adopted LXC containers for improved efficiency and manageability.

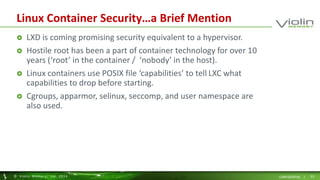

![Building Oracle on LXC in OEL UEK[2,3] Kernels (References)

Wim Coekaerts' Blog (October 9, 2011)

Getting LXC, Open vSwitch, and Btrfs from the beta repo.

Oracle VM templates can be converted to containers.

Converting Oracle VM templates to Linux Containers

Oracle® Linux Administrator's Solutions Guide for Release 6

Chapter 9 Linux Containers

Chapter 10 Docker

However, it is best to use UEK3 kernels.

The 3.12 kernel provides unified container support. This means that on 3.12, LXC, OpenVZ, Parallels, ...

© Violin Memory, Inc. 2014 CONFIDENTIAL | 53](https://image.slidesharecdn.com/bdbb5c83-5fa8-4ca6-b8aa-7d4f6084f5a1-141212115331-conversion-gate02/85/nyoug-lxc-december-12-final-53-320.jpg)