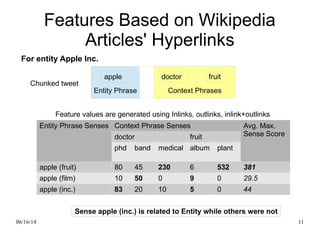

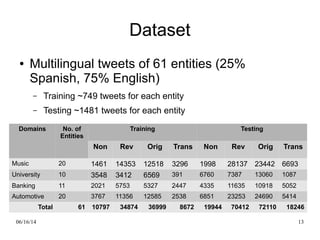

The document discusses the methodology for using Wikipedia to assist in entity name disambiguation in tweets, highlighting the significance of social media feedback for brands. It outlines the process of determining which tweets relate to specific entities, utilizing features like Wikipedia's category-article structure and hyperlink connectivity. The findings indicate that Wikipedia is a strong resource for improving disambiguation accuracy, with plans for future improvements integrating both Wikipedia-based and text-based features.