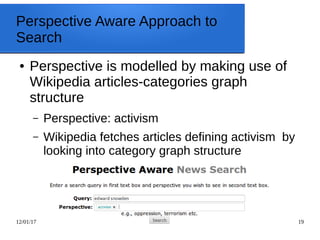

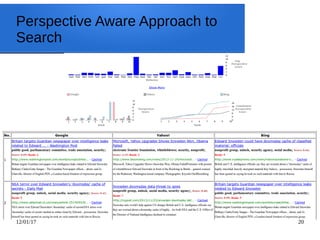

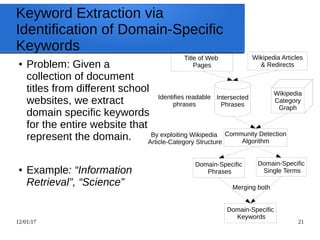

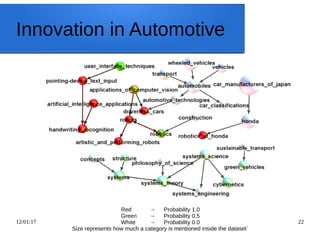

The document discusses the application of text mining and word embeddings, particularly using Wikipedia as a resource for enhancing the understanding and processing of textual data. It outlines challenges such as word ambiguity and the complexity of natural language, while also explaining techniques like phrase chunking and the use of Wikipedia's structured categories for improved semantic relatedness. Additionally, it introduces the perspective-aware approach to search engines and various algorithms for keyword extraction based on domain-specific requirements.

![Python snippet for the usage of the WikiMadeEasy API](https://image.slidesharecdn.com/textminingwordembeddingswikipedia-170112154046/85/Text-mining-word-embeddings-wikipedia-23-320.jpg)

Python Snippet for the Usage of the WikiMadeEasy API (slide dated 12/01/17)

- `wiki_client = Wiki_client_service()`
- `print(wiki_client.process(['isTitle', 'business', 0]))`
- `print(wiki_client.process(['isPerson', 'albert einstein', 0]))`
- `print(wiki_client.process(['mentionInCategories', 'data mining', 0]))`
- `print(wiki_client.process(['containsArticles', 'business', 0]))`
- `print(wiki_client.process(['matchesCategories', 'pakistan', 0]))`
- `print(wiki_client.process(['matchesArticles', 'computer science', 0]))`
- `print(wiki_client.process(['getWikiOutlinks', 'pagerank', 0]))`
- `print(wiki_client.process(['getWikiInlinks', 'google', 0]))`
- `print(wiki_client.process(['getExtendedAbstract', 'pakistan', 0]))`
- `print(wiki_client.process(['getSubCategory', 'science', 0]))`
- `print(wiki_client.process(['getSuperCategory', 'science', 0]))`
- `graph_dict = wiki_client.process(['getSubtoSuperCategoryGraph', ['information_science', 'sociology'], 2])`
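
The calls on this slide all share one shape: a list holding a command name, an argument, and a trailing integer, passed to `process`. As a rough illustration of how such a command-dispatch interface might behave, the sketch below uses a hypothetical `ToyWikiClient` with invented category data. It is not the WikiMadeEasy implementation, and reading the trailing integer as a traversal depth is an assumption drawn only from the `getSubtoSuperCategoryGraph` call.

```python
from collections import deque

# Toy category data, invented for illustration; this is NOT real Wikipedia data.
TOY_SUPER_CATEGORIES = {
    "information_science": ["science", "information"],
    "sociology": ["social_sciences"],
    "social_sciences": ["science"],
    "science": ["knowledge"],
}


class ToyWikiClient:
    """Hypothetical stand-in that mimics the process([command, argument, n]) call shape."""

    def __init__(self, super_categories):
        self._super = super_categories
        # Dispatch table: command name -> handler method.
        self._handlers = {
            "getSuperCategory": self._get_super_category,
            "getSubtoSuperCategoryGraph": self._get_sub_to_super_graph,
        }

    def process(self, request):
        command, argument, n = request
        handler = self._handlers.get(command)
        if handler is None:
            raise ValueError(f"unknown command: {command}")
        return handler(argument, n)

    def _get_super_category(self, category, _n):
        # The trailing integer is ignored here (assumption: unused for this command).
        return list(self._super.get(category, []))

    def _get_sub_to_super_graph(self, categories, depth):
        # Breadth-first walk upward; only categories fewer than `depth`
        # levels above a start category get their own entry in the graph.
        graph = {}
        queue = deque((c, 0) for c in categories)
        while queue:
            category, level = queue.popleft()
            if category in graph or level >= depth:
                continue
            parents = list(self._super.get(category, []))
            graph[category] = parents
            queue.extend((p, level + 1) for p in parents)
        return graph


if __name__ == "__main__":
    client = ToyWikiClient(TOY_SUPER_CATEGORIES)
    print(client.process(["getSuperCategory", "science", 0]))
    print(client.process(
        ["getSubtoSuperCategoryGraph", ["information_science", "sociology"], 2]
    ))
```

A dispatch table keeps `process` small and makes unknown commands fail loudly; the real service may be organised quite differently.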