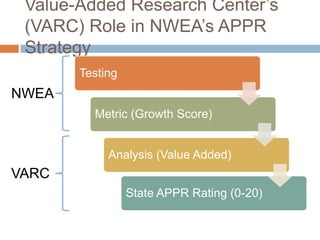

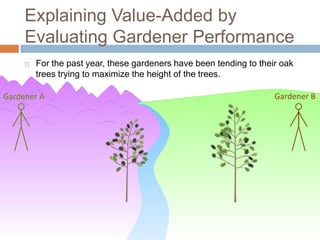

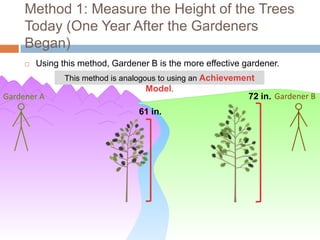

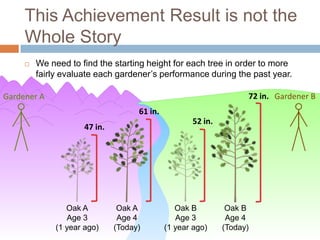

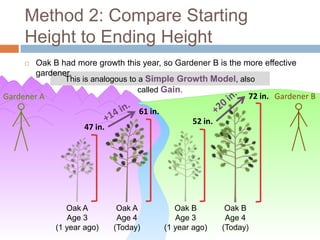

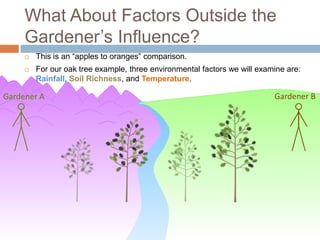

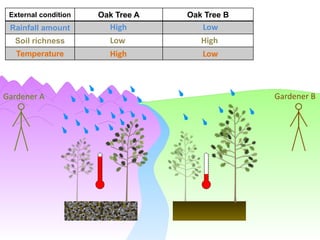

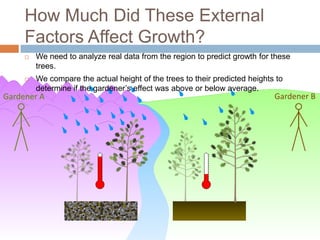

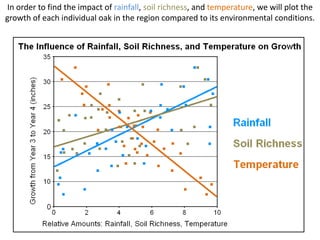

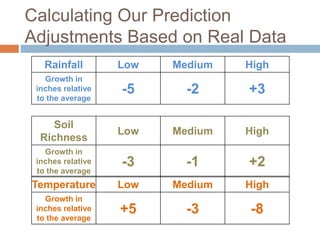

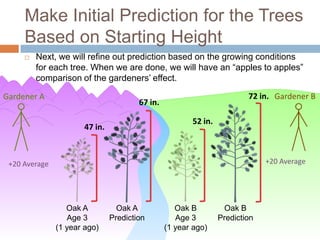

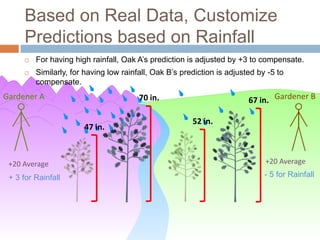

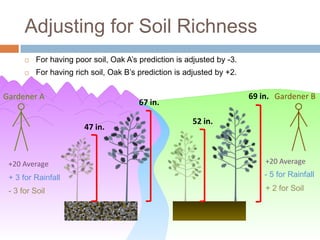

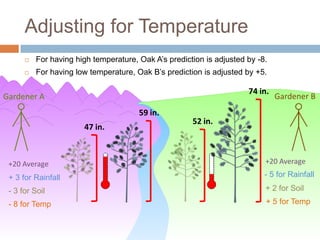

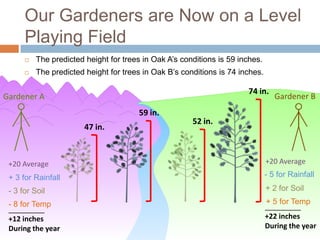

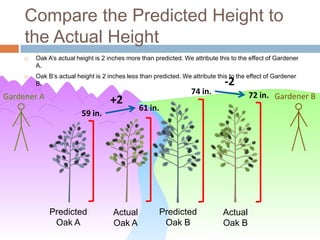

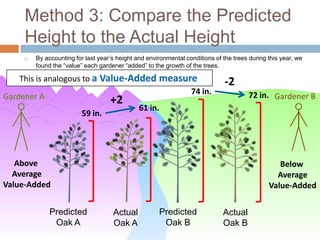

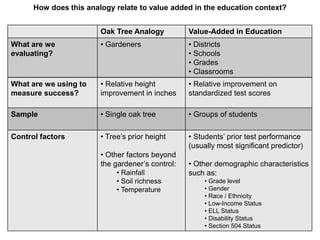

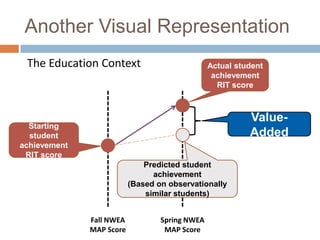

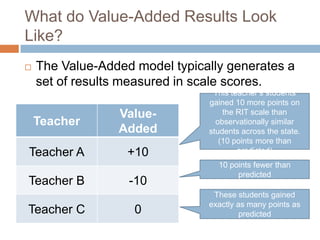

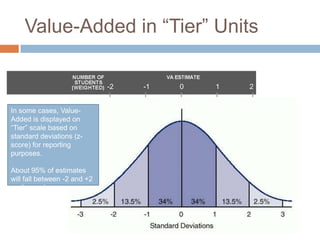

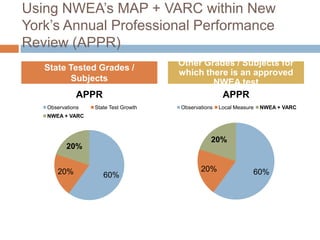

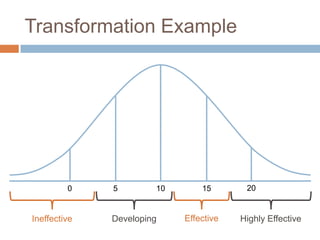

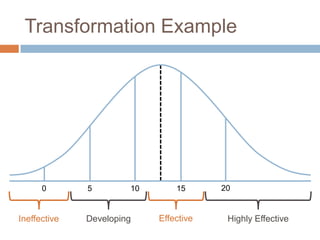

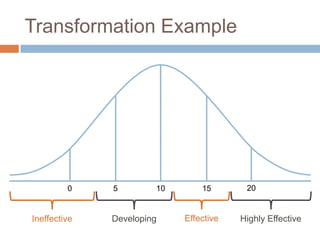

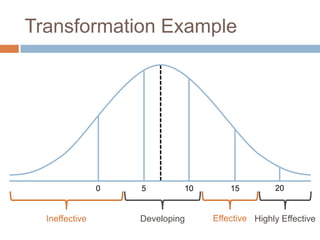

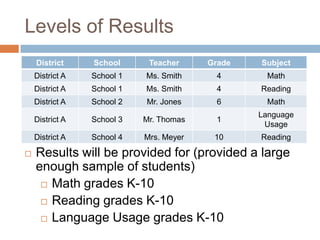

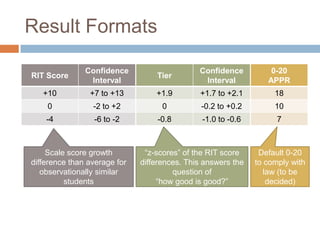

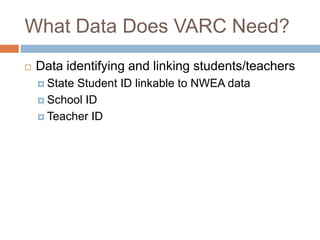

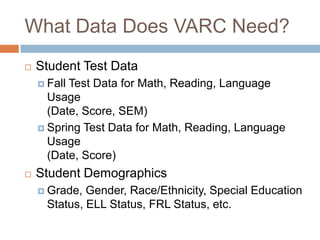

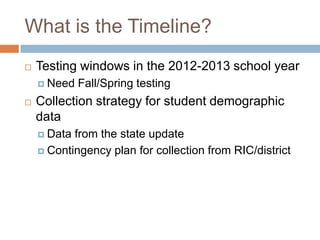

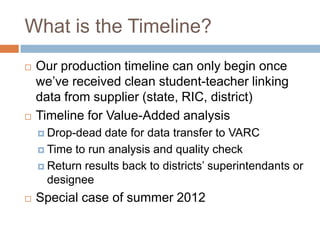

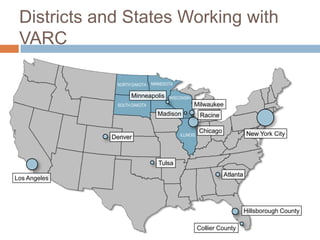

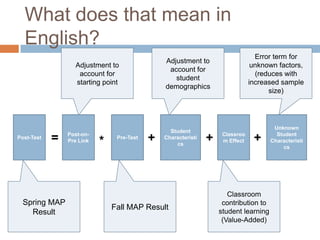

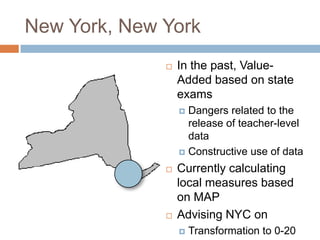

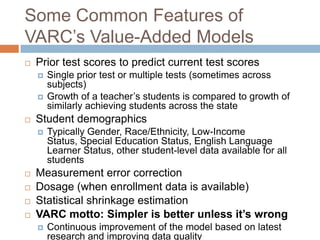

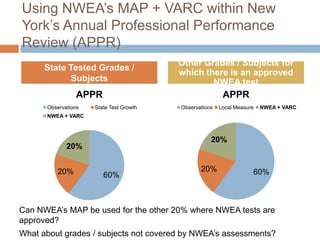

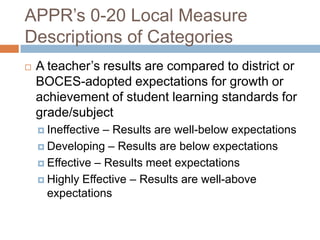

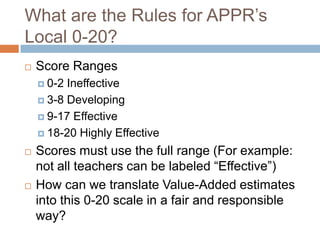

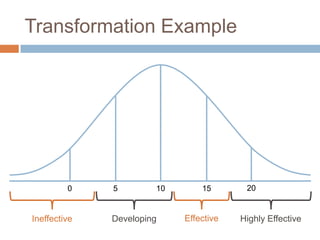

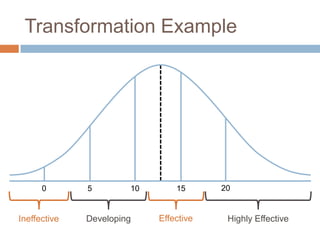

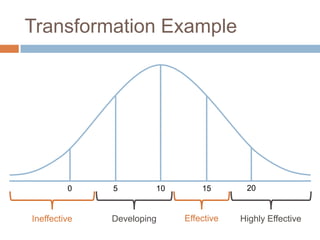

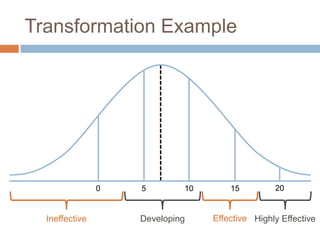

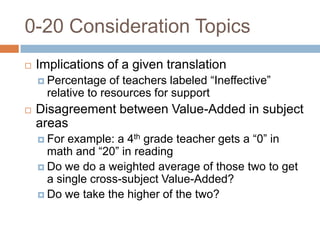

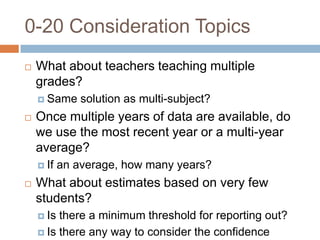

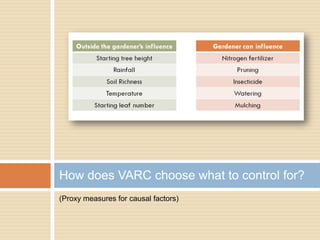

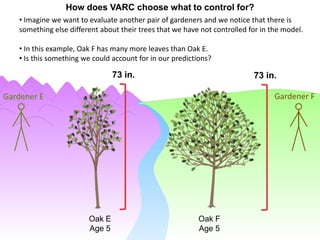

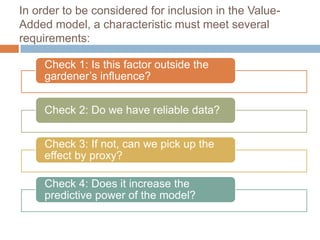

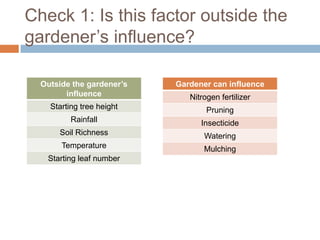

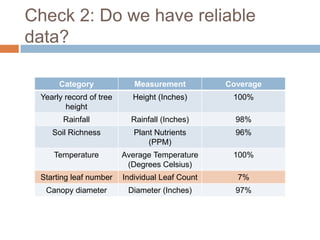

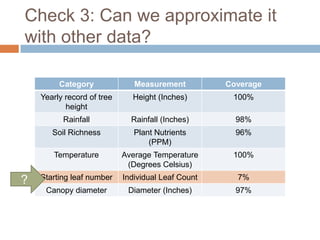

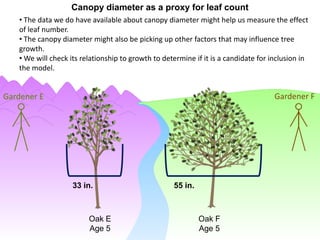

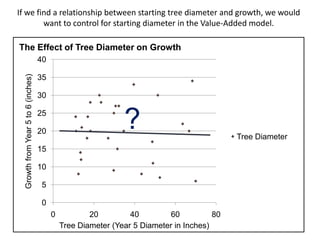

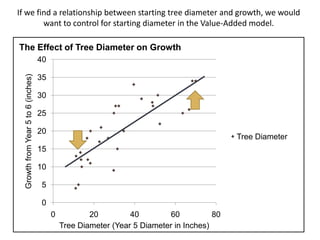

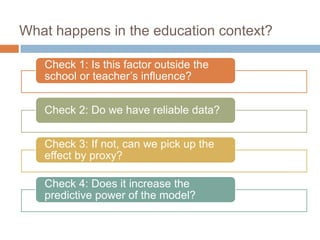

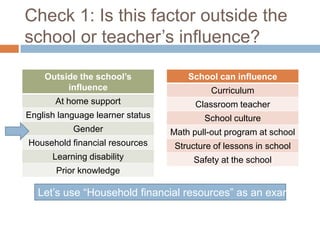

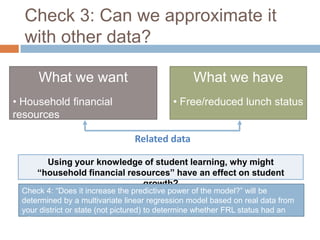

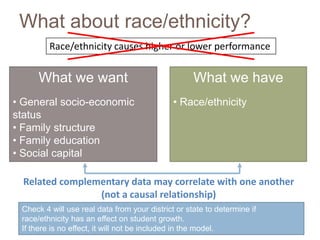

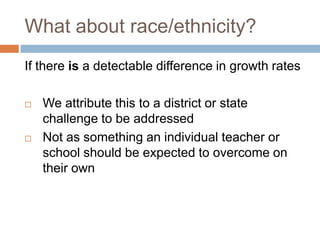

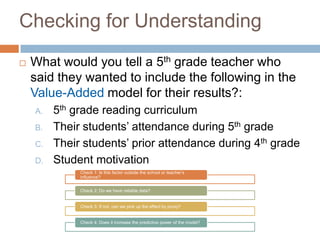

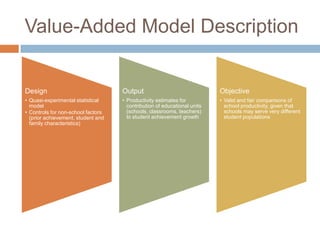

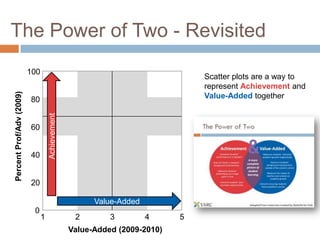

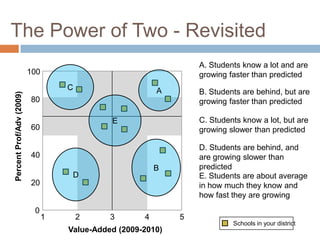

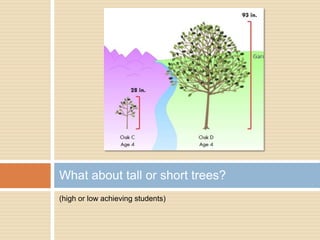

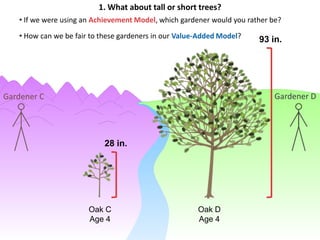

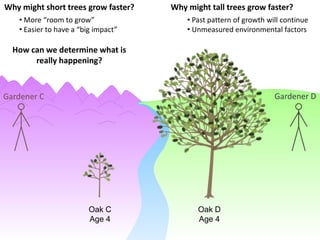

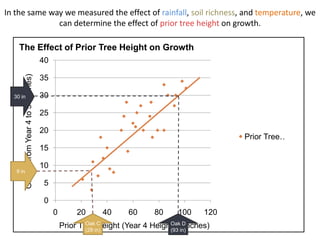

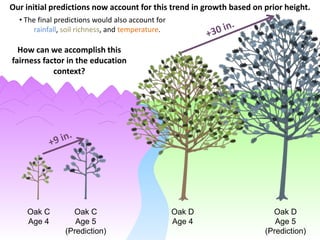

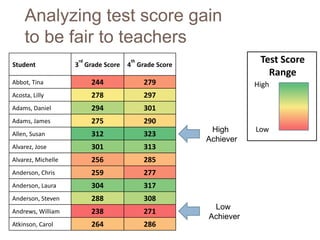

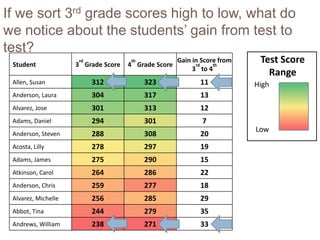

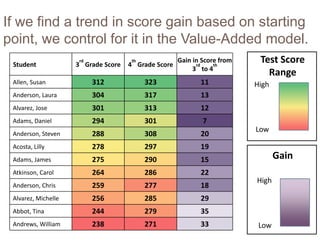

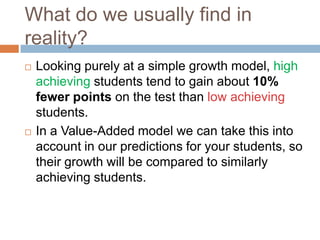

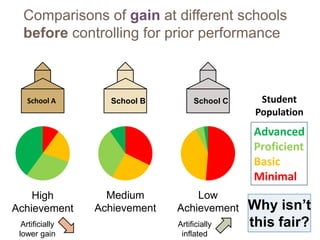

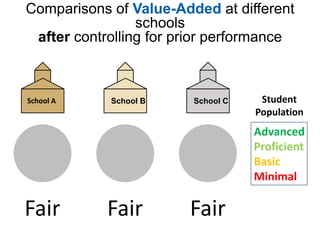

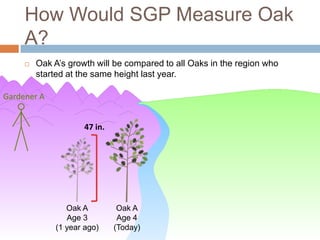

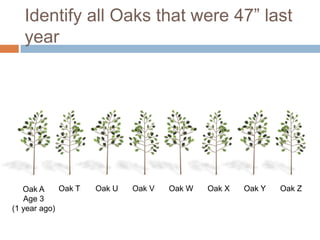

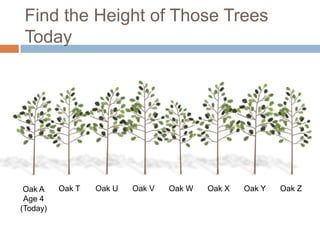

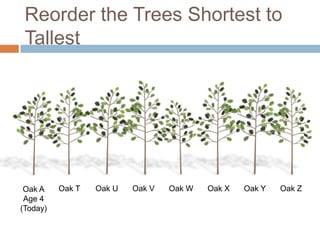

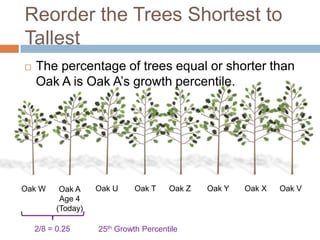

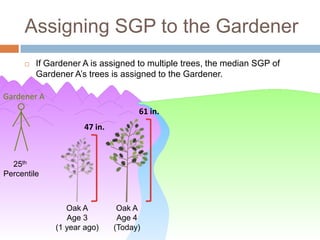

This document discusses using NWEA MAP assessments and the Value-Added Research Center (VARC) within New York's Annual Professional Performance Review (APPR) system. It gives an overview of VARC and of how VARC analyzes student growth data to produce value-added scores for teachers, grades, and schools. It also explains how VARC results could be converted to the 0-20 rating scale that APPR requires. To illustrate the difference between achievement, growth, and value-added models, the document uses an analogy in which gardeners are evaluated on the growth of their oak trees. It also covers technical details such as the data required and the timeline for implementing a value-added system in New York.
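The distinction between achievement, growth, and value-added in the oak tree analogy can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not VARC's actual model. The tree data are made-up numbers, rainfall is assumed to be the only external condition, and a one-variable least-squares line stands in for VARC's much richer statistical model. Here, value-added is each gardener's average of actual growth minus the growth predicted from rainfall.

```python
from statistics import mean

# Hypothetical toy data (not from the source):
# (gardener, start_height_in, end_height_in, rainfall_in)
TREES = [
    ("A", 61, 72, 20),
    ("A", 55, 67, 22),
    ("A", 70, 80, 18),
    ("B", 40, 49, 12),
    ("B", 45, 53, 10),
    ("B", 38, 48, 14),
]


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x with a single predictor."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def summarize(trees):
    """Return achievement, growth, and value-added per gardener."""
    growth = [end - start for _, start, end, _ in trees]
    rain = [t[3] for t in trees]
    # Assumption: expected growth depends only on rainfall, fit across all trees.
    a, b = fit_line(rain, growth)
    results = {}
    for g in sorted({t[0] for t in trees}):
        rows = [(t, gr) for t, gr in zip(trees, growth) if t[0] == g]
        results[g] = {
            # Achievement: where the trees ended up (average final height).
            "achievement": mean(t[2] for t, _ in rows),
            # Growth: average change in height over the period.
            "growth": mean(gr for _, gr in rows),
            # Value-added: average of actual growth minus predicted growth.
            "value_added": mean(gr - (a + b * t[3]) for t, gr in rows),
        }
    return results


if __name__ == "__main__":
    for gardener, stats in summarize(TREES).items():
        print(gardener, stats)
```

With these made-up numbers, gardener A's trees are taller and grew more in raw terms. After accounting for A's heavier rainfall, however, A's value-added comes out slightly below B's. This is the kind of reversal the analogy is meant to illustrate.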