This document describes data from a controlled, multiple case study of software evolution and defects from industrial projects. The study involved 12 software projects developed by 6 programmers across 2 systems. Data was collected on code smells, code changes, defects, task dates and other variables. Code, code smells and evolution data are available online, along with defect reports extracted from issue tracking systems. The goal is to enable further analysis of factors that influence software maintainability.

![Variables and Data Sources (figure from [1])](https://image.slidesharecdn.com/msr17a-171205033929/85/Msr17a-ppt-17-320.jpg)

Moderator variables: system, project context, tasks, programming skill, development technology.

Variables of interest: code smells (number of smells and smell density, both at system and file level).

Maintenance outcomes: defects (system level only), change size and effort (system and file level), maintainability perception (system level only), maintenance problems (system and file level). The figure also lists task dates, marked with a +.

Data sources: source code, Subversion database, Trac (issue tracker), acceptance test reports, daily interviews (audio files/notes), open interviews (audio files/notes), think-aloud sessions (video files/notes), task progress sheets, Eclipse activity logs, study diary.

[1] Yamashita, 2012: “Assessing the capability of code smells to support software maintainability assessments: Empirical inquiry and methodological approach”, PhD Thesis.
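The figure above lists smell density at both file and system level but does not define it. As a minimal sketch, assuming density means smells per 1000 lines of code (an assumption, not the study's stated definition), the two levels could be computed as follows. The `FileRecord` type, its field names, and the sample values are hypothetical and do not come from the dataset.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class FileRecord:
    """Hypothetical per-file record; the field names are illustrative only."""
    path: str
    loc: int          # lines of code in the file
    num_smells: int   # number of code smells detected in the file


def smell_density(num_smells: int, loc: int) -> float:
    """Smells per 1000 lines of code (assumed definition); 0.0 for empty input."""
    if loc <= 0:
        return 0.0
    return 1000.0 * num_smells / loc


def system_smell_density(files: Iterable[FileRecord]) -> float:
    """System-level density: total smells over total lines of code."""
    records = list(files)
    total_loc = sum(f.loc for f in records)
    total_smells = sum(f.num_smells for f in records)
    return smell_density(total_smells, total_loc)


# Made-up sample values, for illustration only.
sample_files = [
    FileRecord("A.java", loc=200, num_smells=3),
    FileRecord("B.java", loc=800, num_smells=1),
]

file_level = {f.path: smell_density(f.num_smells, f.loc) for f in sample_files}
system_level = system_smell_density(sample_files)
```

Aggregating totals before dividing keeps the system-level value from being dominated by small files, which would happen if the per-file densities were simply averaged.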

![Potential usage scenarios](https://image.slidesharecdn.com/msr17a-171205033929/85/Msr17a-ppt-59-320.jpg)

a) Analysis of “repeated defects” in a multiple case study

b) Studies on the impact of different metrics/attributes on software evolution

c) Further studies on inter-smell relations

d) Cost-benefit analysis of code smell removal

e) Benchmarking of diverse tools/methodologies

f) Task/context extraction, alongside ideas by [2]

[2] M. Barnett, et al., “Helping Developers Help Themselves: Automatic Decomposition of Code Review Change-sets,” (ICSE ’15)

![Experimental Replication Applied to Case Study [1]](https://image.slidesharecdn.com/msr17a-171205033929/85/Msr17a-ppt-78-320.jpg)

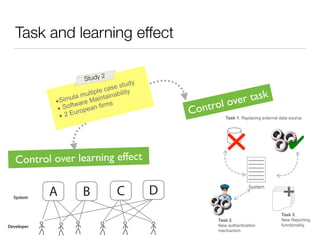

![Experimental Replication Applied to Case Study [1]: literal and theoretical replication](https://image.slidesharecdn.com/msr17a-171205033929/85/Msr17a-ppt-79-320.jpg)

Literal replication (same systems): Case 1 and Case 2 share the same context (same tasks, developers with similar skills, same project setting, same technology) and both work on System A. The code smells are the same, so the maintenance outcomes are expected to be similar (≈).

Theoretical replication (different systems): the context is the same, but the cases work on different systems, with Case 3 on System B. The code smells differ (≠), so the maintenance outcomes are expected to differ (≠).
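The replication design in the figure above can be read as a rule over pairs of cases: with the context held constant, two cases on the same system form a literal replication, and two cases on different systems form a theoretical replication. The following is a minimal sketch of that rule. The `Case` type, its field names, and the sample values are hypothetical; they are not the dataset's schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    """Hypothetical description of one case; field names are illustrative."""
    name: str
    system: str
    tasks: str
    skill_level: str
    project_setting: str
    technology: str


def same_context(a: Case, b: Case) -> bool:
    """True when tasks, developer skill, project setting and technology match."""
    return (
        a.tasks == b.tasks
        and a.skill_level == b.skill_level
        and a.project_setting == b.project_setting
        and a.technology == b.technology
    )


def replication_type(a: Case, b: Case) -> str:
    """Classify a pair of cases following the design in the figure."""
    if not same_context(a, b):
        return "not comparable"   # the design assumes a constant context
    return "literal" if a.system == b.system else "theoretical"


# Made-up sample values, for illustration only.
context = dict(tasks="T1-T3", skill_level="similar",
               project_setting="same", technology="Java")
case1 = Case("Case 1", system="System A", **context)
case2 = Case("Case 2", system="System A", **context)
case3 = Case("Case 3", system="System B", **context)

pair_types = {
    ("Case 1", "Case 2"): replication_type(case1, case2),
    ("Case 1", "Case 3"): replication_type(case1, case3),
}
```

Pairs labelled `literal` are those where similar maintenance outcomes are expected; pairs labelled `theoretical` are those where the outcomes are expected to differ.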

![Experimental Replication Applied to Case Study [1]: literal replication (same systems) versus theoretical replication (different systems)](https://image.slidesharecdn.com/msr17a-171205033929/85/Msr17a-ppt-80-320.jpg)