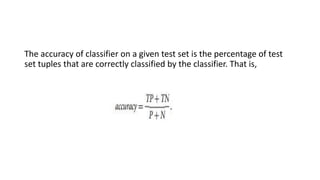

The document discusses metrics for evaluating classifiers: the counts of true positives, true negatives, false positives, and false negatives, and the accuracy derived from them. It also covers confusion matrices, sensitivity (also called recall), specificity, and precision. Finally, it describes holdout validation and cross-validation as methods for estimating classifier performance, and Bayesian belief networks, a classification model that can represent dependencies among attributes.
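
As a rough illustration of how the metrics named above relate to the four confusion-matrix counts, here is a minimal Python sketch. It assumes binary labels with `1` as the positive class. The function names `confusion_counts` and `classification_metrics` and the toy labels are hypothetical and chosen only for this example; they do not come from the document.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, TN, FP, FN for a binary problem (illustrative helper)."""
    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1  # predicted positive, actually positive
            else:
                fp += 1  # predicted positive, actually negative
        else:
            if actual == positive:
                fn += 1  # predicted negative, actually positive
            else:
                tn += 1  # predicted negative, actually negative
    return tp, tn, fp, fn


def classification_metrics(tp, tn, fp, fn):
    """Derive the standard metrics from the four counts."""
    def ratio(num, den):
        # Return NaN instead of raising when a denominator is zero.
        return num / den if den else float("nan")

    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "sensitivity": ratio(tp, tp + fn),  # same quantity as recall
        "specificity": ratio(tn, tn + fp),
        "precision": ratio(tp, tp + fp),
    }


# Toy labels, made up for illustration only.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

counts = confusion_counts(y_true, y_pred)
print(counts)
print(classification_metrics(*counts))
```

Sensitivity and recall are the same ratio, TP / (TP + FN), so the sketch reports it once. In a holdout or cross-validation setting, the same counts would be computed on the held-out portion of the data rather than on the training data.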