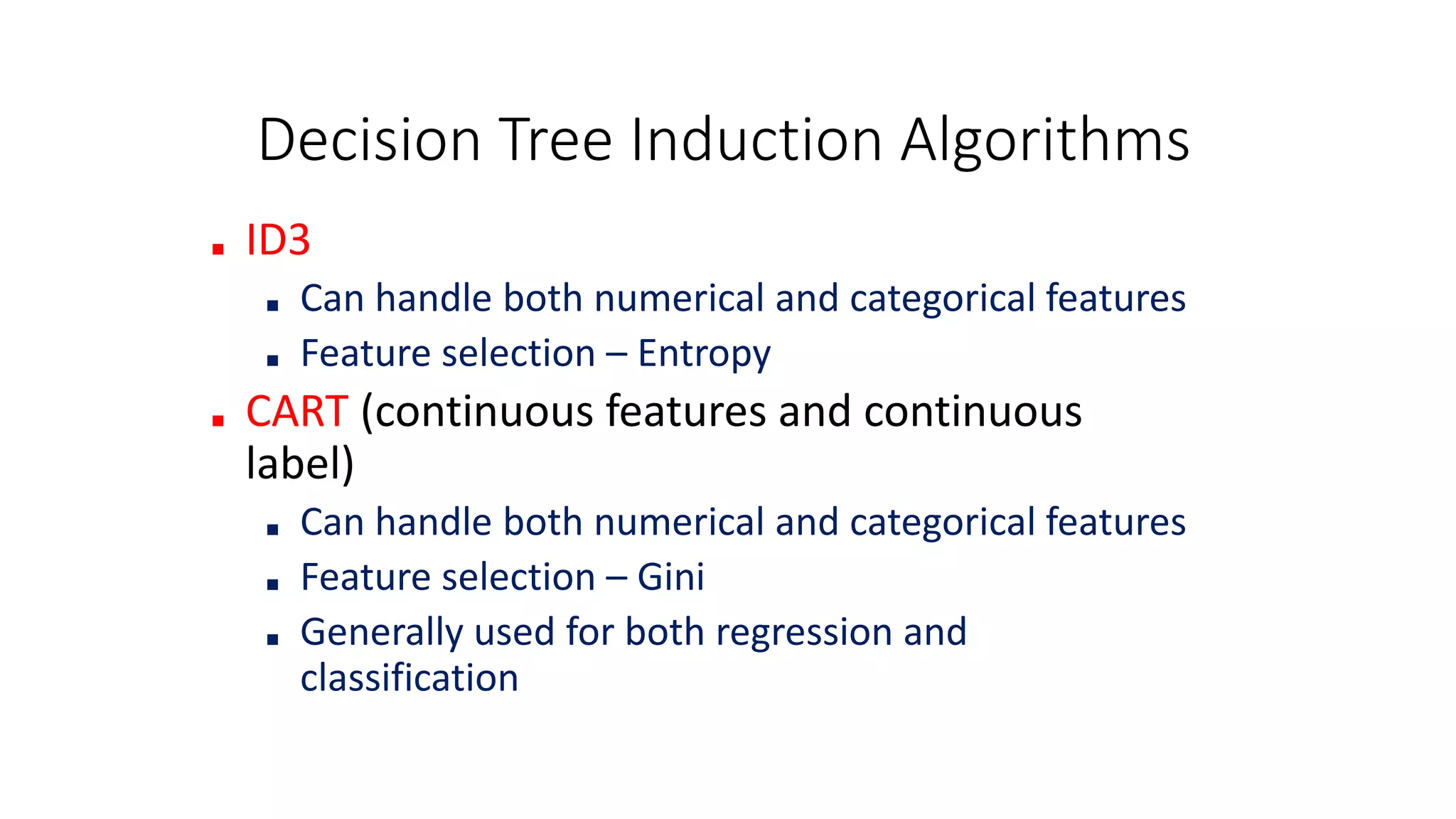

This document discusses decision tree induction algorithms and their splitting criteria. It covers the ID3, CART, and C4.5 algorithms. ID3 splits on information gain, which is based on entropy. CART uses the Gini index, which measures impurity at each node: 0 means the node is pure, and for a two-class problem 0.5 means the classes are evenly mixed (maximally impure). C4.5 improves on ID3 by using the gain ratio, which normalizes information gain to offset its bias toward attributes with many values. The document provides examples of computing the Gini index and the error for different class distributions at a node.

![Measure of Impurity: GINI

• The Gini index is the probability that a randomly chosen example at a node would be misclassified if it were labeled at random according to the class distribution at that node.

• Gini index for a given node t with classes j:

$GINI(t) = 1 - \sum_j [p(j \mid t)]^2$

NOTE: p(j | t) is computed as the relative frequency of class j at node t

](https://image.slidesharecdn.com/mldecisiontree2-230912204721-ad2f2189/75/ML-Decision-Tree_2-pptx-3-2048.jpg)
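The definition on this slide can be sketched in a few lines of Python. This is a minimal illustration, not code from the slides: the function name `gini_from_labels` and the string labels `"C1"` and `"C2"` are assumptions made for the example. It computes p(j | t) as the relative frequency of each class among the examples at the node, as the note on the slide describes.

```python
from collections import Counter


def gini_from_labels(labels):
    """Gini index of a node, given the class labels of the examples at it.

    Hypothetical helper for illustration only.
    """
    n = len(labels)
    if n == 0:
        raise ValueError("node has no examples")
    counts = Counter(labels)
    # p(j | t) is the relative frequency of class j at node t
    return 1.0 - sum((c / n) ** 2 for c in counts.values())


# A node with 5 examples of each of two classes
print(gini_from_labels(["C1"] * 5 + ["C2"] * 5))  # 0.5
```

Working from raw labels rather than precomputed probabilities keeps the relative-frequency step visible; a node holding a single class returns 0.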

![GINI Index : Example

• Example: Two classes C1 & C2, and node t has 5 C1 and 5 C2 examples. Compute Gini(t).

• Gini(t) = 1 – [p(C1|t)² + p(C2|t)²] = 1 – [(5/10)² + (5/10)²]

• = 1 – [¼ + ¼] = ½.

For a two-class problem the Gini index always lies in [0, 0.5]: 0 means the node contains examples of only one class (pure), and 0.5 means the two classes are evenly mixed (maximally impure).](https://image.slidesharecdn.com/mldecisiontree2-230912204721-ad2f2189/75/ML-Decision-Tree_2-pptx-4-2048.jpg)

![More on Gini

• The worst Gini occurs when every class has probability 1/nc, where nc is the number of classes; its value is 1 – 1/nc.

• For 2-class problems the worst Gini will be ½

• How do we get the best Gini? Come up with an example for node t with 10 examples for classes C1 and C2

• 10 C1 and 0 C2

• Now what is the Gini?

• 1 – [(10/10)² + (0/10)²] = 1 – [1 + 0] = 0

• So 0 is the best Gini

• So for 2-class problems:

• Gini varies from 0 (best) to ½ (worst).

](https://image.slidesharecdn.com/mldecisiontree2-230912204721-ad2f2189/75/ML-Decision-Tree_2-pptx-5-2048.jpg)
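The worst-case value stated on this slide follows directly from the Gini definition. As a short worked step, writing $n_c$ for the number of classes and setting every class probability to $1/n_c$:

```latex
GINI_{\text{worst}}
  = 1 - \sum_{j=1}^{n_c} \left(\frac{1}{n_c}\right)^2
  = 1 - n_c \cdot \frac{1}{n_c^2}
  = 1 - \frac{1}{n_c}
```

For $n_c = 2$ this gives ½, matching the two-class bound on the slide. The bound grows with the number of classes (for example, ⅔ for three classes), so the [0, ½] range applies to two-class problems only.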

![Examples for computing GINI

• Node with C1 = 0, C2 = 6: P(C1) = 0/6 = 0, P(C2) = 6/6 = 1

Gini = 1 – P(C1)² – P(C2)² = 1 – 0 – 1 = 0

• Node with C1 = 1, C2 = 5: P(C1) = 1/6, P(C2) = 5/6

Gini = 1 – (1/6)² – (5/6)² = 0.278

• Node with C1 = 2, C2 = 4: P(C1) = 2/6, P(C2) = 4/6

Gini = 1 – (2/6)² – (4/6)² = 0.444](https://image.slidesharecdn.com/mldecisiontree2-230912204721-ad2f2189/75/ML-Decision-Tree_2-pptx-7-2048.jpg)
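The three worked examples on this slide can be checked with a short script. This is a sketch under the assumption that each node is described by a tuple of per-class counts (C1, C2); the helper name `gini_from_counts` is hypothetical and does not come from the slides.

```python
def gini_from_counts(counts):
    """Gini index of a node from its per-class example counts (illustrative helper)."""
    total = sum(counts)
    if total == 0:
        raise ValueError("node has no examples")
    # Each class probability is its count divided by the node total
    return 1.0 - sum((c / total) ** 2 for c in counts)


# (C1, C2) counts for the three nodes on the slide
nodes = [(0, 6), (1, 5), (2, 4)]
for c1, c2 in nodes:
    print(f"C1={c1}, C2={c2}: Gini = {gini_from_counts((c1, c2)):.3f}")
# C1=0, C2=6: Gini = 0.000
# C1=1, C2=5: Gini = 0.278
# C1=2, C2=4: Gini = 0.444
```

The values rise as the class counts become more balanced, which is the behavior an impurity measure should show.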