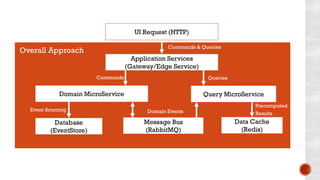

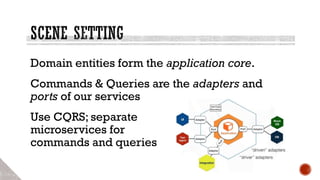

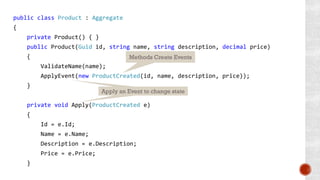

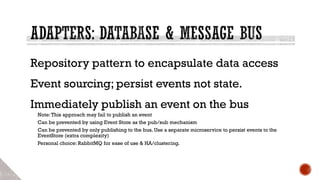

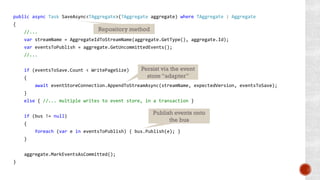

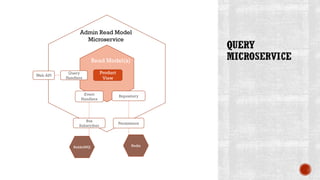

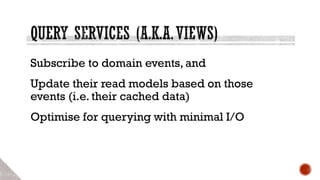

This document outlines the principles of building a microservices architecture, emphasizing flexibility, scalability, and resilience through independently deployable services. It discusses the importance of loose coupling and appropriate communication patterns, and how domain-driven design, event sourcing, and CQRS can improve performance and maintainability. It also covers considerations for testing, deploying, and managing services within a microservices ecosystem.

![[HttpPost]

public IHttpActionResult Post(CreateProductCommand cmd)

{

if (string.IsNullOrWhiteSpace(cmd.Name))

{

var response = new HttpResponseMessage(HttpStatusCode.Forbidden) { //… }

throw new HttpResponseException(response);

}

try

{

var command = new CreateProduct(Guid.NewGuid(), cmd.Name, cmd.Description, cmd.Price);

handler.Handle(command);

var link = new Uri(string.Format("http://localhost:8181/api/products/{0}", command.Id));

return Created<CreateProduct>(link, command);

}

catch (AggregateNotFoundException) { return NotFound(); }

catch (AggregateDeletedException) { return Conflict(); }

}

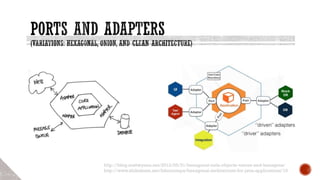

Incoming “adapter”

Pass through to the

internal “port”

Commands either

succeed or throw an error](https://image.slidesharecdn.com/microserviceswith-160805020136/85/Microservices-with-Net-NDC-Sydney-2016-54-320.jpg)
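The adapter/port split on this slide can be exercised without ASP.NET Web API. The following is a minimal, dependency-free sketch under stated assumptions: the port is a hypothetical `ICommandHandler<T>` interface, the adapter returns plain status codes instead of `IHttpActionResult`, and `ProductsAdapter` and `StubHandler` are illustrative names. `CreateProduct` and the two exception types only mirror the slide's names; their real definitions are not shown in the talk.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-ins for the slide's types (real definitions not shown).
public sealed record CreateProduct(Guid Id, string Name, string Description, decimal Price);

public sealed class AggregateNotFoundException : Exception { }
public sealed class AggregateDeletedException : Exception { }

// The internal "port": the application core exposes one handler per command.
public interface ICommandHandler<in TCommand>
{
    void Handle(TCommand command);
}

// The incoming "adapter": turns request data into a command, passes it through
// to the port, and maps success or failure onto an HTTP status code.
public sealed class ProductsAdapter
{
    private readonly ICommandHandler<CreateProduct> handler;

    public ProductsAdapter(ICommandHandler<CreateProduct> handler) => this.handler = handler;

    public (int Status, string Location) Post(string name, string description, decimal price)
    {
        if (string.IsNullOrWhiteSpace(name))
            return (400, null); // invalid input never reaches the core

        var command = new CreateProduct(Guid.NewGuid(), name, description, price);
        try
        {
            handler.Handle(command); // either succeeds or throws
            return (201, "/api/products/" + command.Id);
        }
        catch (AggregateNotFoundException) { return (404, null); }
        catch (AggregateDeletedException) { return (409, null); }
    }
}

// Test double for the port: records commands, or throws a configured exception.
public sealed class StubHandler : ICommandHandler<CreateProduct>
{
    private readonly Exception failure;

    public StubHandler(Exception failure = null) => this.failure = failure;

    public List<CreateProduct> Handled { get; } = new List<CreateProduct>();

    public void Handle(CreateProduct command)
    {
        if (failure != null) throw failure;
        Handled.Add(command);
    }
}
```

Because the adapter depends only on the port interface, a stub is enough to check each status mapping; in a Web API controller the same handler would typically be supplied through constructor injection.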

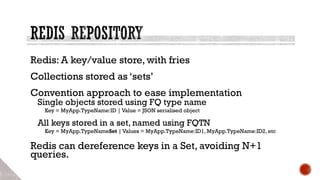

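![Updating the Redis Cache](https://image.slidesharecdn.com/microserviceswith-160805020136/85/Microservices-with-Net-NDC-Sydney-2016-66-320.jpg)

```csharp
// Requires StackExchange.Redis, Newtonsoft.Json and System.Linq.
// InstanceName(), SetName() and Key() are helpers on the same class, not shown on the slide.

public IEnumerable<T> GetAll()
{
    // SORT <set> BY nosort GET <pattern>: skip sorting and, for each member of the
    // set, fetch the string stored at the key formed by replacing * with that member.
    var get = new RedisValue[] { InstanceName() + "*" };
    var result = database.Sort(SetName(), sortType: SortType.Alphabetic, by: "nosort", get: get);

    // Each value is a JSON string cached earlier; turn it back into a DTO.
    return result.Select(v => JsonConvert.DeserializeObject<T>(v)).ToList();
}

public void Insert(T t)
{
    var serialised = JsonConvert.SerializeObject(t);
    var key = Key(t.Id);

    // Queue both writes in a MULTI/EXEC transaction so the DTO and its set
    // membership are stored together; the queued commands run on Execute().
    var transaction = database.CreateTransaction();
    transaction.StringSetAsync(key, serialised);
    transaction.SetAddAsync(SetName(), t.Id.ToString("N"));

    var committed = transaction.Execute();
    if (!committed)
    {
        throw new ApplicationException("transaction failed. Now what?");
    }
}
```

- We cache JSON strings.
- Reading them back is a simple Redis query.
- `GetAll` returns the DTOs we'd previously persisted.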
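The storage layout on this slide (one string key per DTO holding its JSON, plus a set of ids that the query enumerates) can be illustrated without a Redis server. This is a hedged sketch, not the talk's implementation: an in-memory dictionary and set stand in for Redis, a lock stands in for `MULTI`/`EXEC`, and `System.Text.Json` replaces Json.NET. `InMemoryReadModelCache`, `IHasId` and `ProductDto` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

// Hypothetical constraint: every cached DTO exposes a Guid Id.
public interface IHasId { Guid Id { get; } }

// In-memory stand-in for the Redis layout (not StackExchange.Redis).
public sealed class InMemoryReadModelCache<T> where T : IHasId
{
    private readonly string instanceName;
    private readonly Dictionary<string, string> strings = new Dictionary<string, string>();
    private readonly HashSet<string> ids = new HashSet<string>();
    private readonly object gate = new object();

    public InMemoryReadModelCache(string instanceName) => this.instanceName = instanceName;

    // Same key shape assumed for the slide's Key(): instance prefix + "N"-formatted id.
    private string Key(Guid id) => instanceName + id.ToString("N");

    public void Insert(T t)
    {
        var serialised = JsonSerializer.Serialize(t);
        // The lock plays the role of MULTI/EXEC: both writes become visible together.
        lock (gate)
        {
            strings[Key(t.Id)] = serialised;
            ids.Add(t.Id.ToString("N"));
        }
    }

    public IReadOnlyList<T> GetAll()
    {
        lock (gate)
        {
            // Like SORT ... BY nosort GET <instance>*: substitute each set member
            // into the key pattern, fetch the stored JSON and deserialize it.
            return ids.Select(id => JsonSerializer.Deserialize<T>(strings[instanceName + id]))
                      .ToList();
        }
    }
}

public sealed class ProductDto : IHasId
{
    public Guid Id { get; set; }
    public string Name { get; set; }
}
```

Storing pre-serialized DTOs keeps the read side trivial: a query does no joins or mapping, it simply returns what the projection wrote. Re-inserting a DTO with the same id overwrites its JSON, and the set prevents duplicate entries.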