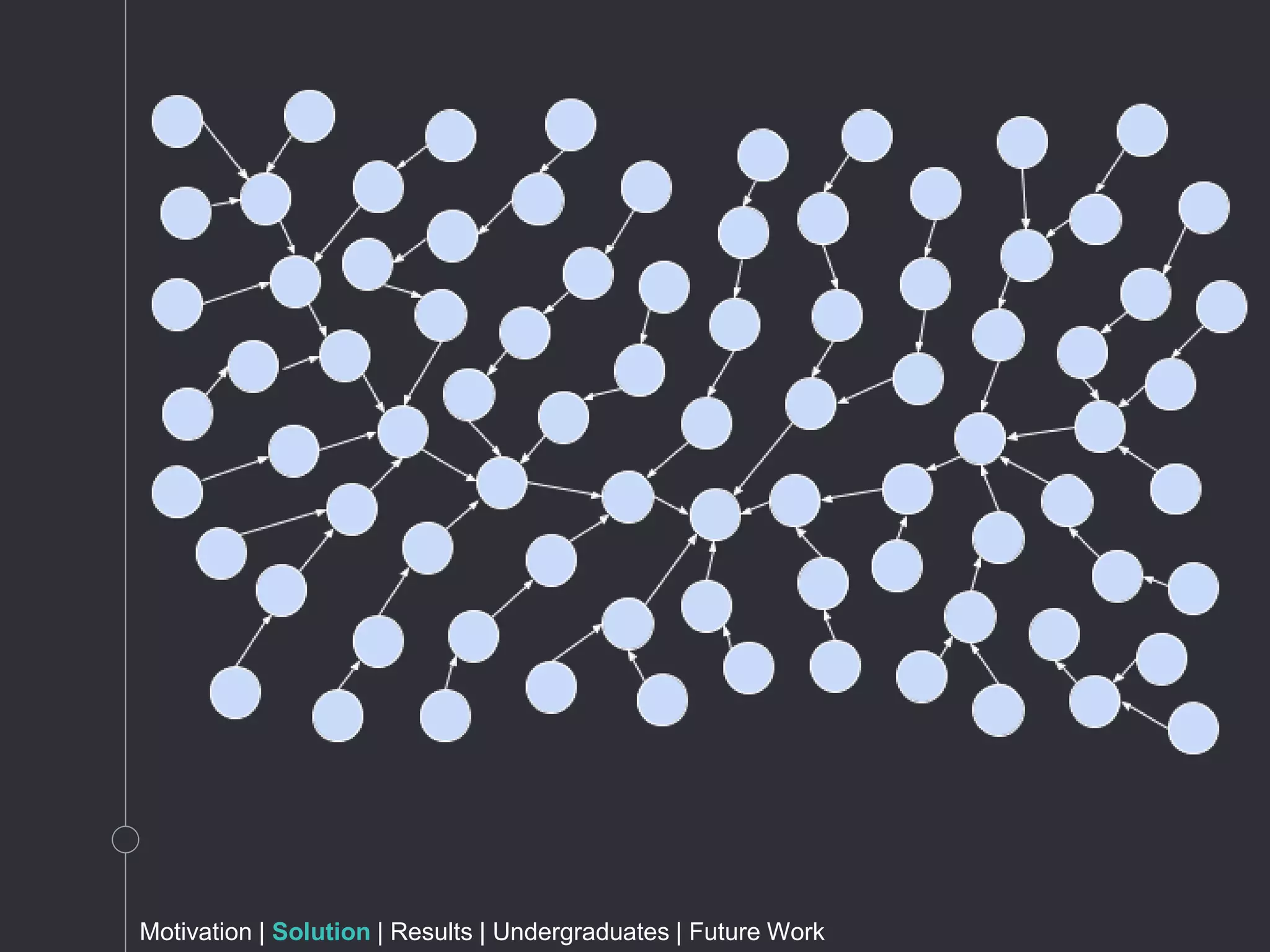

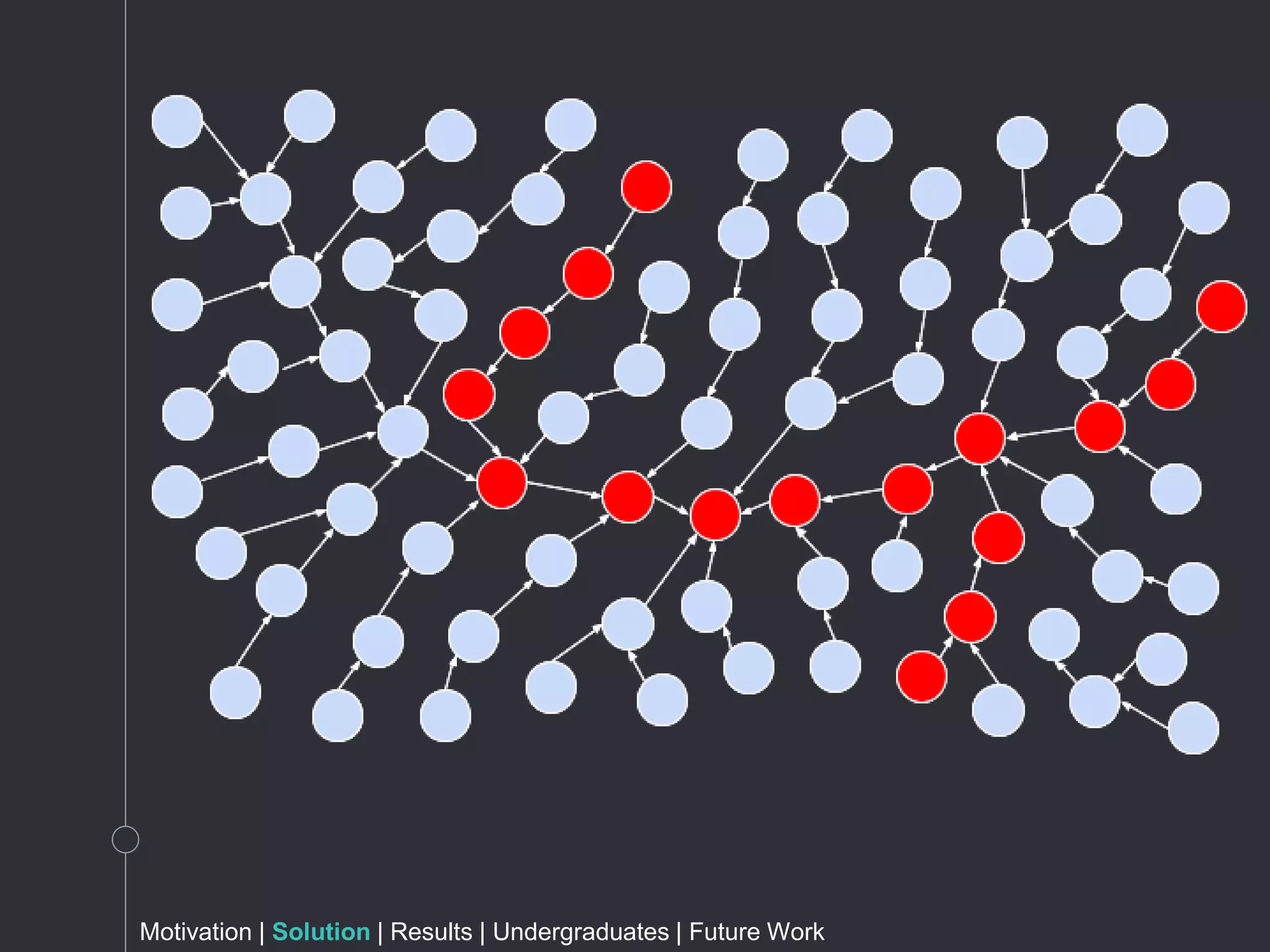

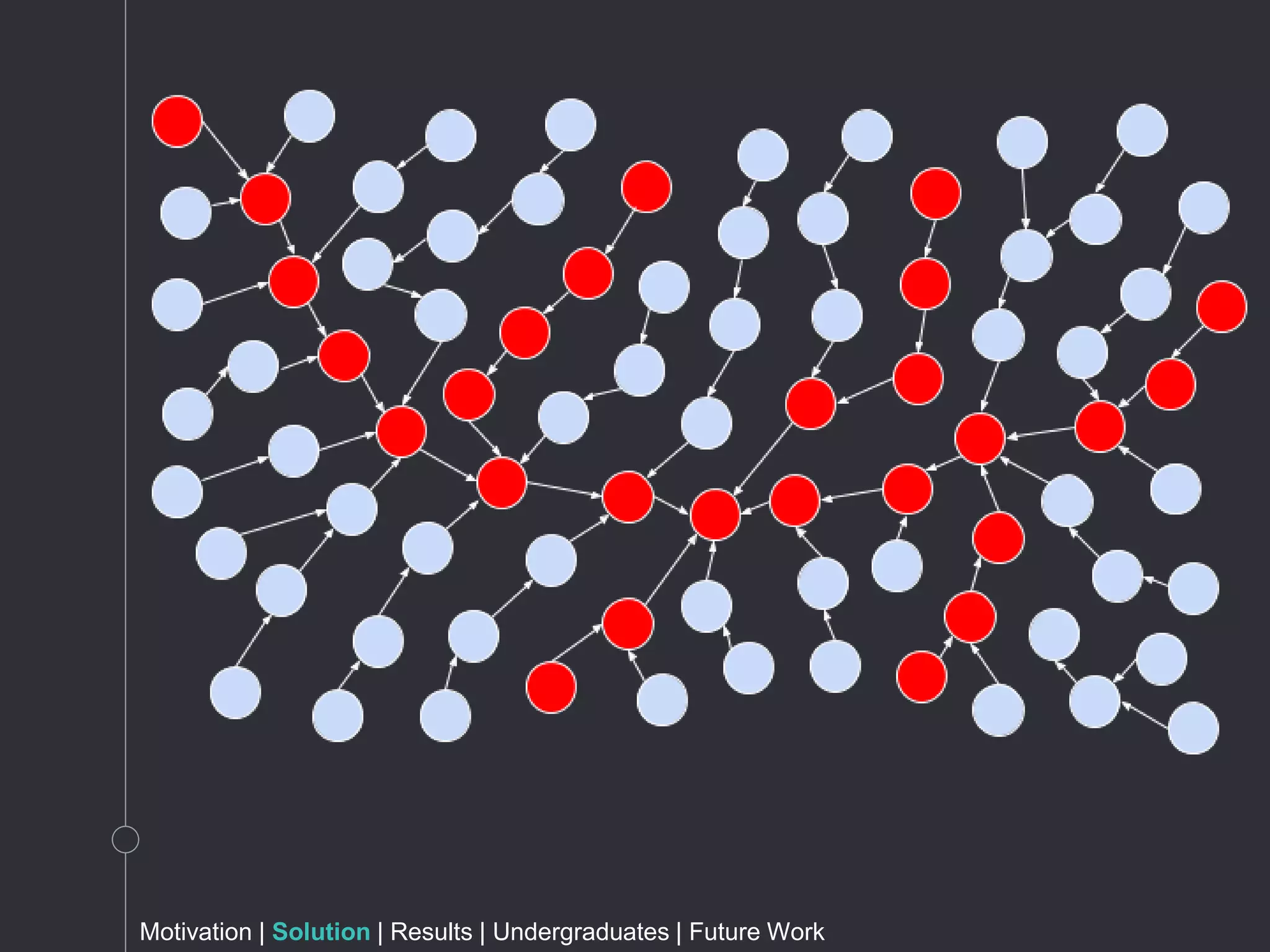

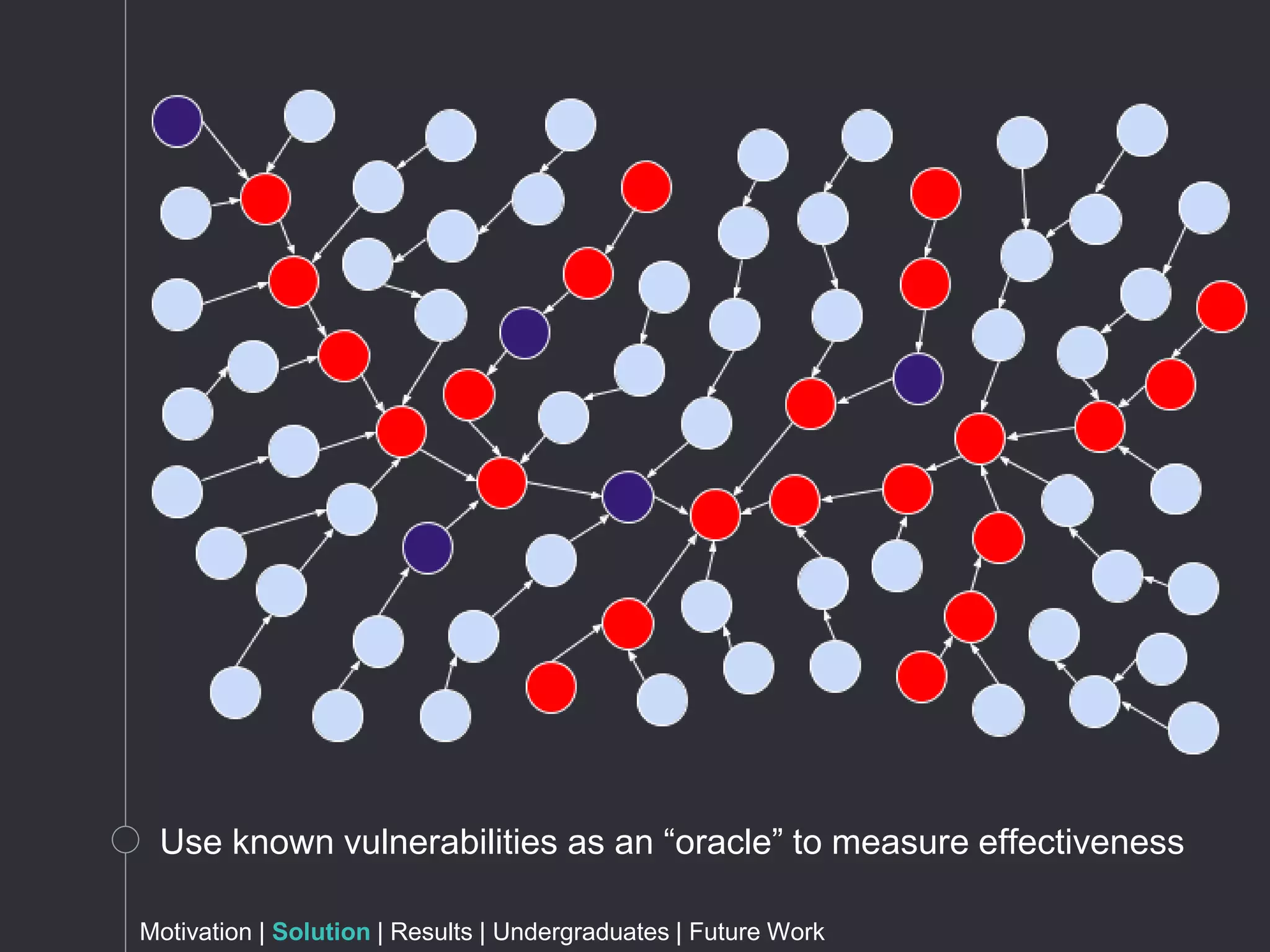

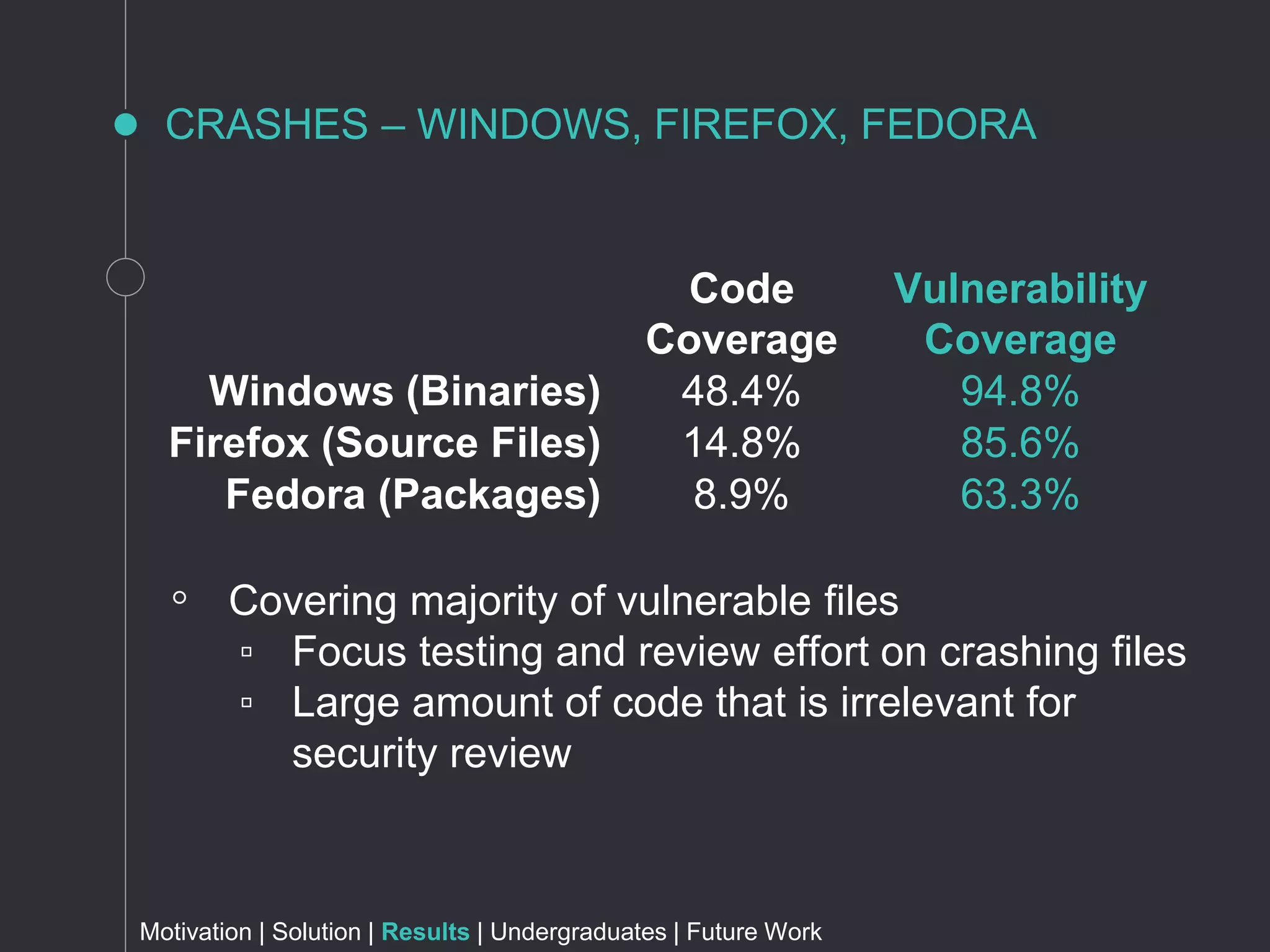

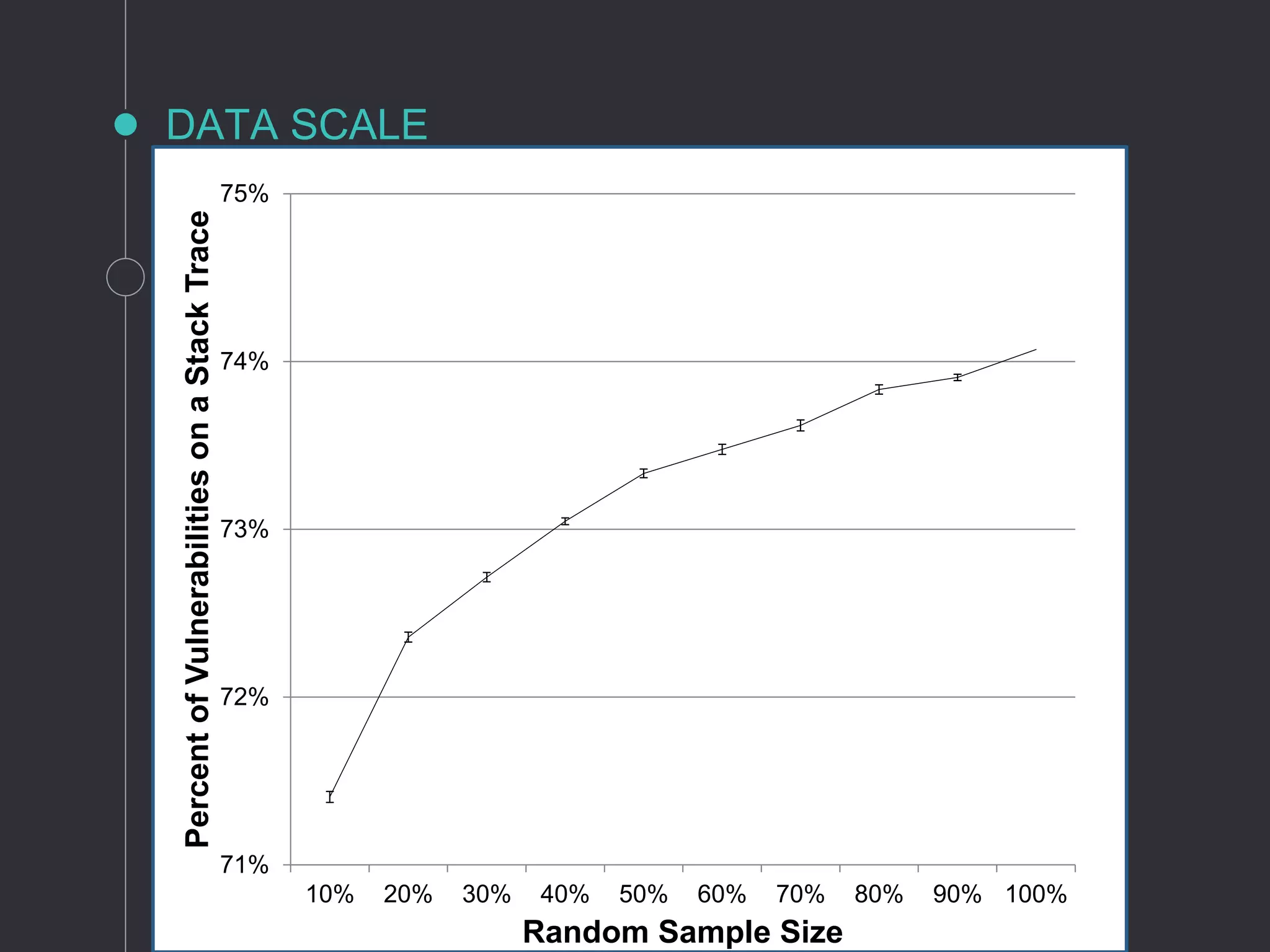

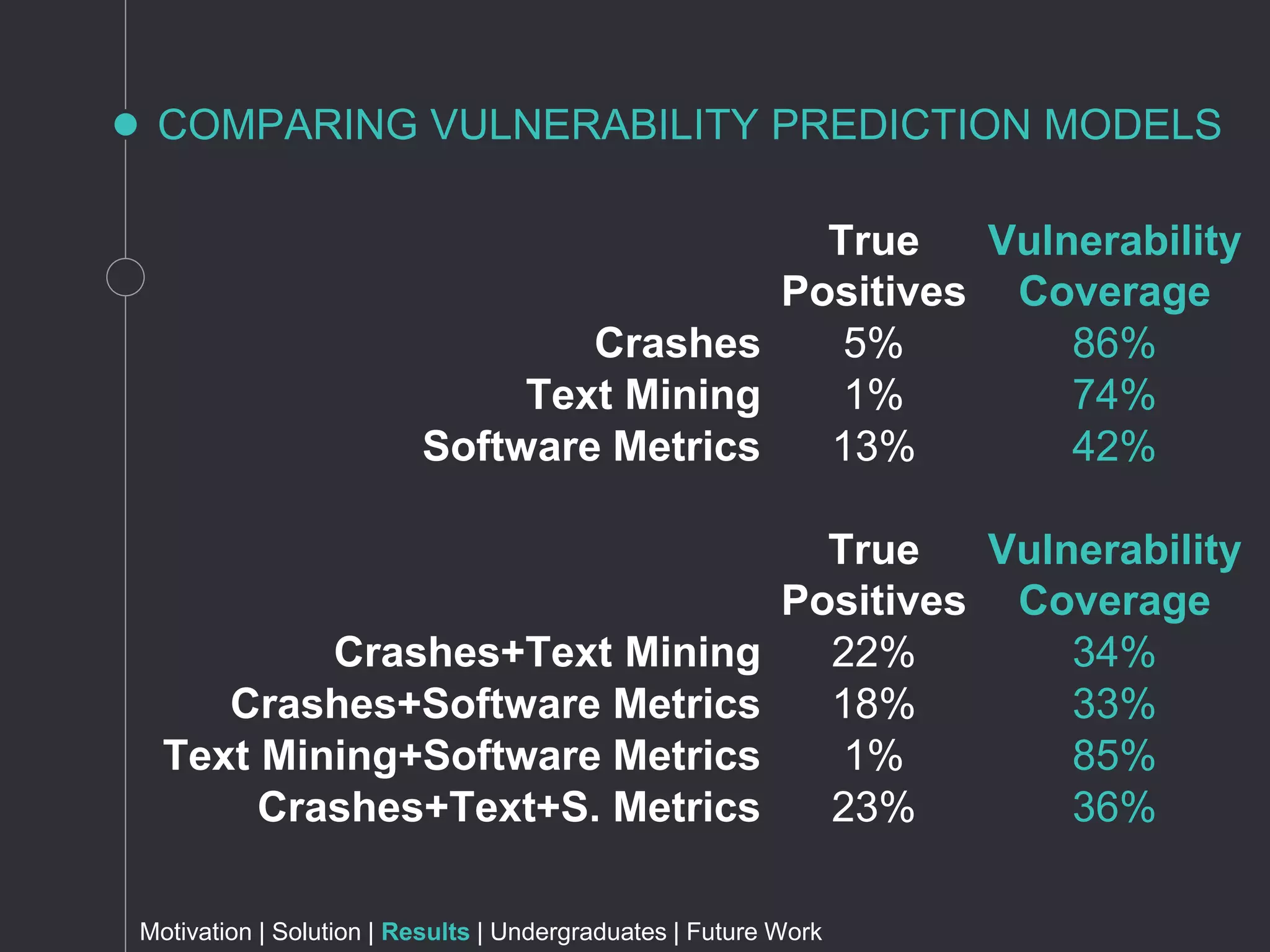

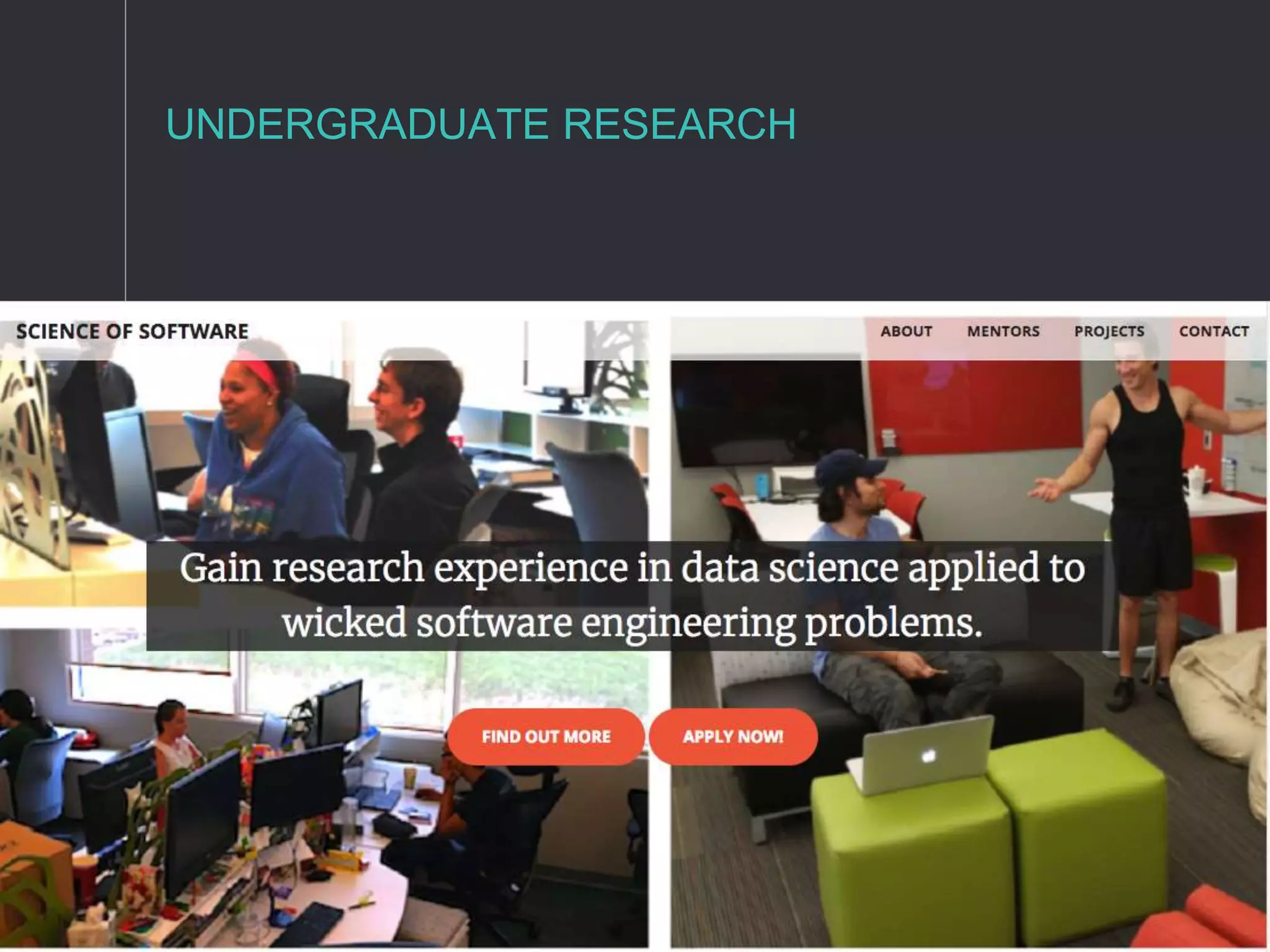

This talk presents metrics for prioritizing security effort. It proposes using the attack surface, meaning all paths for data and commands in software, to identify the code most likely to contain vulnerabilities. The approach analyzes stack traces from crash dumps to determine which code is involved in crashes, and treats that code as the active part of the attack surface. Analysis of Windows, Firefox, and Fedora crashes found that code appearing in stack traces covered the majority of known vulnerabilities. Follow-up research with undergraduates classified the types of vulnerabilities covered and visualized the data. The approach was found to outperform alternative models based on software metrics or text mining, whether used alone or in combination.
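The stack-trace idea can be sketched in a few lines of C. This is a minimal illustration, not the tooling used in the talk: the function names `on_attack_surface` and `vulnerability_coverage`, the file-level granularity, and the flat arrays of file names are all assumptions made for the example.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical helper: a file is treated as "on the attack surface" if it
   appears in at least one crash stack-trace frame. `frames` holds the file
   name of every frame seen across all crashes; duplicates are allowed. */
bool on_attack_surface(const char *file,
                       const char *const frames[], size_t n_frames)
{
    assert(file != NULL && (frames != NULL || n_frames == 0));
    for (size_t i = 0; i < n_frames; i++) {
        if (strcmp(frames[i], file) == 0) {
            return true;
        }
    }
    return false;
}

/* Hypothetical metric: the fraction of known-vulnerable files that also
   appear in crash frames. Returns 0.0 when there are no vulnerable files. */
double vulnerability_coverage(const char *const vulnerable[], size_t n_vuln,
                              const char *const frames[], size_t n_frames)
{
    if (n_vuln == 0) {
        return 0.0;
    }
    size_t covered = 0;
    for (size_t i = 0; i < n_vuln; i++) {
        if (on_attack_surface(vulnerable[i], frames, n_frames)) {
            covered++;
        }
    }
    return (double)covered / (double)n_vuln;
}
```

For instance, with made-up crash frames pointing at `net/parser.c` and `ui/render.c`, and made-up known-vulnerable files `net/parser.c` and `core/alloc.c`, `vulnerability_coverage` returns 0.5, because one of the two vulnerable files appears in a crash. A linear scan keeps the sketch short; a real data set of this size would call for a hash set.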

![[2] https://arstechnica.com/information-technology/2017/02/microsoft-hosts-the-windows-source-in-a-monstrous-300gb-git-repository/

Windows: 300GB repository, millions of source code files

Motivation | Solution | Results | Undergraduates | Future Work](https://image.slidesharecdn.com/finaljobtalk-180227040336/75/Metrics-for-Security-Effort-Prioritization-9-2048.jpg)

![RELATED WORK

Data in this talk:

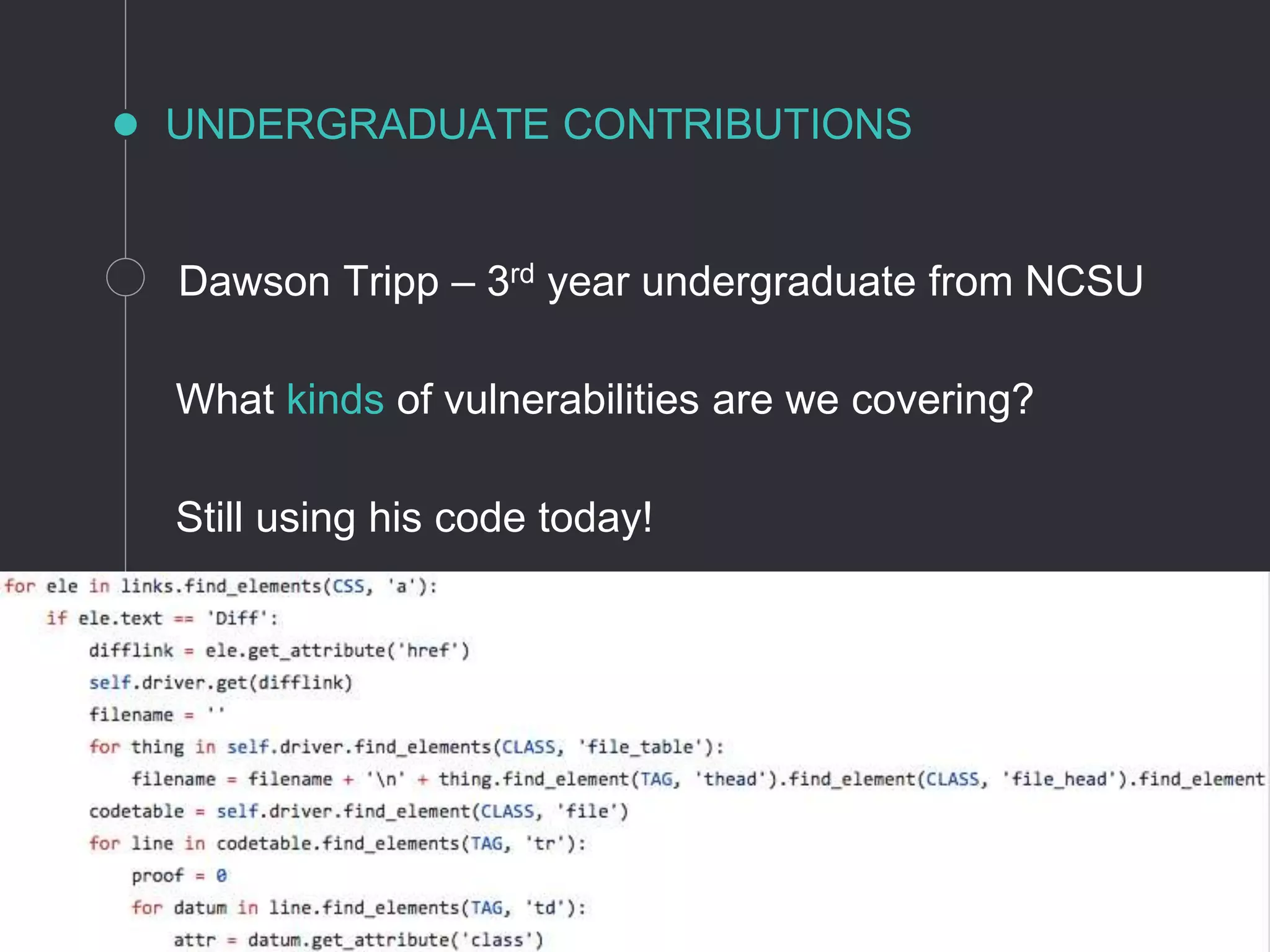

In Submission: [SECDEV ’18] Chris Theisen, Hyunwoo Song, Dawson Tripp, Laurie Williams, “What Are We Missing? Vulnerability Coverage by Type”

In Submission: [FSE ’18] Chris Theisen, Hyunwoo Song, Dalisha Rodriguez, Laurie Williams, “Better Together: Comparing Vulnerability Prediction Models”

[ICSE - SEIP ’17] Chris Theisen, Kim Herzig, Laurie Williams, “Risk-Based Attack Surface Approximation: How Much Data is Enough?”

[ICSE - SEIP ’15] Chris Theisen, Kim Herzig, Pat Morrison, Brendan Murphy, and Laurie Williams, “Approximating Attack Surfaces with Stack Traces”, in Companion Proceedings of the 37th International Conference on Software Engineering

Other works:

Revising: [IST] Chris Theisen, Nuthan Munaiah, Mahran Al-Zyoud, Jeffrey C. Carver, Laurie Williams, “Attack Surface Definitions”

[HotSoS ’17] Chris Theisen and Laurie Williams, “Advanced Metrics for Risk-Based Attack Surface Approximation”, Proceedings of the Symposium and Bootcamp on the Science of Security.

[FSE - SRC ’15 - 1st Place] Chris Theisen, “Automated Attack Surface Approximation”, in the 23rd ACM SIGSOFT International Symposium on the Foundations of Software Engineering - Student Research Competition, 2015

[SRC Grand Finals ’15 - 3rd Place] Chris Theisen, “Automated Attack Surface Approximation”, in the 23rd ACM SIGSOFT International Symposium on the Foundations of Software Engineering - Student Research Competition, 2015](https://image.slidesharecdn.com/finaljobtalk-180227040336/75/Metrics-for-Security-Effort-Prioritization-37-2048.jpg)

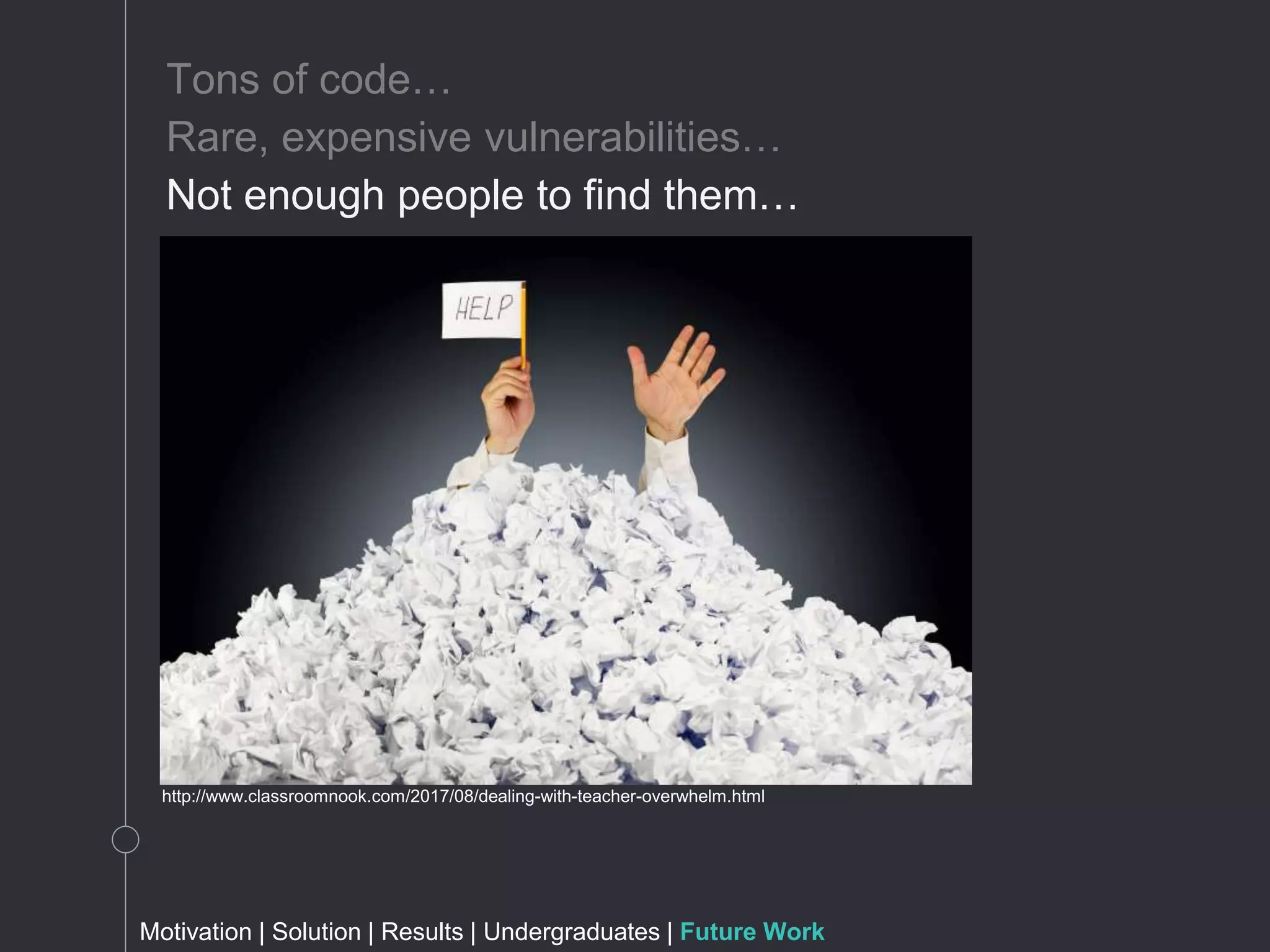

![IDENTIFYING VULNERABLE CODE

#include <stdio.h>

int main(int argc, char **argv)
{
    char buf[8]; // buffer for eight characters
    gets(buf); // read from stdin (sensitive!)
    printf("%s\n", buf); // print out data in buf
    return 0; // 0 as return value
}

https://www.owasp.org/index.php/Buffer_overflow_attack

](https://image.slidesharecdn.com/finaljobtalk-180227040336/75/Metrics-for-Security-Effort-Prioritization-38-2048.jpg)
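The slide's example overflows because `gets` has no way to know that `buf` holds only eight bytes; the function was removed from the C standard in C11 for that reason. As a contrast, here is a minimal sketch of a bounded read built on the standard `fgets`. The helper name `read_line` and its return convention are assumptions for illustration and do not come from the talk.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: reads at most size - 1 characters of one line from
   `in` into `buf` and always NUL-terminates it. Returns 1 on success and
   0 on end-of-file or read error. Any part of the line that does not fit
   stays in the stream for the next call. The cast to int assumes `size`
   is small, as it is for a stack buffer. */
int read_line(FILE *in, char *buf, size_t size)
{
    assert(in != NULL && buf != NULL && size > 0);
    if (fgets(buf, (int)size, in) == NULL) {
        return 0;
    }
    buf[strcspn(buf, "\n")] = '\0'; /* drop the trailing newline, if any */
    return 1;
}
```

With `char buf[8]`, a call such as `read_line(stdin, buf, sizeof buf)` stores at most seven characters plus the terminator, so a 16-character input line is truncated rather than written past the end of the buffer.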