This document discusses various mathematical tools used in digital image processing (DIP), including array versus matrix operations, linear versus nonlinear operations, arithmetic operations, set and logical operations, spatial operations, vector and matrix operations, and image transforms. Key points include:

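- Array operations are carried out pixel by pixel (the array product multiplies corresponding elements), while matrix operations follow the rules of matrix algebra, so each output entry combines a row of one operand with a column of the other.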

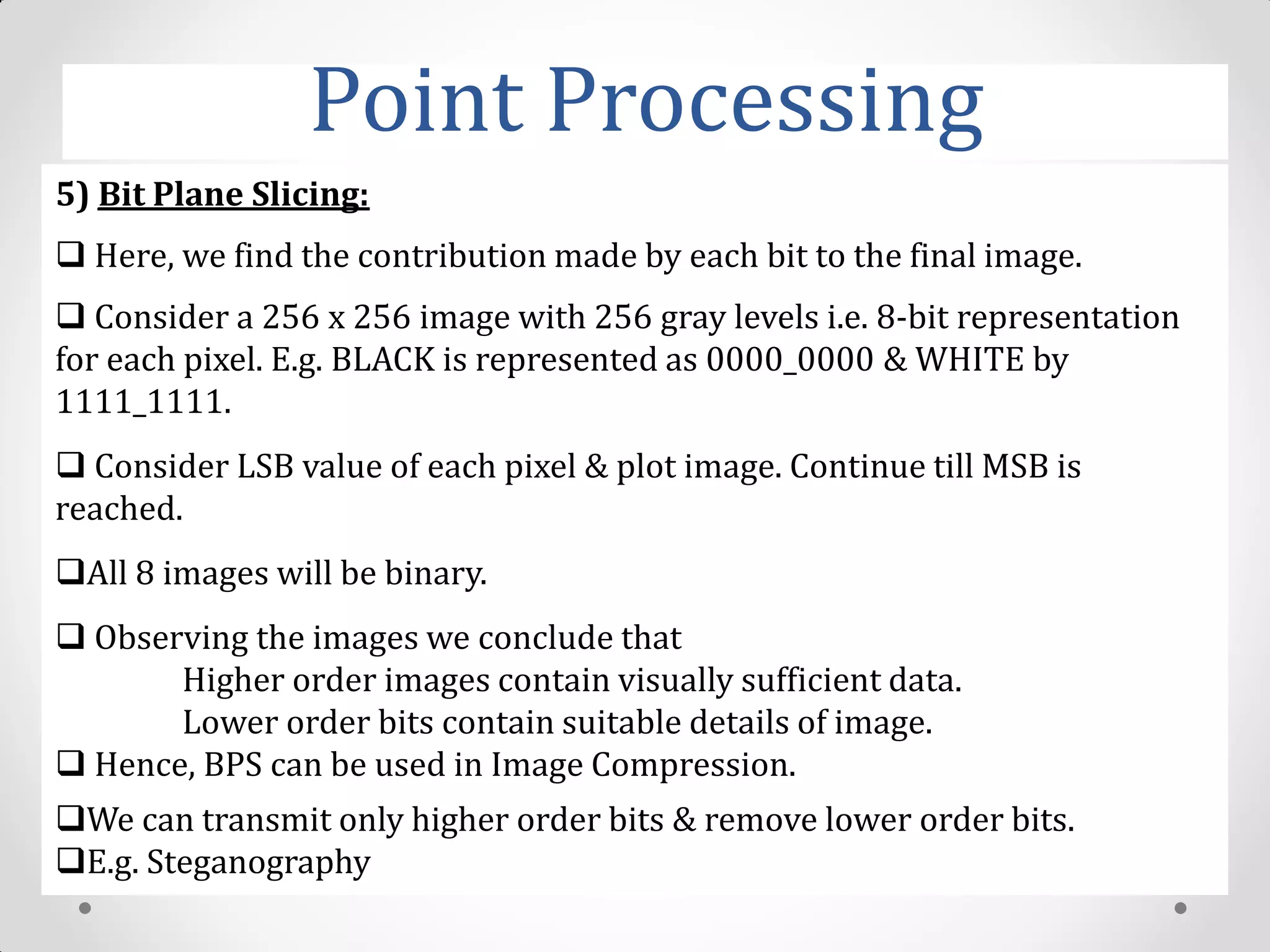

- Linear operators satisfy both homogeneity (scaling) and additivity, while nonlinear operators such as max do not.

- Spatial operations act directly on the pixels of an image and fall into three groups: single-pixel operations, neighborhood operations, and geometric transformations of pixel locations and intensities.

- Images can be represented as vectors and transformed using matrix operations.

- Common transforms such as the Fourier transform use separable, symmetric kernels and represent an image in terms of its frequency components.
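The difference between array and matrix operations in the first point can be illustrated with a minimal NumPy sketch. The two 2×2 arrays and the variable names are assumptions chosen for illustration; they do not come from the slides.

```python
import numpy as np

# Two small 2x2 "images" (arbitrary illustrative values, not from the slides).
a = np.array([[1, 2],
              [3, 4]])
b = np.array([[5, 6],
              [7, 8]])

# Array (elementwise) product: each output pixel depends only on the
# pixels at the same location in a and b.
array_product = a * b

# Matrix product: each output entry is the sum of products of a row of a
# with a column of b.
matrix_product = a @ b

print(array_product)   # [[ 5 12] [21 32]]
print(matrix_product)  # [[19 22] [43 50]]
```

In NumPy, `*` is the array product and `@` is the matrix product, so the same pair of inputs gives two different results.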

Linear versus Nonlinear Operations

- A general operator, $H$, produces an output image, $g(x, y)$, for a given input image, $f(x, y)$:

  $$H[f(x, y)] = g(x, y)$$

- $H$ is said to be a linear operator if

  $$
  \begin{aligned}
  H[a_i f_i(x, y) + a_j f_j(x, y)] &= a_i H[f_i(x, y)] + a_j H[f_j(x, y)] \\
  &= a_i g_i(x, y) + a_j g_j(x, y) \qquad \text{Eq. (i)}
  \end{aligned}
  $$

  where $a_i$, $a_j$ are arbitrary constants and $f_i(x, y)$, $f_j(x, y)$ are images of the same size.

![Linear versus Nonlinear Operations](https://image.slidesharecdn.com/mathematicaltoolsindip-210417050321/75/Mathematical-tools-in-dip-4-2048.jpg)

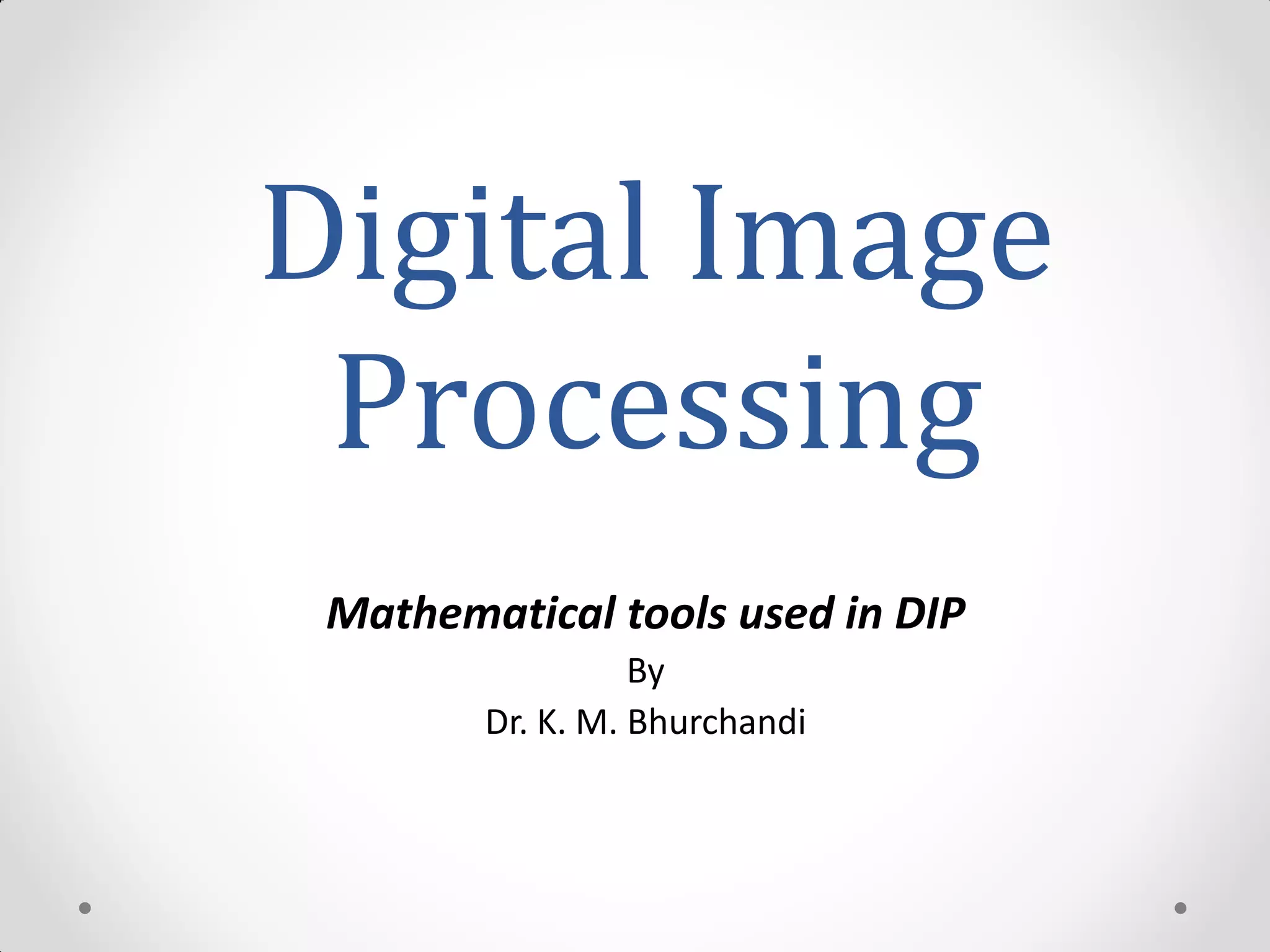

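- Suppose $H$ is the sum operator, $\sum$. Then

  $$
  \begin{aligned}
  \sum [a_i f_i(x, y) + a_j f_j(x, y)] &= \sum a_i f_i(x, y) + \sum a_j f_j(x, y) \\
  &= a_i \sum f_i(x, y) + a_j \sum f_j(x, y) \\
  &= a_i g_i(x, y) + a_j g_j(x, y)
  \end{aligned}
  $$

  Thus, the sum operator is linear.

![Suppose H is the sum operator](https://image.slidesharecdn.com/mathematicaltoolsindip-210417050321/75/Mathematical-tools-in-dip-5-2048.jpg)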
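The linearity condition in Eq. (i) can also be checked numerically. The sketch below evaluates both sides of the condition for the sum operator and for the max operator. The helper `check_linearity`, the two test images, and the constants are hypothetical choices made for illustration. Agreement on one pair of images does not prove linearity, but a single disagreement is enough to show that an operator is nonlinear.

```python
import numpy as np

def check_linearity(H, f_i, f_j, a_i, a_j):
    """Return (lhs, rhs) of Eq. (i) for operator H on one pair of images.

    Hypothetical helper, not part of any library.
    """
    lhs = H(a_i * f_i + a_j * f_j)        # H applied to the combined image
    rhs = a_i * H(f_i) + a_j * H(f_j)     # combination of the separate outputs
    return lhs, rhs

# Illustrative test images and constants (assumed values).
f_i = np.array([[0, 2],
                [2, 3]])
f_j = np.array([[6, 5],
                [4, 7]])
a_i, a_j = 1, -1

# Sum operator: both sides agree, consistent with linearity.
sum_lhs, sum_rhs = check_linearity(np.sum, f_i, f_j, a_i, a_j)
print(sum_lhs, sum_rhs)  # -15 -15

# Max operator: the two sides differ, so max is not linear.
max_lhs, max_rhs = check_linearity(np.max, f_i, f_j, a_i, a_j)
print(max_lhs, max_rhs)  # -2 -4
```

For the max operator, the left side is the maximum of the difference image, which is -2, while the right side is 3 - 7 = -4, so Eq. (i) fails.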