This document provides an overview of digital image processing and computer vision. It discusses:

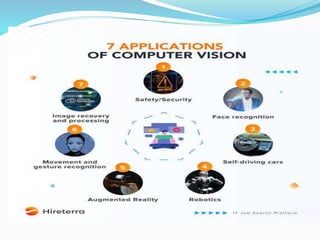

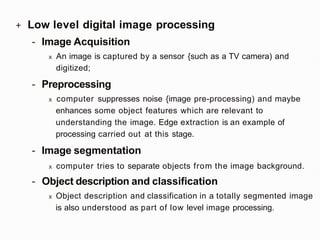

1. Low-level image processing techniques like pre-processing, segmentation, and object description that use little domain knowledge.

2. High-level image understanding techniques based on knowledge, goals, and plans that aim to imitate human cognition.

3. Fundamental concepts in digital image processing including image functions, sampling, quantization, and properties. Mathematical tools from linear systems theory, transforms, and statistics are used.

![Slide: sampling a continuous image function](https://image.slidesharecdn.com/chapter1cv-240318044322-293de291/85/chAPTER1CV-pptx-is-abouter-computer-vision-in-artificial-intelligence-34-320.jpg)

+ A continuous image function f(x,y) can be sampled using a discrete grid of sampling points in the plane.
+ The image is sampled at points x = jΔx, y = kΔy.
+ Two neighboring sampling points are separated by distance Δx along the x axis and Δy along the y axis. Distances Δx and Δy are called the sampling interval, and the matrix of samples constitutes the discrete image.
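The sampling grid described above can be sketched in C. This is a minimal illustration under stated assumptions, not code from the slides: `f_continuous` is a hypothetical stand-in for the continuous image function f(x,y), `sample_image` is an illustrative name, and the row-major storage layout is a choice made here for convenience.

```c
#include <stddef.h>

/* Hypothetical continuous image function f(x, y), chosen only so the
   sampled values are easy to check by hand. */
static double f_continuous(double x, double y)
{
    return x + 10.0 * y;
}

/* Sample f at the grid points x = j*dx, y = k*dy for
   j = 0..cols-1 and k = 0..rows-1. dx and dy are the sampling
   intervals. The samples are written row-major into out, which must
   hold rows*cols values; this matrix is the discrete image. */
void sample_image(double dx, double dy, size_t rows, size_t cols, double *out)
{
    for (size_t k = 0; k < rows; k++)
        for (size_t j = 0; j < cols; j++)
            out[k * cols + j] = f_continuous((double)j * dx, (double)k * dy);
}
```

Calling `sample_image(0.5, 0.25, 2, 3, out)` fills a 2×3 matrix whose entry for j = 2, k = 1 is f(1.0, 0.25). Smaller sampling intervals give a denser grid and therefore a larger matrix for the same region of the plane.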

![Slide: regions and contiguity paradoxes of the square grid](https://image.slidesharecdn.com/chapter1cv-240318044322-293de291/85/chAPTER1CV-pptx-is-abouter-computer-vision-in-artificial-intelligence-51-320.jpg)

+ A region is a contiguous set.
+ Contiguity paradoxes of the square grid: see Fig. 2.7 and Fig. 2.8.

Figure 2.7: Digital line. Figure 2.8: Closed curve paradox.
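One way to see why contiguity on a square grid is delicate is to count regions under the two usual neighborhood definitions, 4-neighbors (edge-adjacent pixels) and 8-neighbors (edge- or corner-adjacent pixels). The sketch below is an illustration written for this summary, not code from the slides; the 4×4 image size and the name `count_regions` are assumptions.

```c
#define W 4
#define H 4

/* Count connected regions of nonzero pixels in an H x W binary image.
   connectivity must be 4 (edge neighbors only) or 8 (edge and corner
   neighbors). Uses a flood fill with an explicit stack. */
int count_regions(unsigned char img[H][W], int connectivity)
{
    /* The first four offsets are the 4-neighbors, the rest the diagonals. */
    static const int dx[8] = { 1, -1, 0, 0, 1, 1, -1, -1 };
    static const int dy[8] = { 0, 0, 1, -1, 1, -1, 1, -1 };
    unsigned char seen[H][W] = {{0}};
    int stack[H * W][2];   /* each pixel is pushed at most once */
    int regions = 0;

    for (int y = 0; y < H; y++) {
        for (int x = 0; x < W; x++) {
            if (!img[y][x] || seen[y][x])
                continue;
            regions++;                 /* found an unvisited region */
            int top = 0;
            stack[top][0] = x;
            stack[top][1] = y;
            top++;
            seen[y][x] = 1;
            while (top > 0) {
                top--;
                int cx = stack[top][0], cy = stack[top][1];
                for (int n = 0; n < connectivity; n++) {
                    int nx = cx + dx[n], ny = cy + dy[n];
                    if (nx < 0 || nx >= W || ny < 0 || ny >= H)
                        continue;
                    if (!img[ny][nx] || seen[ny][nx])
                        continue;
                    seen[ny][nx] = 1;
                    stack[top][0] = nx;
                    stack[top][1] = ny;
                    top++;
                }
            }
        }
    }
    return regions;
}
```

For a diagonal digital line (pixels at (0,0), (1,1), (2,2), (3,3)), this counts one region with 8-connectivity but four separate regions with 4-connectivity, so whether the line is contiguous depends on which neighborhood is chosen.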

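![Slide: computing the brightness histogram](https://image.slidesharecdn.com/chapter1cv-240318044322-293de291/85/chAPTER1CV-pptx-is-abouter-computer-vision-in-artificial-intelligence-58-320.jpg)

1. Assign zero values to all elements of the array h.

2. For all pixels (x,y) of the image f, increment h(f(x,y)) by one.

```c
/* f is an M x N image with pixel values in 0..255 */
int h[256] = {0};                 /* step 1: zero every histogram bin */
for (int i = 0; i < M; i++)       /* step 2: visit every pixel ... */
    for (int j = 0; j < N; j++)
        h[f[i][j]]++;             /* ... and count its gray level */
```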
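The two steps above can also be wrapped in a self-contained function. This is a sketch under assumptions not stated on the slide: the image is 8-bit (256 gray levels), it is stored row-major in a flat array, and the names `compute_histogram` and `LEVELS` are illustrative.

```c
#include <stddef.h>

#define LEVELS 256   /* assumed number of gray levels (8-bit image) */

/* Compute the brightness histogram of an M x N image f stored
   row-major. On return, h[g] is the number of pixels with value g. */
void compute_histogram(const unsigned char *f, size_t M, size_t N,
                       unsigned long h[LEVELS])
{
    /* Step 1: assign zero to all elements of h. */
    for (size_t g = 0; g < LEVELS; g++)
        h[g] = 0;

    /* Step 2: for every pixel, increment the bin of its gray level. */
    for (size_t i = 0; i < M; i++)
        for (size_t j = 0; j < N; j++)
            h[f[i * N + j]]++;
}
```

Because every pixel increments exactly one bin, the bins always sum to M × N, which makes a convenient sanity check when using the function.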