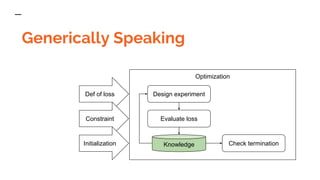

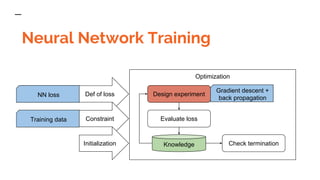

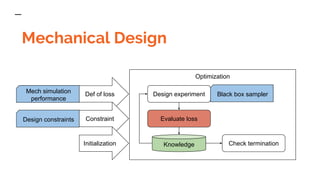

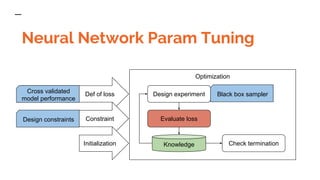

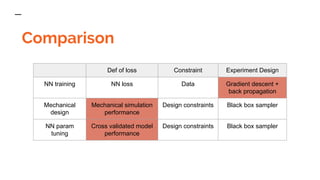

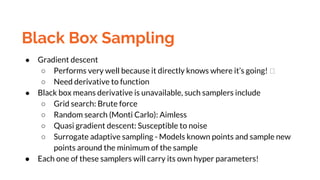

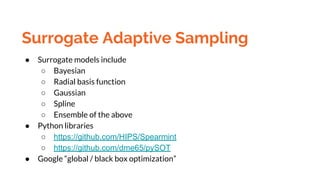

The document contrasts machine learning with traditional optimization: machine learning predicts the behavior of new samples and identifies patterns in data, while traditional optimization selects parameters subject to engineering design constraints. It covers methodologies such as black-box sampling and neural network training, and outlines the importance of evaluating loss functions through simulation. It also mentions Python libraries for global optimization and introduces surrogate adaptive sampling methods.
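
To make the black-box sampling idea concrete, here is a minimal sketch using only the Python standard library. It is not taken from the slides: the names `simulated_loss` and `random_search`, the bounds, and the sample count are all hypothetical, and the quadratic loss stands in for what would normally be an expensive simulation. The sampler treats the loss as opaque, draws parameters uniformly within the design bounds, and keeps the best point seen.

```python
import random


def simulated_loss(x, y):
    # Hypothetical stand-in for an expensive simulation.
    # Its minimum value is 0, reached at (x, y) = (1, -2).
    return (x - 1.0) ** 2 + (y + 2.0) ** 2


def random_search(loss, bounds, n_samples=2000, seed=0):
    # Black-box sampling: the optimizer only sees loss values,
    # never gradients or the internal form of the loss.
    rng = random.Random(seed)
    best_params, best_loss = None, float("inf")
    for _ in range(n_samples):
        # Draw one candidate uniformly inside the design bounds.
        params = [rng.uniform(lo, hi) for lo, hi in bounds]
        value = loss(*params)
        if value < best_loss:
            best_params, best_loss = params, value
    return best_params, best_loss


# Design constraints expressed as simple box bounds (assumed values).
bounds = [(-5.0, 5.0), (-5.0, 5.0)]
best_params, best_loss = random_search(simulated_loss, bounds)
print(best_params, best_loss)
```

Uniform random search is the simplest baseline of this kind. A surrogate adaptive sampling method would replace the uniform draw with candidates chosen from a cheap model fitted to the points evaluated so far, which matters when each simulation is costly.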