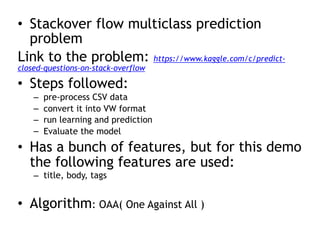

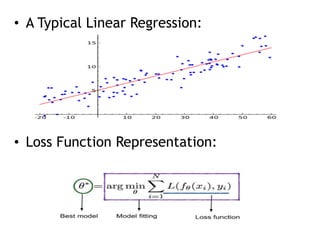

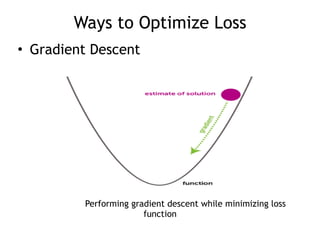

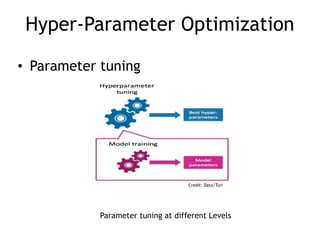

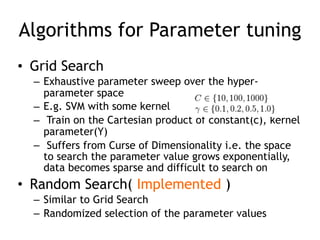

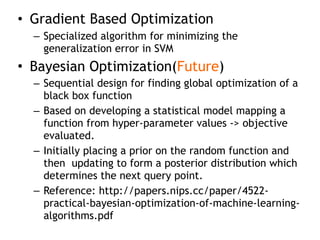

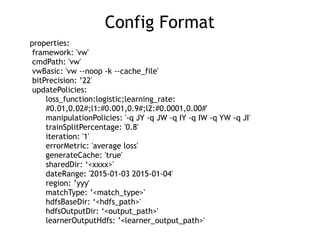

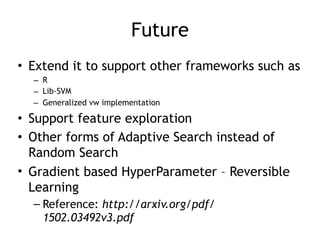

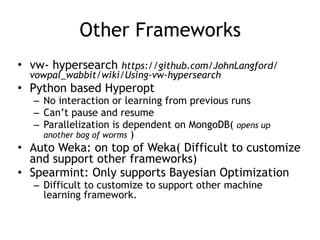

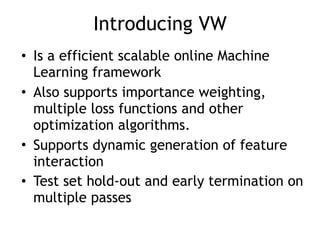

The document discusses machine learning models and hyper-parameter optimization, emphasizing the importance of parameter tuning for improving model accuracy and generalization. It outlines various optimization techniques, including grid search, random search, and Bayesian optimization, while addressing the challenges faced in selecting algorithms and tuning parameters. Additionally, the text introduces Apache Spark for hyper-parameter optimization and provides a demo of the Vowpal Wabbit framework for scalable online learning.

![Apache Spark HPO

spark-submit --driver-memory 8g --executor-memory 8g --num-executors 2 --executor-cores 4 –

class ../modellearner.ExecutionManager --master local[*] model-learner-0.0.1-jar-with-

dependencies.jar --help

Experimental Model Learner.

For usage see below:

-a, --aloha-spec-file <arg> Specify the aloha spec file name with path

-c, --config-file <arg> Specify the config file name with path

-n, --negative-downSampling <arg> Specify negative down-sampling needed as a

percentage (default = 0.0)

-q, --query_data <arg> Specify false if there is no need query for

data(useful if data has been queried earlier)

(default = true)

-s, --seed-value <arg> Specify seed value (default = 0)

-t, --top-n-values <arg> Specify the number for top n loss values (default = 10)

--help Show help message

*Currently supports only vw, can be extended to include others.](https://image.slidesharecdn.com/hpo-170116034910/85/Learning-to-Optimize-12-320.jpg)

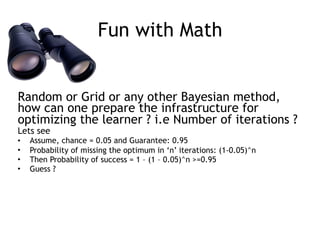

![Demo of vw

• vw file format

[Label] [Importance] [Tag]|Namespace Features |Namespace Features ... |

Namespace Features

• Label: is the real number that we are trying to predict for this example

• Importance: (importance weight) is a non-negative real number indicating

the relative importance of this example over the others.

• Tag: is a string that serves as an identifier for the example

• Namespace: is an identifier of a source of information for the example

• Features: is a sequence of whitespace separated strings, each of which is

optionally followed by a float

• Note: ** vertical bar, colon, space, and newline](https://image.slidesharecdn.com/hpo-170116034910/85/Learning-to-Optimize-20-320.jpg)