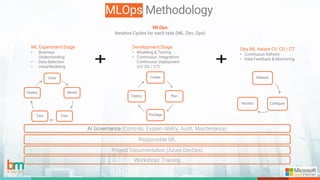

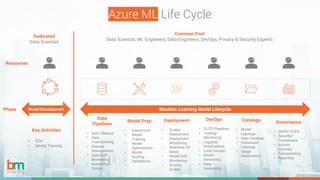

Bizmetric, a Microsoft Gold Partner established in 2011, specializes in MLOps and provides Azure data services through an experienced global team. It has implemented automated machine learning solutions across several industries, with a focus on operational efficiency, continuous delivery, and responsible AI practices. Its MLOps methodology combines governance, model lifecycle management, and advanced analytics to support the deployment and monitoring of data-driven projects.

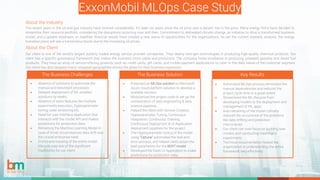

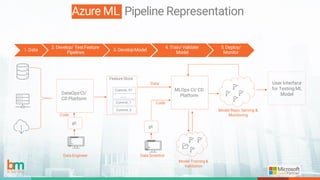

![Azure ML architecture: an Azure DevOps build pipeline (data sanity test, unit test, create ML workspace, create ML compute, publish ML pipeline), an Azure ML retraining pipeline on Azure ML Compute (train model, evaluate model, register model), and an Azure DevOps release pipeline (package model into image, deploy on ACI, test web service, manual release gate, deploy on AKS, test web service) across Staging/QA and Production stages, with AML Model Management, Azure Storage, Azure App Insights monitoring, and data-driven, schedule-driven, and metrics-driven triggers](https://image.slidesharecdn.com/mlops-241108041136-ce810111/85/Machine-Learning-Operations-Cababilities-7-320.jpg)
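
The diagram names three kinds of retraining trigger (data-driven, schedule-driven, metrics-driven) and a train, evaluate, register sequence. The following is a minimal sketch of that decision logic in plain Python, not Bizmetric's implementation and not an Azure SDK example. The names `PipelineState`, `retraining_trigger`, and `should_register`, as well as the 7-day and 0.90 thresholds, are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class PipelineState:
    """Hypothetical snapshot of what a retraining trigger could observe."""
    new_data_available: bool  # data-driven: new files landed in the data location
    last_trained: datetime    # schedule-driven: when the model was last trained
    live_accuracy: float      # metrics-driven: accuracy reported by monitoring


def retraining_trigger(
    state: PipelineState,
    now: datetime,
    max_age: timedelta = timedelta(days=7),  # assumed retraining schedule
    min_accuracy: float = 0.90,              # assumed quality threshold
) -> Optional[str]:
    """Return the name of the trigger that fires, or None if none does."""
    if state.new_data_available:
        return "data"
    if now - state.last_trained >= max_age:
        return "schedule"
    if state.live_accuracy < min_accuracy:
        return "metrics"
    return None


def should_register(candidate_score: float,
                    production_score: Optional[float]) -> bool:
    """Evaluate step: register the candidate only if it beats the model
    currently in production, or if no production model exists yet."""
    return production_score is None or candidate_score > production_score
```

In a real deployment, each of these checks would presumably be backed by a service shown in the diagram (for example, monitoring data for the metrics check), and a positive `should_register` result would lead into the release pipeline with its manual gate.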