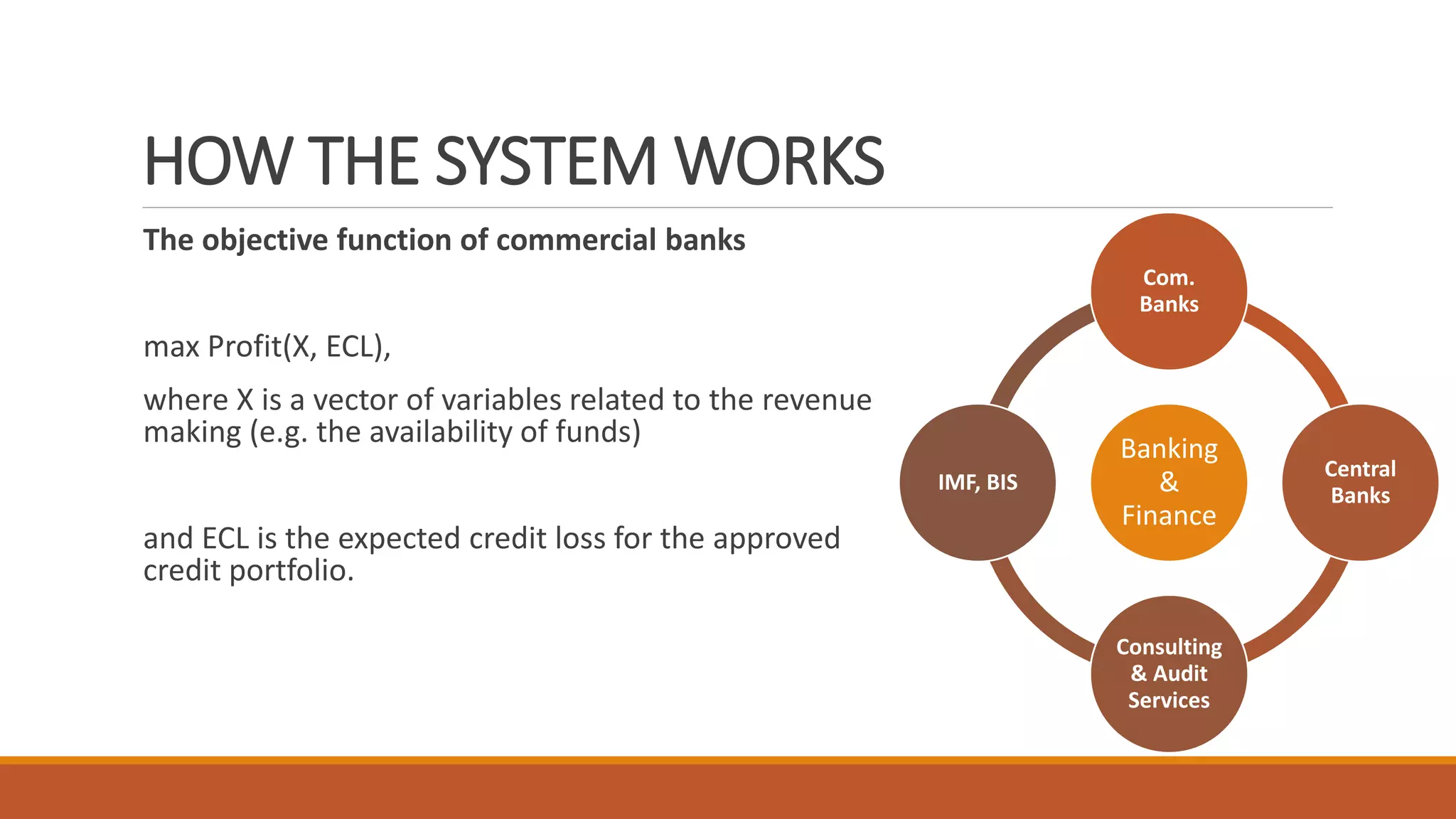

Machine learning algorithms can be applied in several areas of banking and central banking, including:

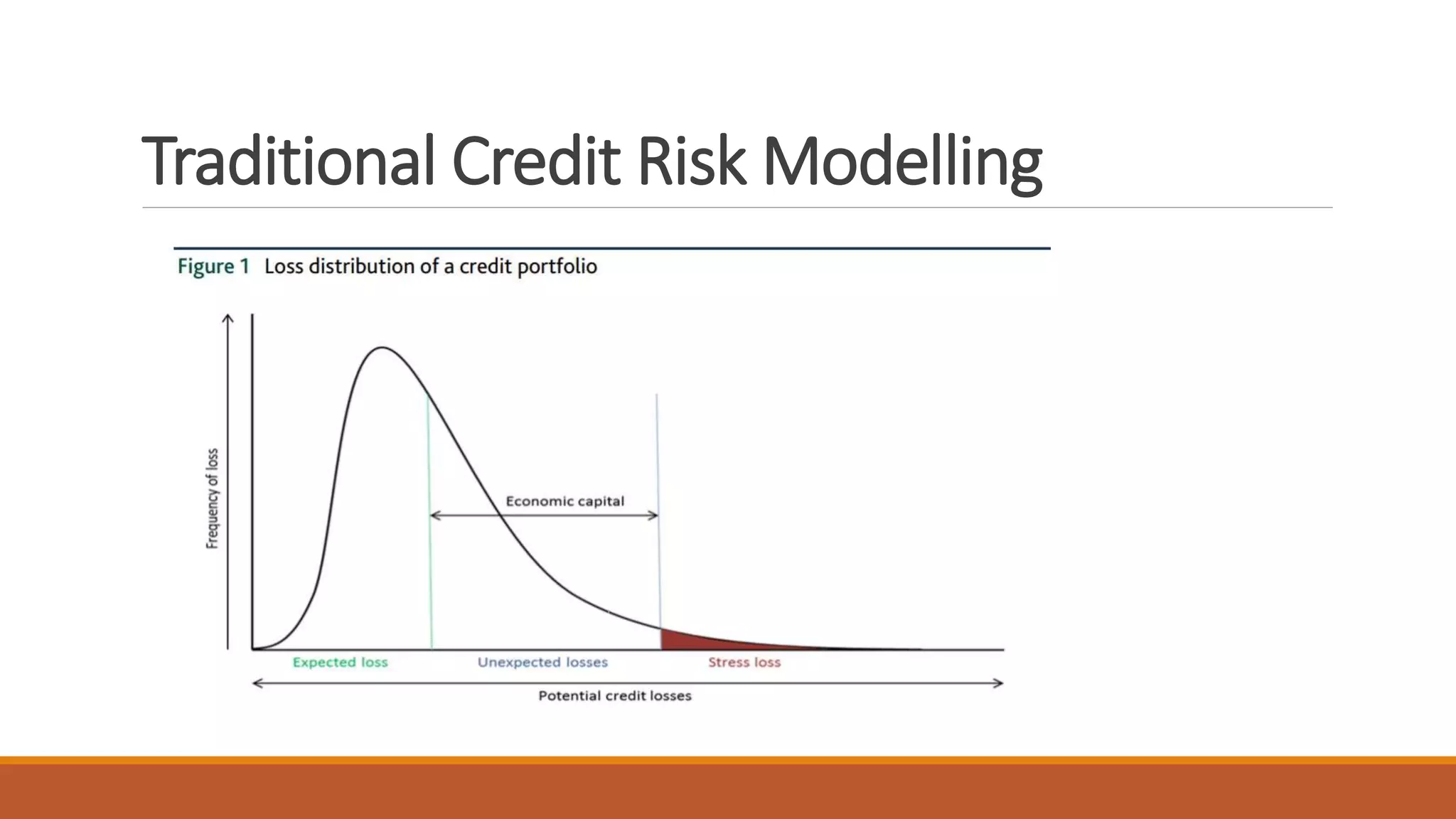

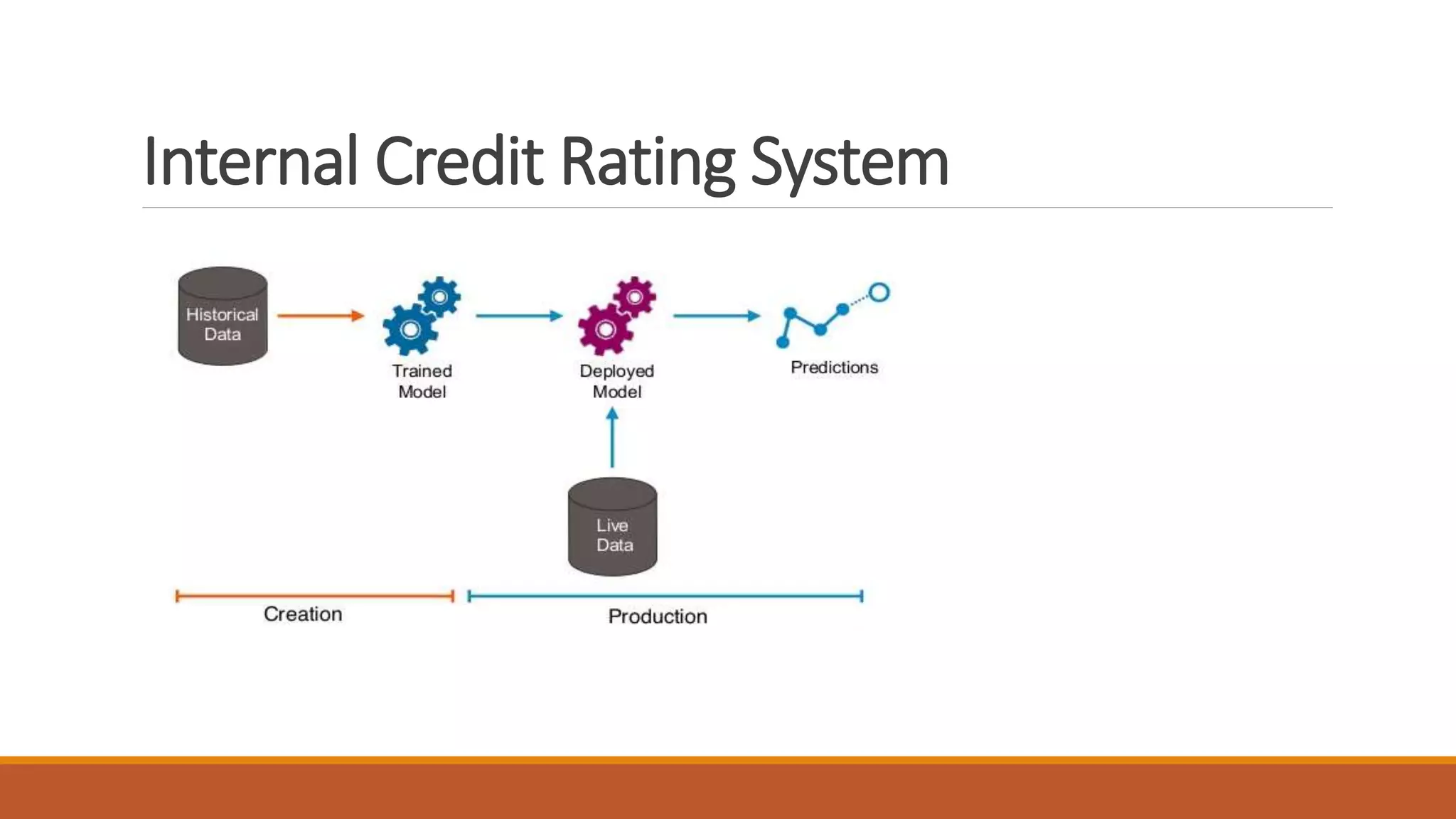

1) Traditional credit risk models can be enhanced with machine learning to predict the probability of default from borrower-level and macroeconomic variables.

2) Central banks can combine credit bureau data with machine learning to monitor credit quality in real time and provide recommendations to commercial banks.

3) Machine learning methods such as random forests and neural networks can outperform traditional time series models in forecasting macroeconomic variables like inflation.

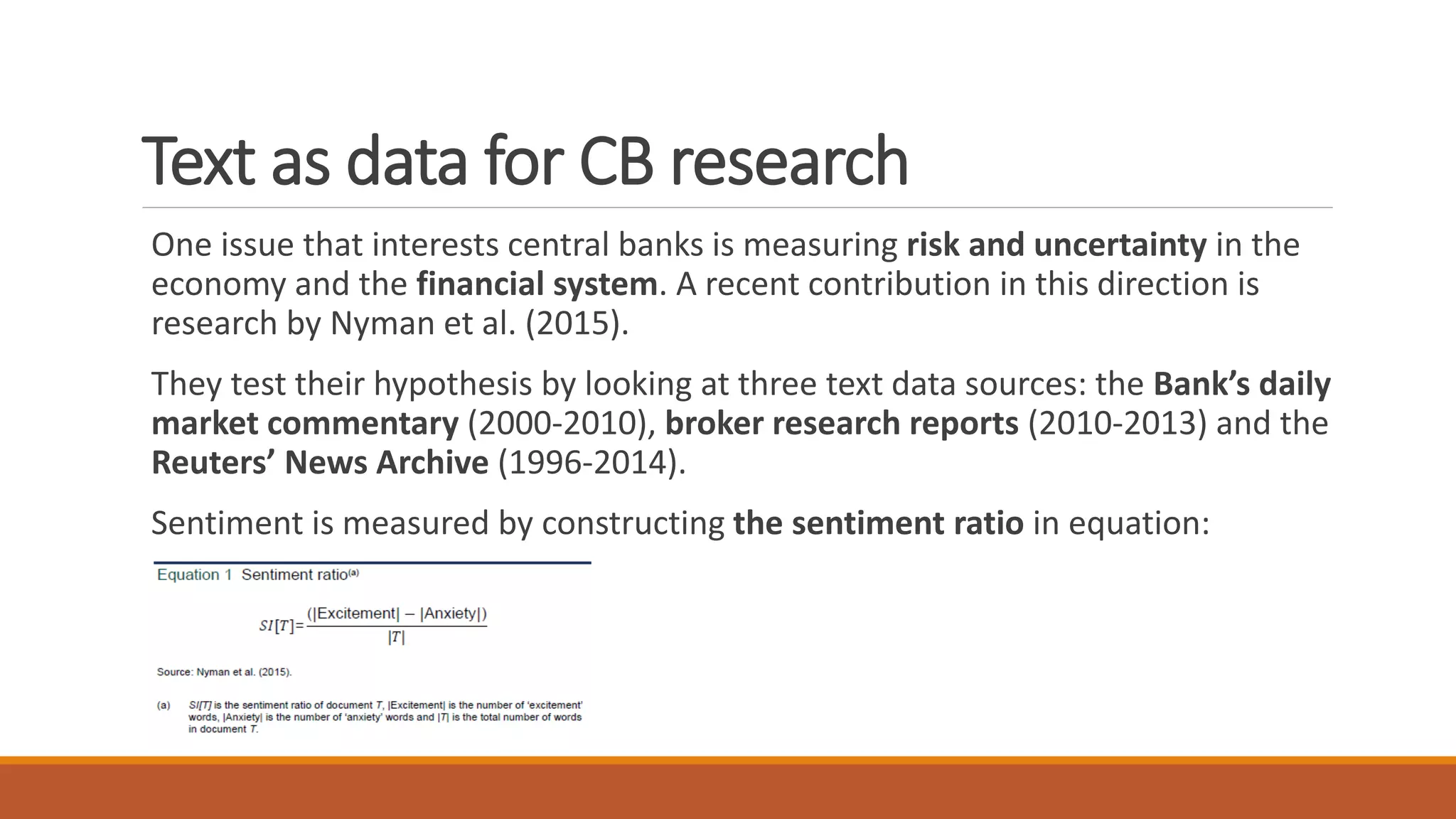

4) Unstructured text and narrative data from news, market commentary, and reports can be analyzed with machine learning to measure economic sentiment, risk, uncertainty, and consensus.
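Item 1 in the list above can be made concrete with a minimal sketch: a probability-of-default (PD) model that combines one borrower variable and one macroeconomic variable. It uses logistic regression fitted by batch gradient descent in plain Python. Logistic regression is the classic baseline for PD modeling rather than one of the more flexible machine learning methods the item has in mind, but swapping in a tree ensemble or neural network would keep the same inputs and outputs. The feature names (`debt_to_income`, `unemployment_rate`), their scaling, and the eight borrowers are hypothetical.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(X: List[List[float]], y: List[int],
                 lr: float = 0.5, epochs: int = 5000) -> List[float]:
    """Return weights [bias, w_1, ..., w_k] fitted by batch gradient descent on log-loss."""
    n, k = len(X), len(X[0])
    w = [0.0] * (k + 1)
    for _ in range(epochs):
        grad = [0.0] * (k + 1)
        for xi, yi in zip(X, y):
            err = predict_pd(w, xi) - yi  # derivative of log-loss w.r.t. the logit
            grad[0] += err
            for j, xj in enumerate(xi):
                grad[j + 1] += err * xj
        w = [wj - lr * g / n for wj, g in zip(w, grad)]
    return w


def predict_pd(w: Sequence[float], x: Sequence[float]) -> float:
    """Estimated probability of default for one borrower."""
    return sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], x)))


# Hypothetical toy data: [debt_to_income, unemployment_rate], both min-max scaled to [0, 1].
# Label 1 means the borrower defaulted within the modeling horizon.
X = [[0.1, 0.3], [0.2, 0.2], [0.3, 0.4], [0.2, 0.3],
     [0.8, 0.7], [0.9, 0.8], [0.7, 0.9], [0.8, 0.8]]
y = [0, 0, 0, 0, 1, 1, 1, 1]

weights = fit_logistic(X, y)
print(f"PD, low-risk borrower:  {predict_pd(weights, [0.2, 0.3]):.3f}")
print(f"PD, high-risk borrower: {predict_pd(weights, [0.8, 0.8]):.3f}")
```

Because the macroeconomic variable enters the same equation as the borrower variable, a deterioration in the (hypothetical) unemployment input raises every borrower's estimated PD, which is what lets such a model be used for scenario analysis.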
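For item 3, the usual way to apply a random forest to a macroeconomic series is to recast forecasting as supervised learning: each target value is predicted from its own recent lags. The sketch below implements a small random forest from scratch (bootstrap resampling, a random subset of lag features at each split, variance-reducing splits, and averaging across trees) and produces a one-step-ahead forecast. The series is synthetic, the three-lag setup and the hyperparameters are assumptions, and the sketch shows only the mechanics, not the forecasting comparison the item refers to.

```python
import math
import random
from statistics import mean
from typing import List, Optional, Tuple, Union

# A tree is either a leaf value or (feature index, threshold, left subtree, right subtree).
Tree = Union[float, Tuple[int, float, "Tree", "Tree"]]


def make_lagged(series: List[float], n_lags: int) -> Tuple[List[List[float]], List[float]]:
    """Recast a univariate series as supervised pairs: previous n_lags values -> next value."""
    X = [series[t - n_lags:t] for t in range(n_lags, len(series))]
    return X, series[n_lags:]


def sse(values: List[float]) -> float:
    m = mean(values)
    return sum((v - m) ** 2 for v in values)


def build_tree(X: List[List[float]], y: List[float], depth: int, max_depth: int,
               min_leaf: int, mtry: int, rng: random.Random) -> Tree:
    if depth >= max_depth or len(y) < 2 * min_leaf:
        return mean(y)  # leaf: predict the mean target in this node
    best = None
    for f in rng.sample(range(len(X[0])), mtry):  # random feature subset at each split
        values = sorted({row[f] for row in X})
        for lo, hi in zip(values, values[1:]):
            thr = (lo + hi) / 2
            left = [i for i, row in enumerate(X) if row[f] <= thr]
            right = [i for i, row in enumerate(X) if row[f] > thr]
            if len(left) < min_leaf or len(right) < min_leaf:
                continue
            cost = sse([y[i] for i in left]) + sse([y[i] for i in right])
            if best is None or cost < best[0]:
                best = (cost, f, thr, left, right)
    if best is None:
        return mean(y)
    _, f, thr, left, right = best

    def child(idx: List[int]) -> Tree:
        return build_tree([X[i] for i in idx], [y[i] for i in idx],
                          depth + 1, max_depth, min_leaf, mtry, rng)

    return (f, thr, child(left), child(right))


def predict_tree(tree: Tree, x: List[float]) -> float:
    while isinstance(tree, tuple):
        f, thr, left, right = tree
        tree = left if x[f] <= thr else right
    return tree


def fit_forest(X: List[List[float]], y: List[float], n_trees: int = 30, max_depth: int = 4,
               min_leaf: int = 3, mtry: Optional[int] = None, seed: int = 0) -> List[Tree]:
    rng = random.Random(seed)
    mtry = mtry or max(1, len(X[0]) // 3)  # p/3 features per split, a common regression default
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(y)) for _ in range(len(y))]  # bootstrap resample
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx],
                                 0, max_depth, min_leaf, mtry, rng))
    return forest


def predict_forest(forest: List[Tree], x: List[float]) -> float:
    return mean(predict_tree(tree, x) for tree in forest)  # average the trees' predictions


# Synthetic monthly "inflation" series (hypothetical, not real data).
gen = random.Random(42)
series = [2.0 + 1.5 * math.sin(t / 6) + 0.2 * gen.gauss(0, 1) for t in range(100)]

X, y = make_lagged(series, n_lags=3)
forest = fit_forest(X, y)
print(f"One-step-ahead forecast: {predict_forest(forest, series[-3:]):.2f}")
```

In practice the forecast would be judged out of sample, for example by refitting on an expanding window and comparing errors against a benchmark such as an autoregressive model; the single in-sample fit here only illustrates the setup.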
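Item 4 can be illustrated with one of the simplest supervised text classifiers, multinomial naive Bayes, whose document-level labels are then aggregated into a sentiment index. The training snippets and their labels are hypothetical, and so is the index definition (share of documents classified positive minus share classified negative); real applications would train on a large labeled corpus and often use richer models. Risk, uncertainty, or consensus measures could be built the same way with different labels.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayes:
    """Multinomial naive Bayes text classifier with add-one (Laplace) smoothing."""

    def fit(self, docs: List[str], labels: List[str]) -> "NaiveBayes":
        self.classes = sorted(set(labels))
        self.counts: Dict[str, Counter] = {c: Counter() for c in self.classes}
        for doc, label in zip(docs, labels):
            self.counts[label].update(tokenize(doc))
        self.vocab = set().union(*self.counts.values())
        self.totals = {c: sum(self.counts[c].values()) for c in self.classes}
        self.log_prior = {c: math.log(labels.count(c) / len(labels)) for c in self.classes}
        return self

    def predict(self, doc: str) -> str:
        scores = {}
        for c in self.classes:
            score = self.log_prior[c]
            for tok in tokenize(doc):
                if tok in self.vocab:  # words unseen in training are skipped
                    score += math.log((self.counts[c][tok] + 1) /
                                      (self.totals[c] + len(self.vocab)))
            scores[c] = score
        return max(scores, key=scores.get)


def net_sentiment(model: NaiveBayes, docs: List[str]) -> float:
    """Share of documents classified positive minus share classified negative, in [-1, 1]."""
    labels = [model.predict(d) for d in docs]
    return (labels.count("positive") - labels.count("negative")) / len(labels)


# Hypothetical hand-labeled training snippets.
train = [
    ("growth is strong and demand is robust", "positive"),
    ("markets rallied on solid earnings", "positive"),
    ("hiring improved and confidence rose", "positive"),
    ("recession fears deepen as output falls", "negative"),
    ("defaults rise and credit conditions tighten", "negative"),
    ("uncertainty weighs on weak investment", "negative"),
]
model = NaiveBayes().fit([d for d, _ in train], [lbl for _, lbl in train])

headlines = ["confidence rose as growth stayed strong",
             "credit defaults rise amid weak investment"]
print([model.predict(h) for h in headlines], net_sentiment(model, headlines))
```

Computing `net_sentiment` over each period's batch of news or reports turns individual classifications into a time series that can be tracked alongside conventional indicators.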