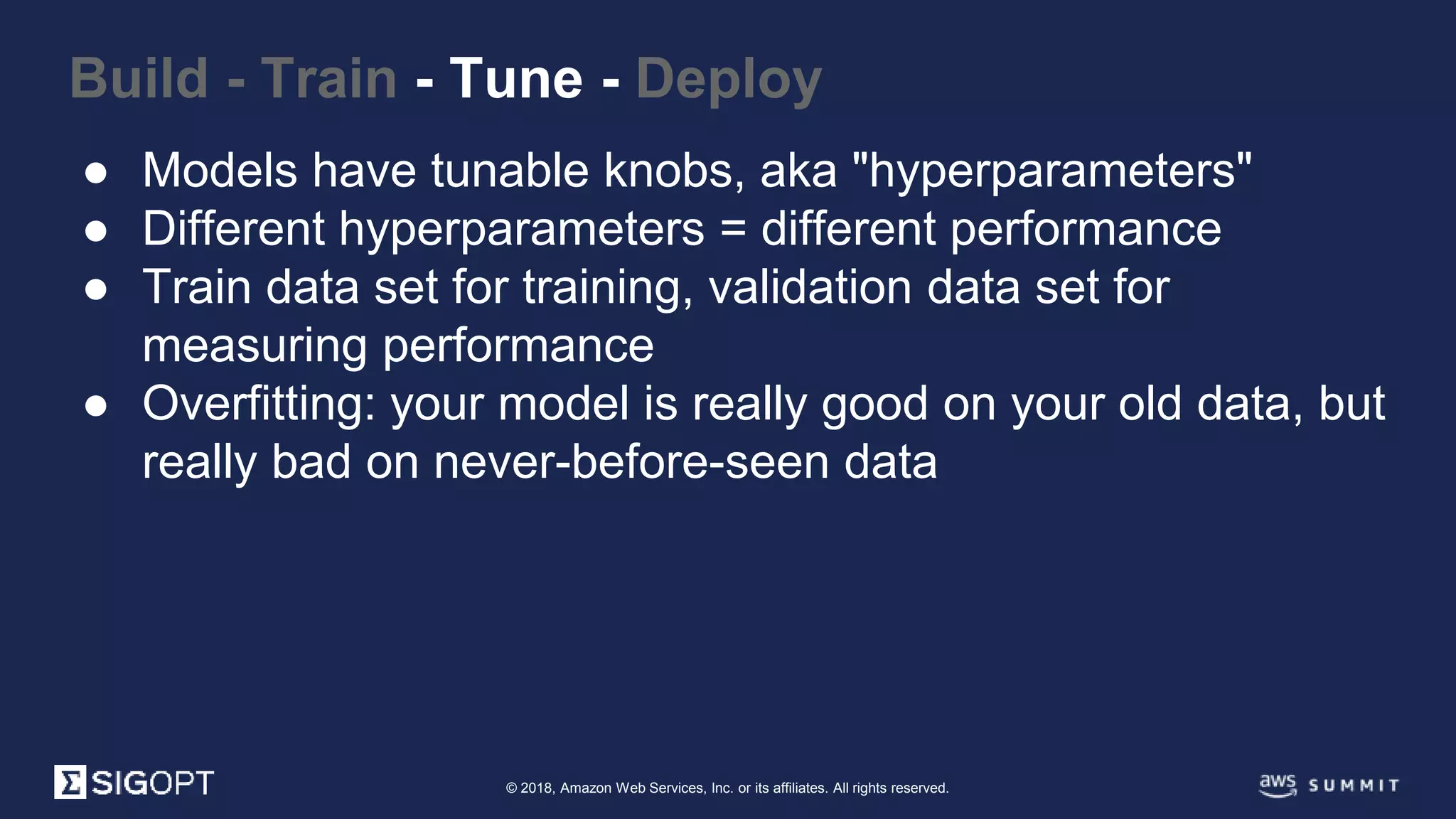

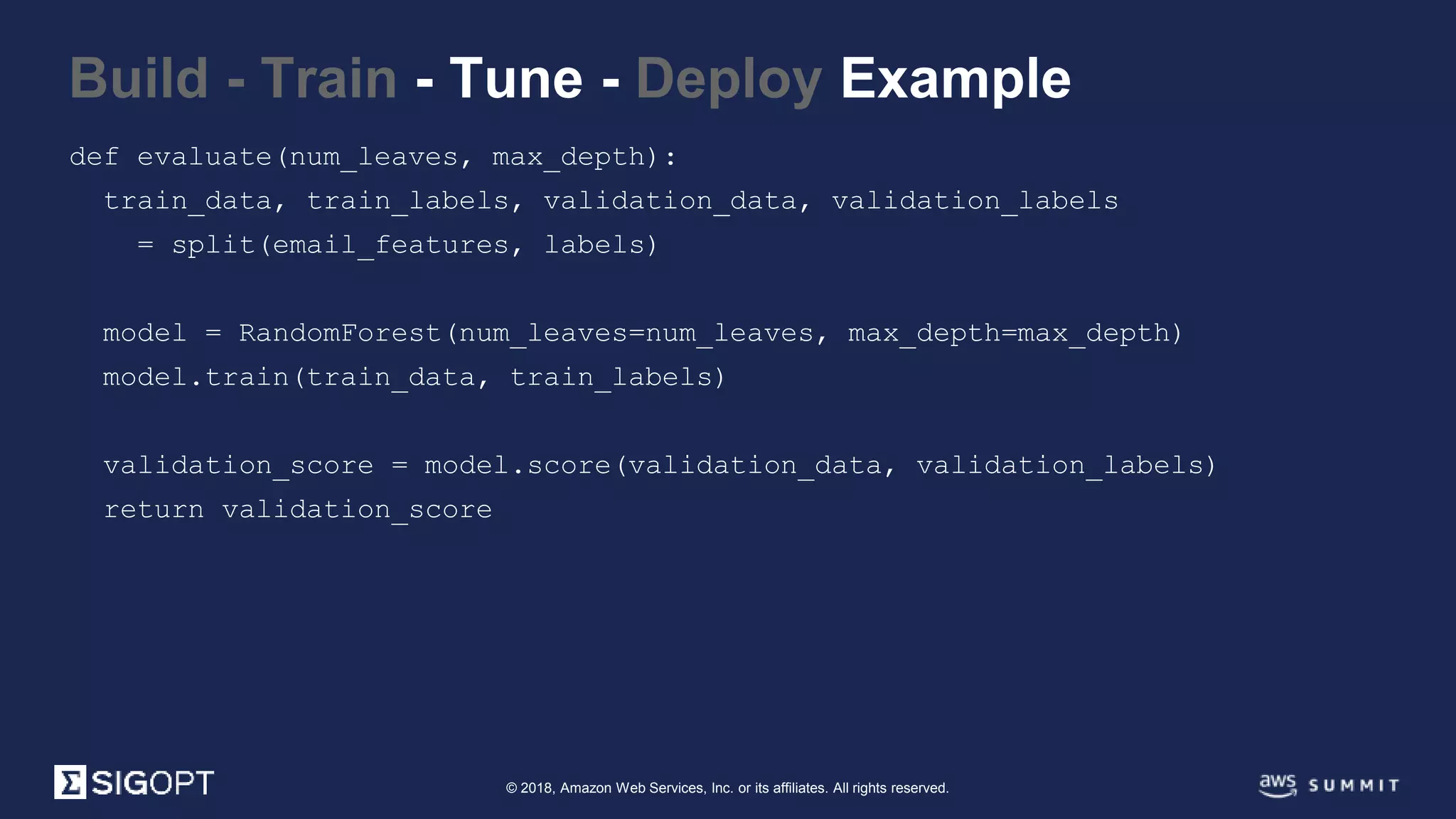

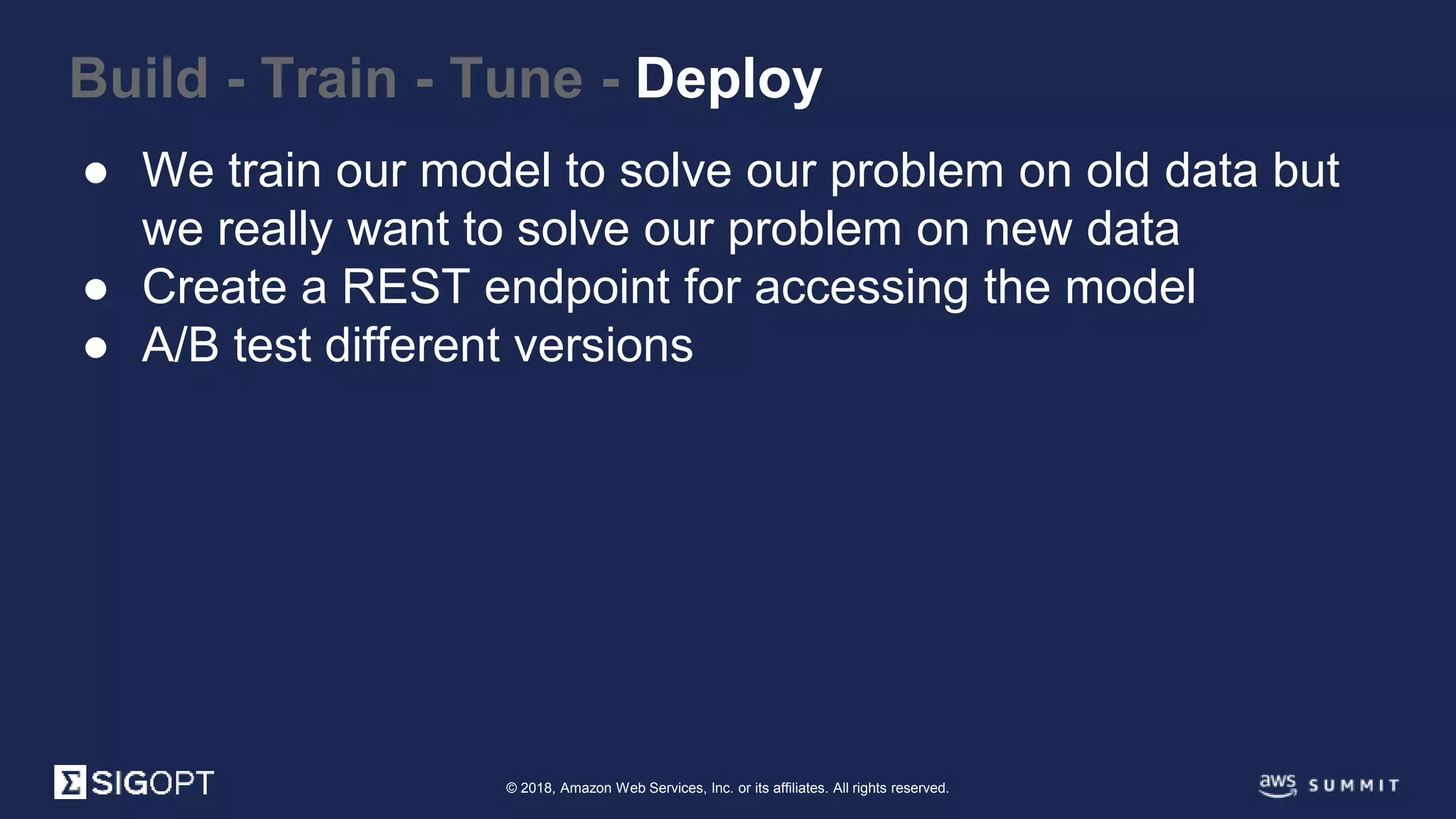

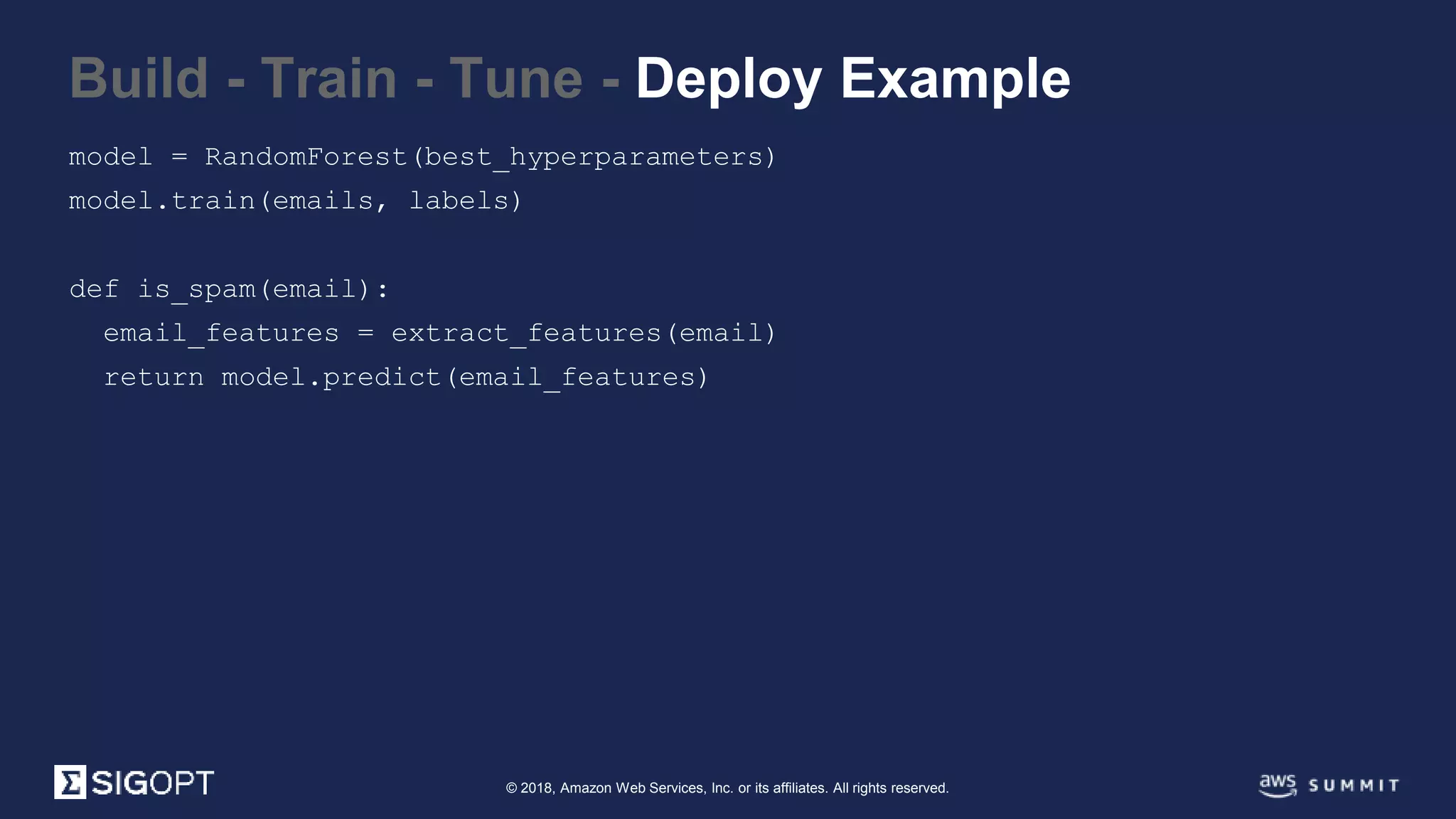

The document provides a foundational overview of machine learning, defining it as a problem-solving approach that improves through data. It outlines the steps involved in building a machine learning model, including data transformation, feature engineering, model training, tuning, and deployment, using spam email classification as an example. Key concepts such as model selection, hyperparameters, overfitting, and creating endpoints for model access are also discussed.

![© 2018, Amazon Web Services, Inc. or its affiliates. All rights reserved.

● Model: random forest

● Features: percentage of misspelled words, number of

words from a blacklist, domain name of email sender

Build - Train - Tune - Deploy Example

def extract_features(email):

return [

email.mispelled_words,

email.words_on_blacklist,

email.sender.domain,

]](https://image.slidesharecdn.com/machinelearningfundamentals-180404184551/75/Machine-Learning-Fundamentals-9-2048.jpg)

![© 2018, Amazon Web Services, Inc. or its affiliates. All rights reserved.

● Model: random forest

● Features: percentage

of misspelled words,

number of words from

a blacklist, domain

name of email sender

Build - Train - Tune - Deploy Example

email_features = [

[0.1, 1, 'hotmail.com'],

[0.7, 20, 'gmail.com'],

[0.3, 92, 'yahoo.com'],

]

labels = [0, 1, 1]

model = RandomForest()

model.train(email_features, labels)](https://image.slidesharecdn.com/machinelearningfundamentals-180404184551/75/Machine-Learning-Fundamentals-11-2048.jpg)