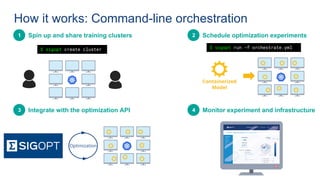

This document discusses techniques for optimizing deep learning models, including hyperparameter optimization. It describes SigOpt's approach, which uses software to automate repeatable tasks such as training orchestration and model tuning so that experts can focus on data science work. SigOpt uses techniques such as Bayesian optimization, multitask optimization, and infrastructure orchestration to improve model performance while reducing cost and tuning time.