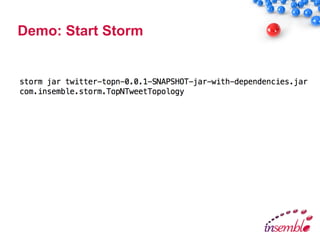

The document discusses the relevance of big data and the Hadoop ecosystem, emphasizing the need for a low-latency streaming architecture. It covers components such as Flume, Kafka, and Storm, explaining their respective roles in data collection, messaging, and real-time processing. It also presents a demo that uses these technologies to analyze Twitter data for trending topics, showing how they integrate in practice.
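
To make the trending-topics idea concrete, here is a minimal sketch of the kind of windowed hashtag count such a demo might compute in its real-time processing stage. It is plain Python with no Flume, Kafka, or Storm dependency, and it is not the demo's actual code: the `TrendingTopics` class, its method names, and the 60-second window are all assumptions made for illustration.

```python
import re
from collections import Counter, deque

# Hypothetical pattern: a hashtag is '#' followed by word characters.
HASHTAG = re.compile(r"#(\w+)")


class TrendingTopics:
    """Counts hashtags seen within the last `window_seconds` seconds.

    Illustrative only; names and window size are assumptions,
    not taken from the demo described above.
    """

    def __init__(self, window_seconds=60):
        self.window_seconds = window_seconds
        self.events = deque()    # (timestamp, hashtag) in arrival order
        self.counts = Counter()  # hashtag -> occurrences inside the window

    def add(self, timestamp, text):
        """Record the hashtags in one message, then drop expired ones."""
        for tag in HASHTAG.findall(text):
            tag = tag.lower()
            self.events.append((timestamp, tag))
            self.counts[tag] += 1
        self._expire(timestamp)

    def _expire(self, now):
        """Remove events that have fallen out of the sliding window."""
        while self.events and self.events[0][0] <= now - self.window_seconds:
            _, tag = self.events.popleft()
            self.counts[tag] -= 1
            if self.counts[tag] == 0:
                del self.counts[tag]

    def top(self, n=3):
        """Return the n most frequent hashtags currently in the window."""
        return self.counts.most_common(n)


tracker = TrendingTopics(window_seconds=60)
tracker.add(0, "Learning #Kafka and #Storm")
tracker.add(10, "#kafka is fast")
print(tracker.top(1))  # [('kafka', 2)]
```

In a pipeline like the one summarized above, messages would presumably arrive from the messaging layer rather than from direct `add` calls, and the counting would be distributed across workers. The sketch assumes timestamps arrive in non-decreasing order.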