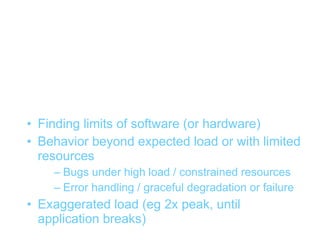

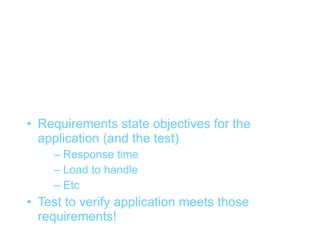

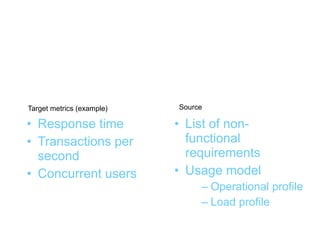

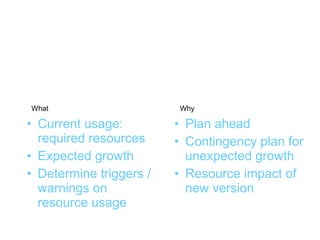

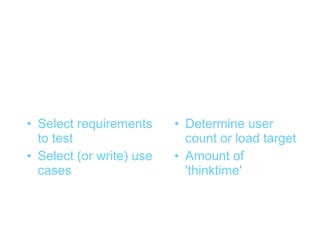

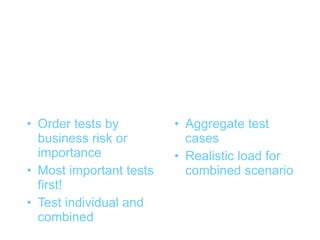

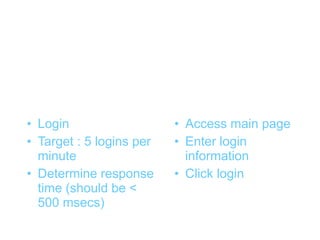

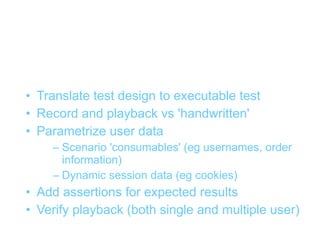

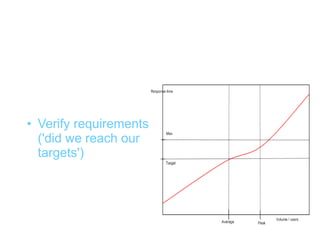

This document discusses load testing of web applications. It explains why load testing matters: to verify performance requirements and to plan resources. It describes what to test, including how to design test cases and select appropriate metrics. Finally, it outlines how to perform load testing: use tools to record and execute tests, prepare the test environment, and analyze the results to check them against requirements and identify bottlenecks.
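The analysis step can be illustrated with a small sketch. It assumes the load testing tool has already exported one record per request (latency and success flag). The `Sample` type, the metric names, and all thresholds are hypothetical and are not taken from the slides. The 95th percentile uses the nearest-rank method, which is one common choice among several.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    """One recorded request (hypothetical record format)."""
    latency_ms: float
    ok: bool


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value such that at least
    pct percent of all values are less than or equal to it."""
    if not values:
        raise ValueError("percentile() needs at least one value")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples, duration_s):
    """Reduce raw samples to the metrics selected for the test."""
    latencies = [s.latency_ms for s in samples]
    errors = sum(1 for s in samples if not s.ok)
    return {
        "throughput_rps": len(samples) / duration_s,
        "p95_ms": percentile(latencies, 95),
        "error_rate": errors / len(samples),
    }


def check_requirements(summary, max_p95_ms, min_throughput_rps, max_error_rate):
    """Return the names of the metrics that miss their (assumed) targets."""
    failures = []
    if summary["p95_ms"] > max_p95_ms:
        failures.append("p95_ms")
    if summary["throughput_rps"] < min_throughput_rps:
        failures.append("throughput_rps")
    if summary["error_rate"] > max_error_rate:
        failures.append("error_rate")
    return failures
```

An empty list from `check_requirements` means every target was met. A non-empty list names the metrics to investigate first when looking for a bottleneck, for example by comparing them with server-side CPU, memory, and database measurements from the same test run.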