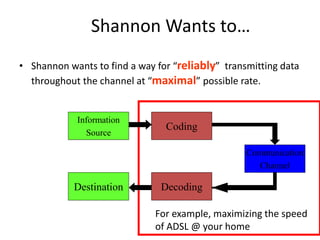

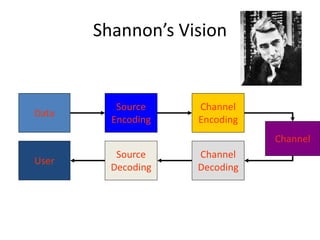

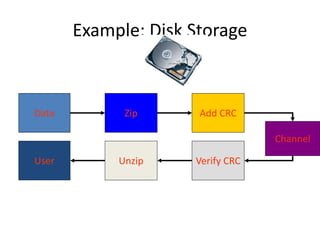

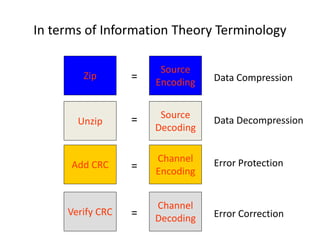

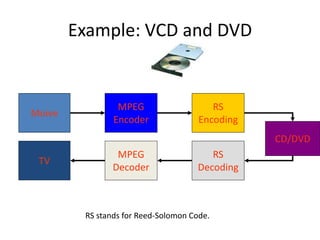

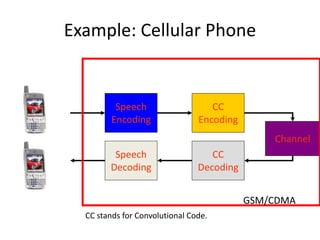

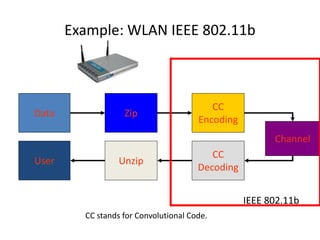

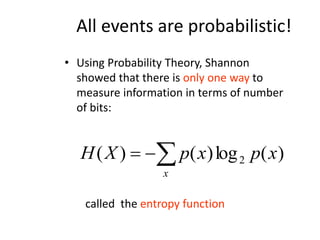

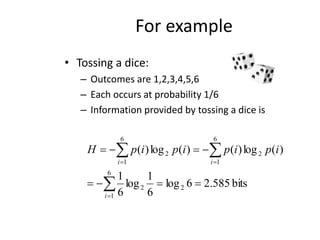

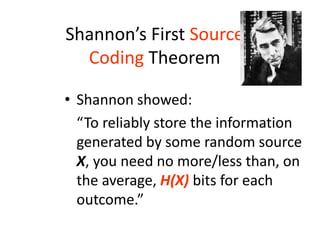

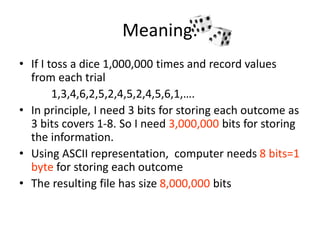

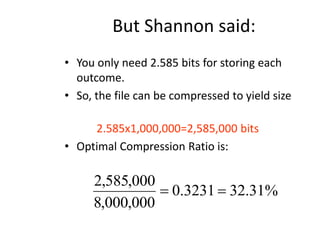

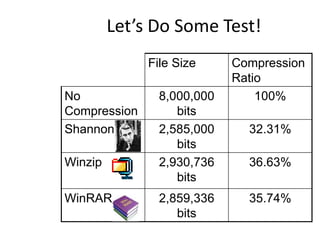

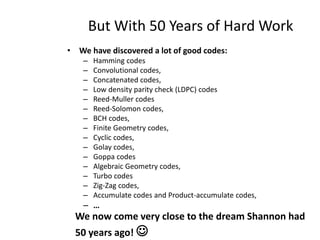

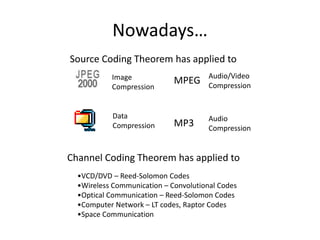

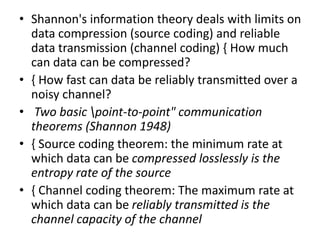

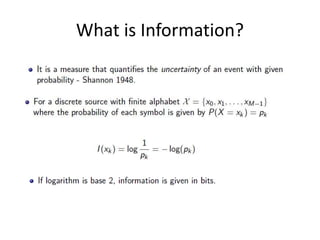

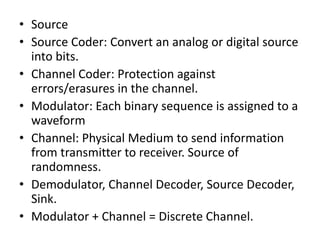

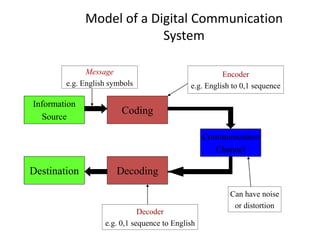

This document introduces information theory and coding. It discusses Shannon's foundational work in the 1940s, which established information theory and answered two fundamental questions: how far data can be compressed, and how fast data can be transmitted reliably over a communication channel. It describes the basic components of a digital communication system and Shannon's definition of communication. The document also summarizes Shannon's source coding and channel coding theorems and notes that modern coding techniques come close to achieving Shannon's theoretical limits.
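The two limits mentioned above can be made concrete with a small numerical sketch. It is not taken from the slides: the function names, the example source distribution, and the choice of a binary symmetric channel are all assumptions made for illustration. The source coding theorem bounds lossless compression by the entropy $H(X) = -\sum_x p(x) \log_2 p(x)$ bits per symbol, and for a binary symmetric channel with crossover probability $p$ the capacity is $C = 1 - H_2(p)$ bits per channel use.

```python
import math


def entropy(probs):
    """Shannon entropy, in bits, of a discrete probability distribution."""
    # Terms with p == 0 contribute nothing (the limit of p*log2(p) is 0).
    return -sum(p * math.log2(p) for p in probs if p > 0)


def bsc_capacity(crossover):
    """Capacity, in bits per channel use, of a binary symmetric channel.

    `crossover` is the probability that a transmitted bit is flipped.
    """
    return 1.0 - entropy([crossover, 1.0 - crossover])


# Hypothetical four-symbol source, chosen only for illustration.
source = [0.5, 0.25, 0.125, 0.125]

# Lower bound on the average code length of any lossless code for this source.
print("entropy (bits/symbol):", entropy(source))

# Maximum reliable rate over a channel that flips 11% of the bits.
print("BSC capacity (bits/use):", bsc_capacity(0.11))
```

For this example source the entropy is 1.75 bits per symbol, so a fixed 2-bit code is not optimal, while no lossless code can average fewer than 1.75 bits per symbol. A channel that flips bits half the time has zero capacity, and a noiseless binary channel has a capacity of one bit per use.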

![Shannon’s Definition of Communication: “The fundamental problem of communication is that of reproducing at one point either exactly or approximately a message selected at another point.” “Frequently the messages have meaning” “... [which is] irrelevant to the engineering problem.”](https://image.slidesharecdn.com/lecture1-240125230901-66db9eef/85/Lecture-1-pptx-7-320.jpg)