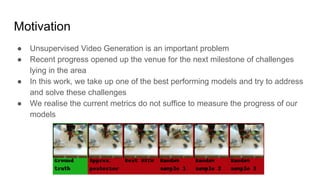

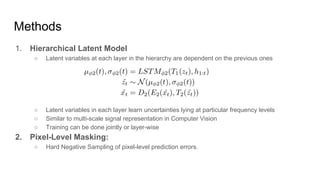

This document discusses challenges and advances in unsupervised video generation, with a focus on improving existing models. It introduces methods such as hierarchical latent models and pixel-level masking, which aim to make video predictions more accurate while handling object interaction and occlusion. An evaluation of several model variants suggests that the hierarchical latent model trained in layers outperforms the baseline on certain metrics.
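As a rough illustration of what pixel-level masking can mean in video prediction, the sketch below blends a newly generated frame with the previous frame using a per-pixel mask. This is an assumption about the general technique, not the formulation used in this document: the function name `composite_frame`, the grayscale nested-list frame layout, and the convention that a mask value of 1 selects the generated pixel are all hypothetical choices made for the example.

```python
def composite_frame(prev_frame, generated, mask):
    """Blend two frames pixel by pixel (illustrative sketch only).

    mask value 1.0 -> take the generated pixel (e.g. newly revealed content)
    mask value 0.0 -> copy the pixel from the previous frame
    Values in between mix the two linearly.
    """
    if not (len(prev_frame) == len(generated) == len(mask)):
        raise ValueError("all inputs must have the same height")
    out = []
    for prev_row, gen_row, mask_row in zip(prev_frame, generated, mask):
        if not (len(prev_row) == len(gen_row) == len(mask_row)):
            raise ValueError("all inputs must have the same width")
        out.append([m * g + (1.0 - m) * p
                    for p, g, m in zip(prev_row, gen_row, mask_row)])
    return out


# Tiny 2x2 grayscale example with made-up values.
prev = [[0.0, 1.0], [0.5, 0.5]]
gen = [[1.0, 0.0], [0.0, 1.0]]
mask = [[1.0, 0.0], [0.5, 0.0]]
blended = composite_frame(prev, gen, mask)
```

Under this convention, a model could copy stable background pixels from the previous frame and generate only the regions where objects move or become unoccluded. How the mask itself is predicted is left open here.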