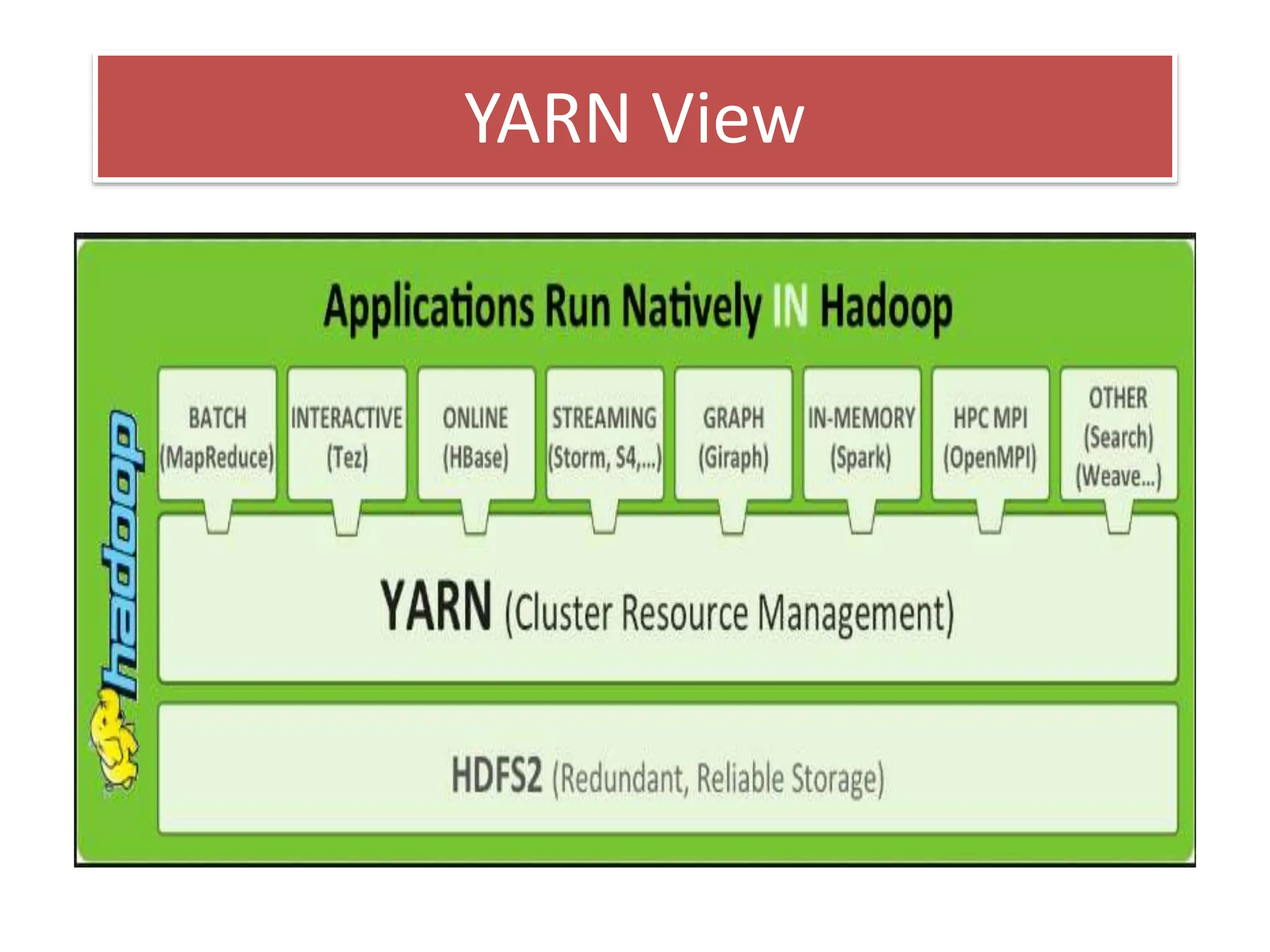

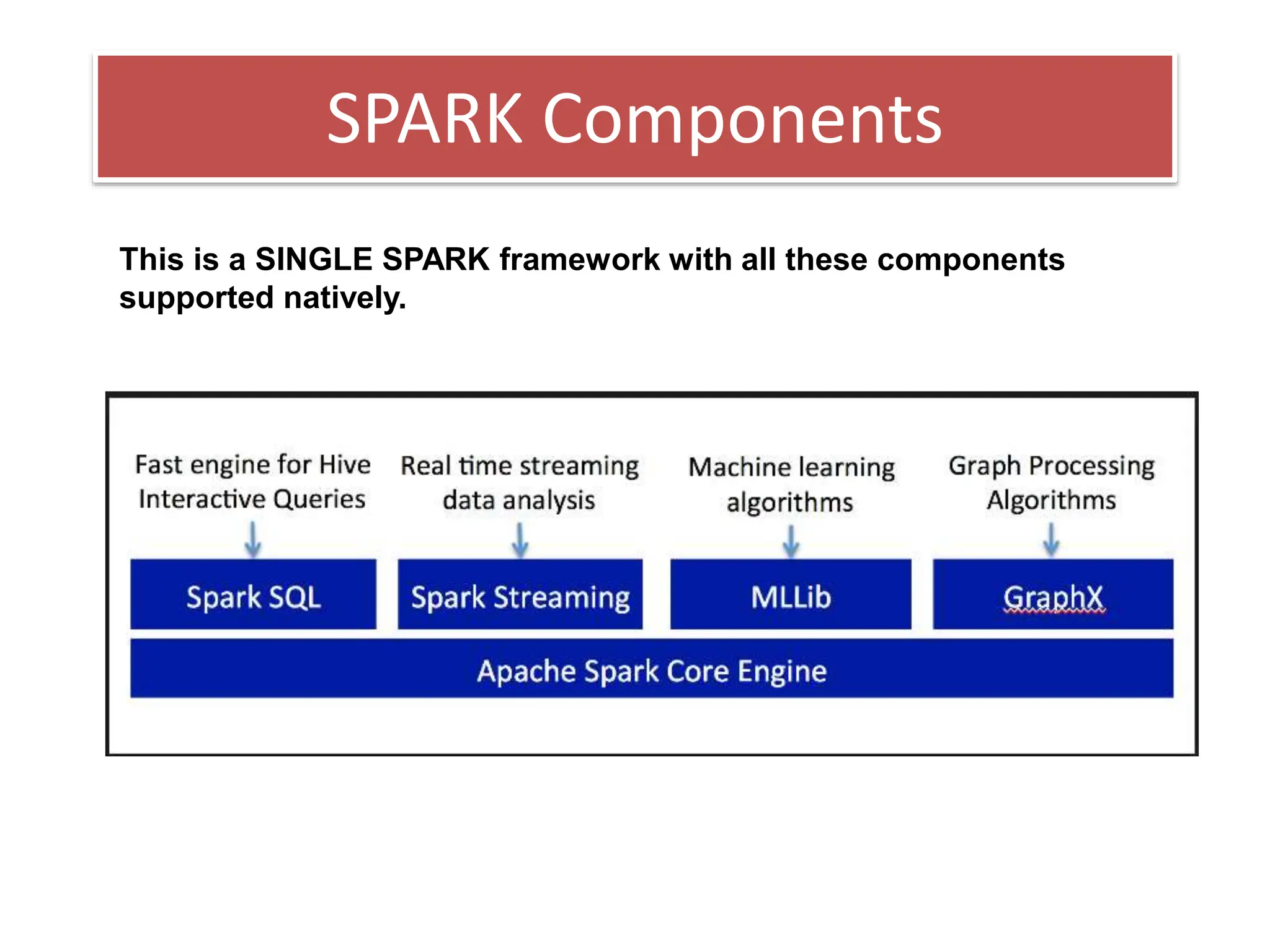

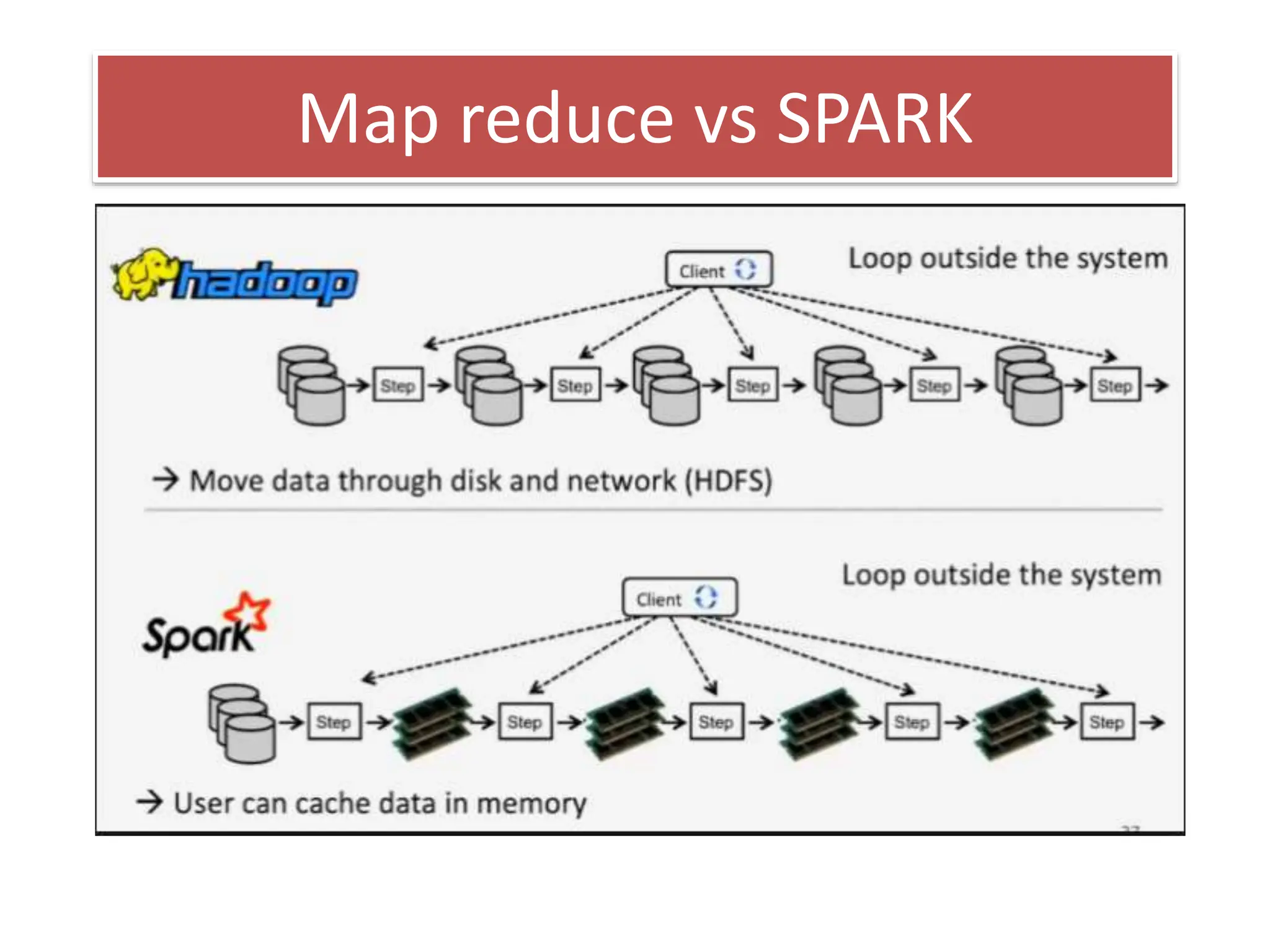

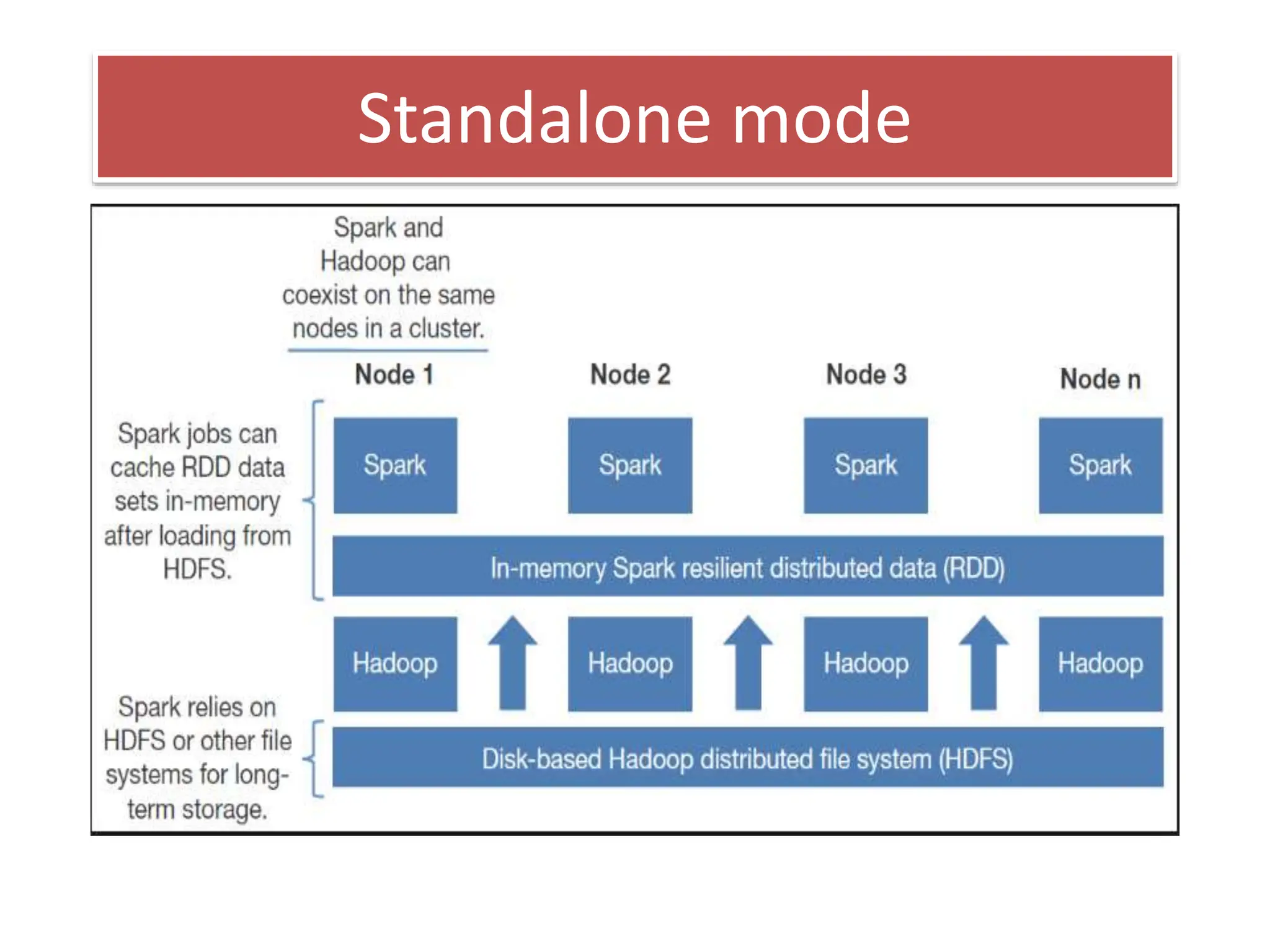

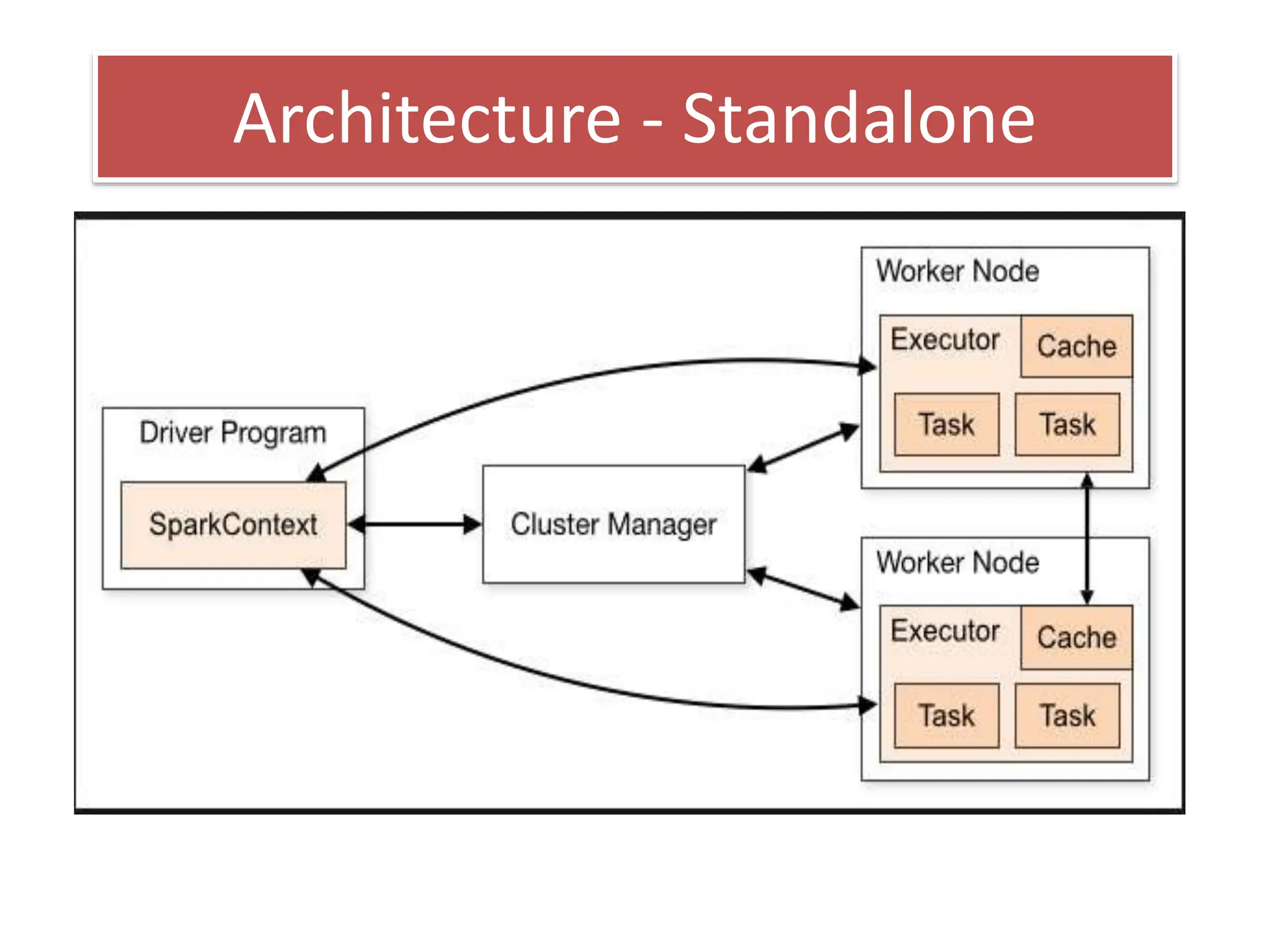

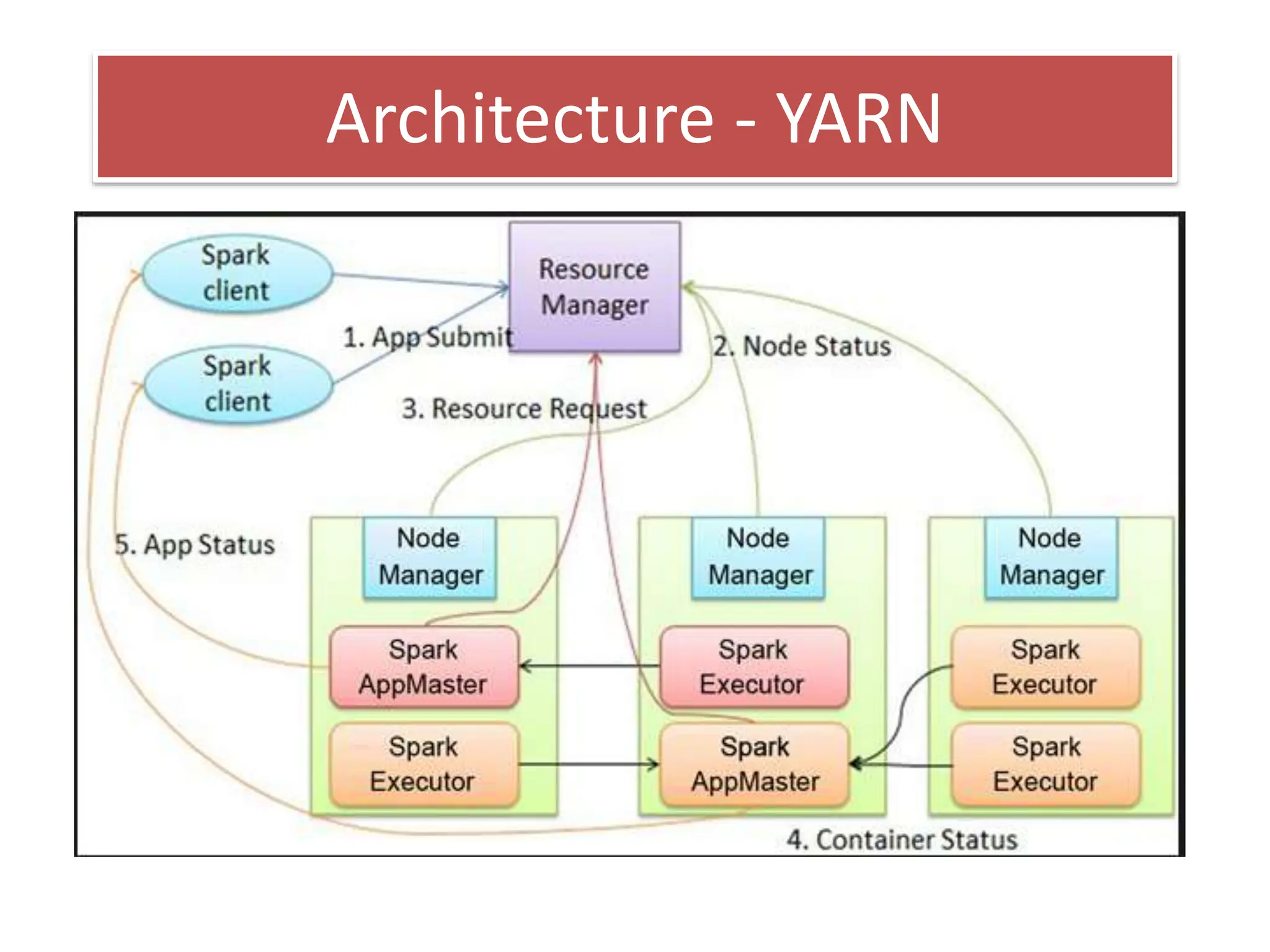

This document discusses Apache Spark, a fast, general-purpose engine for large-scale data processing. It presents three key advantages of Spark over MapReduce: in-memory processing, which is reported to be 10-100x faster; support for interactive queries; and a single engine that integrates streaming, SQL, machine learning, and graph processing. Spark's core abstraction is the Resilient Distributed Dataset (RDD), a collection that is partitioned across the nodes of a cluster and can be cached in memory, so data shared between computations is read much faster than with MapReduce's disk-based approach.
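To make the RDD idea concrete, here is a minimal sketch in plain Python. It is a toy model, not Spark's implementation or API: the class `ToyRDD`, its `compute_count` counter, and the round-robin partitioning are all assumptions made for illustration. It shows only the three properties named above: data split into partitions, transformations that stay lazy until an action runs, and an in-memory cache that lets later actions reuse earlier work.

```python
from typing import Callable, List, Optional


class ToyRDD:
    """Hypothetical stand-in for an RDD: partitioned, lazy, optionally cached."""

    def __init__(self, compute: Callable[[], List[list]]):
        self._compute = compute            # recipe for (re)building the partitions
        self._cached: Optional[List[list]] = None
        self._cache_enabled = False
        self.compute_count = 0             # how many times the recipe actually ran

    @classmethod
    def parallelize(cls, data: list, num_partitions: int) -> "ToyRDD":
        # Assumption: split round-robin; a real cluster would place
        # each partition on a worker node.
        parts = [data[i::num_partitions] for i in range(num_partitions)]
        return cls(lambda: parts)

    def map(self, fn: Callable) -> "ToyRDD":
        # Lazy transformation: nothing runs until an action needs the data.
        return ToyRDD(lambda: [[fn(x) for x in part] for part in self.partitions()])

    def cache(self) -> "ToyRDD":
        # Mark this dataset to be kept in memory after its first computation.
        self._cache_enabled = True
        return self

    def partitions(self) -> List[list]:
        if self._cached is not None:
            return self._cached            # served from memory, no recomputation
        self.compute_count += 1
        result = self._compute()
        if self._cache_enabled:
            self._cached = result
        return result

    def sum(self):
        # Action: partial sum per partition, then combine the partials.
        return sum(sum(part) for part in self.partitions())

    def count(self) -> int:
        # Action: count per partition, then combine.
        return sum(len(part) for part in self.partitions())


squares = (
    ToyRDD.parallelize(list(range(1, 11)), num_partitions=3)
    .map(lambda x: x * x)
    .cache()
)
total = squares.sum()    # first action computes the partitions and caches them
n = squares.count()      # second action reuses the cached partitions
```

After both actions, `squares.compute_count` is 1; without the `.cache()` call the `map` step would run once per action. Spark's own RDD API has methods with the same names (`parallelize`, `map`, `cache`), but there the partitions live on different machines and lost partitions are rebuilt from lineage, which this sketch does not model.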