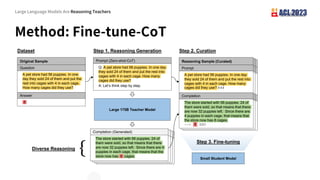

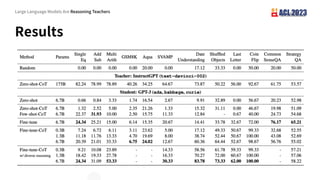

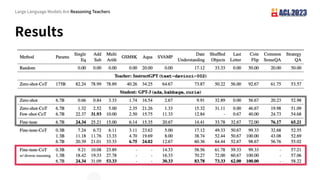

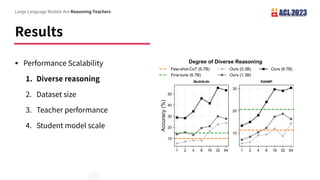

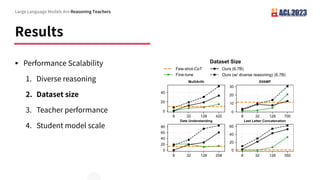

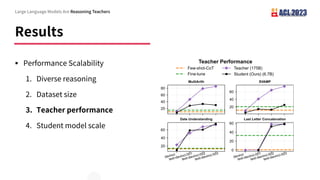

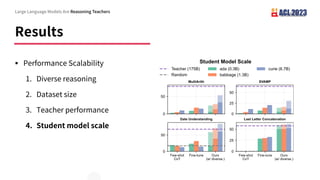

The document discusses chain-of-thought (CoT) reasoning in large language models, in particular how GPT-3 (175 billion parameters) can serve as a reasoning teacher for smaller models (70 million to 6.7 billion parameters). It shows how CoT prompting of the large model can generate training data for complex reasoning tasks, and reports significant performance improvements for the smaller models through a method called Fine-tune-CoT. It also addresses the scalability of the approach and the trade-offs in development and inference costs.

![Short Summary

§ Chain-of-thought (CoT) reasoning [Wei 2022] enables complex reasoning

… in huge models with over 100B 🤯 parameters.

Large Language Models Are Reasoning Teachers](https://image.slidesharecdn.com/presentation-230706051439-ab1dd473/85/Large-Language-Models-Are-Reasoning-Teachers-2-320.jpg)

![Short Summary

§ Chain-of-thought (CoT) reasoning [Wei 2022] enables complex reasoning

… in huge models with over 400GB VRAM 💰.

Large Language Models Are Reasoning Teachers](https://image.slidesharecdn.com/presentation-230706051439-ab1dd473/85/Large-Language-Models-Are-Reasoning-Teachers-3-320.jpg)

![Short Summary

§ Chain-of-thought (CoT) reasoning [Wei 2022] enables complex reasoning

… in huge models with over 100B 🤯 parameters.

§ We use GPT-3 175B as a reasoning teacher 🧑‍🏫

to teach smaller students with 70M‒6.7B parameters.

Large Language Models Are Reasoning Teachers](https://image.slidesharecdn.com/presentation-230706051439-ab1dd473/85/Large-Language-Models-Are-Reasoning-Teachers-4-320.jpg)

![Short Summary

§ Chain-of-thought (CoT) reasoning [Wei 2022] enables complex reasoning

… in huge models with over 100B 🤯 parameters.

§ We use GPT-3 175B as a reasoning teacher 🧑‍🏫

to teach smaller students with 70M‒6.7B parameters.

§ Diverse reasoning ✨ is a simple way to boost teaching.

Large Language Models Are Reasoning Teachers](https://image.slidesharecdn.com/presentation-230706051439-ab1dd473/85/Large-Language-Models-Are-Reasoning-Teachers-5-320.jpg)

![Short Summary

§ Chain-of-thought (CoT) reasoning [Wei 2022] enables complex reasoning

… in huge models with over 100B 🤯 parameters.

§ We use GPT-3 175B as a reasoning teacher 🧑‍🏫

to teach smaller students with 70M‒6.7B parameters.

§ Diverse reasoning ✨ is a simple way to boost teaching.

§ Extensive analysis 🕵 on the emergence of reasoning.

Large Language Models Are Reasoning Teachers](https://image.slidesharecdn.com/presentation-230706051439-ab1dd473/85/Large-Language-Models-Are-Reasoning-Teachers-6-320.jpg)

![Introduction

§ Background: chain-of-thought (CoT) prompting [Wei 2022] elicits models to

solve complex reasoning tasks step-by-step

§ Standard prompting is insufficient.

Large Language Models Are Reasoning Teachers](https://image.slidesharecdn.com/presentation-230706051439-ab1dd473/85/Large-Language-Models-Are-Reasoning-Teachers-7-320.jpg)

![Introduction

§ Background: chain-of-thought (CoT) prompting [Wei 2022] elicits models to

solve complex reasoning tasks step-by-step

§ Standard prompting is insufficient.

§ Limitation: CoT prompting is only applicable to very large models such as

GPT-3 175B and PaLM.

Large Language Models Are Reasoning Teachers](https://image.slidesharecdn.com/presentation-230706051439-ab1dd473/85/Large-Language-Models-Are-Reasoning-Teachers-8-320.jpg)

![Introduction

§ Background: chain-of-thought (CoT) prompting [Wei 2022] elicits models to

solve complex reasoning tasks step-by-step

§ Standard prompting is insufficient.

§ Limitation: CoT prompting is only applicable to very large models such as

GPT-3 175B and PaLM.

§ Solution: apply CoT prompting on very large models to generate training data

on complex reasoning for smaller models.

Large Language Models Are Reasoning Teachers](https://image.slidesharecdn.com/presentation-230706051439-ab1dd473/85/Large-Language-Models-Are-Reasoning-Teachers-9-320.jpg)
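The "Solution" bullet on the slide above can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the trigger phrase, the `teacher` callable, the `toy_teacher` stub, the answer-matching filter, and the prompt/completion layout are all assumptions made for the example. In practice `teacher` would wrap a call to a very large model such as GPT-3 175B.

```python
from typing import Callable, Dict, List

# Assumed zero-shot CoT trigger phrase; the slides do not specify the prompt.
COT_TRIGGER = "Let's think step by step."


def build_cot_prompt(question: str) -> str:
    """Wrap a question in a prompt that asks the teacher to reason step by step."""
    return f"Q: {question}\nA: {COT_TRIGGER}"


def make_student_data(
    dataset: List[Dict[str, str]],
    teacher: Callable[[str], Dict[str, str]],
) -> List[Dict[str, str]]:
    """Collect teacher rationales as fine-tuning samples for a smaller model.

    Assumption: a rationale is kept only if the teacher's final answer
    matches the gold label, so the student is not trained on wrong answers.
    """
    samples = []
    for item in dataset:
        output = teacher(build_cot_prompt(item["question"]))
        answer = output["answer"].strip()
        if answer != item["answer"].strip():
            continue  # discard rationales that end in the wrong answer
        rationale = output["rationale"].strip()
        samples.append({
            "prompt": item["question"],
            "completion": f"{rationale} Therefore, the answer is {answer}.",
        })
    return samples


def toy_teacher(prompt: str) -> Dict[str, str]:
    """Hypothetical stand-in for a very large model; returns canned outputs."""
    if "2 + 3" in prompt:
        return {"rationale": "2 plus 3 equals 5.", "answer": "5"}
    return {"rationale": "I guess.", "answer": "0"}


data = [
    {"question": "What is 2 + 3?", "answer": "5"},
    {"question": "What is 4 + 4?", "answer": "8"},
]
train = make_student_data(data, toy_teacher)
print(train)
```

With the toy teacher, only the first question survives the filter, because the canned answer to the second one is wrong. The resulting prompt/completion pairs are what a smaller student model would then be fine-tuned on.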