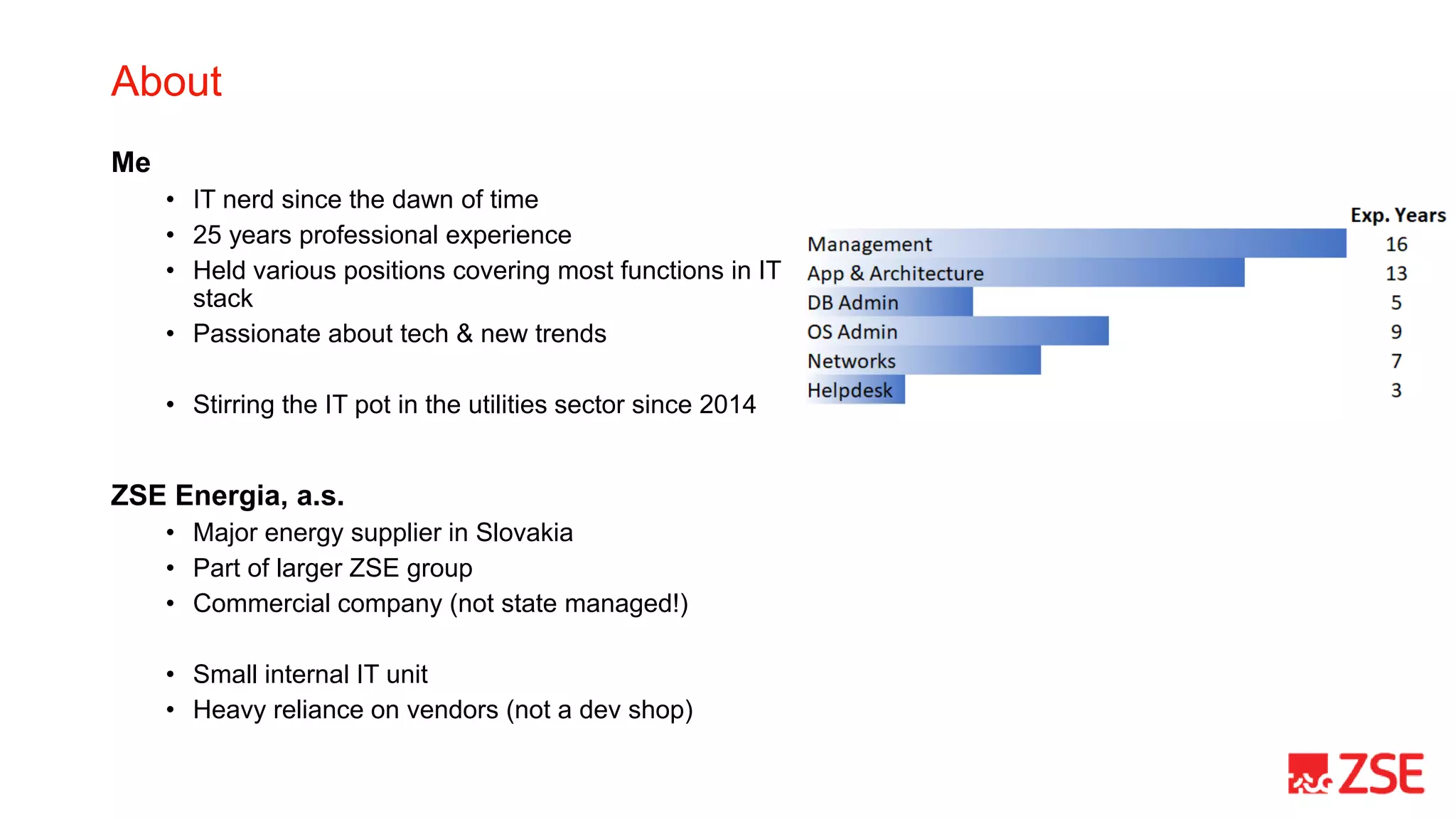

This document summarizes Kubernetes Day 2 operations at ZSE Energia, a.s., covering the move from Day 0 to Day 2 in less than 6 months. It describes how the team expanded their initial Kubernetes cluster, which started with ingress, logging, and a single application namespace, to include monitoring, backups, and additional namespaces. It recommends budgeting for disruptions, distributing pods across nodes, allowing generous termination periods, and upgrading control planes separately from node pools. It also notes approaches the team considered but did not adopt, such as heavy use of Helm charts, operators, or pre-packaged pipelines.
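The recommendations on disruption budgets, pod distribution, and termination periods correspond to standard Kubernetes objects and fields: `PodDisruptionBudget`, `topologySpreadConstraints`, and `terminationGracePeriodSeconds`. The following is a minimal sketch of how they might be expressed, not the manifests used at ZSE Energia, which the presentation does not show. The label `app: demo-api`, the object name, and all numeric values are hypothetical. Python is used only to build the manifests as plain dictionaries and print them as JSON, which `kubectl apply -f` accepts.

```python
import json

# Hypothetical label; replace with the labels of your own workload.
APP_LABELS = {"app": "demo-api"}


def pod_disruption_budget(name, labels, min_available):
    """Build a PodDisruptionBudget that keeps at least `min_available`
    matching pods running during voluntary disruptions such as node drains."""
    return {
        "apiVersion": "policy/v1",
        "kind": "PodDisruptionBudget",
        "metadata": {"name": name},
        "spec": {
            "minAvailable": min_available,
            "selector": {"matchLabels": labels},
        },
    }


def pod_spec_fragment(labels, grace_seconds):
    """Build the part of a pod spec that spreads replicas across nodes
    and gives each pod time to shut down cleanly."""
    return {
        # Time allowed between SIGTERM and SIGKILL (assumed value).
        "terminationGracePeriodSeconds": grace_seconds,
        "topologySpreadConstraints": [
            {
                "maxSkew": 1,
                # Spread per node, using the well-known hostname label.
                "topologyKey": "kubernetes.io/hostname",
                # Soft constraint: prefer spreading, but still schedule.
                "whenUnsatisfiable": "ScheduleAnyway",
                "labelSelector": {"matchLabels": labels},
            }
        ],
    }


if __name__ == "__main__":
    pdb = pod_disruption_budget("demo-api-pdb", APP_LABELS, 2)
    fragment = pod_spec_fragment(APP_LABELS, 120)
    print(json.dumps(pdb, indent=2))
    print(json.dumps(fragment, indent=2))
```

The fragment returned by `pod_spec_fragment` would be merged into `spec.template.spec` of a Deployment or StatefulSet. Whether a soft (`ScheduleAnyway`) or hard (`DoNotSchedule`) spread constraint is appropriate depends on how many nodes the cluster has relative to the replica count.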

![The (somewhat) accelerated journey

Day 0 & 1 - Now or never

• K8s incepted as a target platform for an ongoing high-profile project

• Severely limited infrastructure support capacities (human) at the time [couldn’t deploy on ‘classic’ VMs]

• Anticipated uptime requirements

Day 2 start – Apr 2019

• ingress

• logs (Fluentd, Elasticsearch, Kibana)

• 1 app namespace

• no native monitoring* (!DON’T!)

* trivial heartbeat monitoring with Zabbix

Later that (2nd) day..

• elasticsearch->opendistro->opensearch

• fluentd->fluent-bit

• vendor namespaces (SaaS model with ‘our’ infrastructure)

• calico (cluster reinstall)

• cert-manager

• prometheus/alert manager/grafana

• real backups (!)

• zookeeper

• kafka

Day 0 to Day 2 in <6 months](https://image.slidesharecdn.com/kubernetesday2zseenergia-211111091812/75/Kubernetes-day-2-zse-energia-3-2048.jpg)