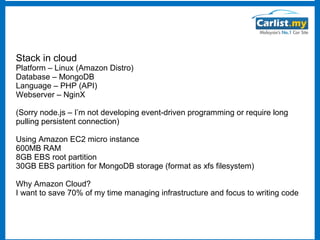

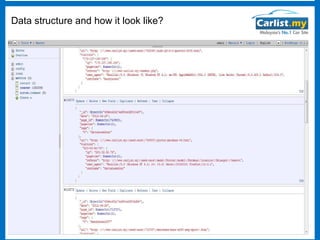

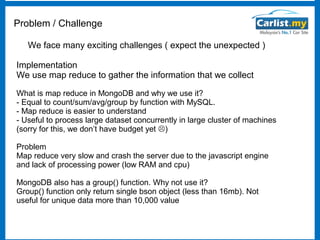

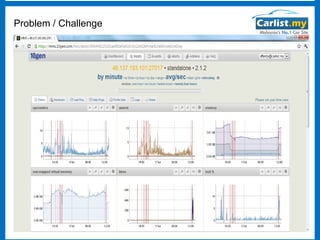

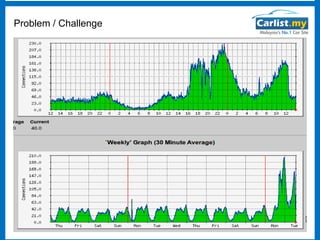

This document discusses using MongoDB for analytics and the lessons learned from implementing analytics on a car listings website. It describes the technical stack, including MongoDB, and the problems encountered: slow MapReduce queries and server crashes. It then covers the solutions tried, such as moving to aggregation queries and upgrading the hardware. A key lesson was that data modeling matters. Denormalizing the data, and simplifying queries by storing redundant data, improved performance and solved the problem more cost-effectively than adding resources.
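The slides do not show the site's actual schema, so the following is only a minimal sketch of the denormalization idea, under assumed names. The `listings` and `dealers` collections and the `dealer_id` and `dealer_city` fields are hypothetical. With a normalized schema, counting listings per city needs a `$lookup` join before the `$group`. Copying the dealer's city into each listing (redundant data) reduces the same report to a single `$group` stage. The pipelines are plain Python lists, so they can be inspected without a server. A local `Counter` stands in for what the `$group` stage would compute.

```python
from collections import Counter

# Hypothetical normalized data: each listing references its dealer by id.
dealers = [{"_id": 1, "city": "Leeds"}, {"_id": 2, "city": "York"}]
listings = [
    {"_id": 101, "dealer_id": 1, "make": "Ford"},
    {"_id": 102, "dealer_id": 2, "make": "Audi"},
    {"_id": 103, "dealer_id": 1, "make": "BMW"},
]

# Normalized schema: a join ($lookup + $unwind) is needed before grouping by city.
normalized_pipeline = [
    {"$lookup": {"from": "dealers", "localField": "dealer_id",
                 "foreignField": "_id", "as": "dealer"}},
    {"$unwind": "$dealer"},
    {"$group": {"_id": "$dealer.city", "count": {"$sum": 1}}},
]


def denormalize(listings, dealers):
    """Copy each dealer's city into its listings (redundant, but join-free)."""
    by_id = {d["_id"]: d for d in dealers}
    return [{**l, "dealer_city": by_id[l["dealer_id"]]["city"]} for l in listings]


# Denormalized schema: the same report is a single $group stage.
denormalized_pipeline = [
    {"$group": {"_id": "$dealer_city", "count": {"$sum": 1}}},
]


def count_by_city(docs):
    """Local stand-in for the $group stage, to check the expected counts."""
    return dict(Counter(d["dealer_city"] for d in docs))
```

Against a live server, a pipeline like this would be passed to PyMongo's `collection.aggregate(pipeline)`. The trade-off is that the redundant `dealer_city` field must be updated whenever a dealer's city changes.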