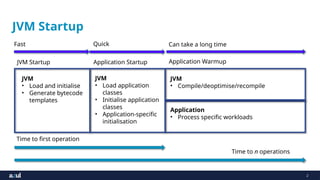

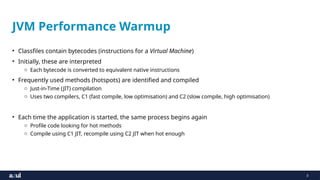

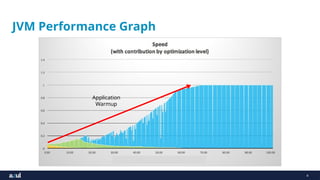

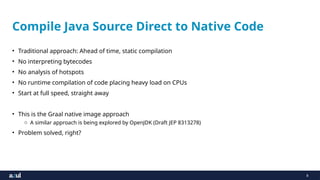

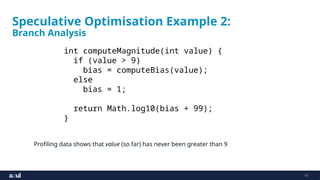

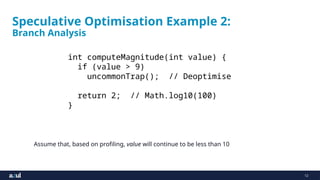

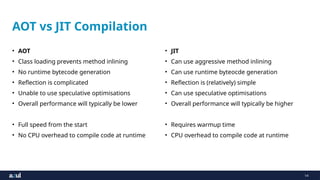

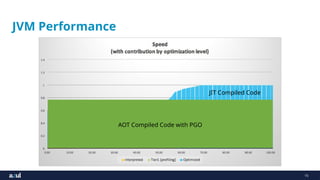

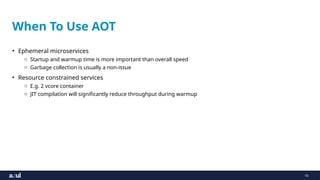

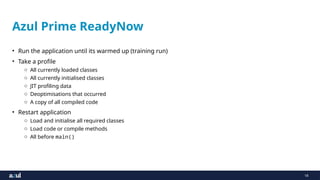

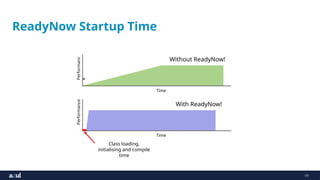

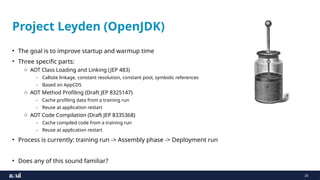

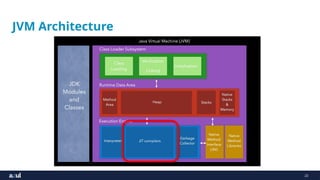

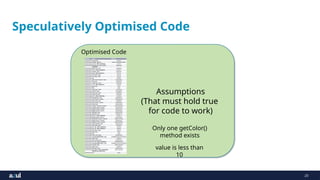

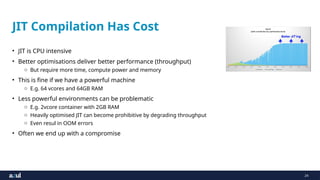

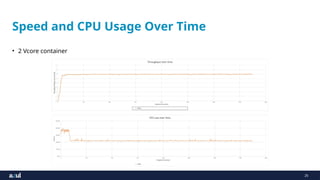

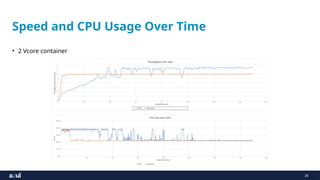

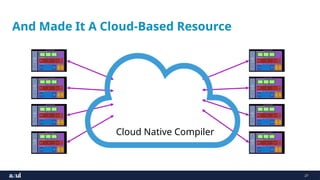

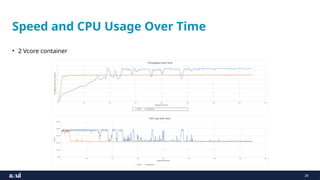

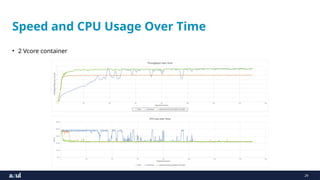

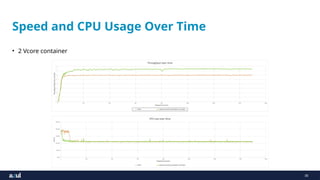

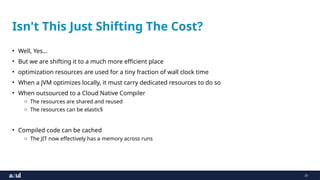

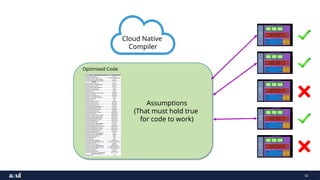

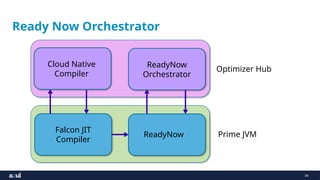

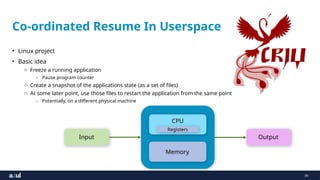

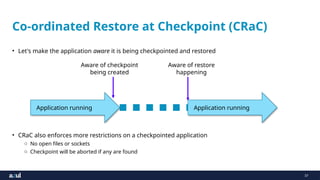

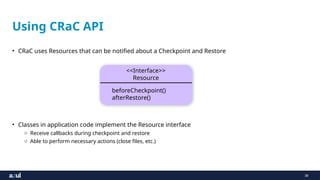

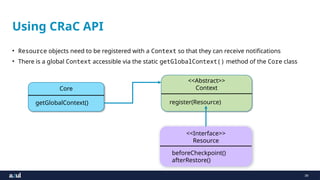

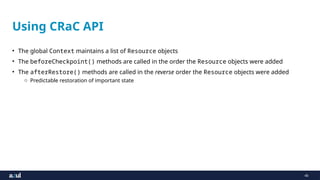

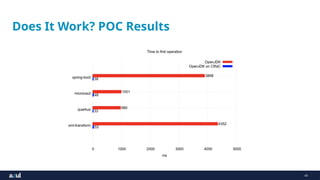

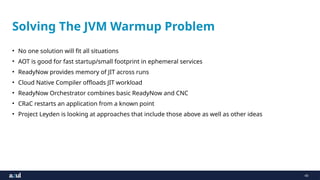

The document discusses solutions to the Java Virtual Machine (JVM) warmup problem: the period after startup during which an application runs below peak speed while the JVM profiles and compiles its hot code. It focuses on reaching peak performance sooner through Ahead-of-Time (AOT) compilation and Just-In-Time (JIT) compilation strategies. It highlights the importance of speculative optimizations, cloud-native compiler approaches, and the ReadyNow! technology for reducing warmup time and CPU overhead during application execution. It also introduces Project Leyden and CRaC (Coordinated Restore at Checkpoint) for managing application state and improving startup and warmup efficiency.
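As a rough illustration of the warmup effect described above, the following minimal sketch times the same workload in consecutive batches. It is not taken from the slides: the class `WarmupDemo`, the methods `work` and `timeBatches`, and the batch sizes are hypothetical names and values chosen for this example. On a typical JIT-enabled JVM the first batches tend to be slower than later ones, though the exact numbers depend on the JVM, its flags, and the hardware.

```java
import java.util.ArrayList;
import java.util.List;

public class WarmupDemo {

    // Hypothetical workload: a small arithmetic loop that a JIT compiler can optimize
    // once it has been called often enough to be considered hot.
    static long work(int n) {
        long sum = 0;
        for (int i = 0; i < n; i++) {
            sum += ((long) i * i) % 7;
        }
        return sum;
    }

    // Runs the workload in batches and records the wall-clock time of each batch
    // in nanoseconds, so early and late batches can be compared.
    static List<Long> timeBatches(int batches, int callsPerBatch, int n) {
        List<Long> timings = new ArrayList<>();
        long sink = 0; // accumulates results so the calls are not trivially dead code
        for (int b = 0; b < batches; b++) {
            long start = System.nanoTime();
            for (int c = 0; c < callsPerBatch; c++) {
                sink += work(n);
            }
            timings.add(System.nanoTime() - start);
        }
        if (sink == Long.MIN_VALUE) {
            System.out.println(sink); // practically never taken; keeps sink observable
        }
        return timings;
    }

    public static void main(String[] args) {
        // Batch count and sizes are arbitrary assumptions for the demonstration.
        List<Long> timings = timeBatches(10, 2_000, 1_000);
        for (int i = 0; i < timings.size(); i++) {
            System.out.printf("batch %d: %d us%n", i, timings.get(i) / 1_000);
        }
    }
}
```

Running it with `java WarmupDemo.java` prints one timing per batch. On HotSpot, adding `-XX:+PrintCompilation` shows when `work` gets compiled, and `-Xint` disables JIT compilation entirely for comparison. A hand-rolled timer like this only makes the effect visible; a benchmarking harness is the better tool for real measurements.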