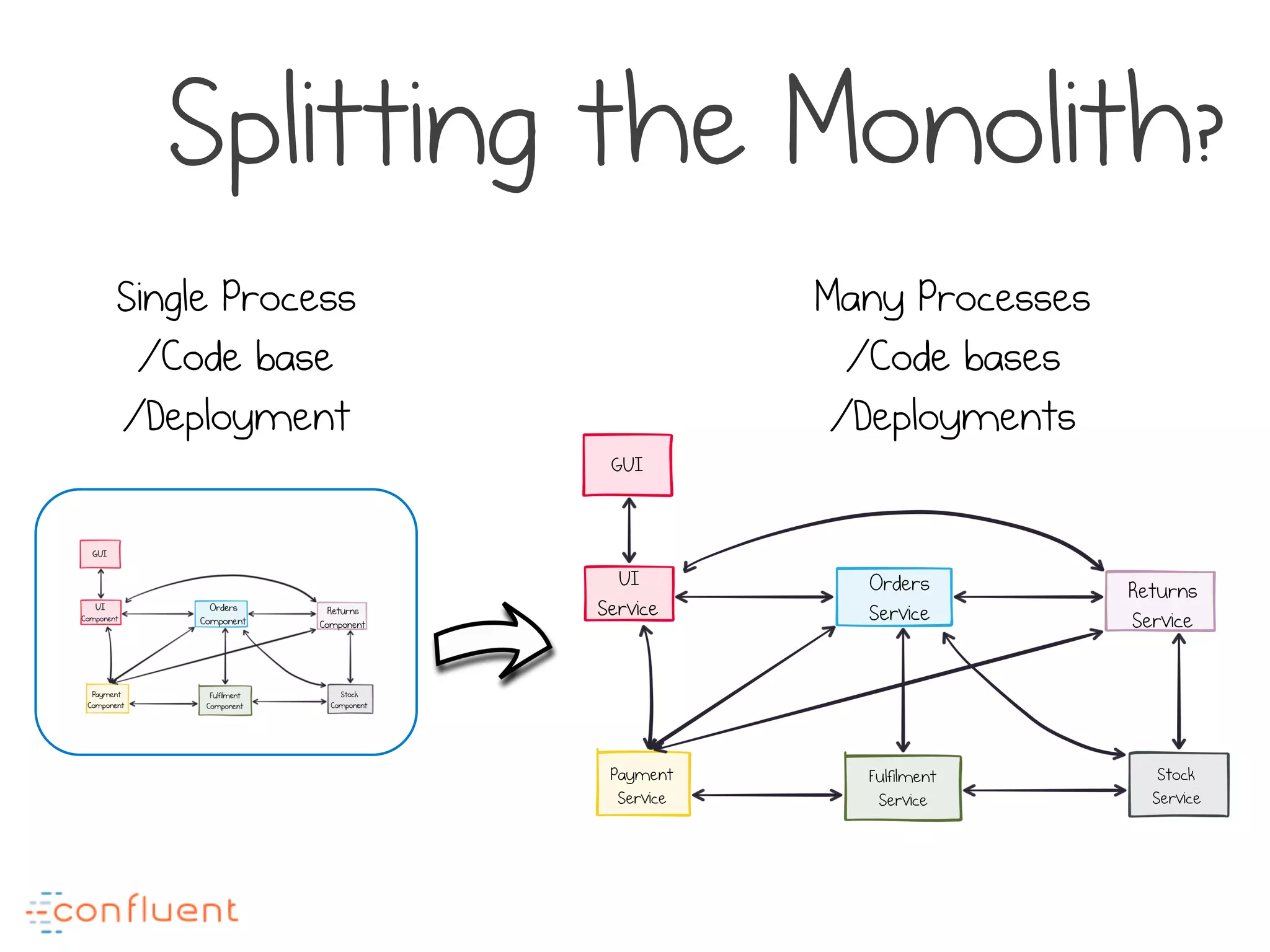

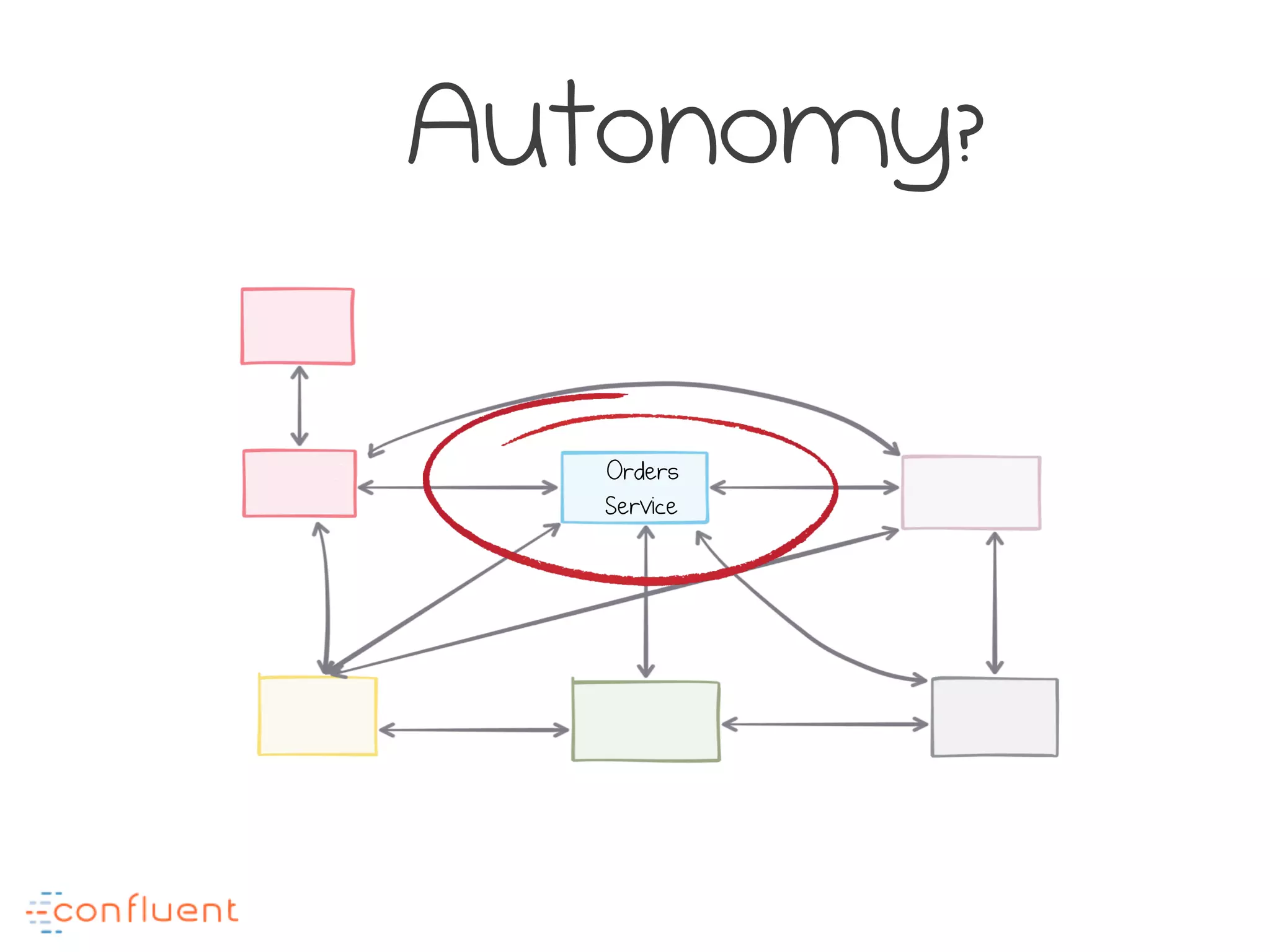

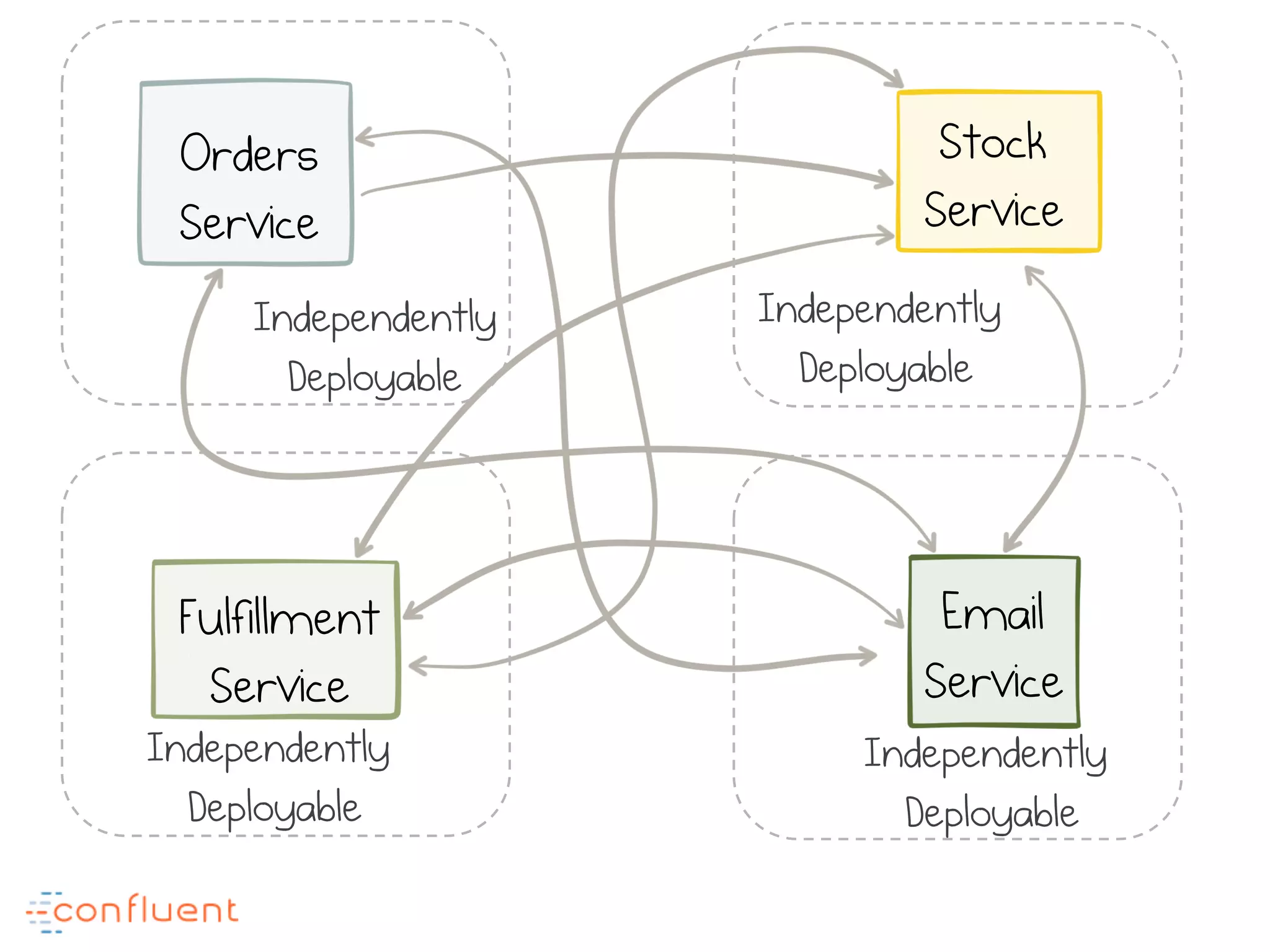

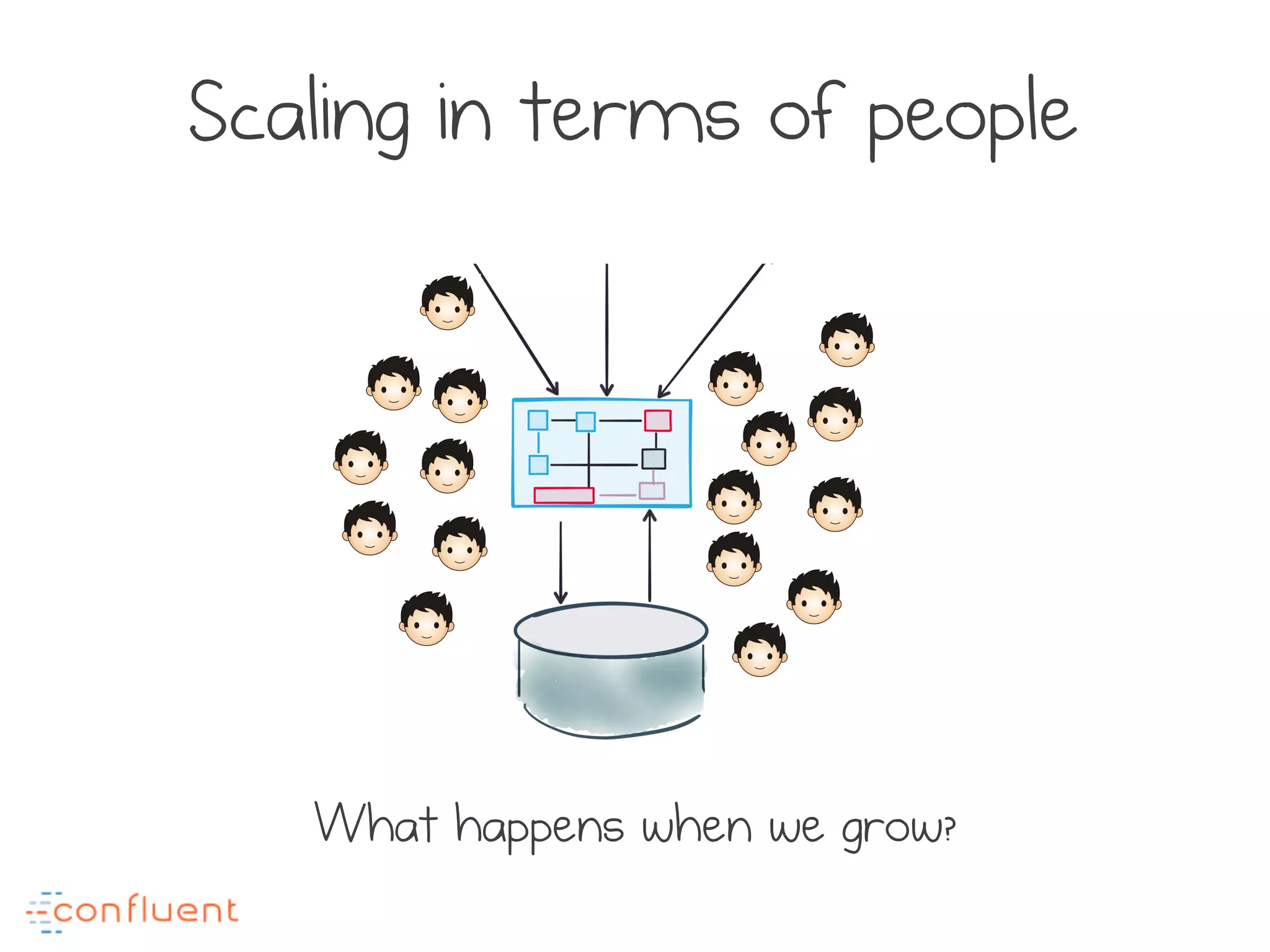

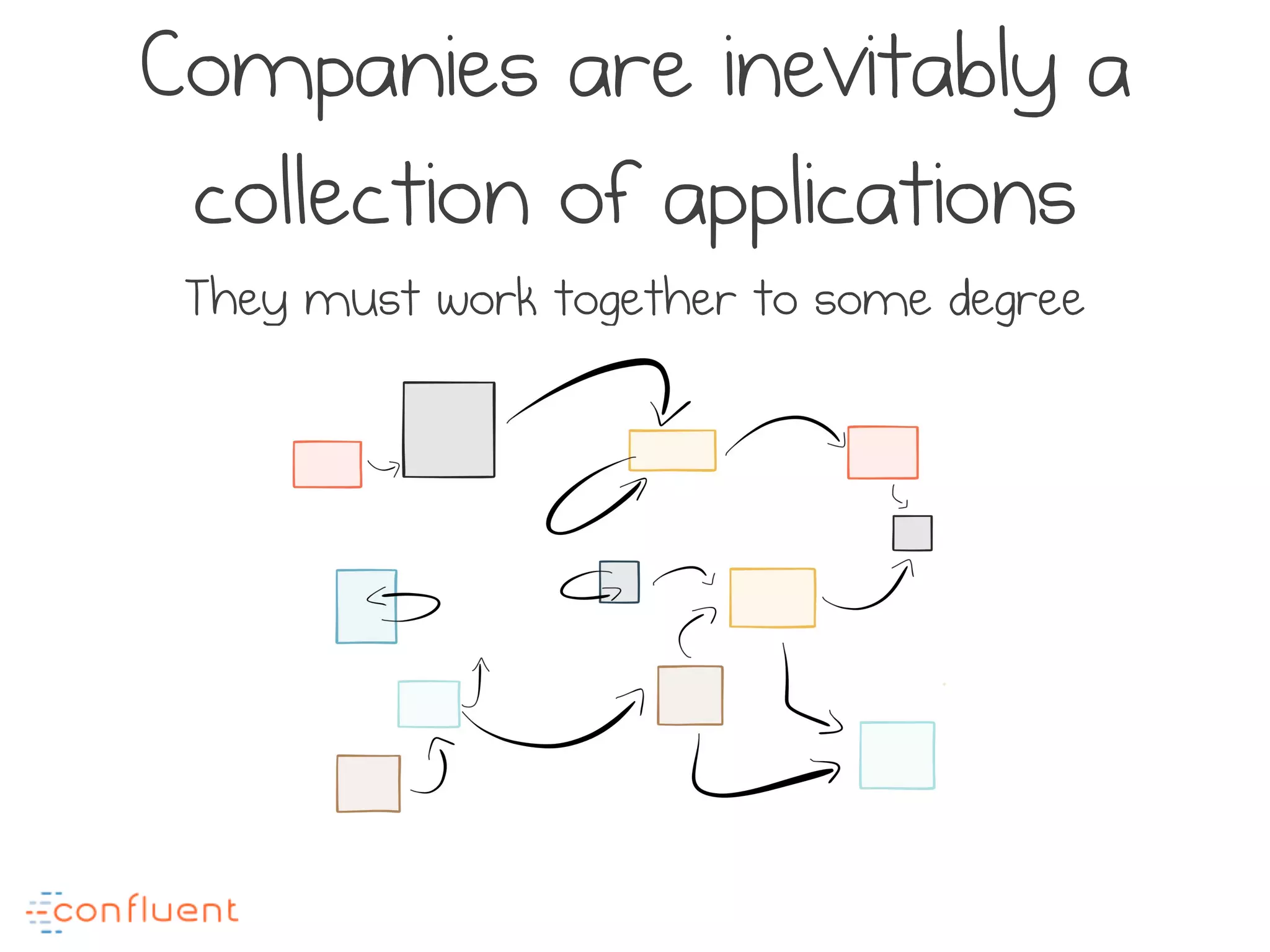

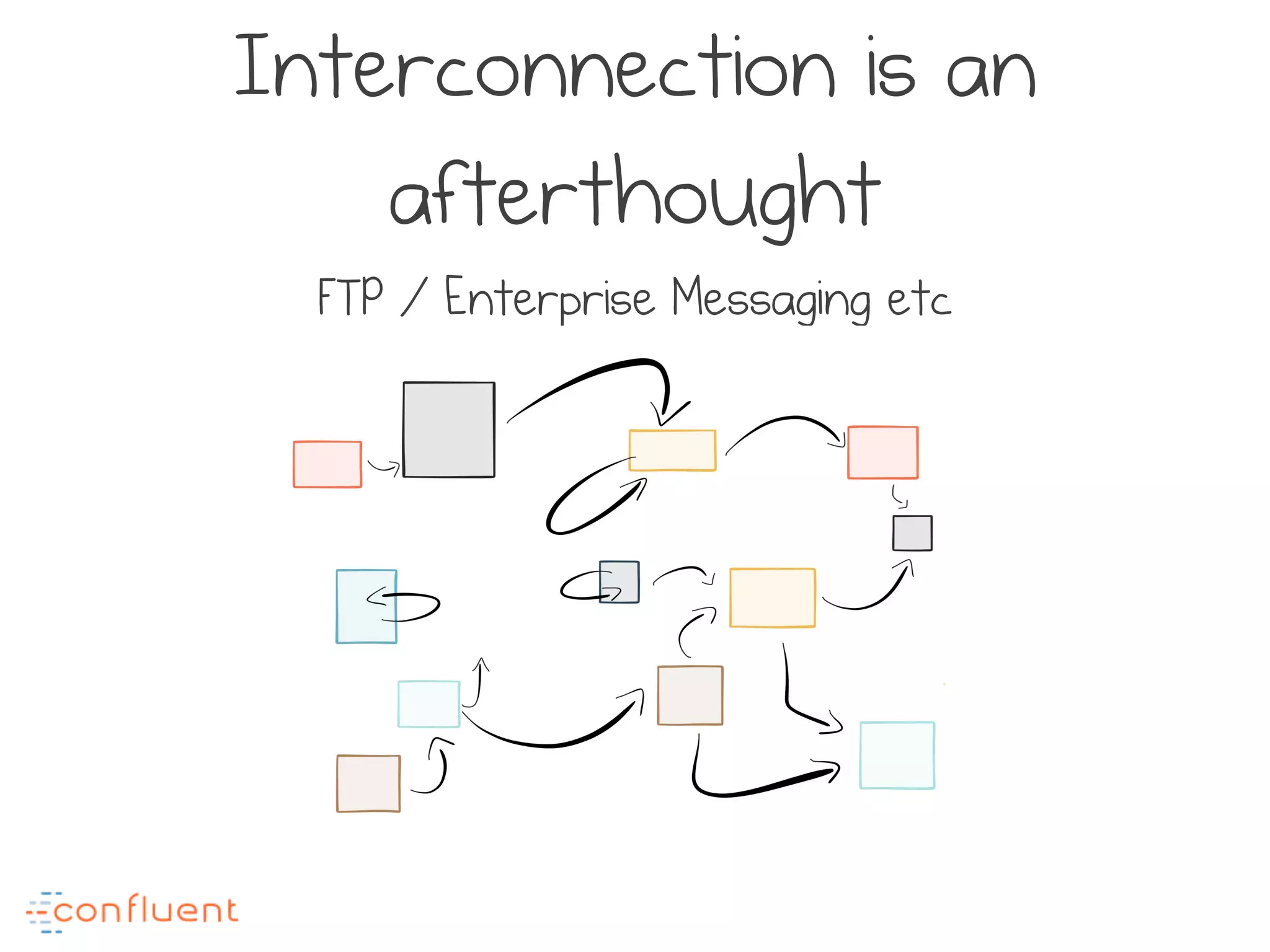

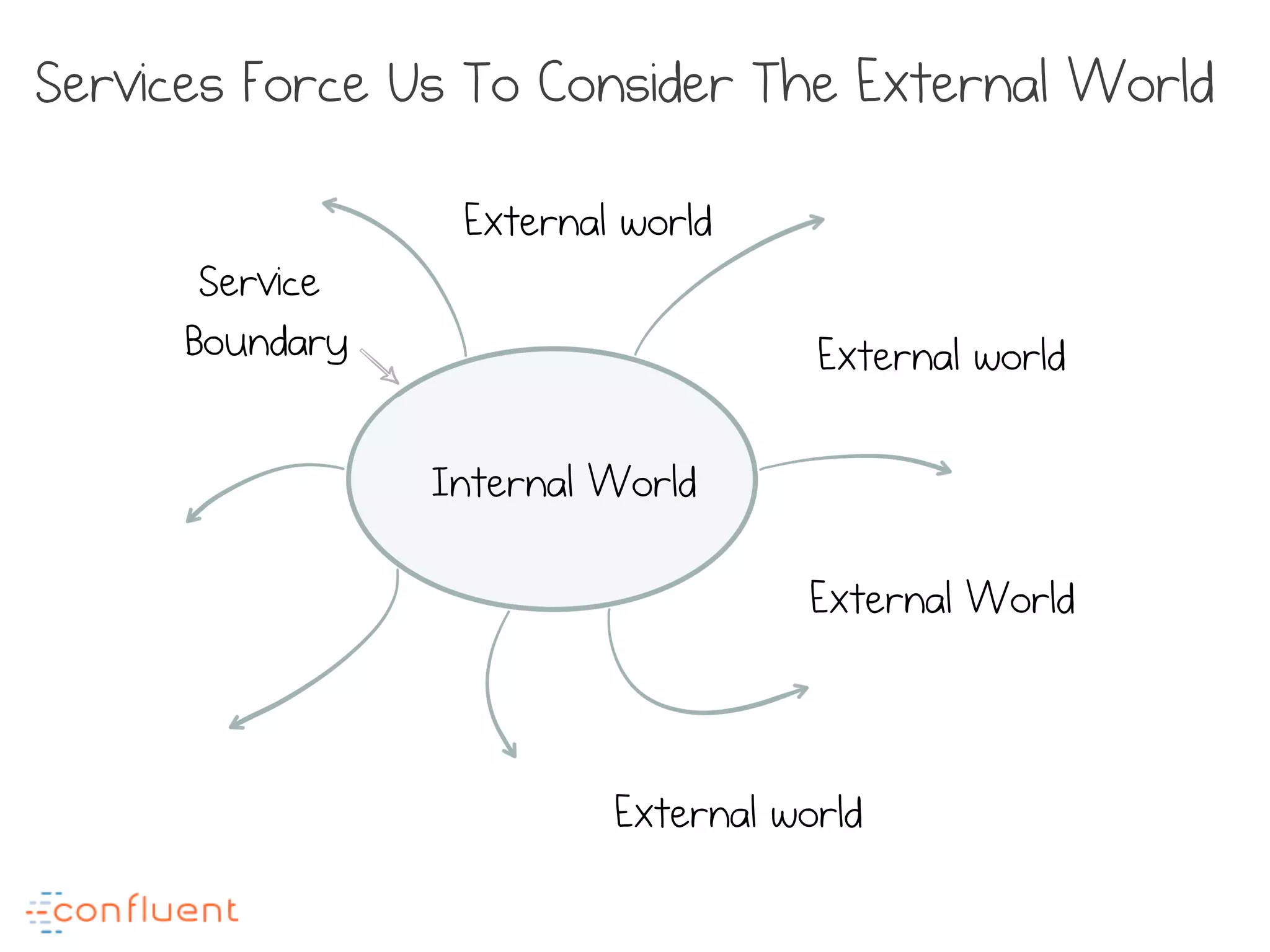

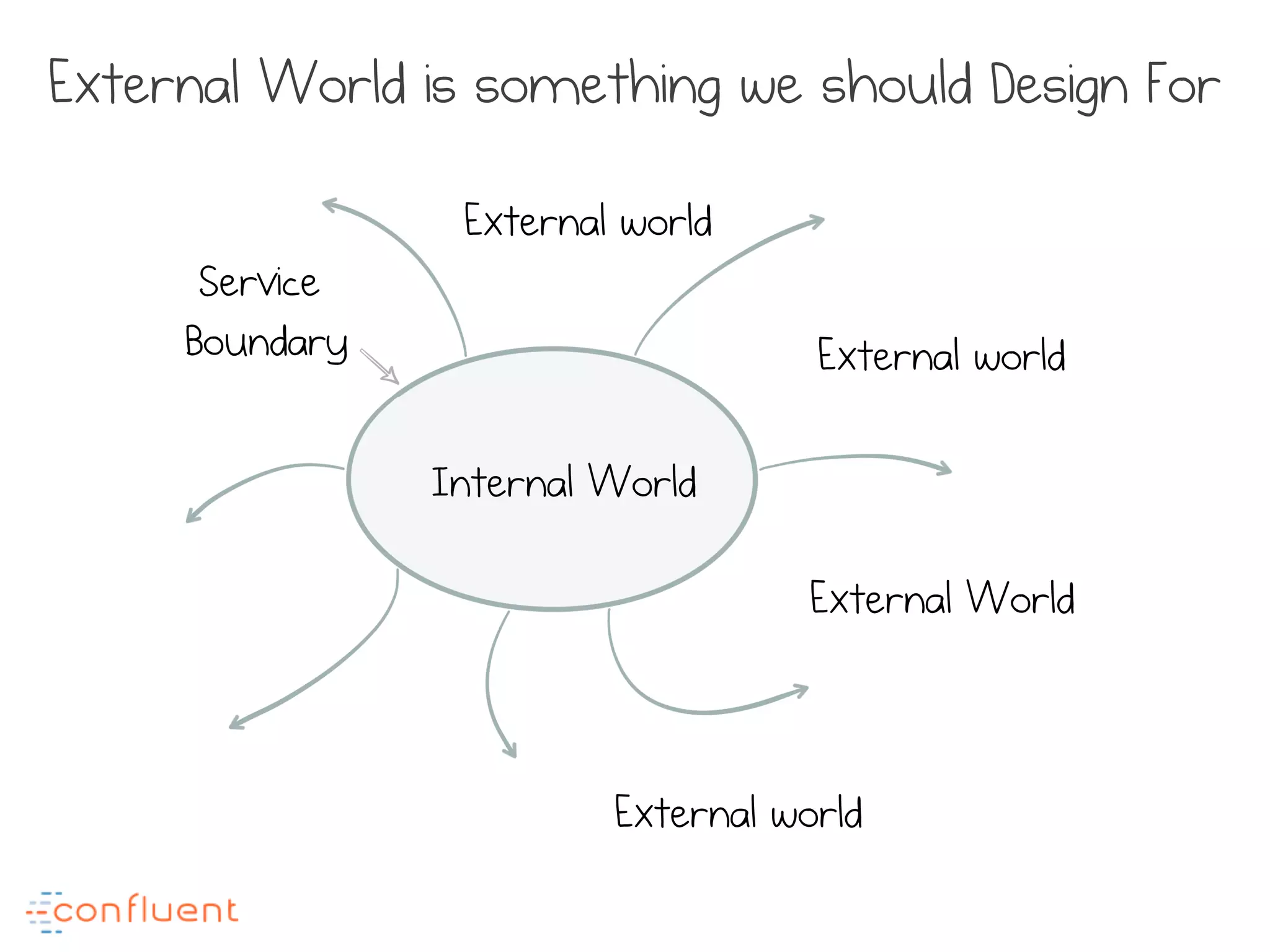

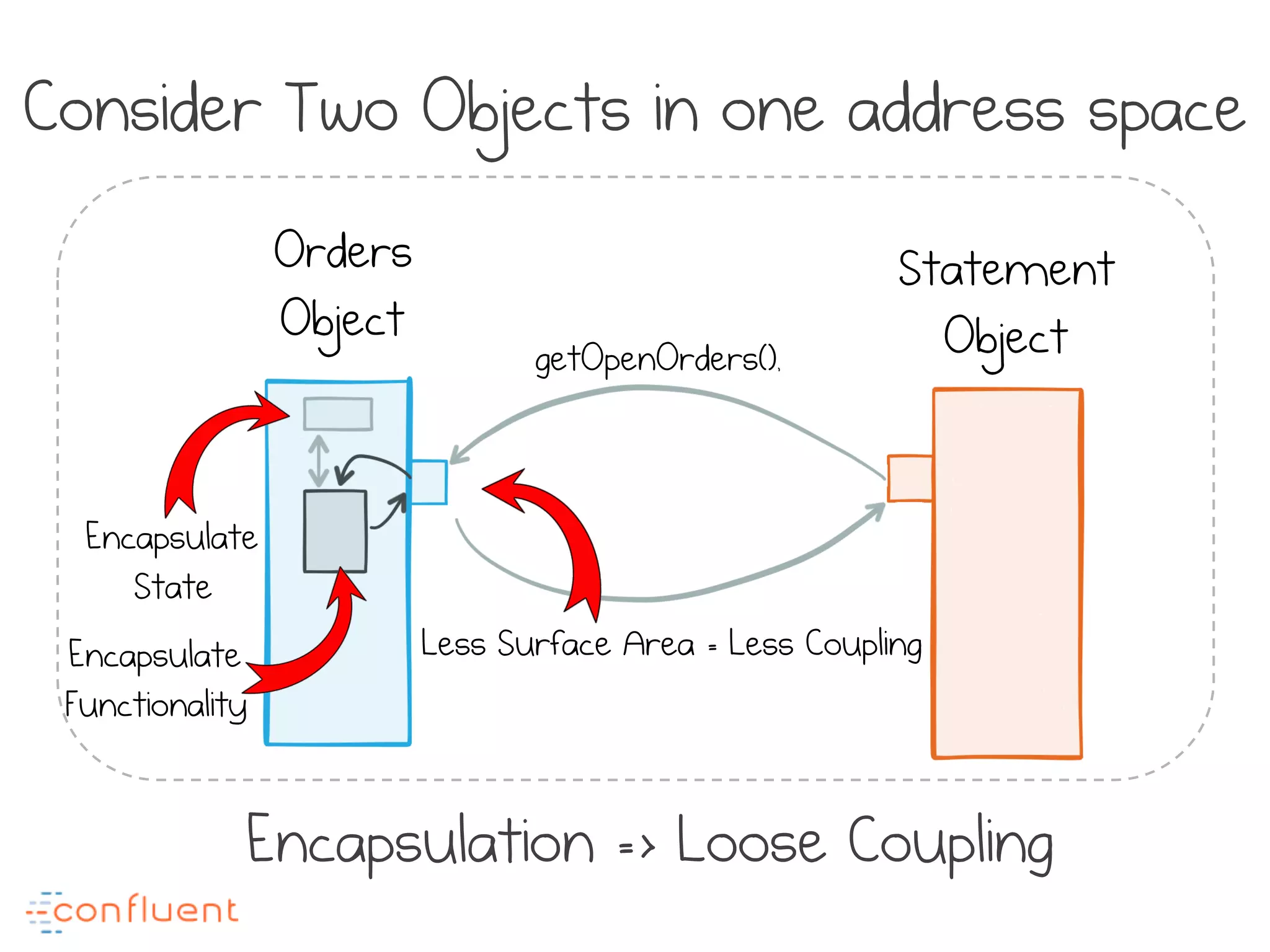

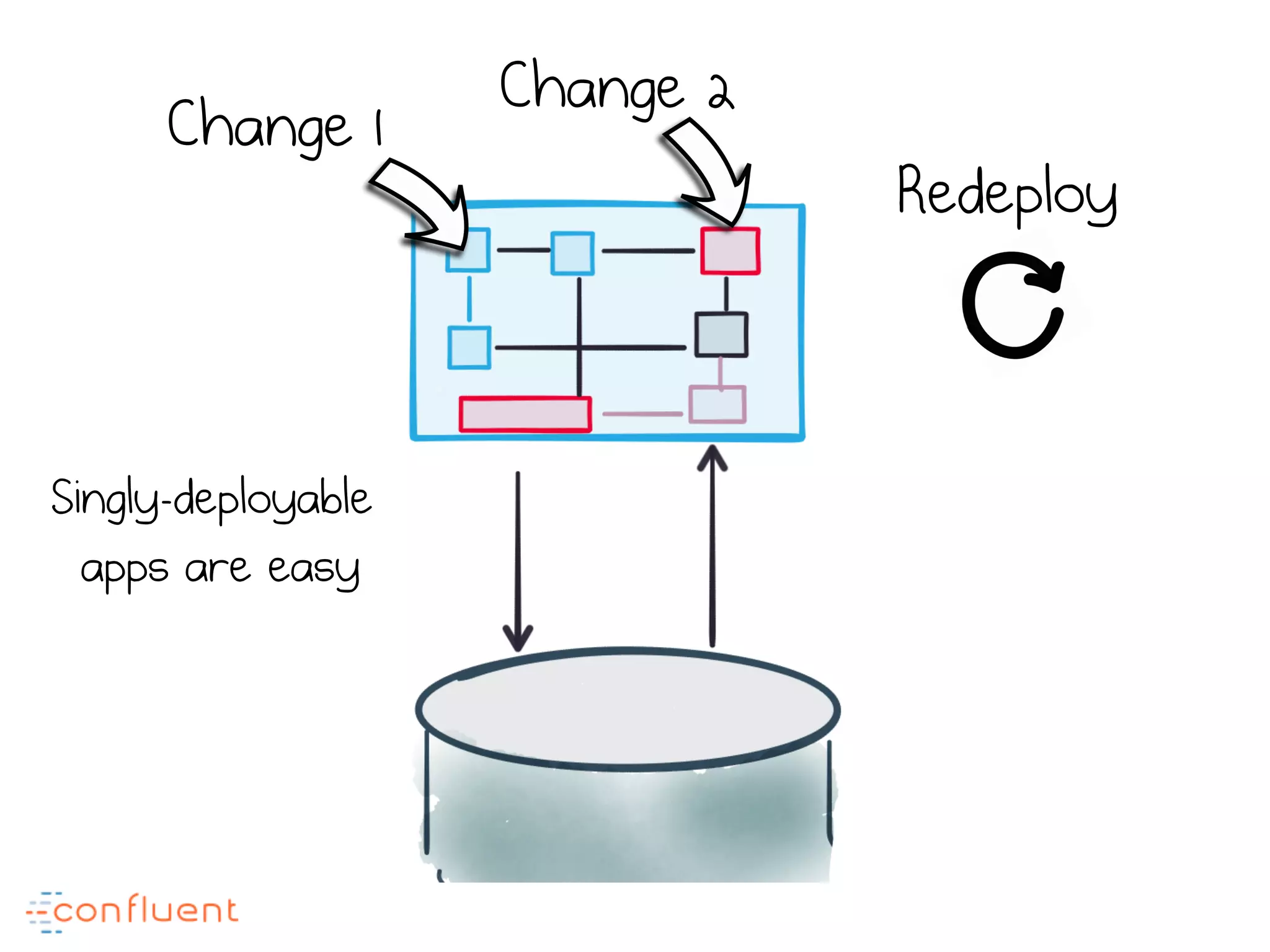

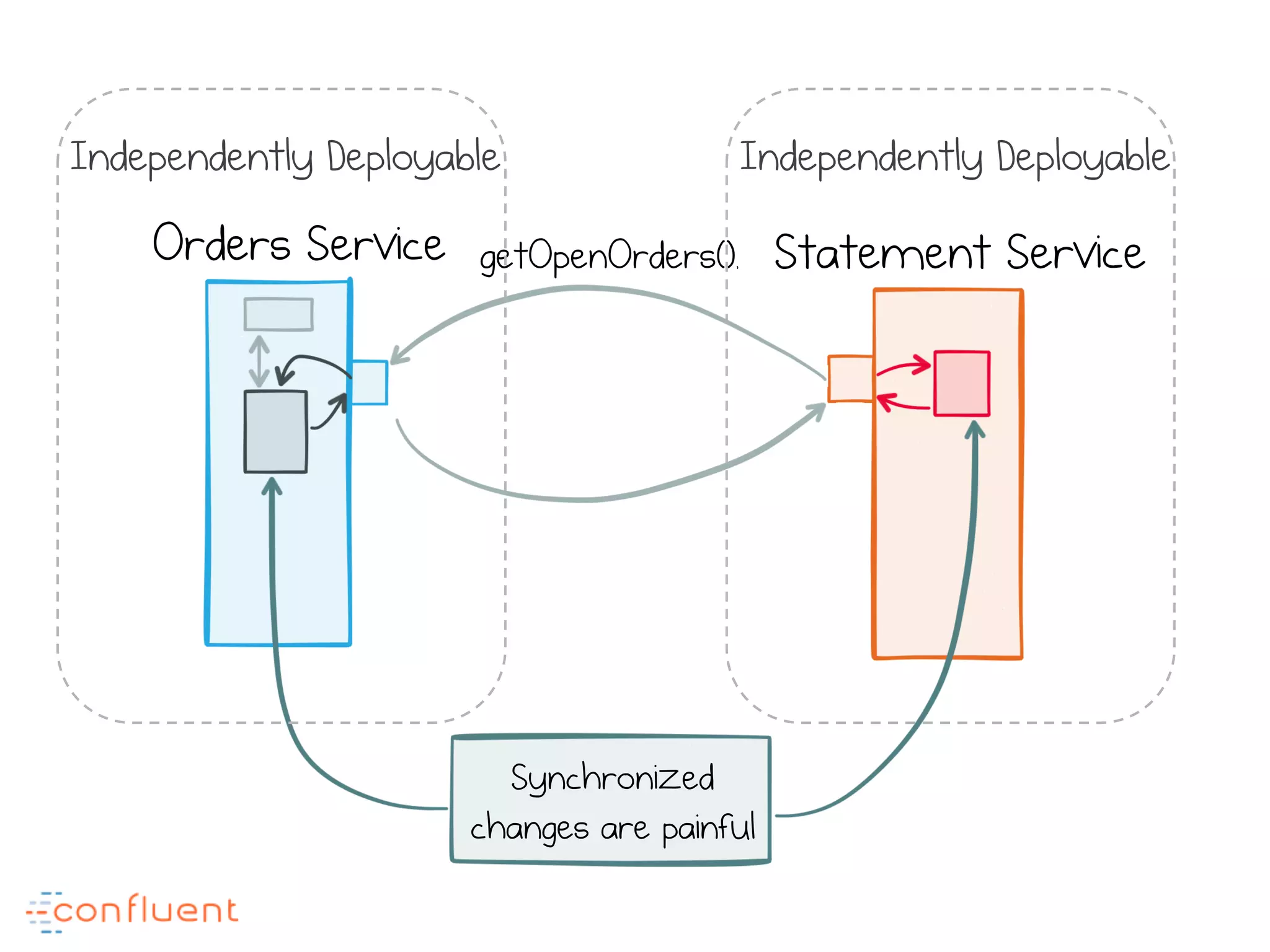

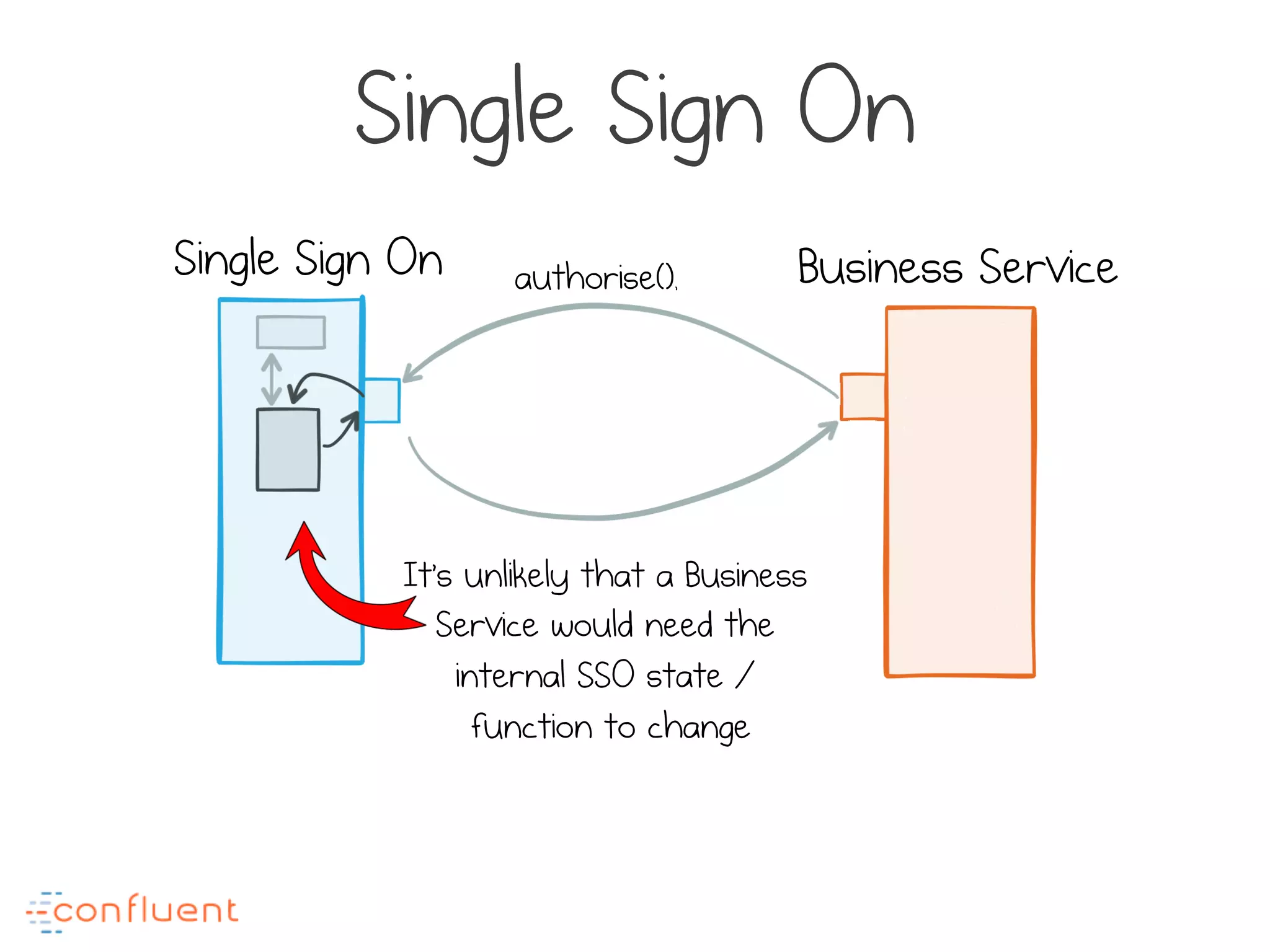

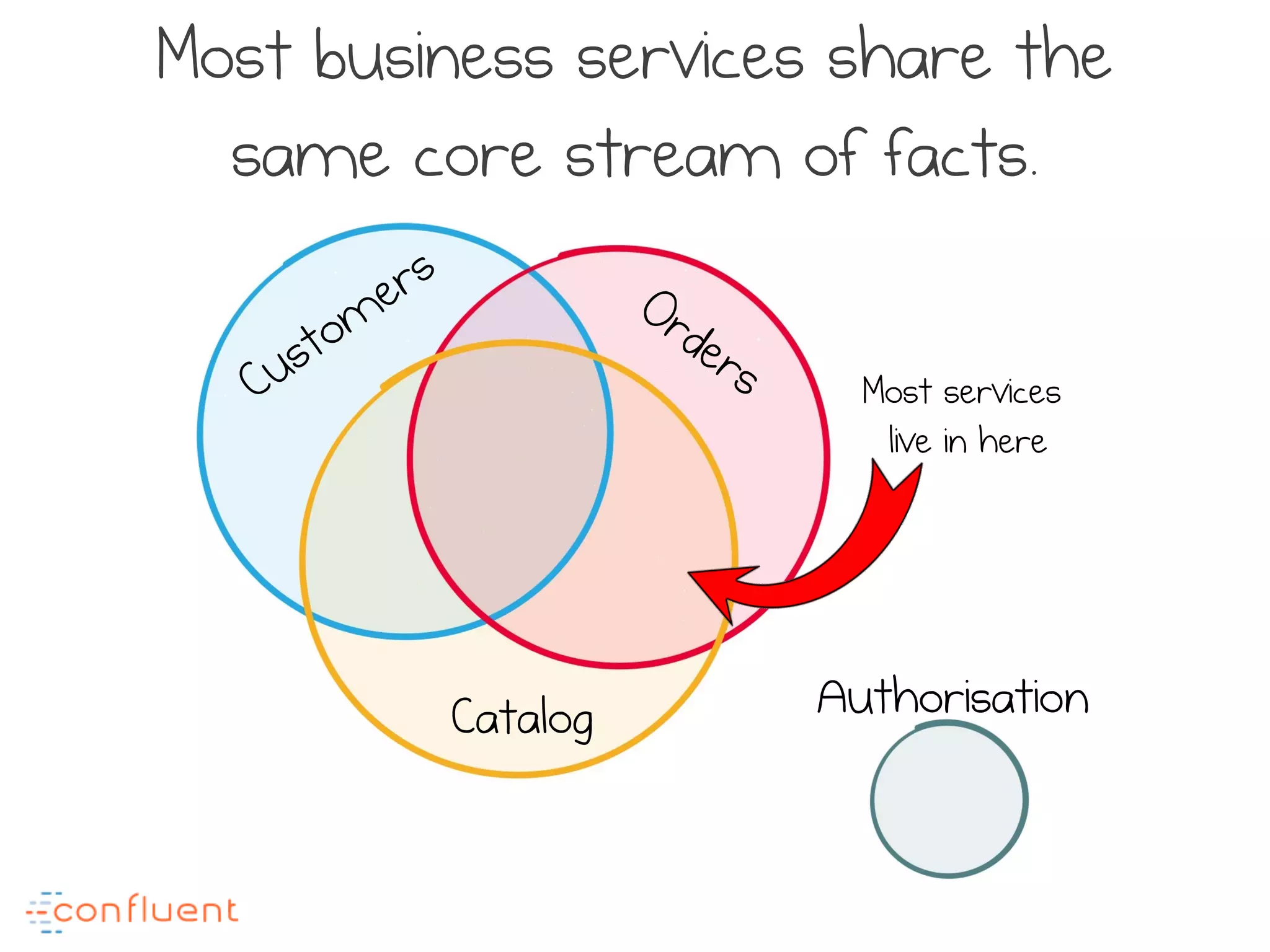

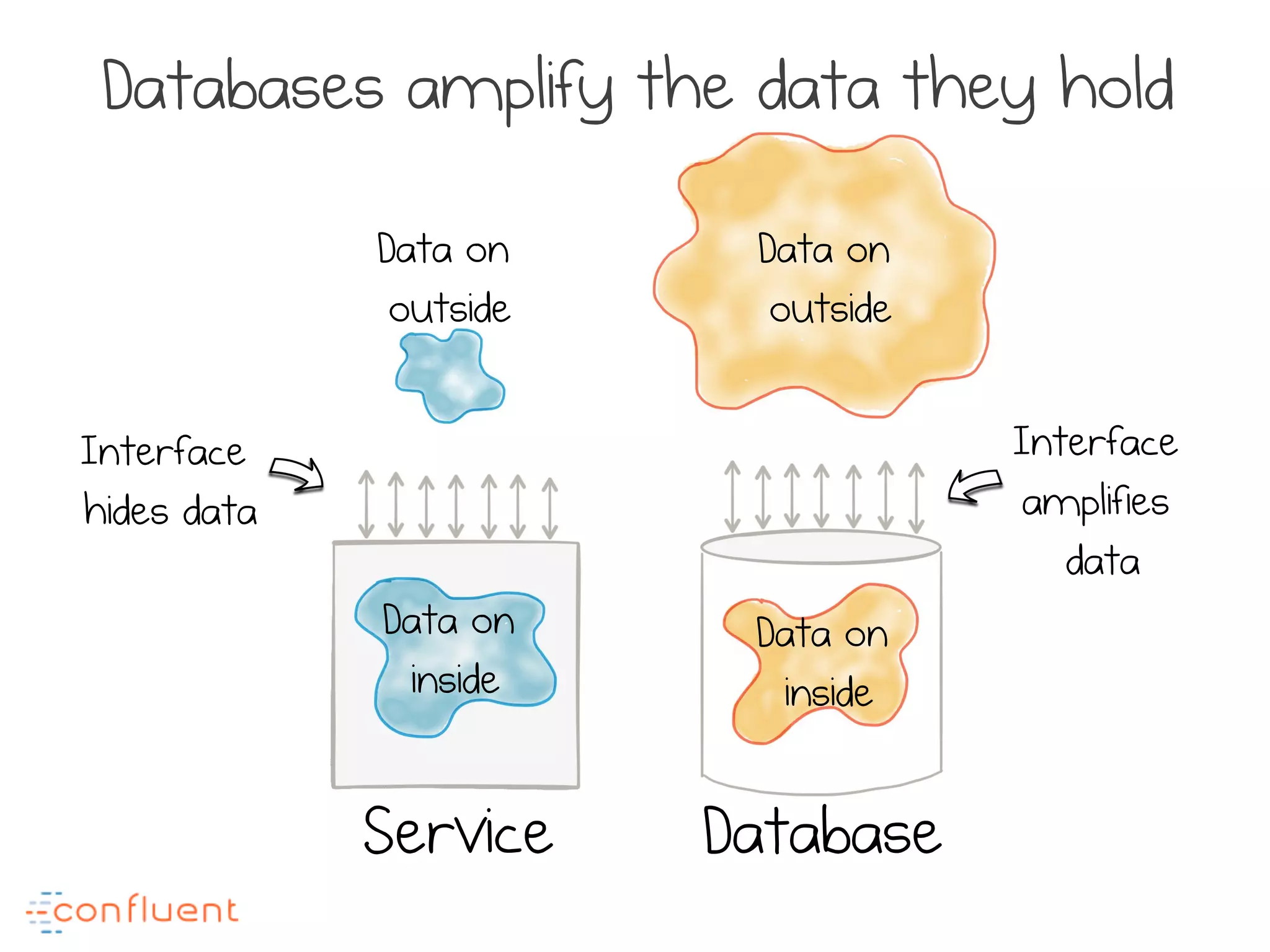

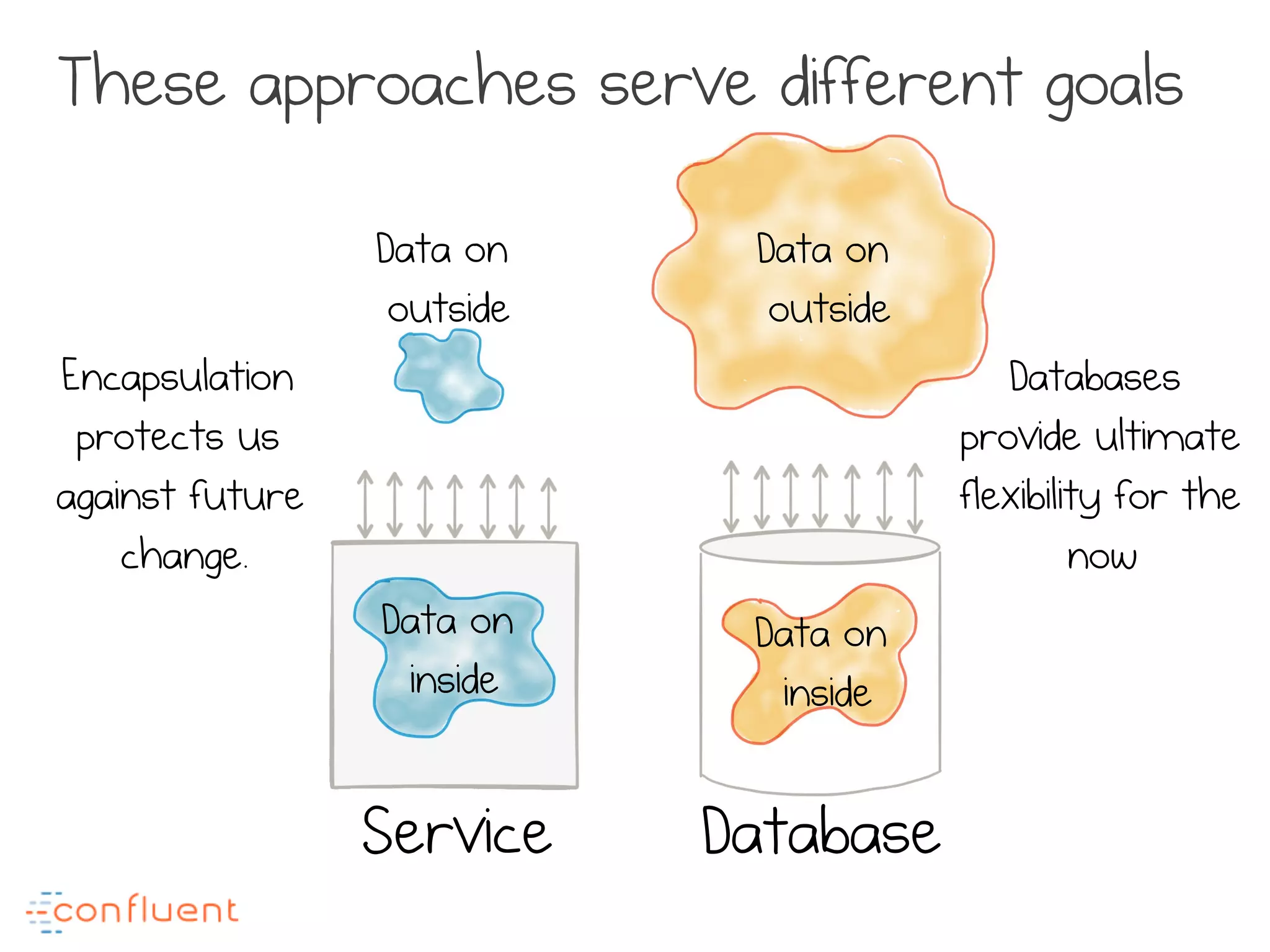

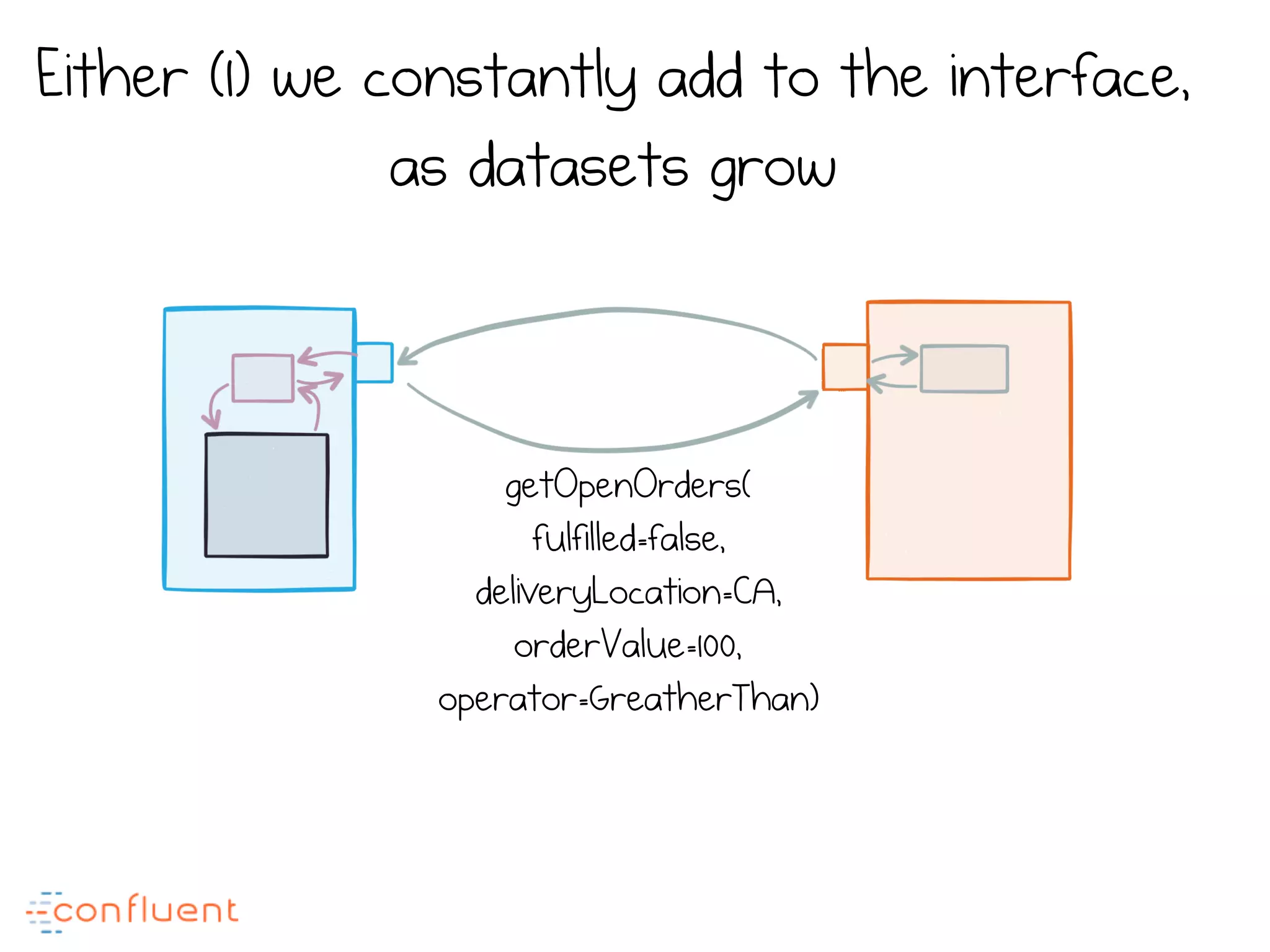

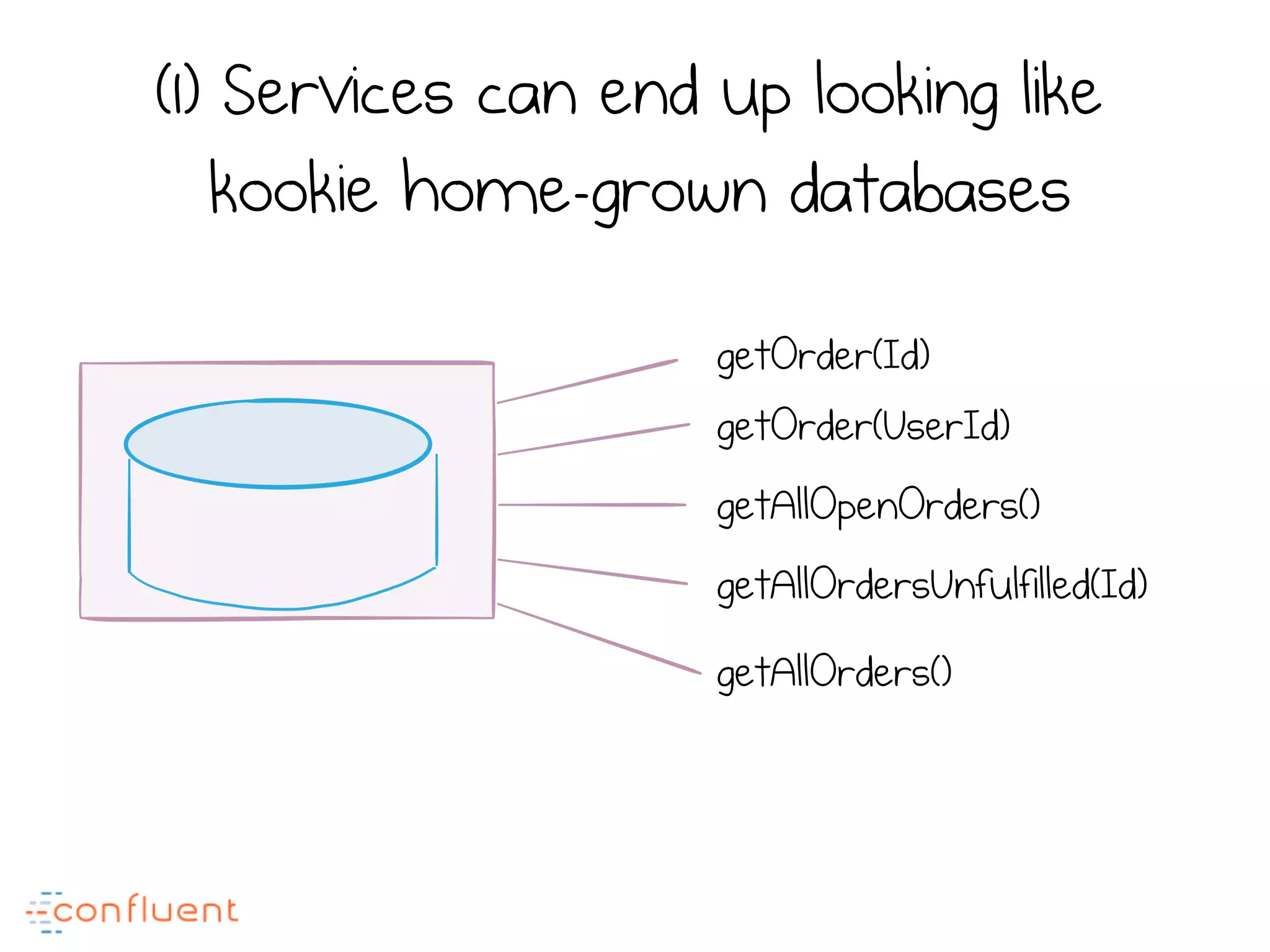

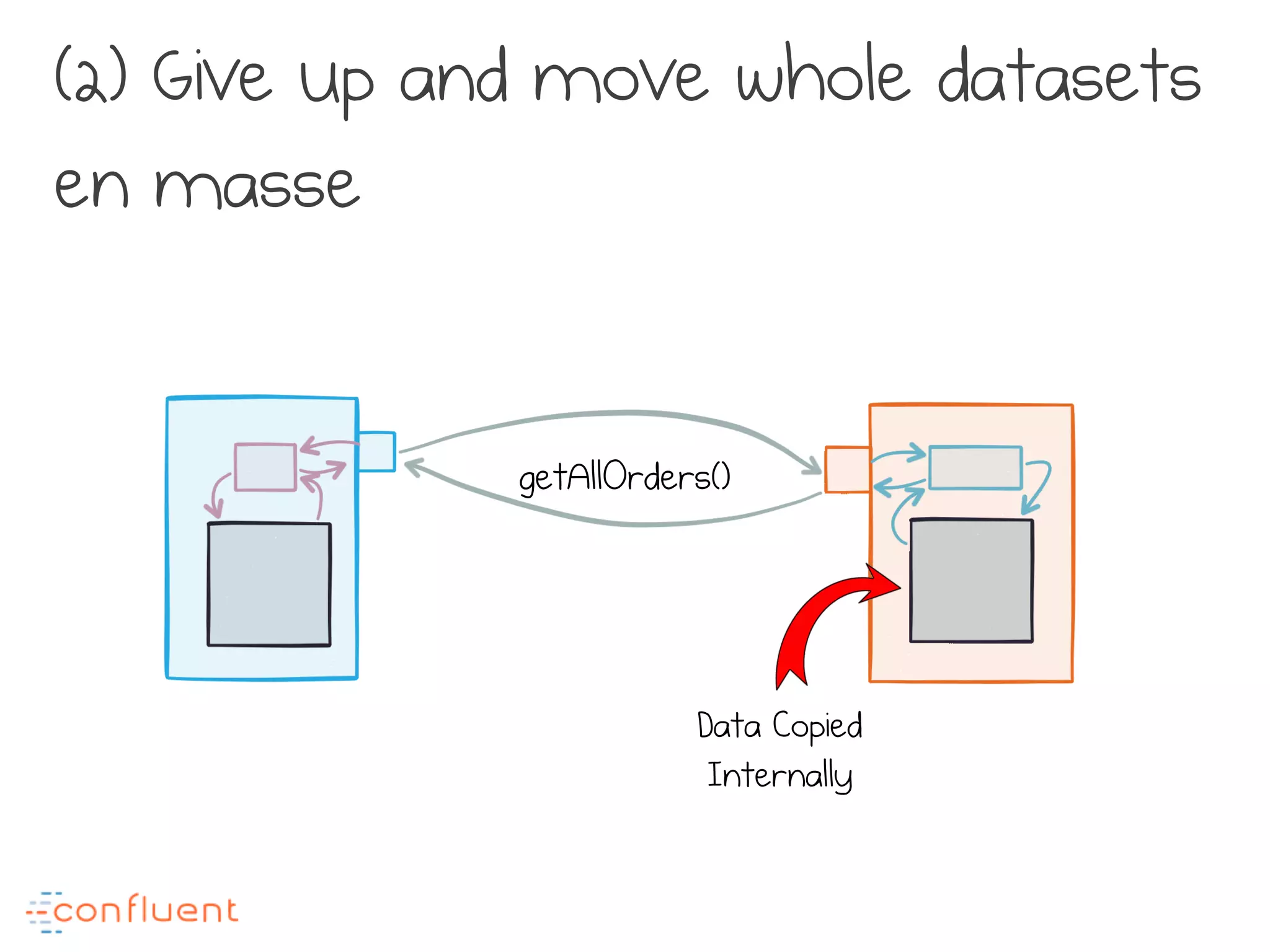

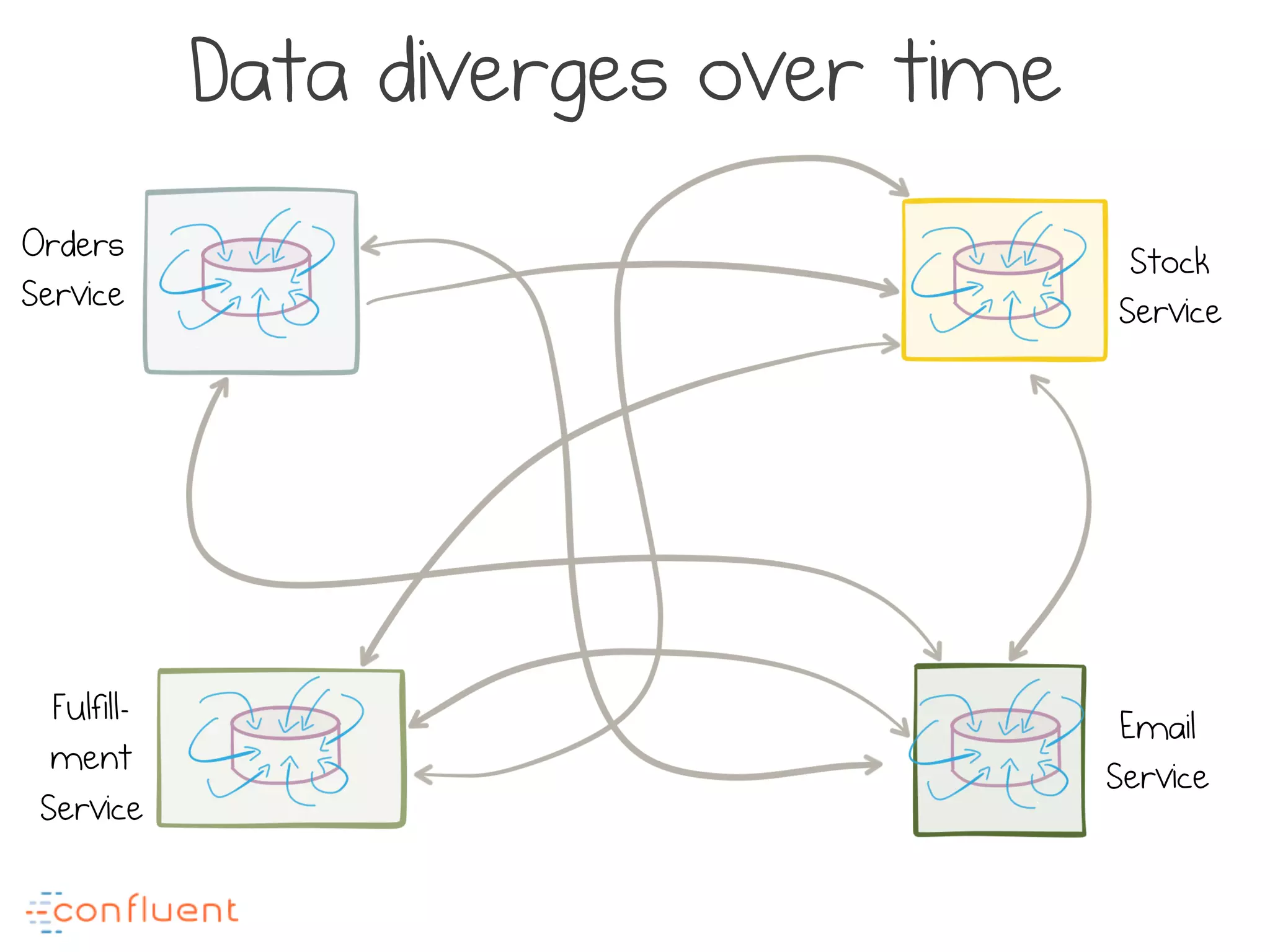

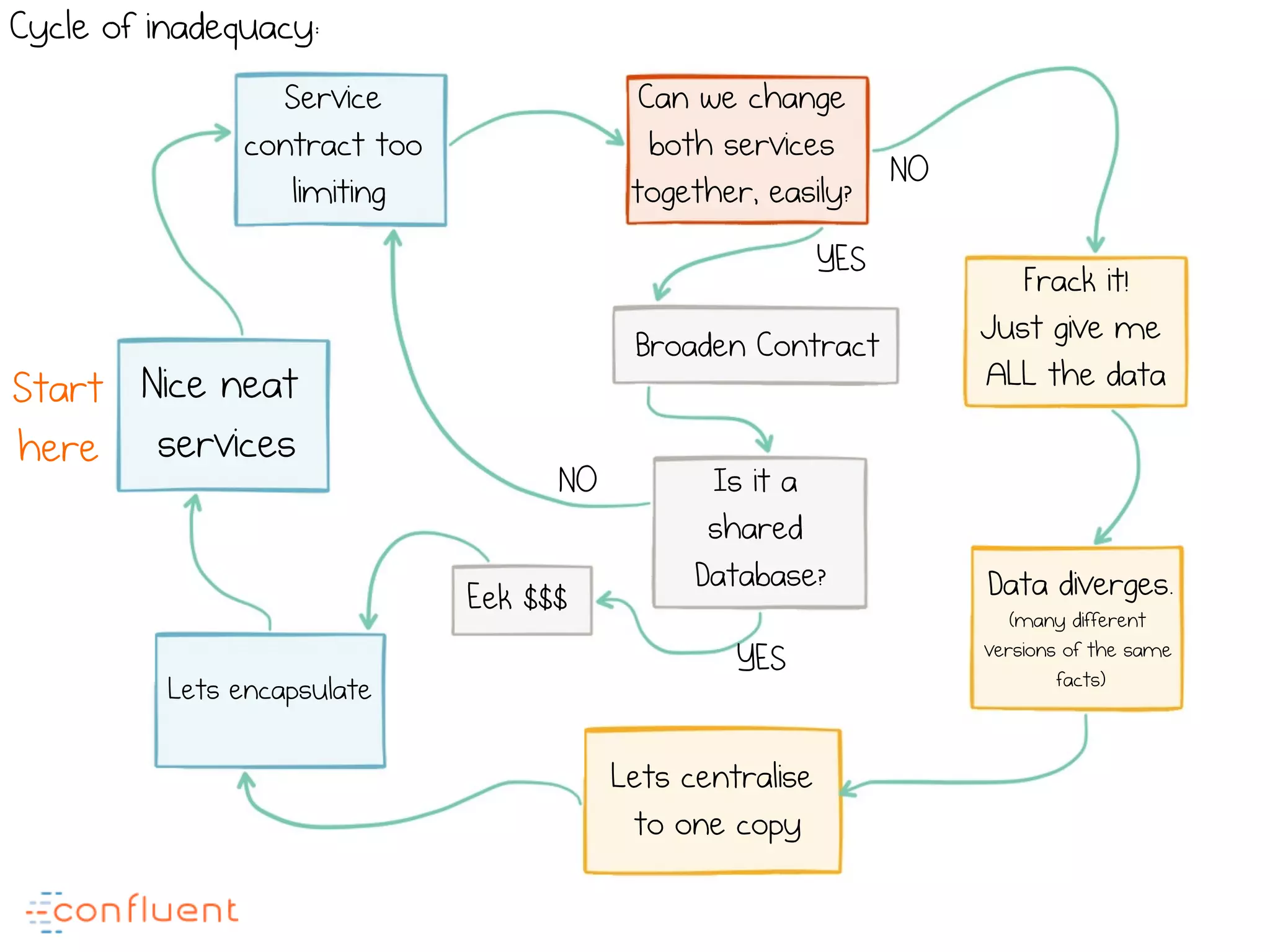

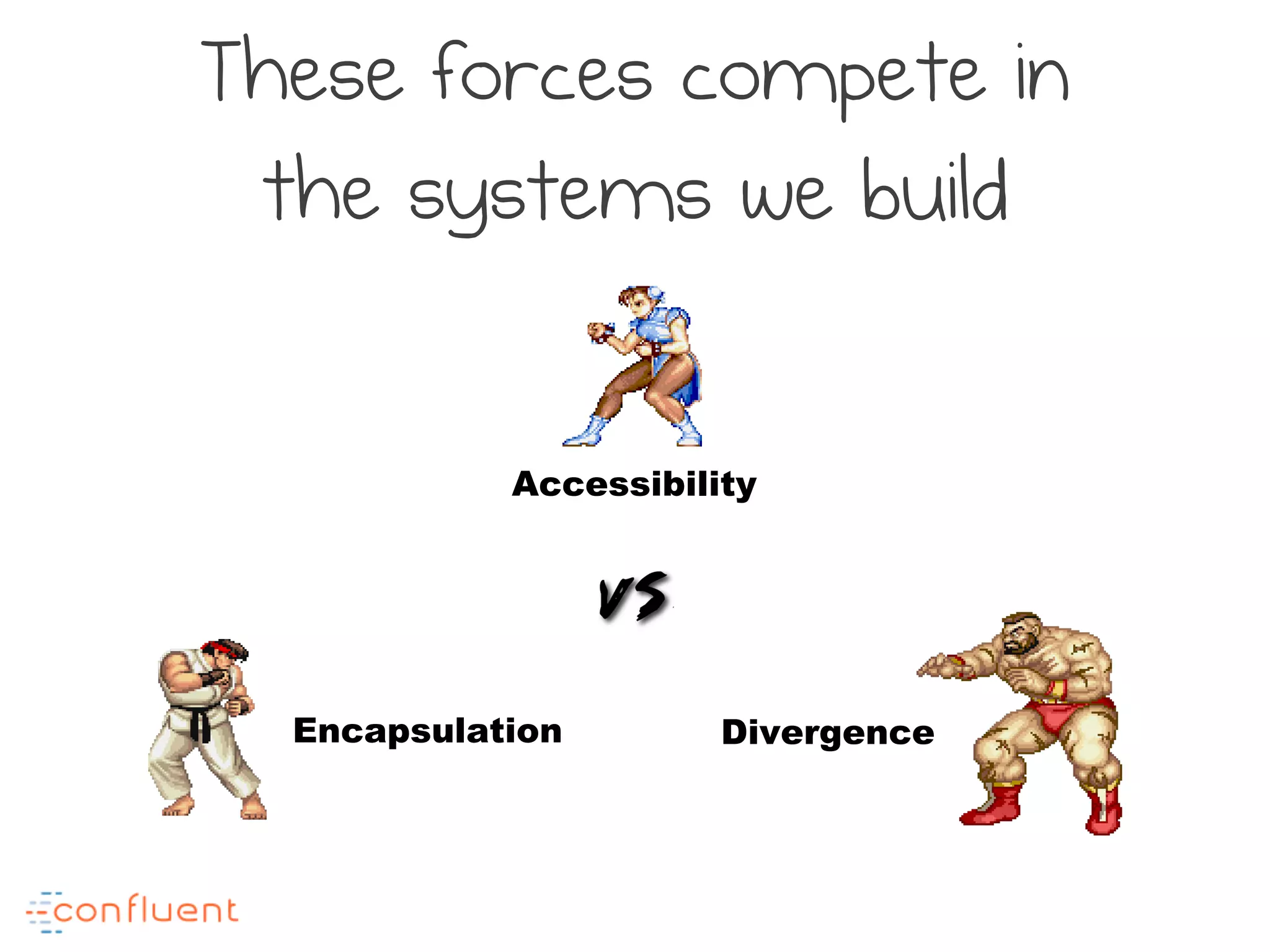

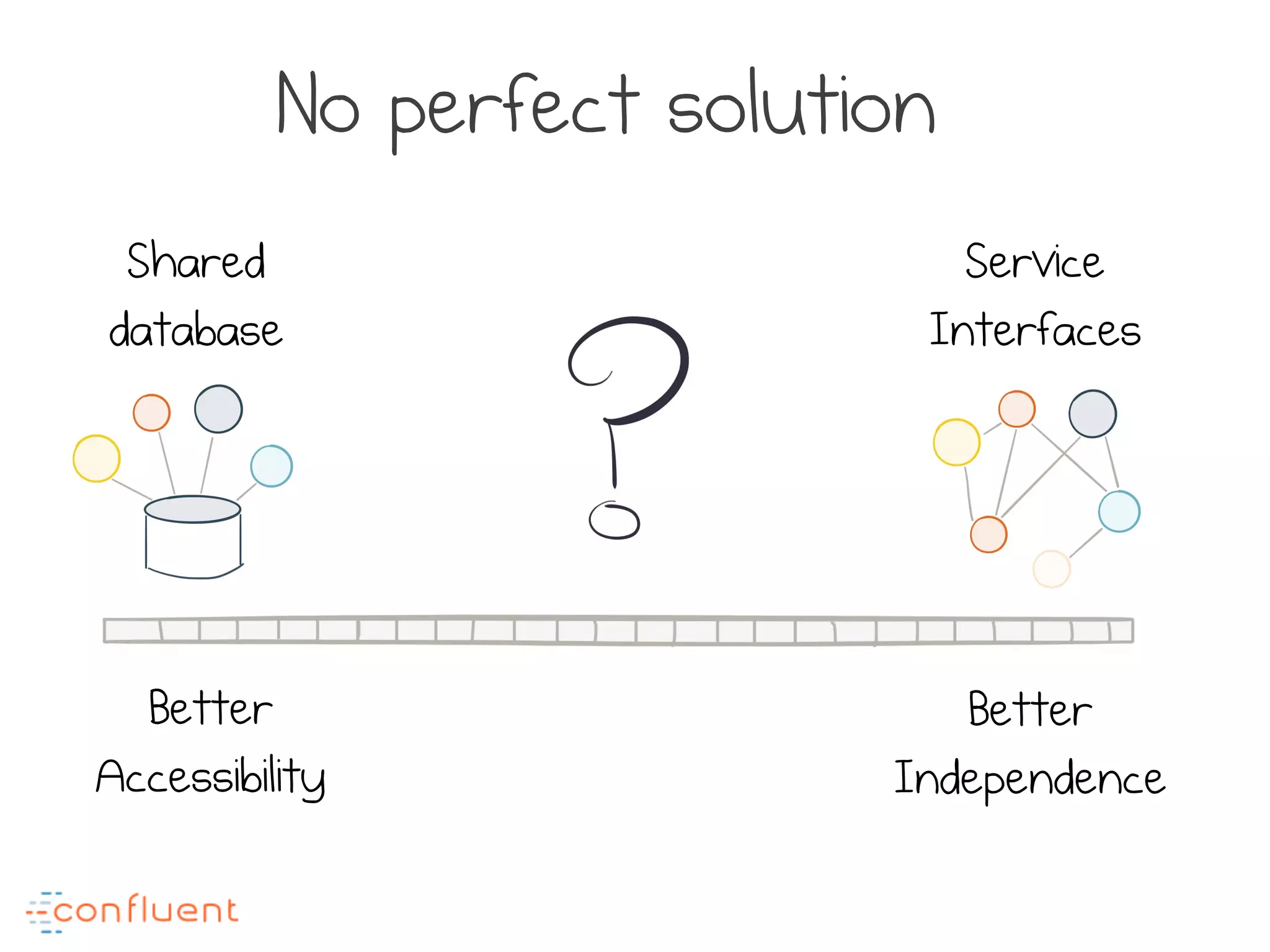

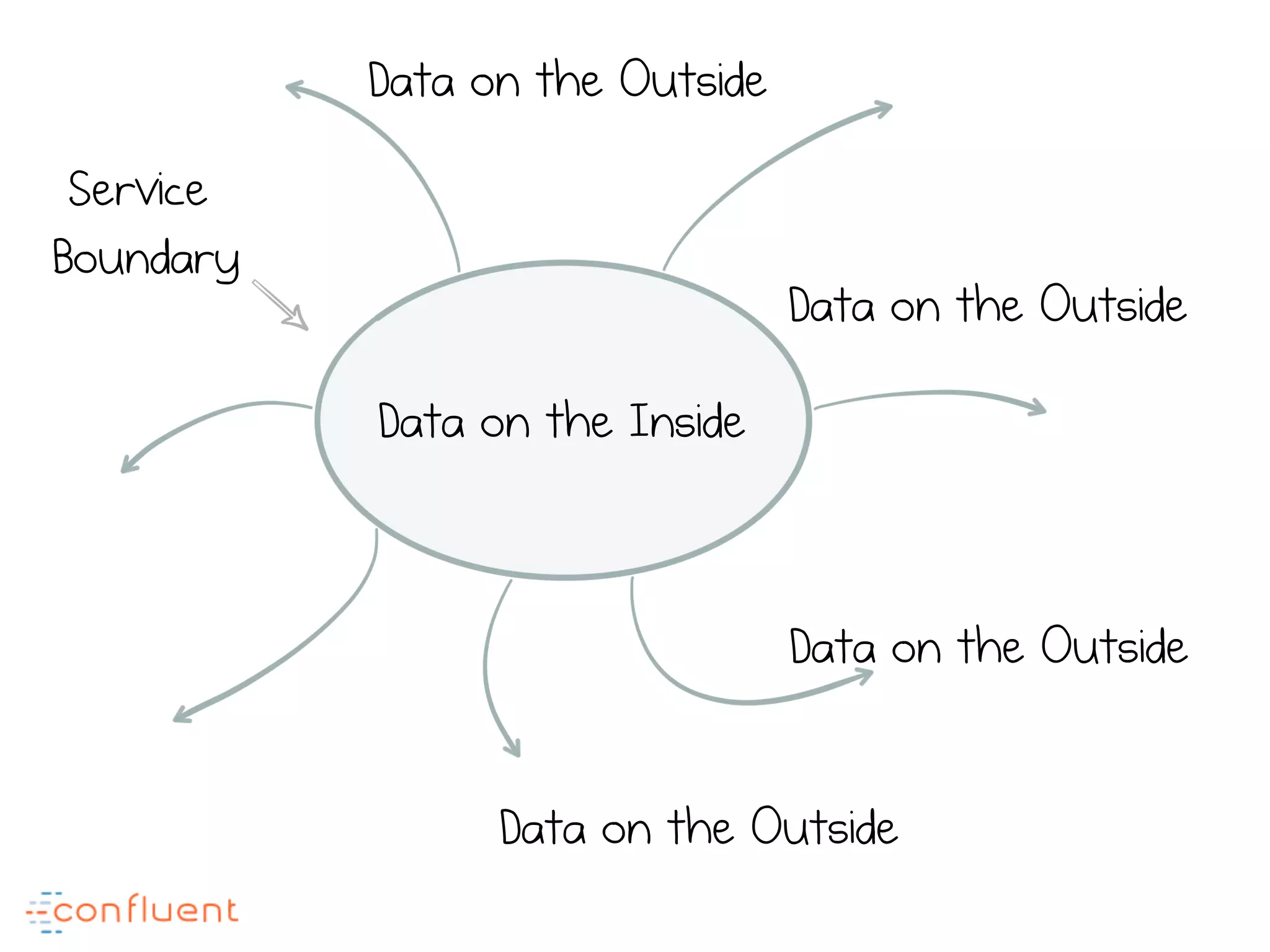

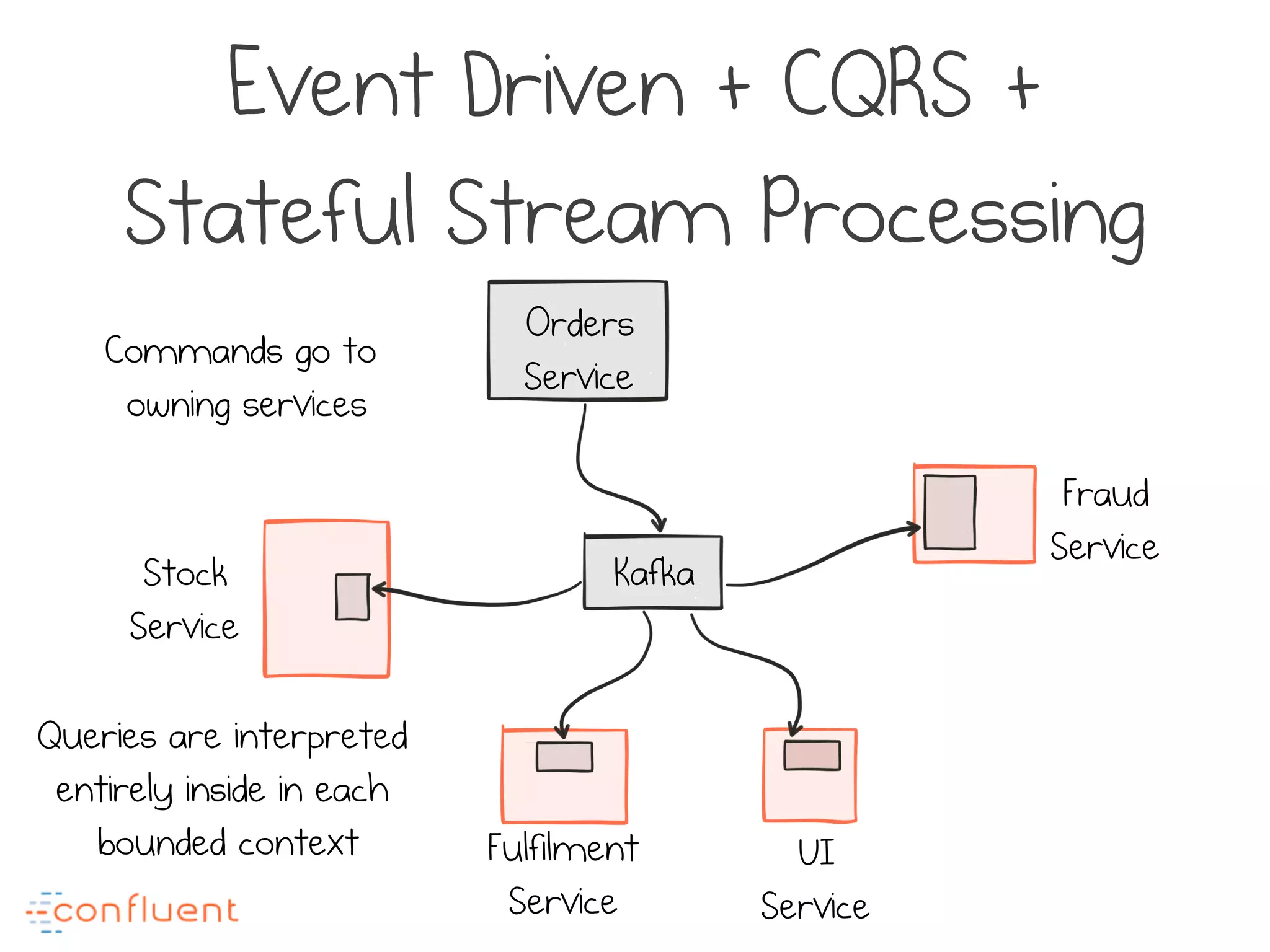

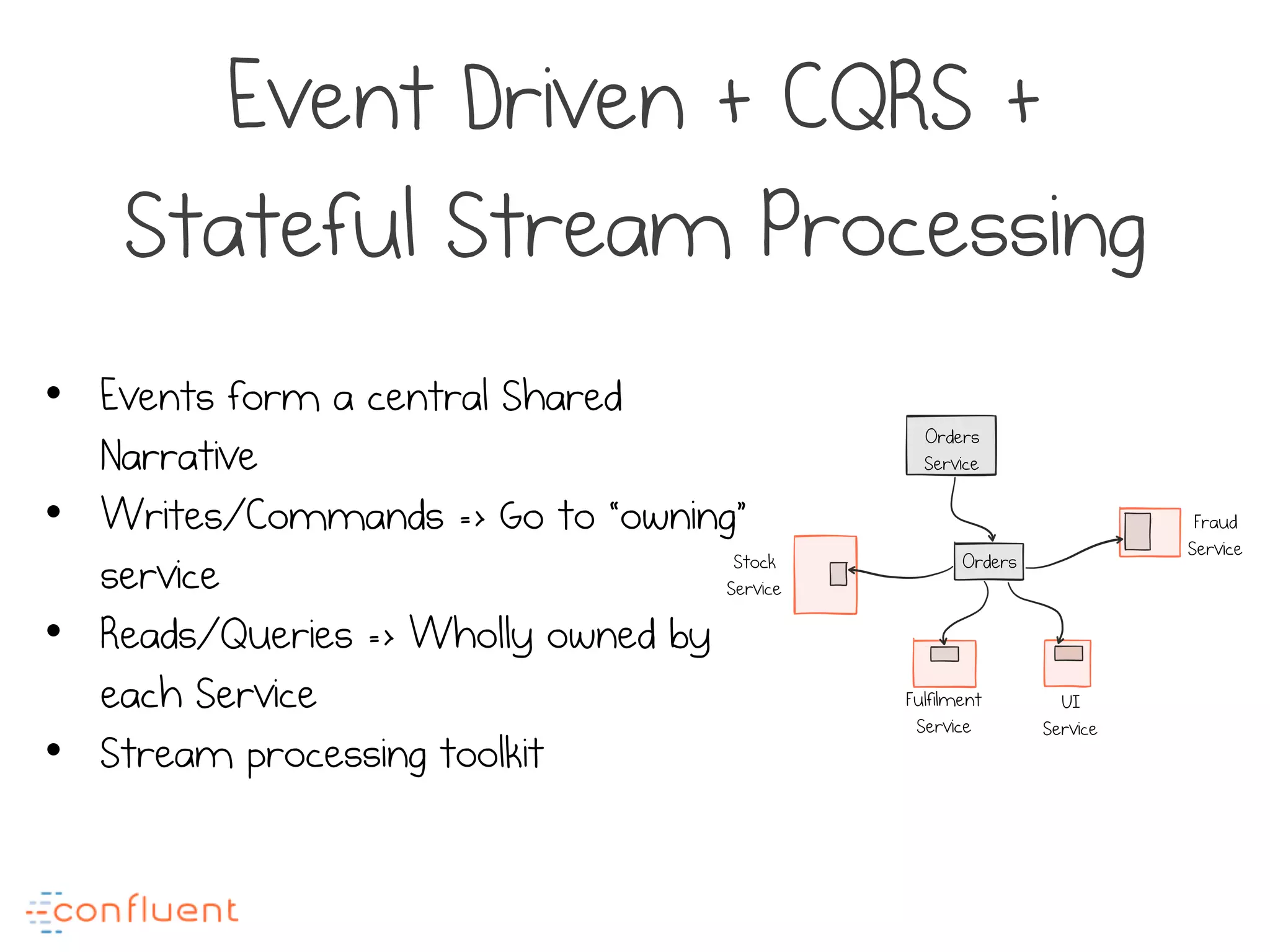

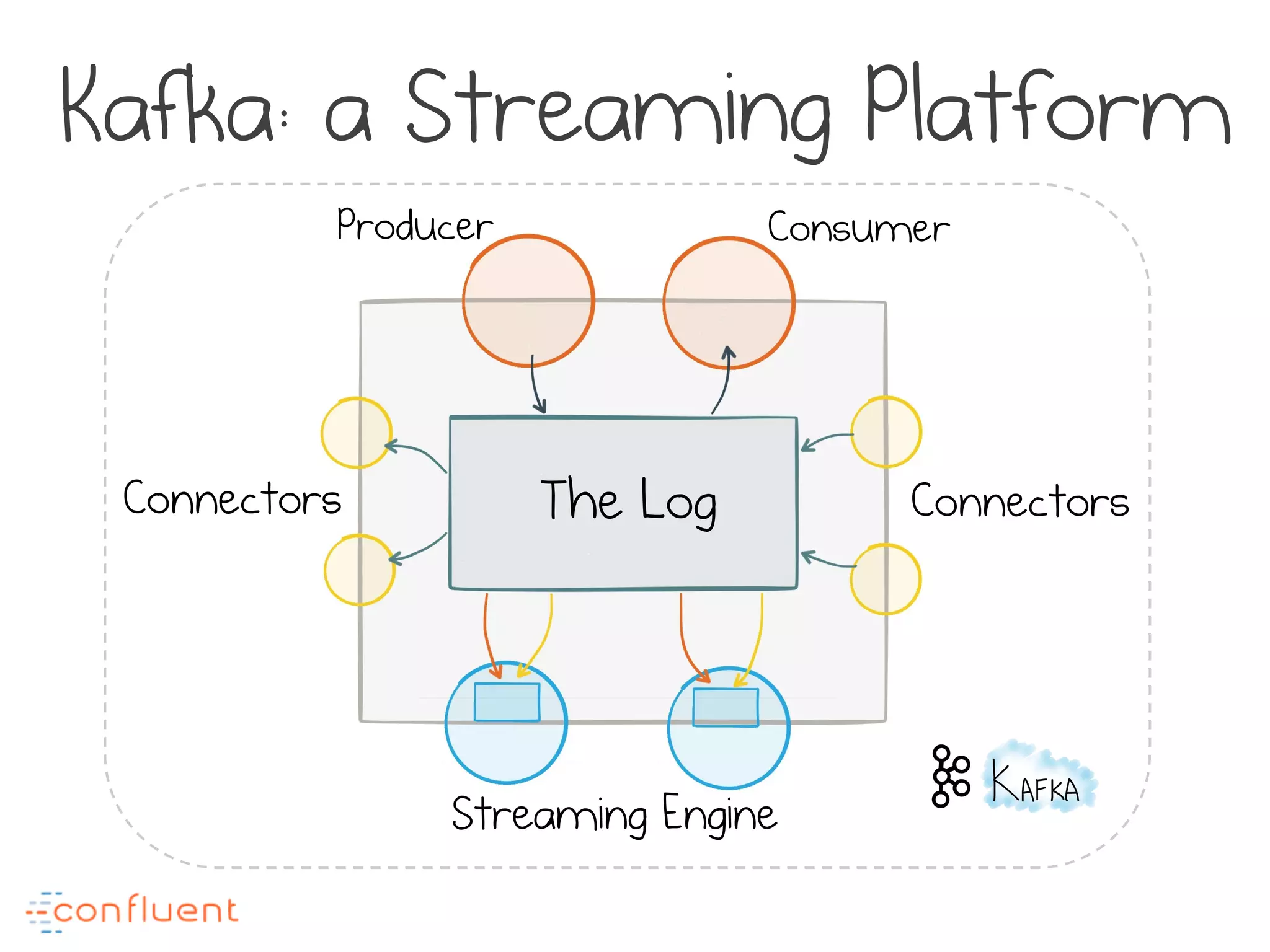

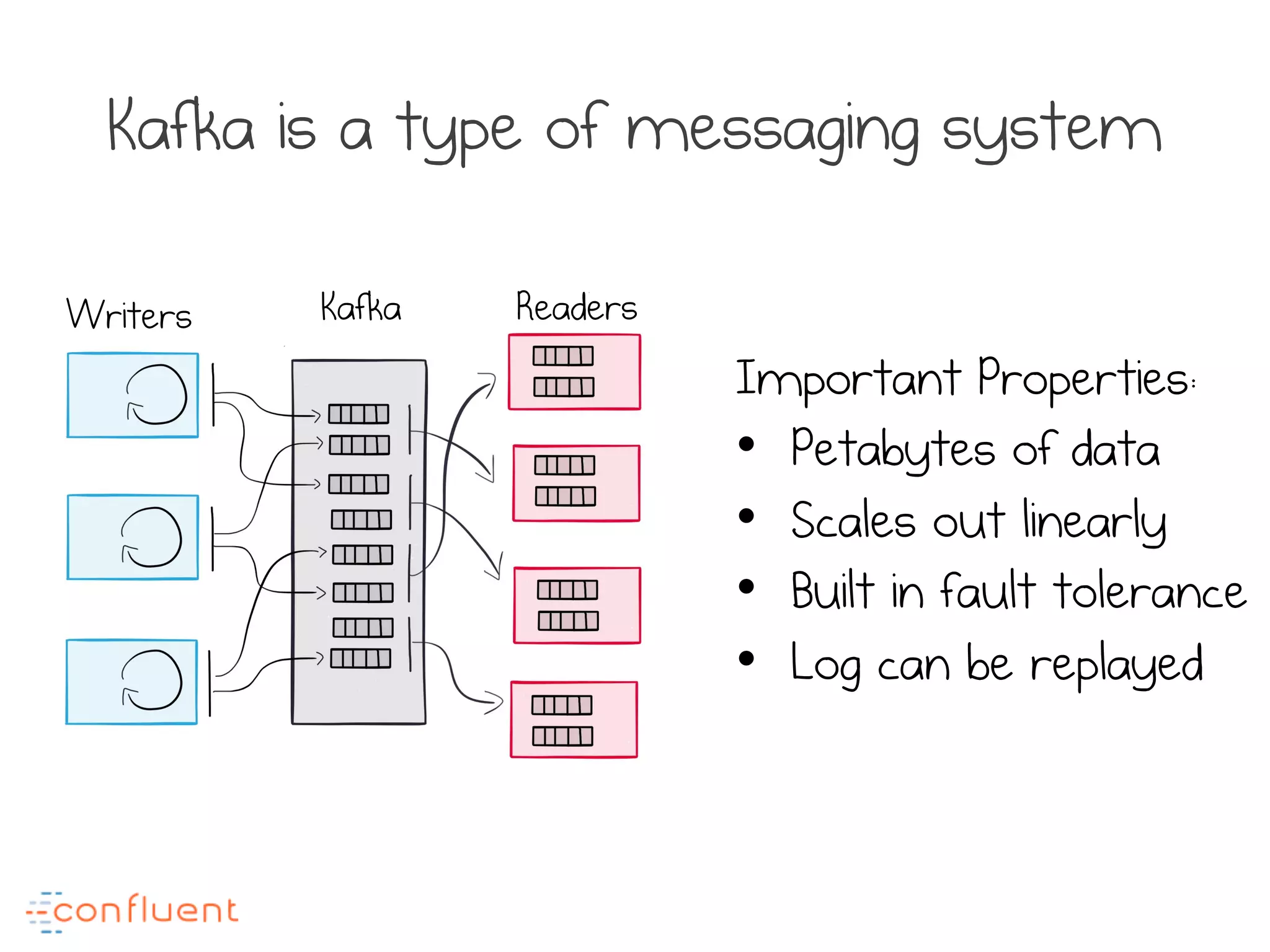

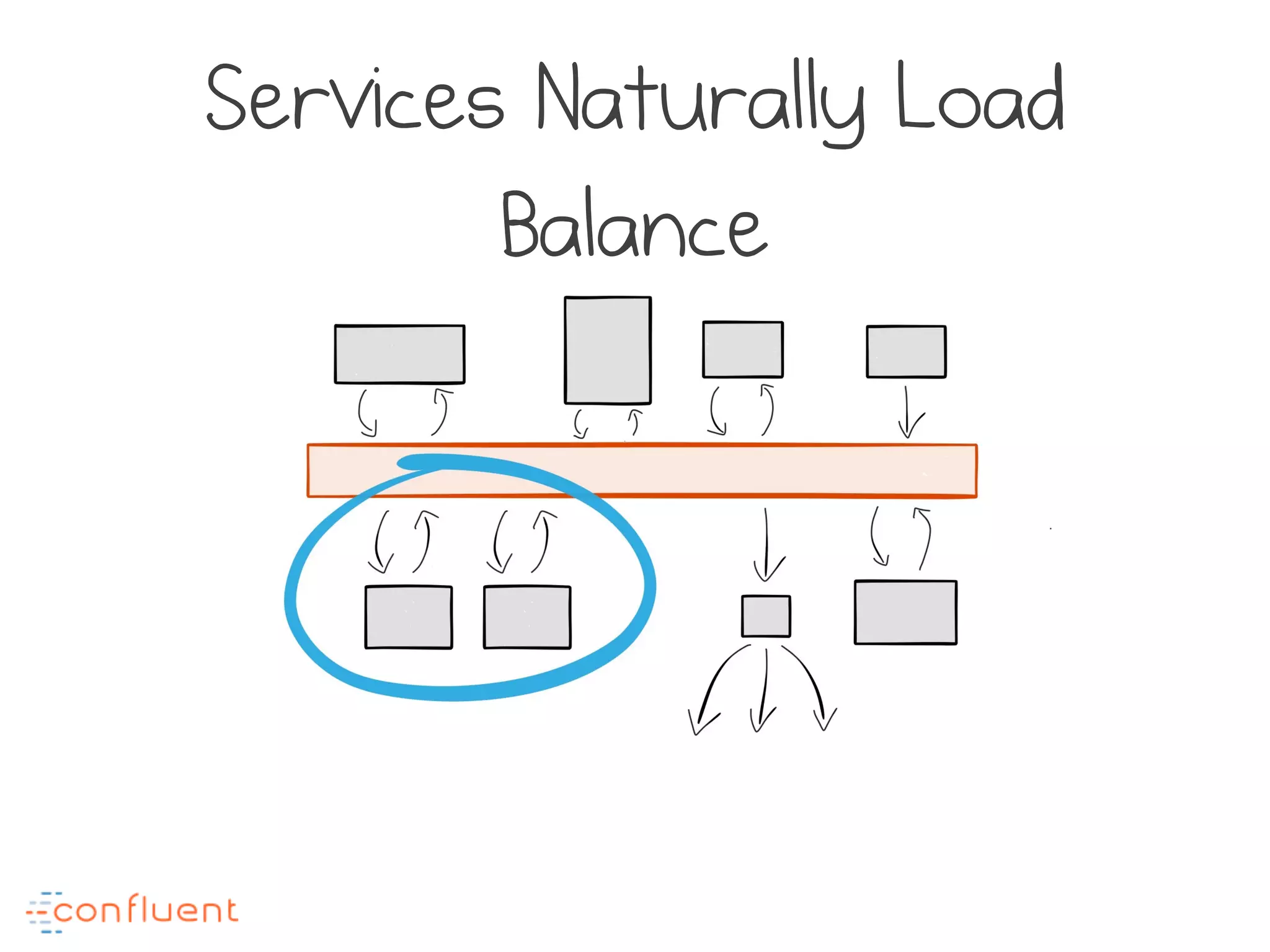

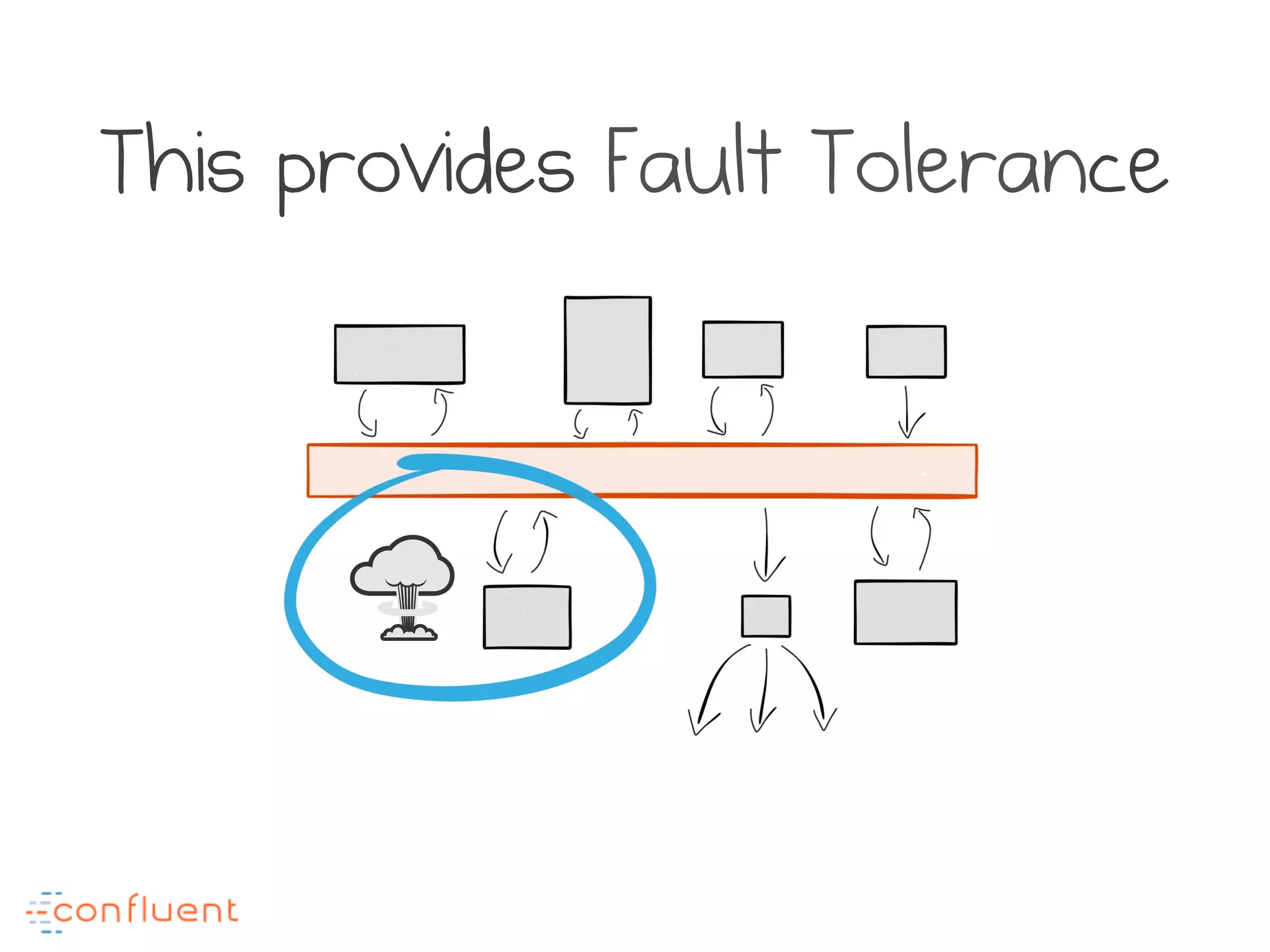

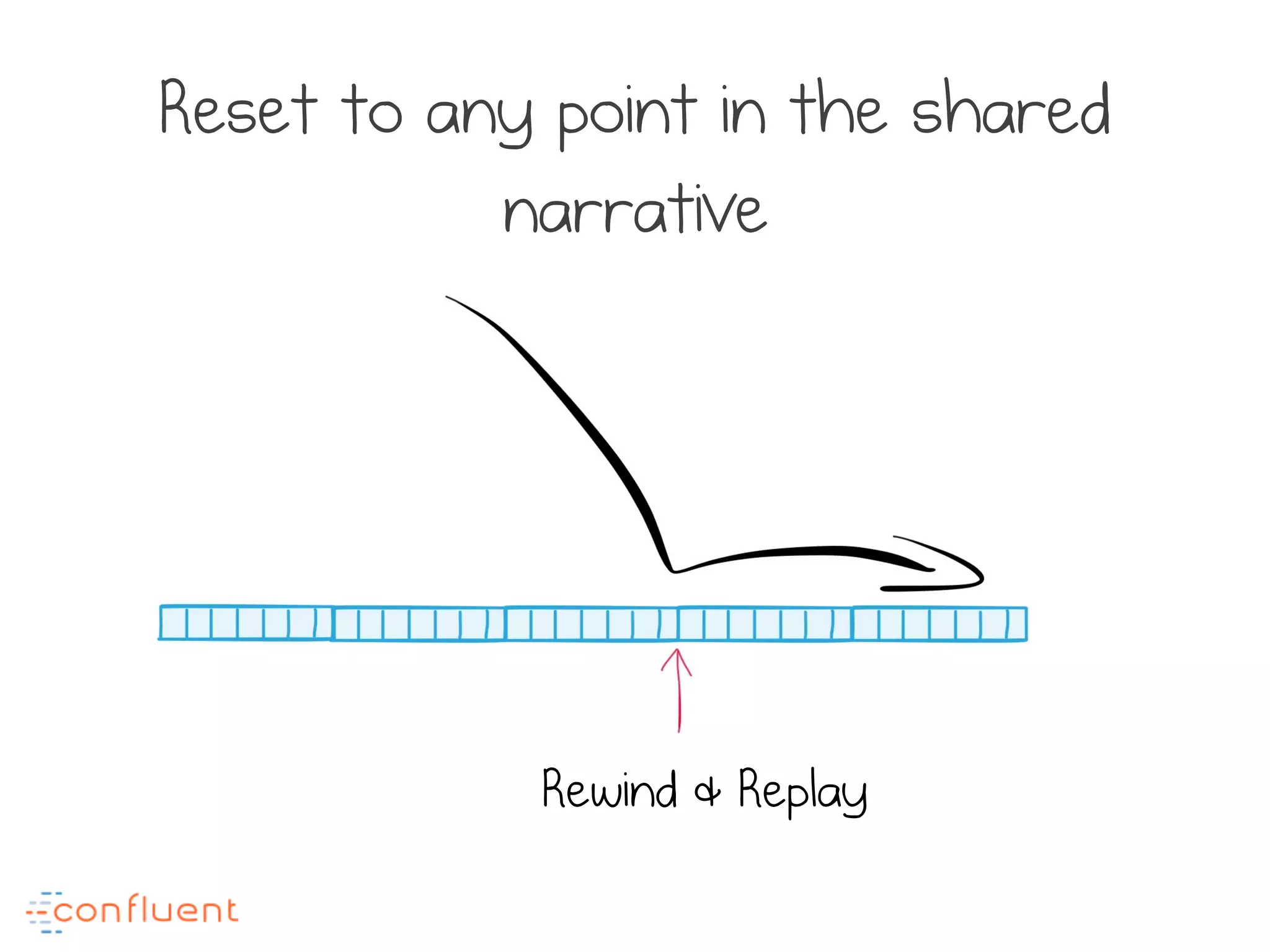

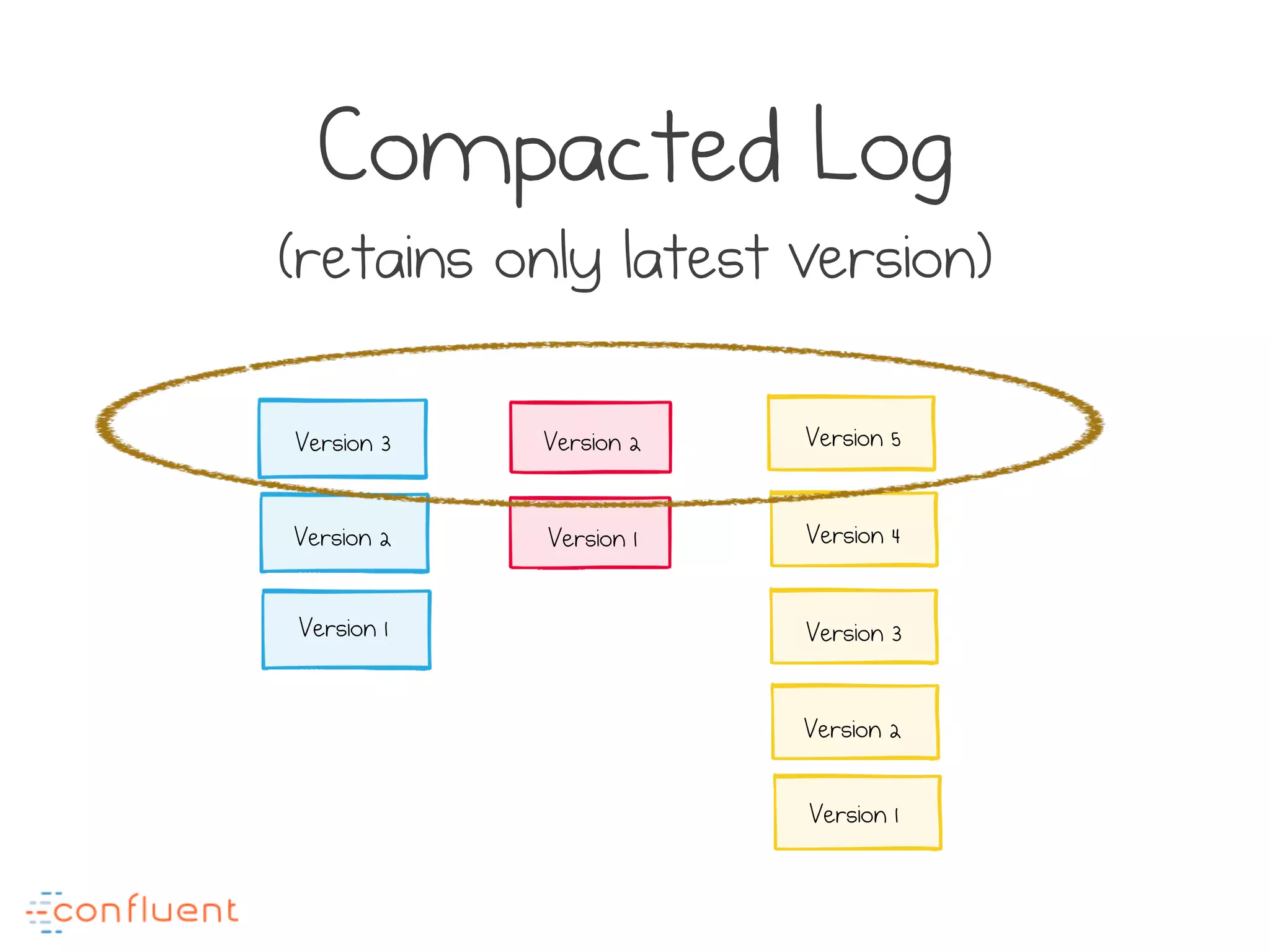

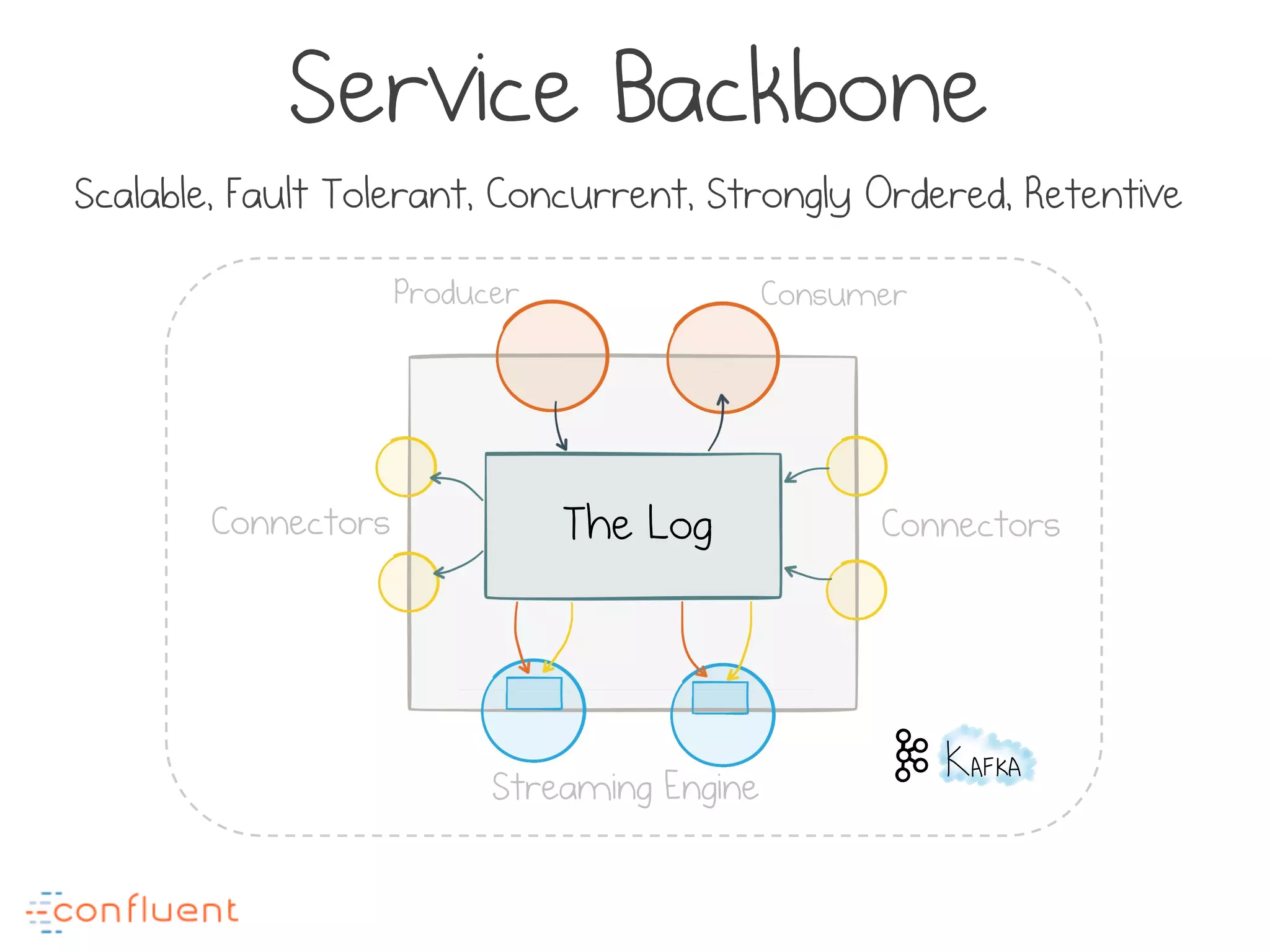

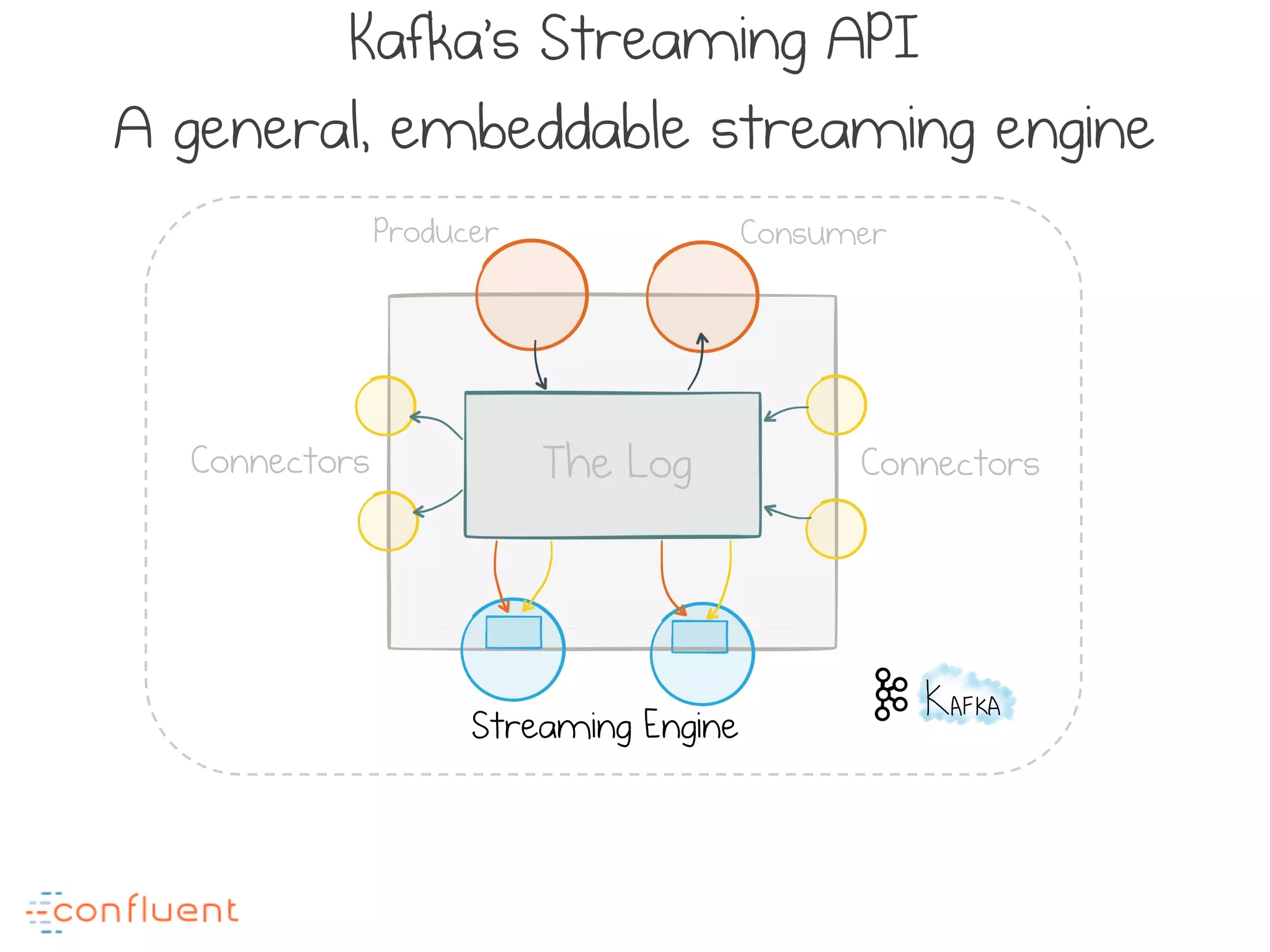

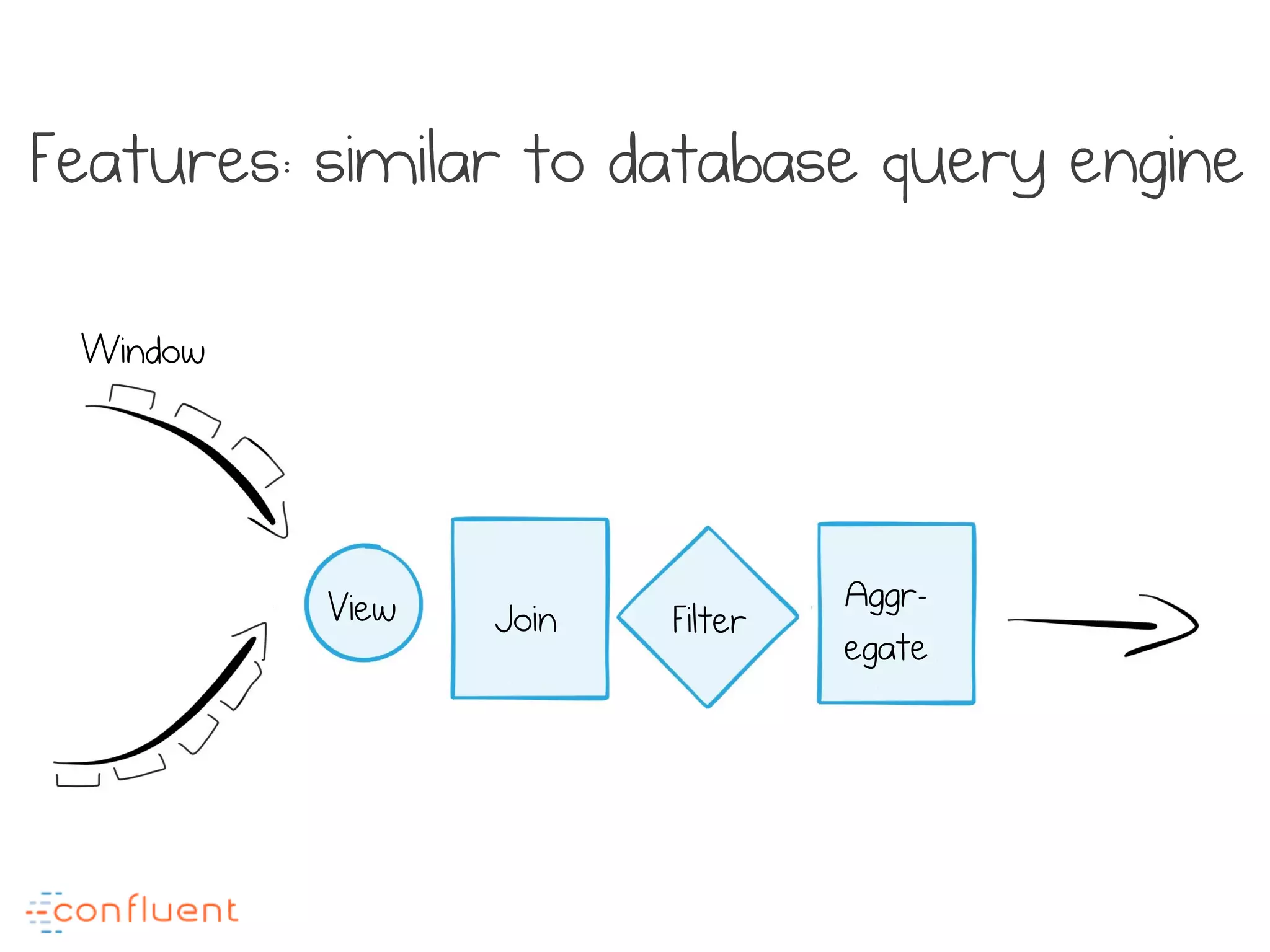

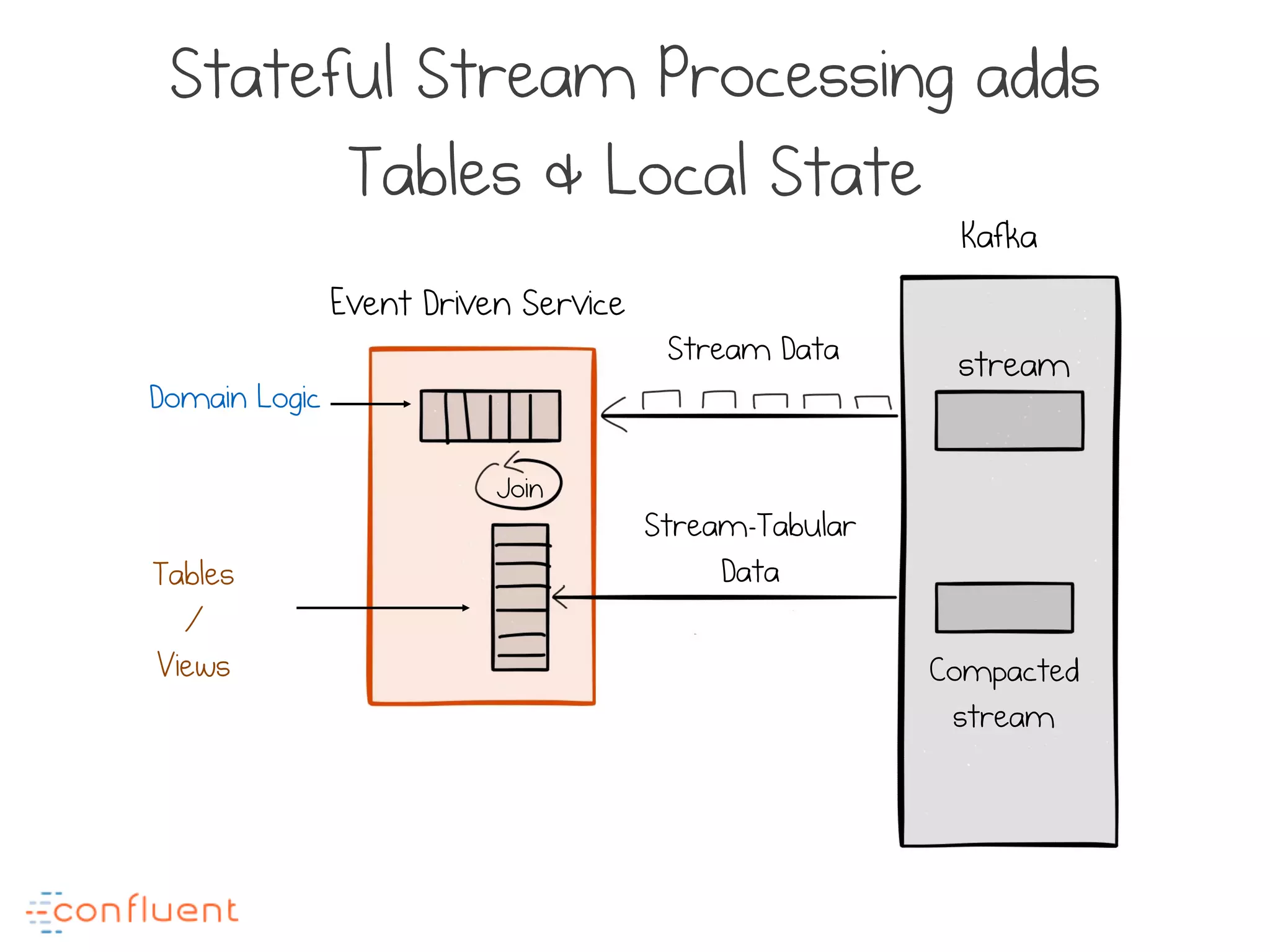

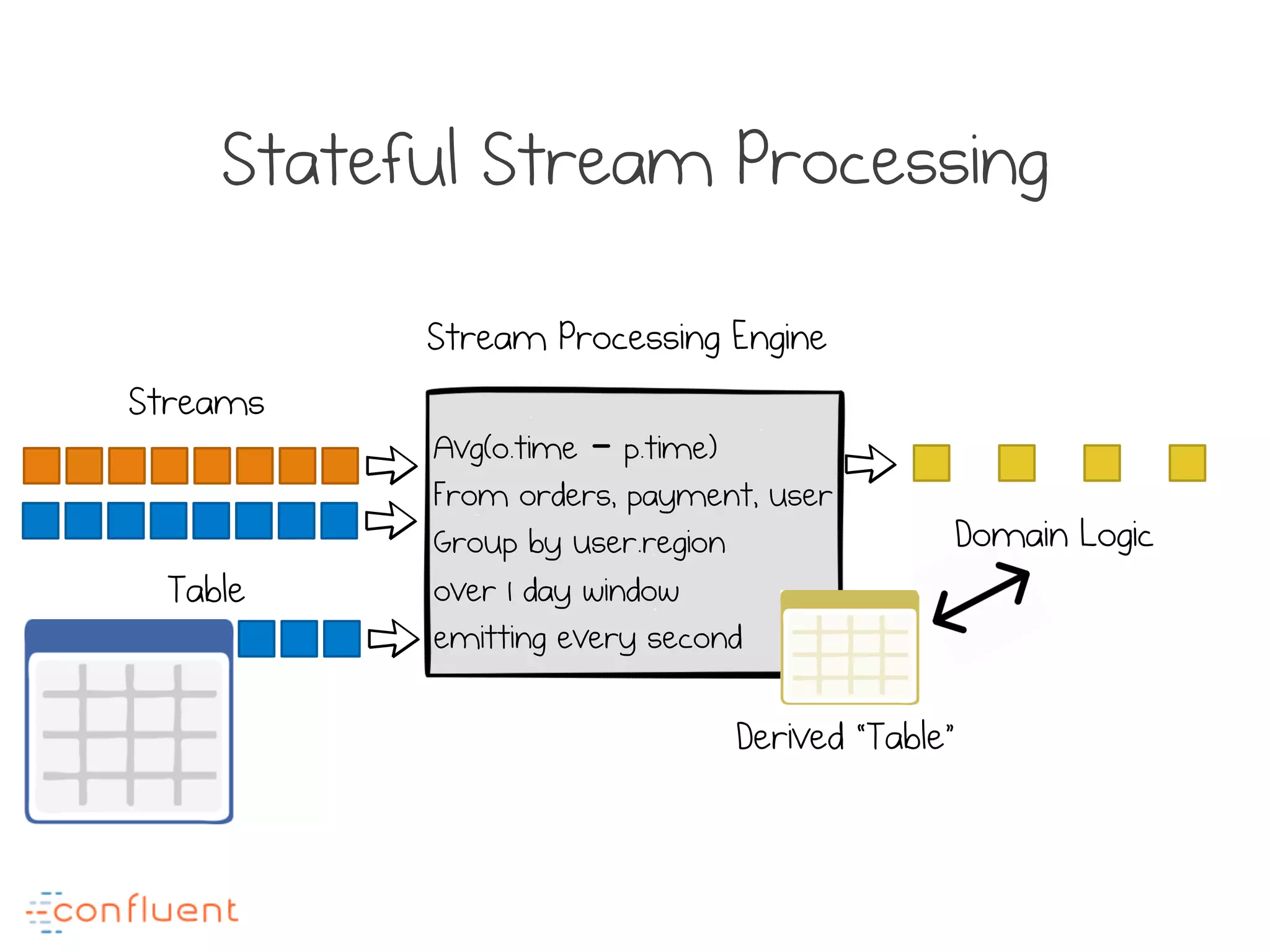

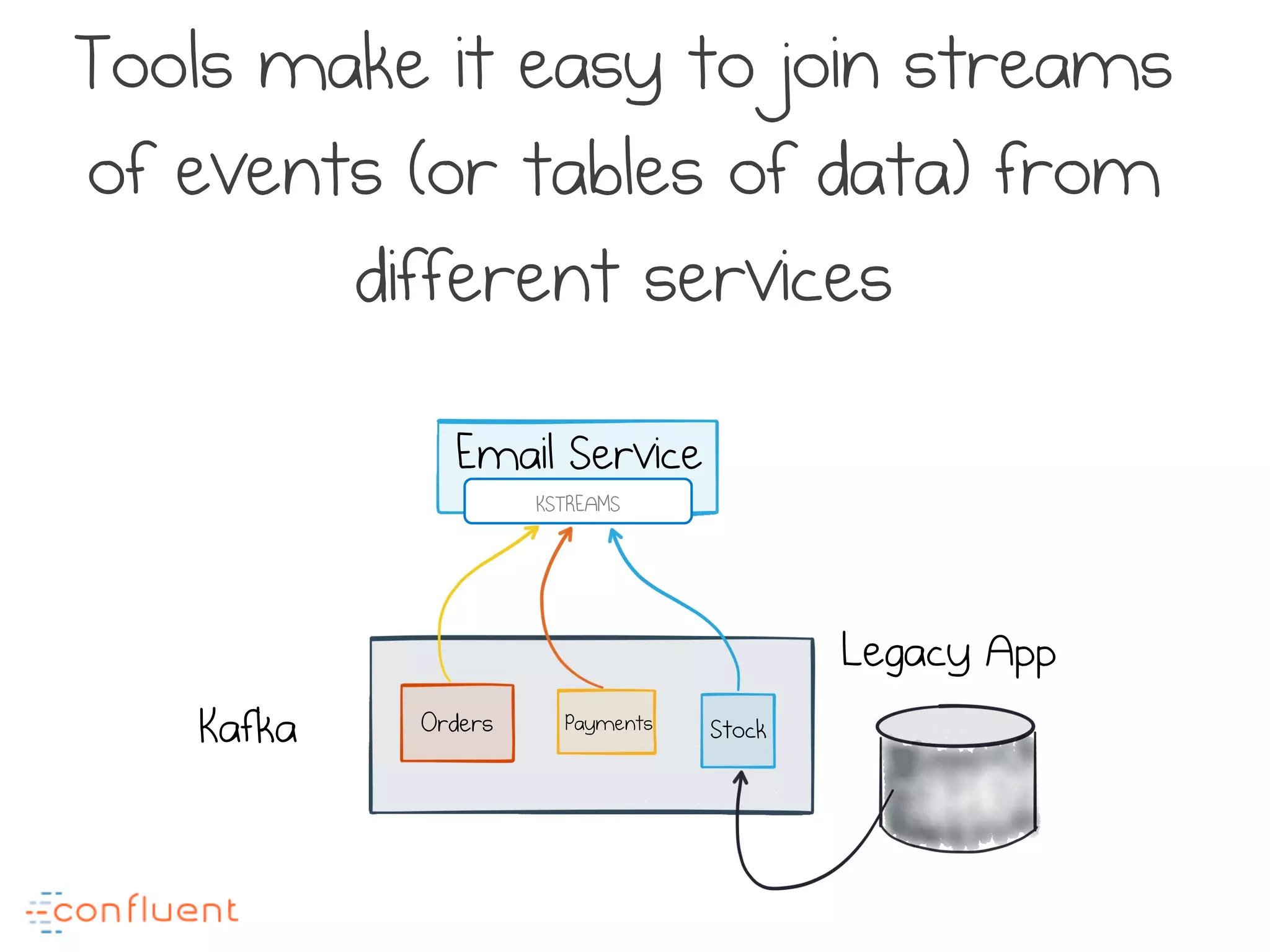

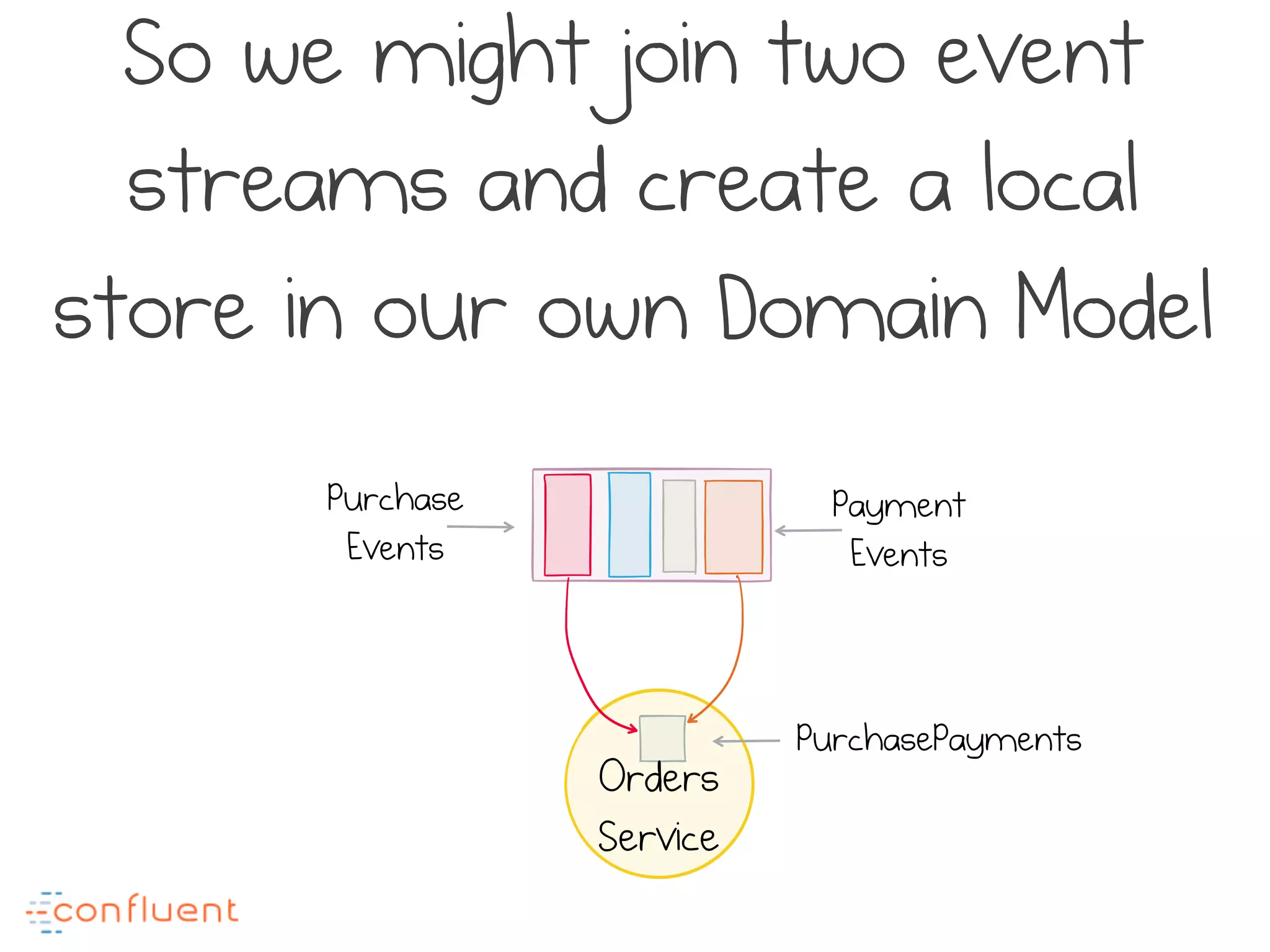

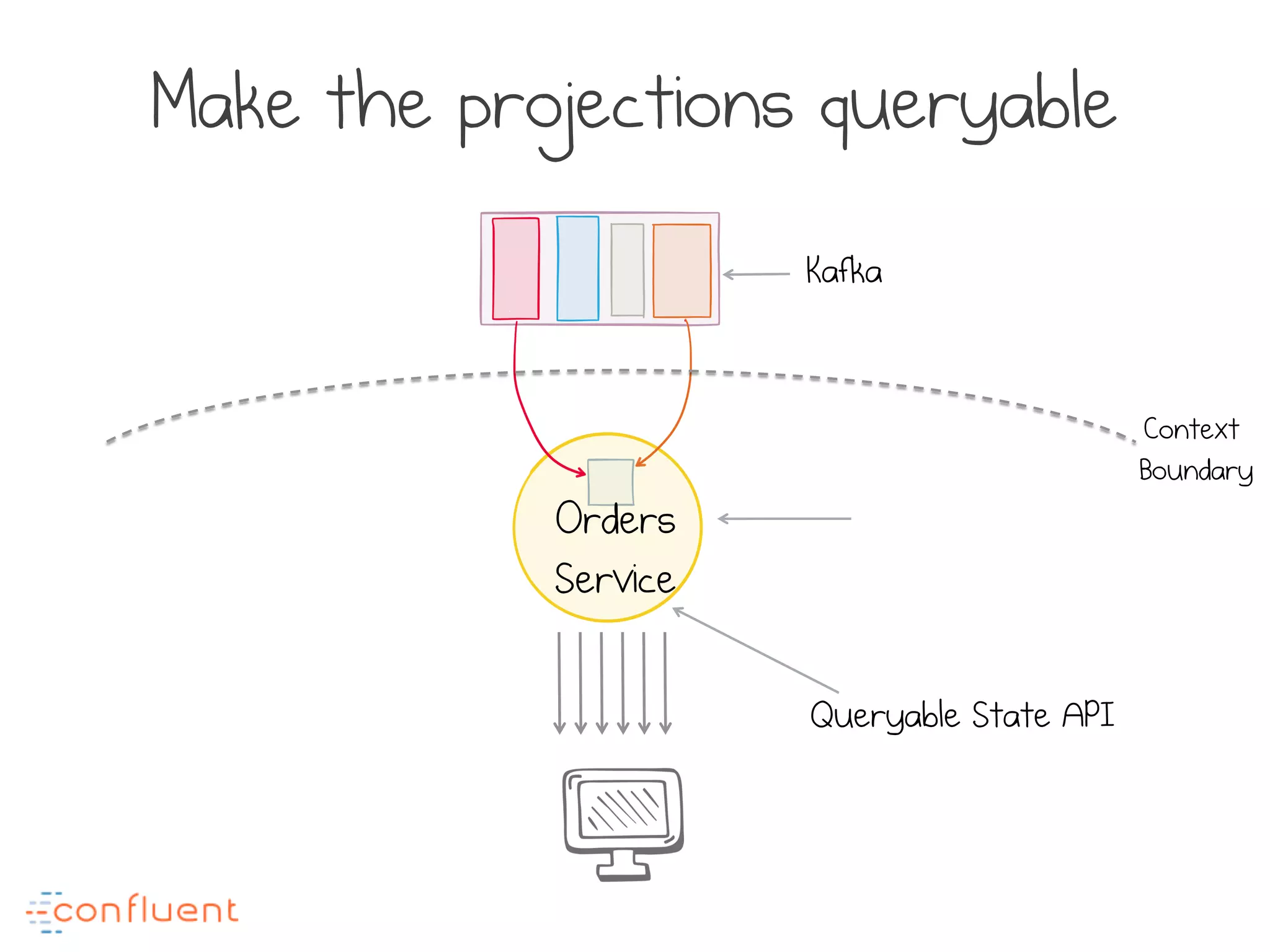

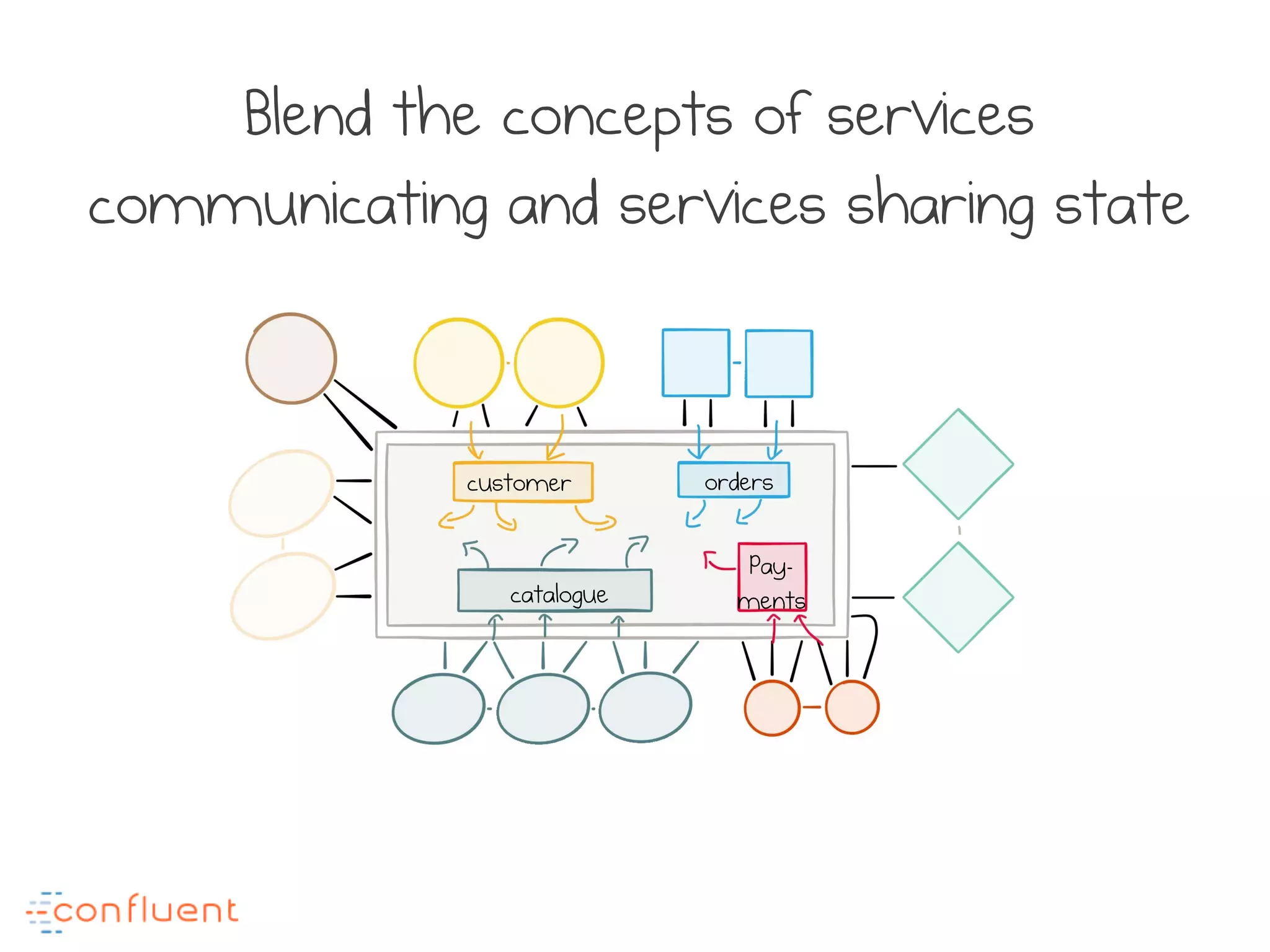

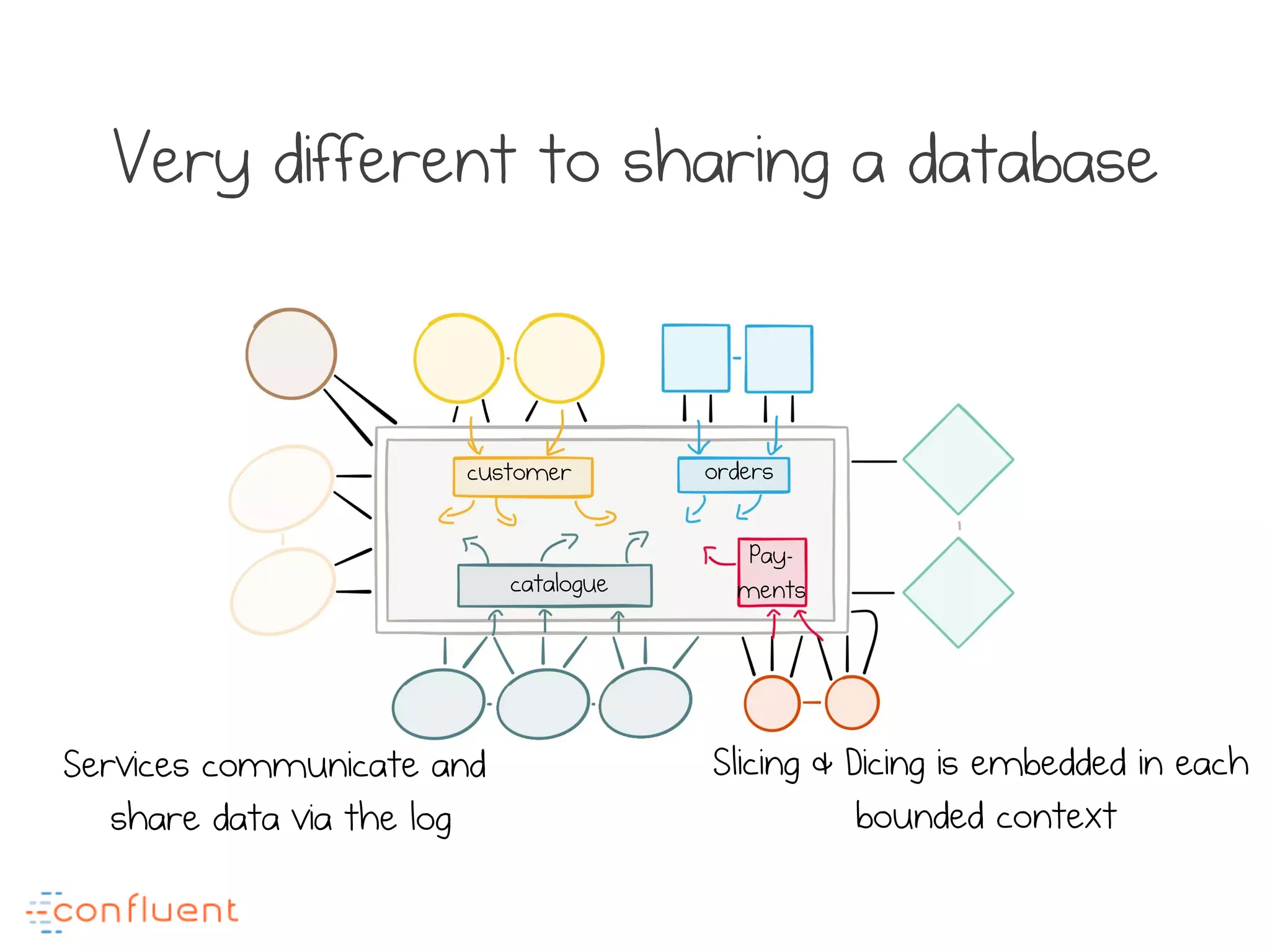

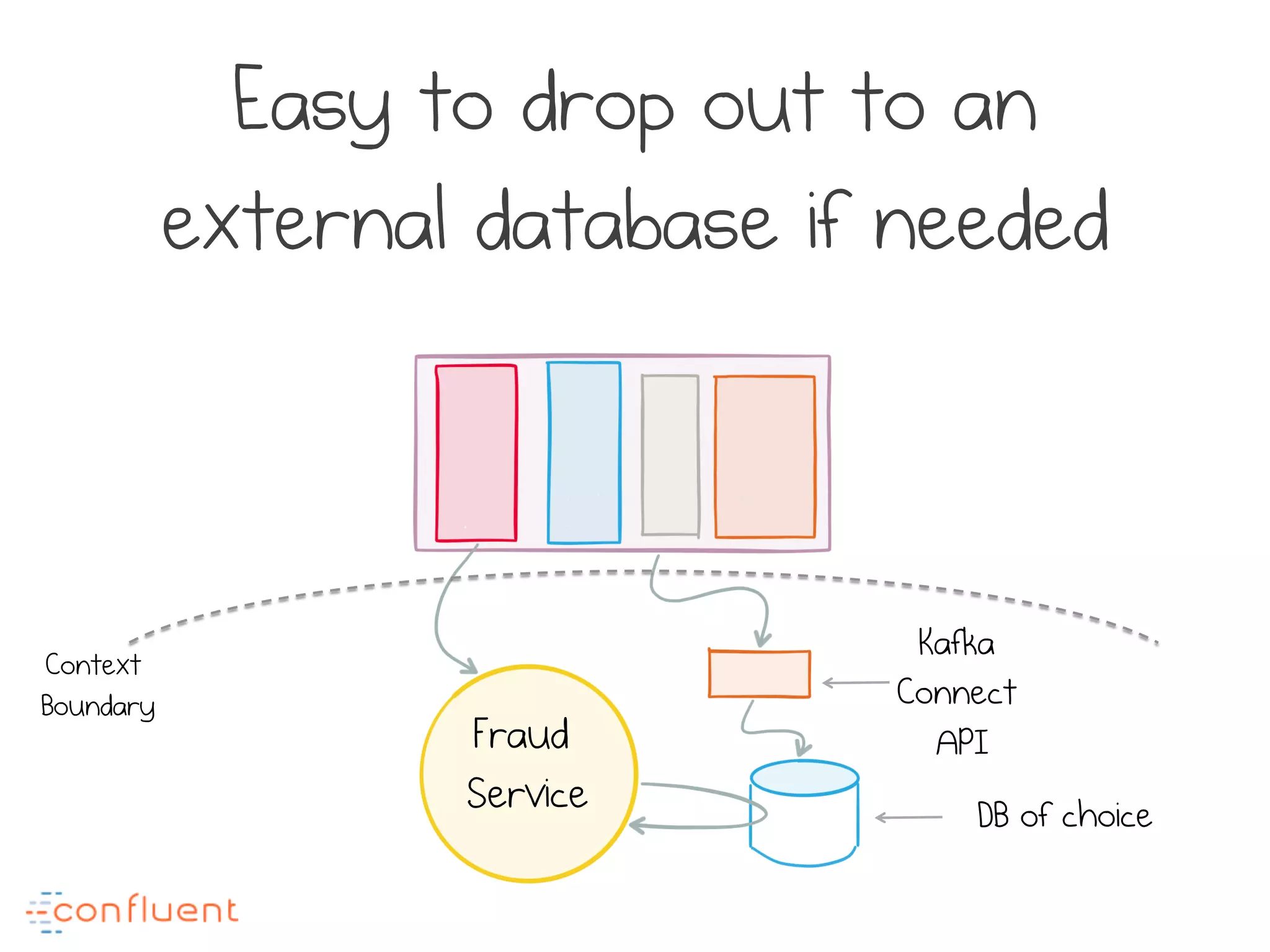

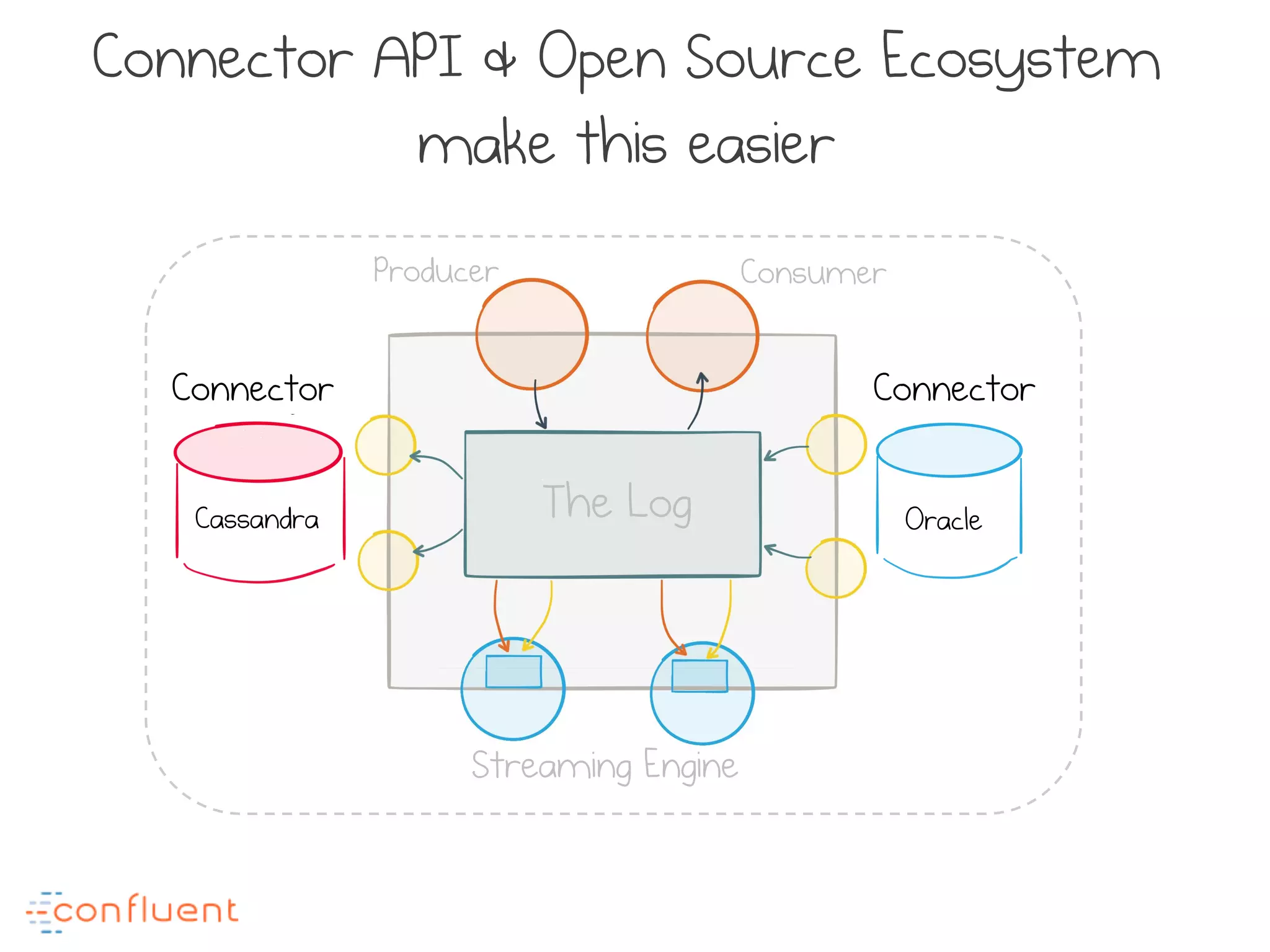

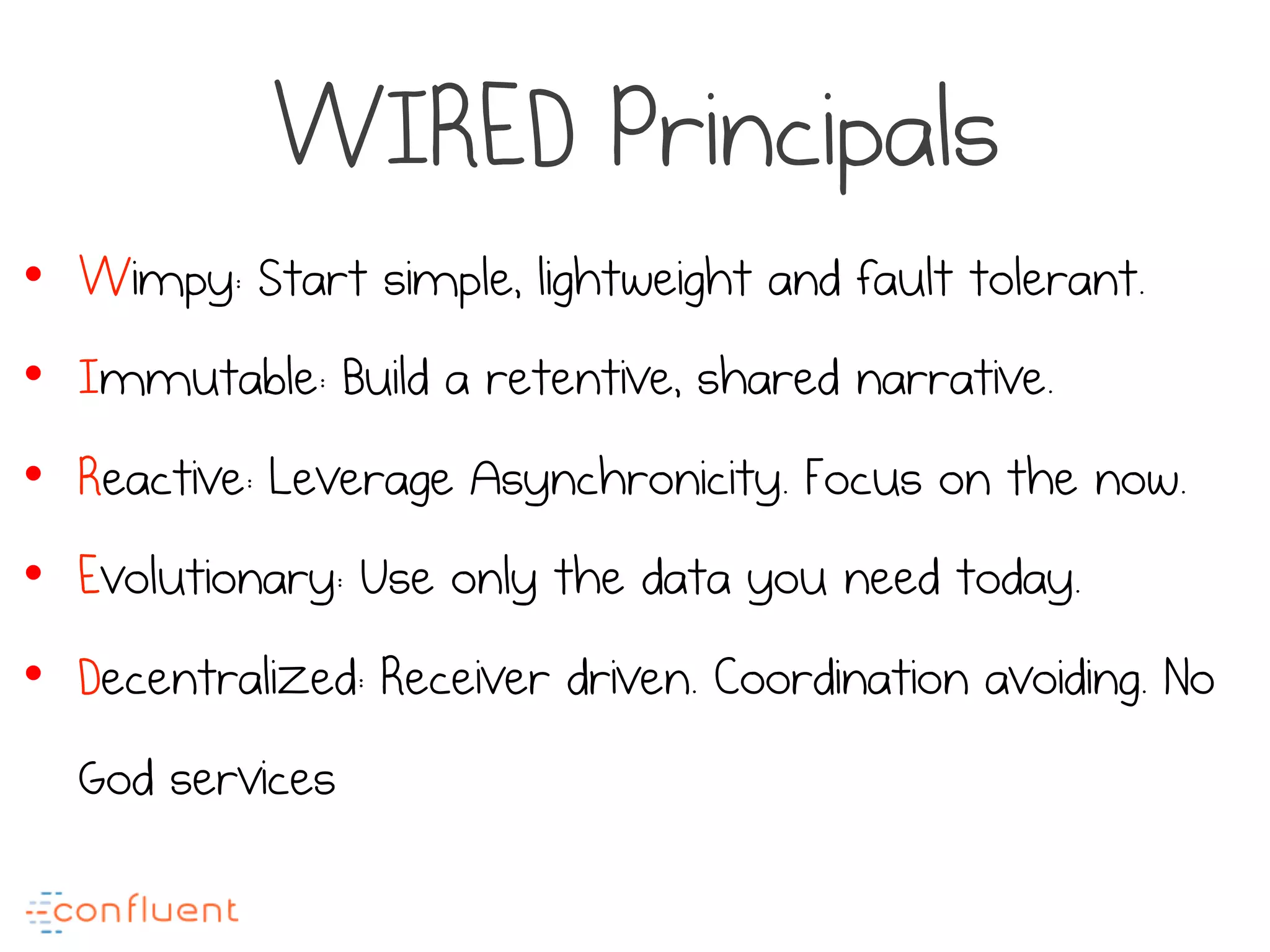

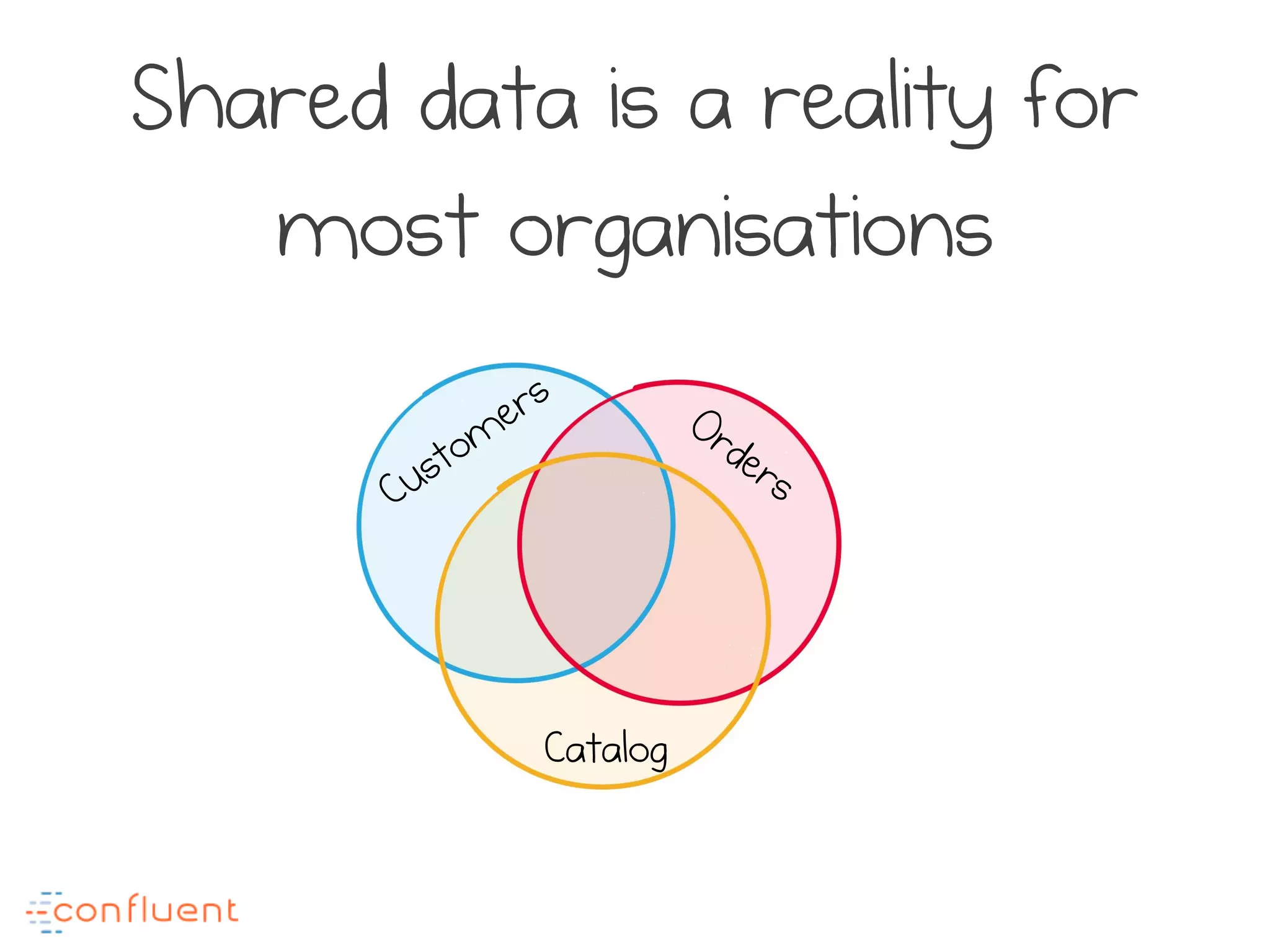

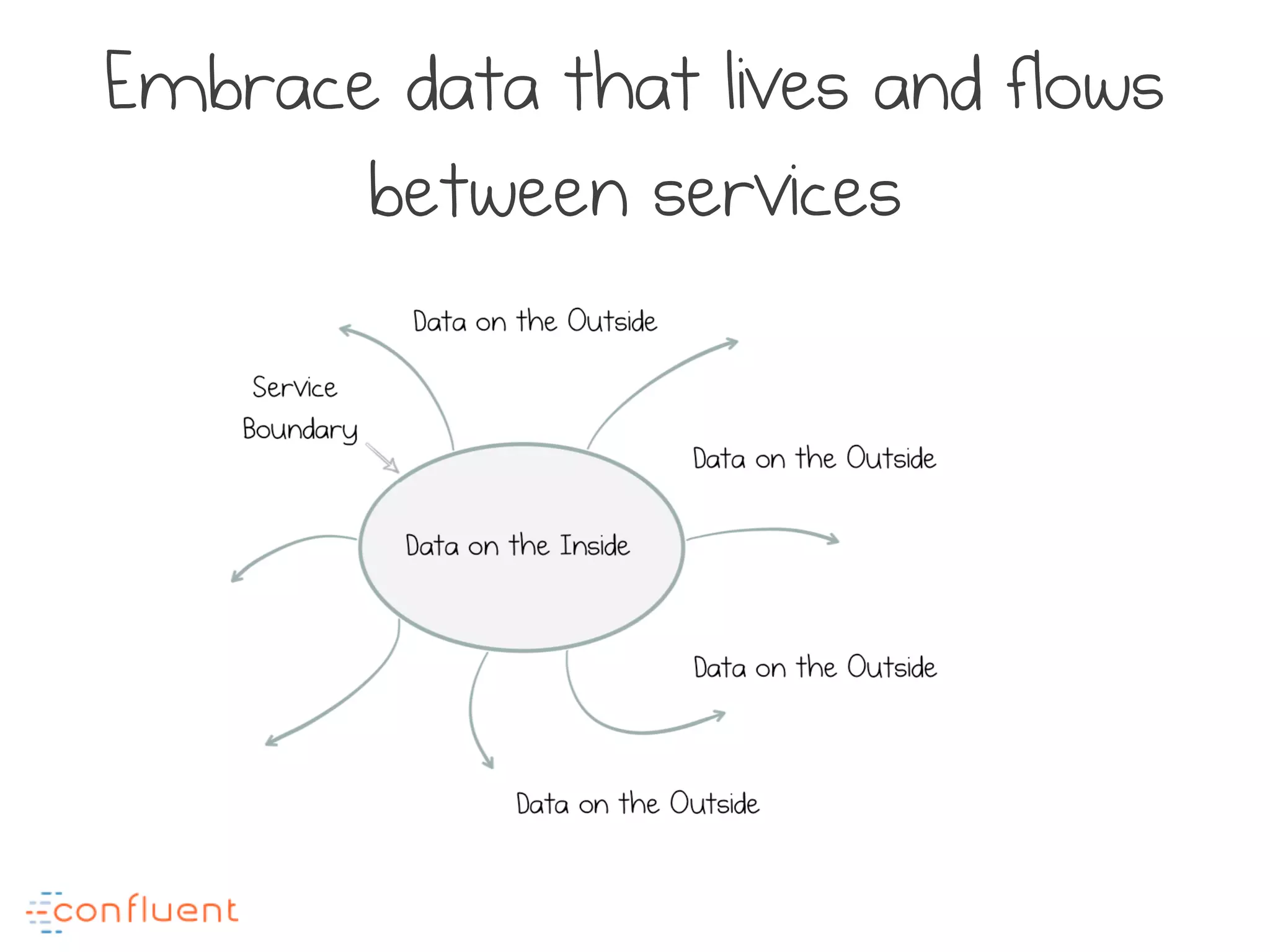

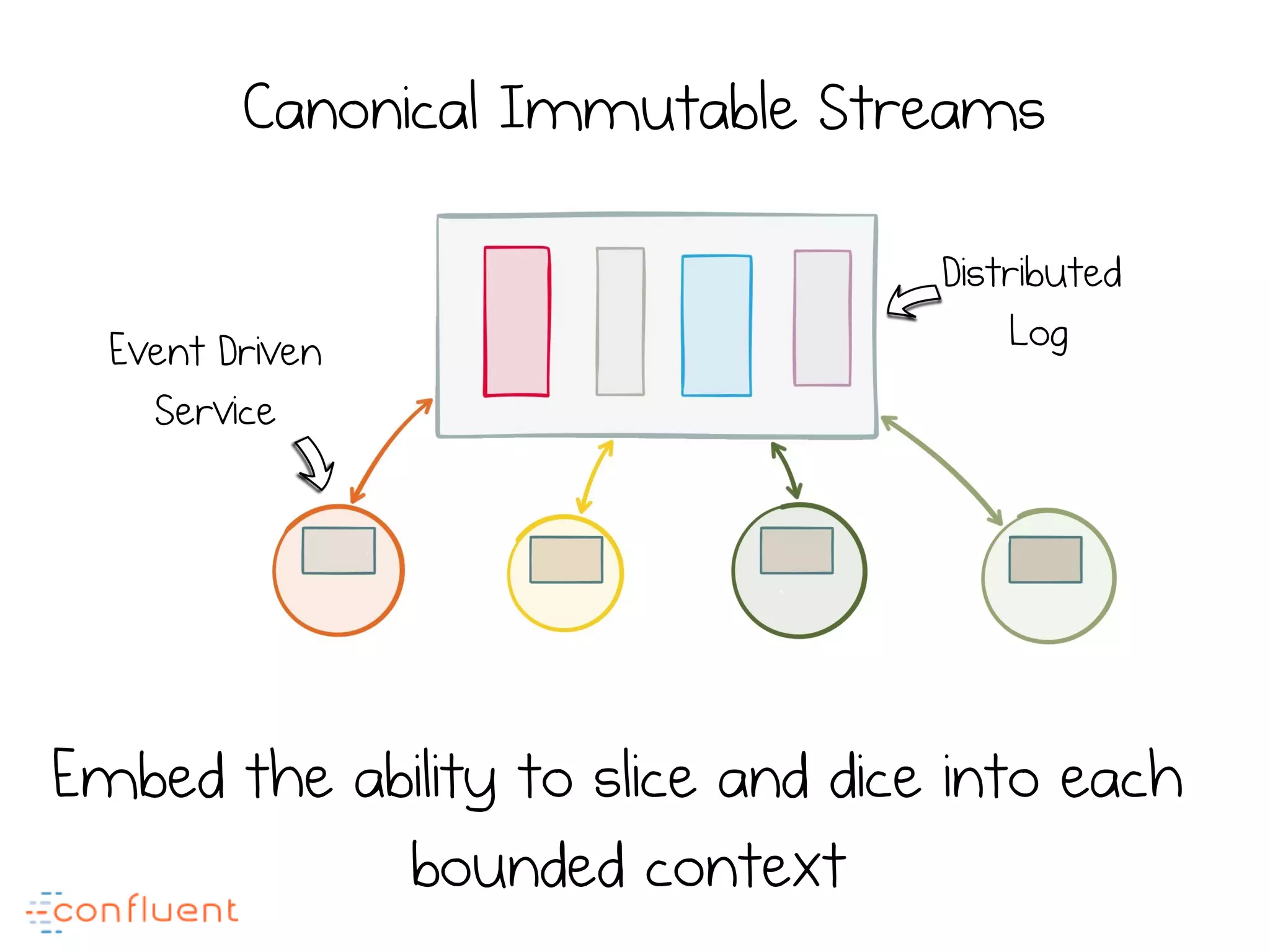

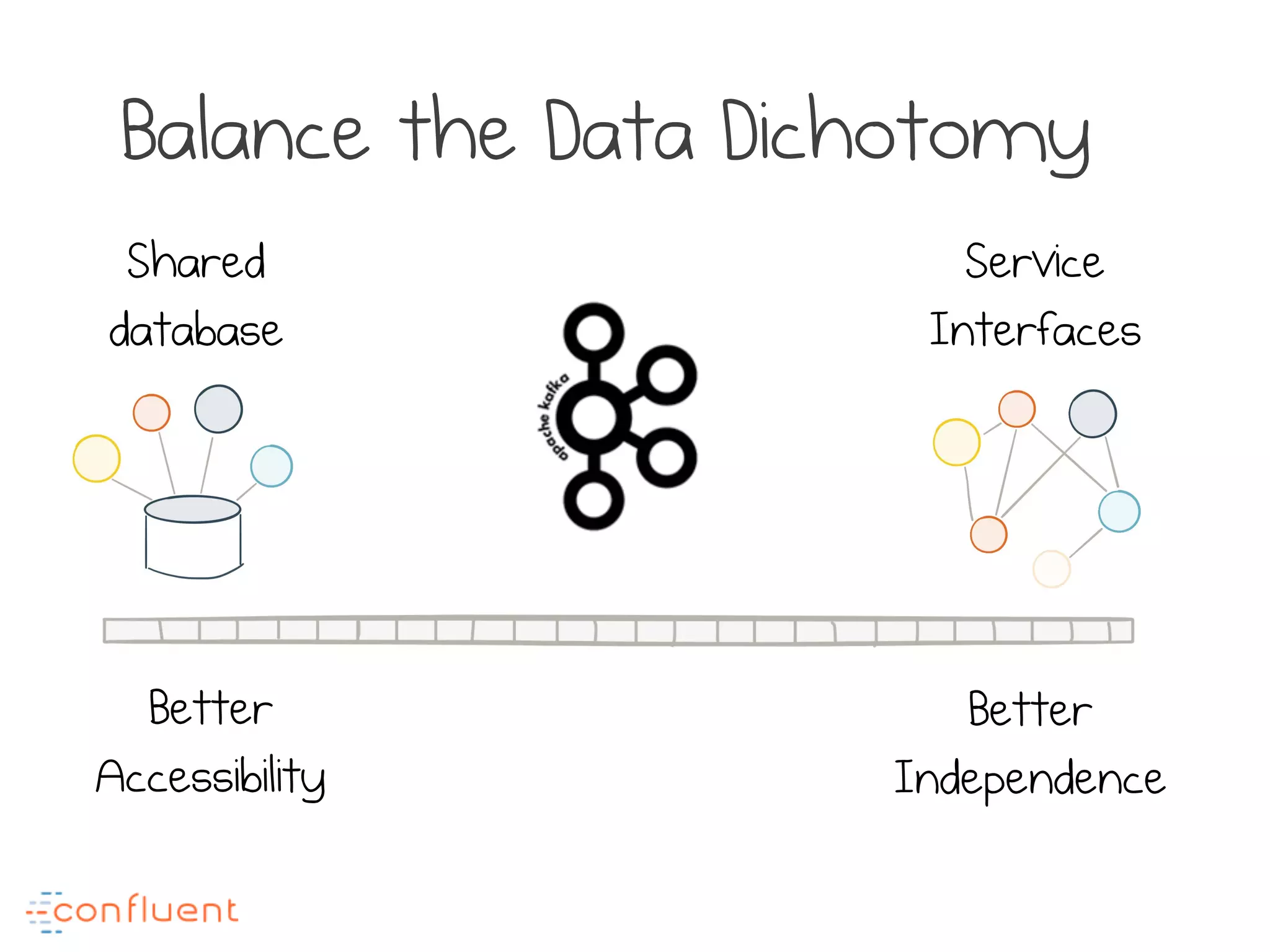

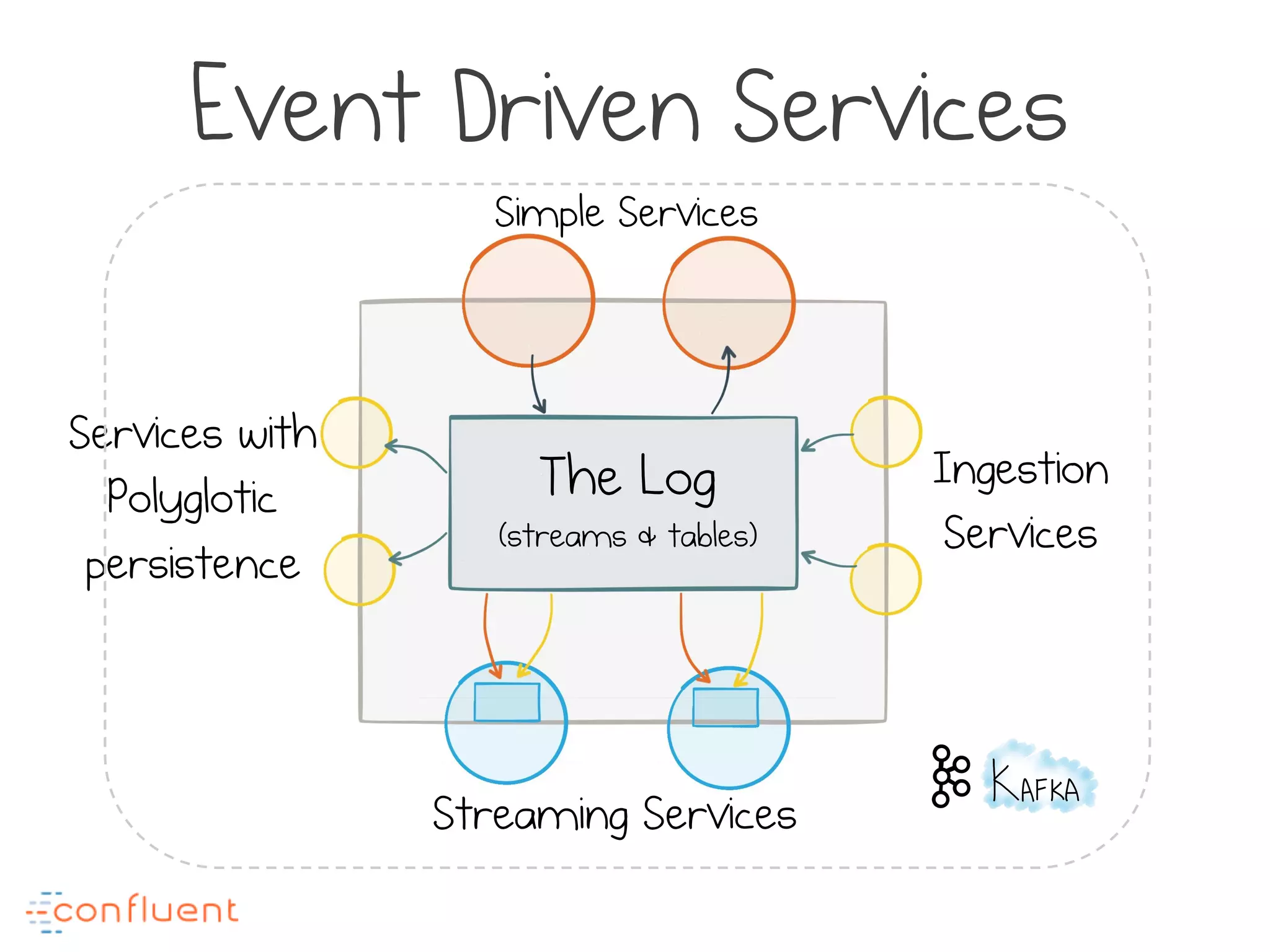

The document rethinks how data and services fit together by using streams. It argues that microservices are about independence, but that this independence comes at the cost of data divergence. It proposes streams as a shared data backbone, with immutable event streams providing a central narrative. Services stay independent while reading the same shared data, and each can slice and dice that data inside its own service boundary using stream processing. It concludes that embracing shared streaming data balances the "data dichotomy" between exposing data, as databases do, and hiding it, as services do.
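
The shared-backbone idea can be illustrated with a minimal in-memory sketch. It is not the deck's implementation: `EventLog`, `OrderTotalsView`, `RefundCountView`, and the event kinds are hypothetical names chosen for illustration, and a plain Python list stands in for a real streaming platform. The sketch shows one immutable, append-only log read by two independent services, each deriving its own local view inside its own boundary.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: events cannot be mutated once written
class Event:
    offset: int
    key: str
    kind: str
    amount: int


class EventLog:
    """Append-only in-memory log (hypothetical stand-in for a shared stream)."""

    def __init__(self):
        self._events = []

    def append(self, key, kind, amount):
        event = Event(len(self._events), key, kind, amount)
        self._events.append(event)
        return event

    def read_from(self, offset=0):
        # Return a tuple so readers cannot alter the log's contents.
        return tuple(self._events[offset:])


class OrderTotalsView:
    """One service's private view: net order value per customer."""

    def __init__(self):
        self.totals = defaultdict(int)
        self.next_offset = 0  # each reader tracks its own position

    def catch_up(self, log):
        for event in log.read_from(self.next_offset):
            if event.kind == "order_placed":
                self.totals[event.key] += event.amount
            elif event.kind == "order_refunded":
                self.totals[event.key] -= event.amount
            self.next_offset = event.offset + 1


class RefundCountView:
    """A second service's private view, derived from the same events."""

    def __init__(self):
        self.counts = defaultdict(int)
        self.next_offset = 0

    def catch_up(self, log):
        for event in log.read_from(self.next_offset):
            if event.kind == "order_refunded":
                self.counts[event.key] += 1
            self.next_offset = event.offset + 1


# Shared narrative: every service reads the same ordered events.
log = EventLog()
log.append("alice", "order_placed", 30)
log.append("bob", "order_placed", 20)
log.append("alice", "order_refunded", 10)

totals = OrderTotalsView()
refunds = RefundCountView()
totals.catch_up(log)
refunds.catch_up(log)

print(dict(totals.totals))
print(dict(refunds.counts))
```

Because each view keeps its own offset, a service can be rebuilt or given a new view by replaying the log from offset 0, without coordinating with the other services. In this sketch the log is the only shared state; the views are local and disposable.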