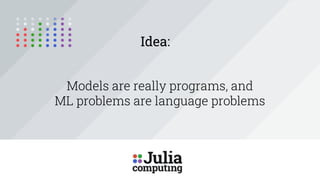

The document discusses the Julia programming language and its use in software development and machine learning. Key points:

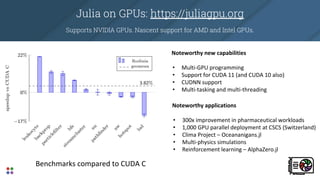

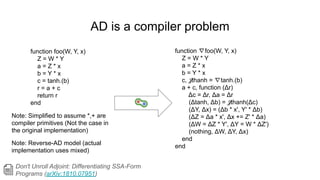

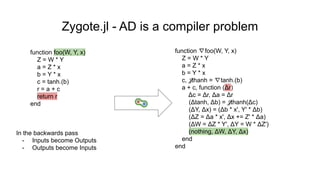

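- Julia aims to solve the "two language problem" (prototyping an algorithm in a high-level language, then rewriting it in a faster low-level language for production) by supporting both algorithm development and production deployment on a single platform.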

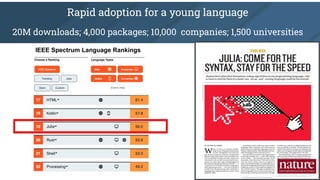

- Julia has seen rapid adoption in recent years, with over 20 million downloads and use by over 10,000 companies and 1,500 universities.

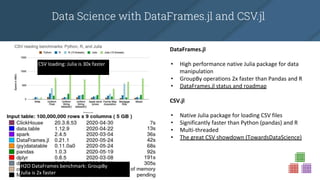

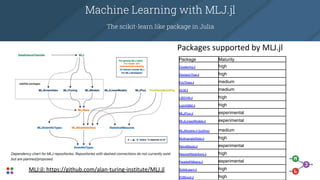

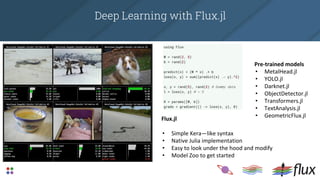

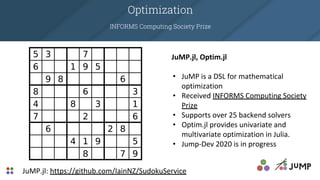

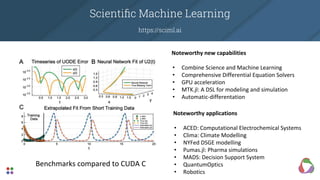

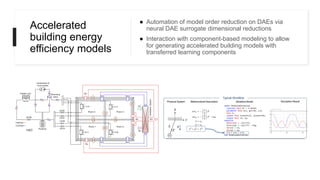

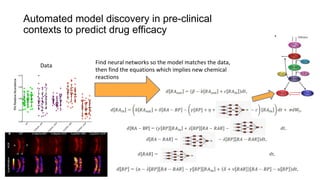

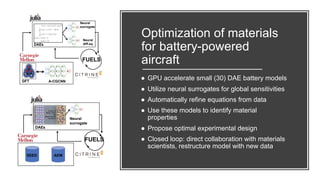

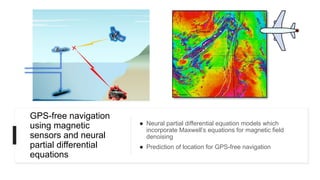

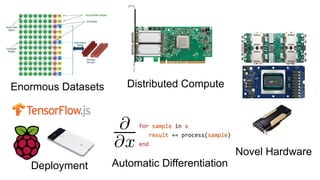

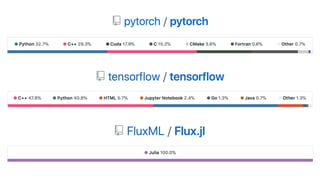

- Julia performs well in data science, machine learning, and scientific computing, and has packages for domains such as computer vision and robotics.

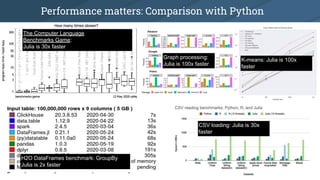

- Julia's just-in-time compilation often lets it outperform Python on numeric tasks, while its ease of use is intended to fall between that of Python and C++.