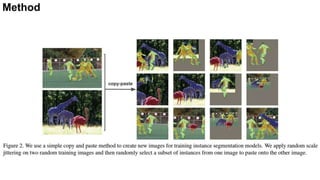

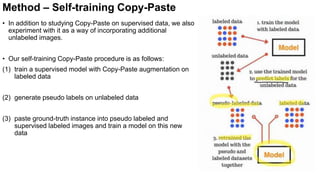

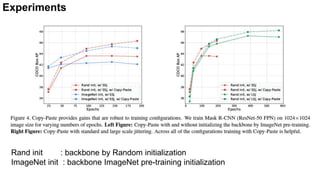

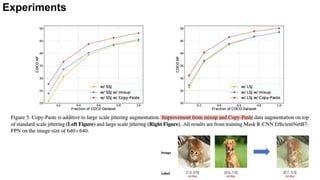

- The document presents a method called Copy-Paste for augmenting instance segmentation datasets by pasting object instances from one image into another.

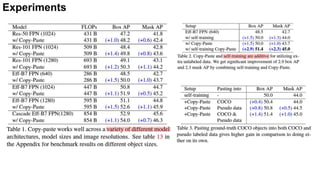

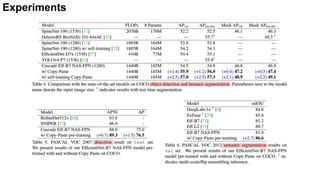

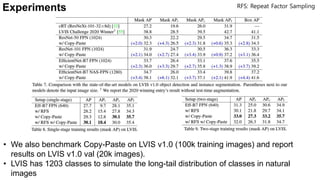

- On COCO, Copy-Paste improves on the previous state of the art by +0.6 mask AP and +1.5 box AP. On LVIS, a model trained with Copy-Paste outperforms the winning challenge entry by +3.6 mask AP on rare categories.

- The Copy-Paste method is simple: objects are randomly selected from one image and pasted into another, and the annotations are adjusted to match. It provides significant gains with minimal increase in training cost or inference time.
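
The select, paste, and adjust-annotations steps described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function names `copy_paste` and `masks_to_boxes`, the `keep_prob` parameter, and the assumptions that both images share the same size and that every input mask is non-empty are all hypothetical choices made for the example. Any resizing or flipping applied before pasting is omitted.

```python
import numpy as np


def masks_to_boxes(masks):
    """Return (x_min, y_min, x_max, y_max) for each non-empty boolean mask."""
    boxes = []
    for mask in masks:
        ys, xs = np.nonzero(mask)
        boxes.append((xs.min(), ys.min(), xs.max(), ys.max()))
    return np.array(boxes, dtype=np.int64).reshape(-1, 4)


def copy_paste(src_img, src_masks, dst_img, dst_masks, rng, keep_prob=0.5):
    """Paste a random subset of source instances onto the destination image.

    src_img, dst_img: uint8 arrays of shape (H, W, 3), same size (assumption).
    src_masks, dst_masks: bool arrays of shape (N, H, W), one mask per instance.
    Returns the composited image, the updated masks, and their boxes.
    """
    # Randomly choose which source instances to paste.
    chosen = rng.random(len(src_masks)) < keep_prob
    pasted = src_masks[chosen]
    if len(pasted) == 0:
        return dst_img.copy(), dst_masks.copy(), masks_to_boxes(dst_masks)

    # Pixels covered by any pasted instance are taken from the source image.
    alpha = pasted.any(axis=0)
    out_img = np.where(alpha[..., None], src_img, dst_img)

    # Adjust destination annotations: remove occluded pixels, then drop
    # instances that are now fully hidden.
    visible = dst_masks & ~alpha
    visible = visible[visible.any(axis=(1, 2))]

    out_masks = np.concatenate([visible, pasted], axis=0)
    return out_img, out_masks, masks_to_boxes(out_masks)
```

Boxes are recomputed from the updated masks rather than copied, so a partially occluded destination object gets a box that matches its remaining visible pixels. Class labels, which a real pipeline would carry alongside each mask, are left out to keep the sketch short.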