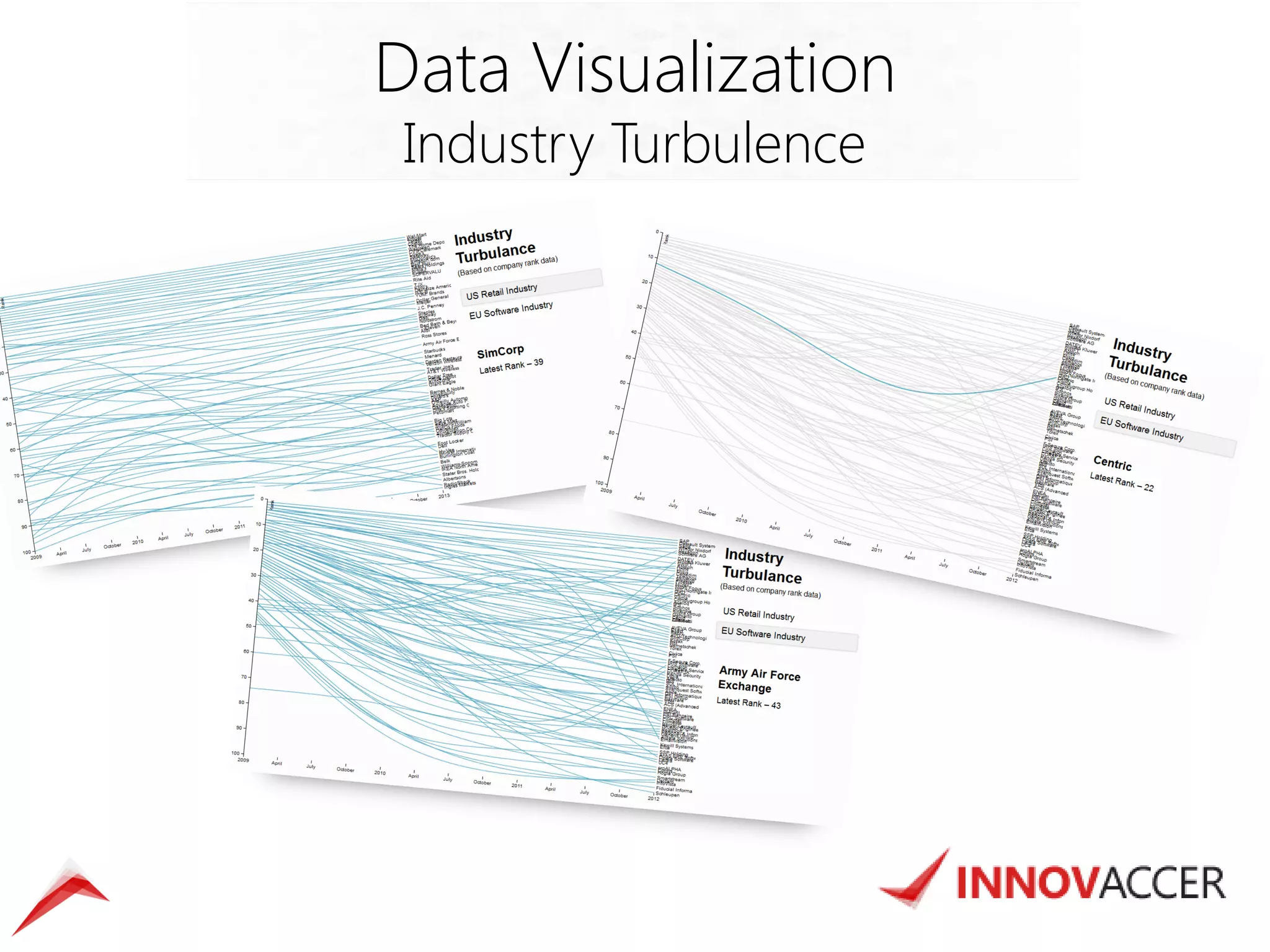

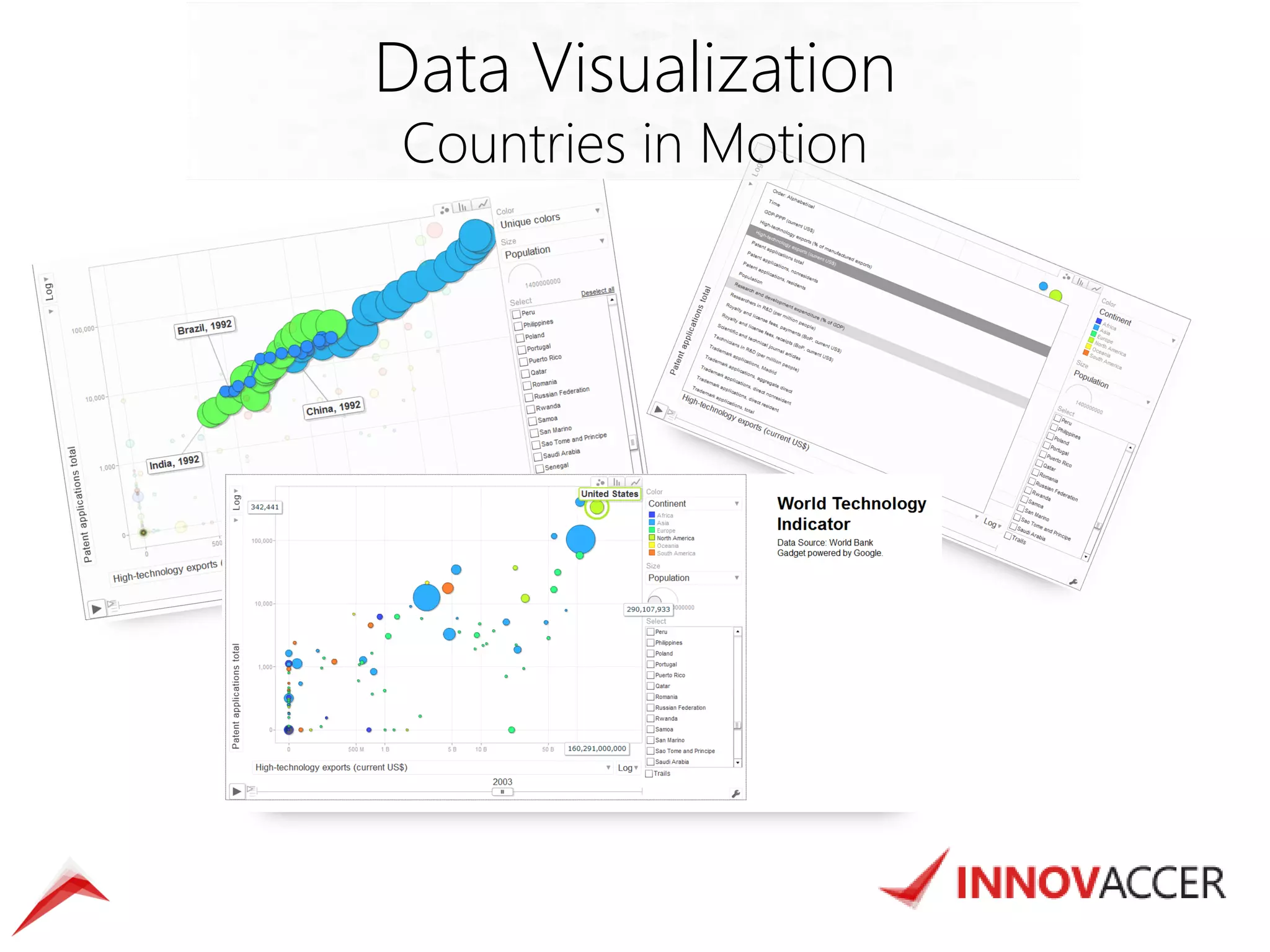

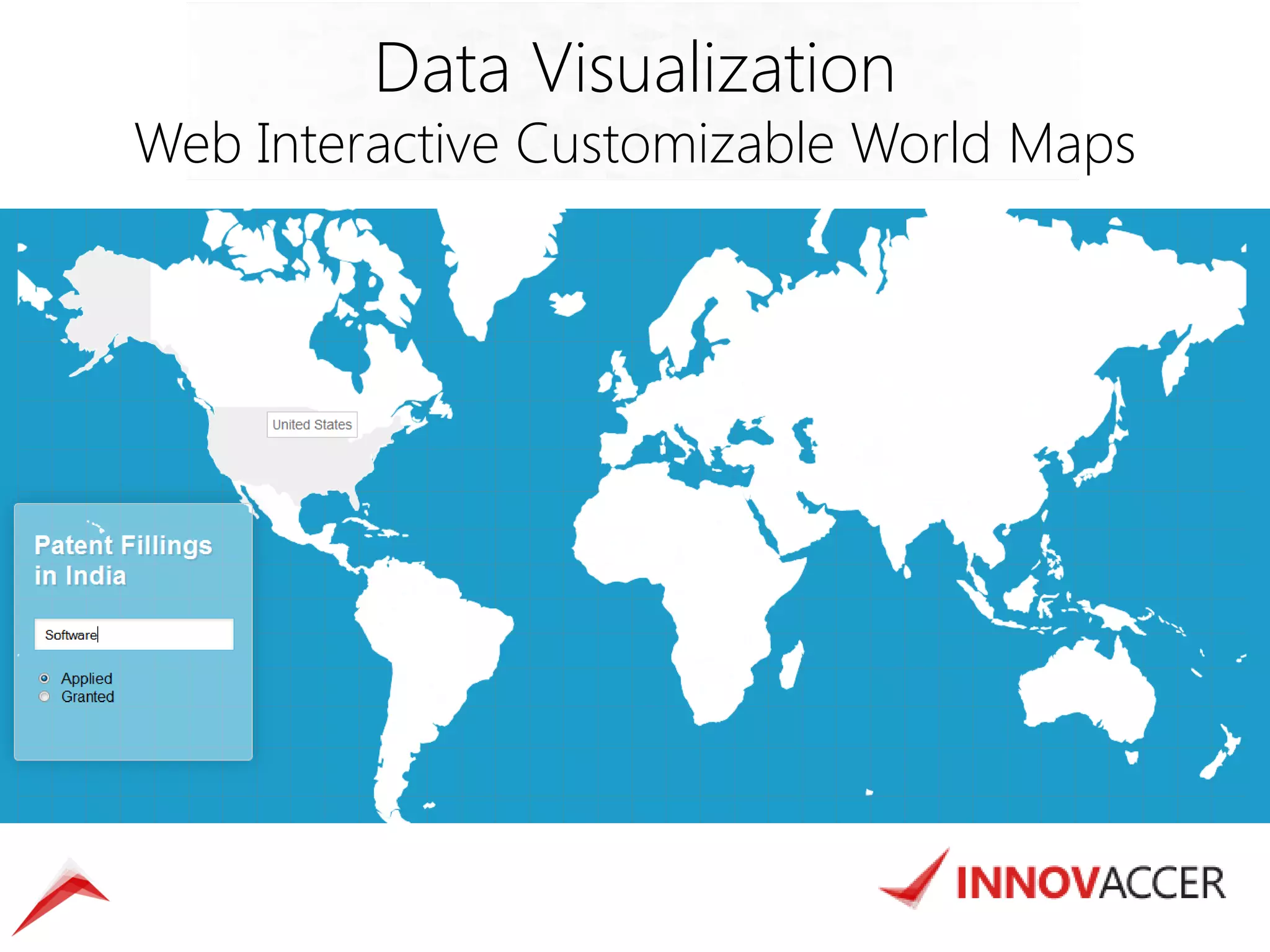

The document outlines several data mining and analysis projects that a team of data experts undertook to generate actionable insights from diverse datasets. These include Indian patent data, CEO compensation, earnings conference calls, and hospital readmission rates. It describes how data harvesting, cleaning, and statistical modeling were automated. It also covers how technologies such as Hadoop and custom applications were used for efficient data processing and visualization. Finally, the document highlights cloud-based solutions for mobile surveys and email campaigns that made data collection and dissemination more efficient.