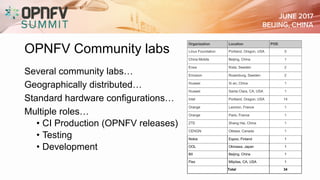

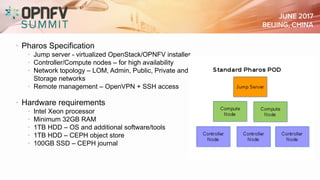

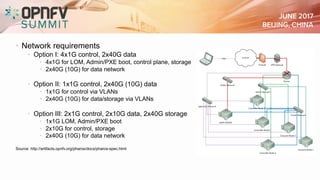

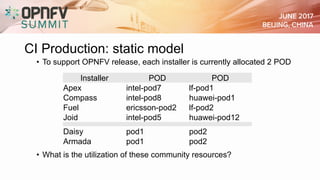

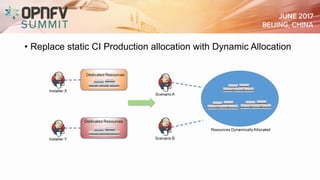

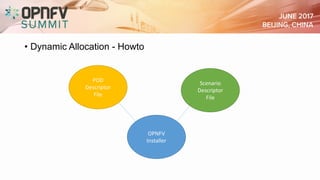

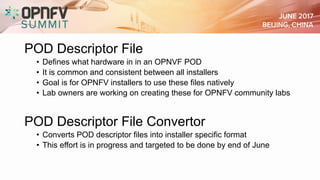

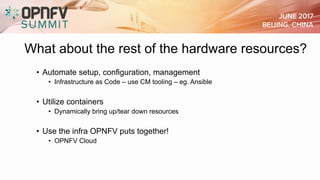

The document discusses the need to make better use of OPNFV community hardware resources as the community grows. It outlines the current lab configurations and proposes allocating resources dynamically rather than through static assignment. It also stresses the need for automation and best practices in managing these resources. The goal is to improve efficiency and meet community needs through clear strategies and collaborative feedback.