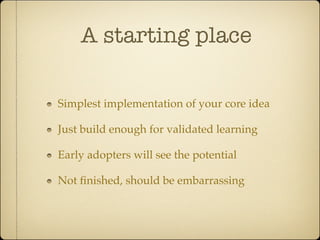

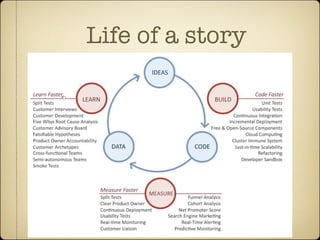

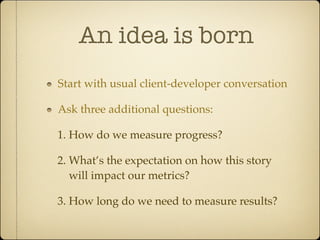

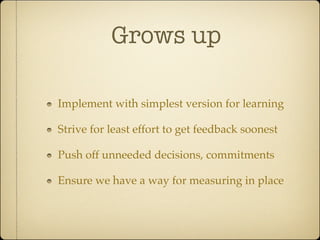

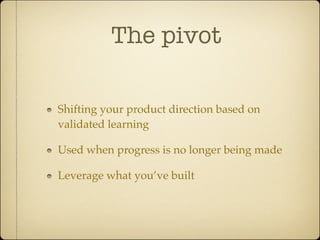

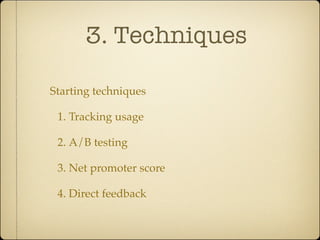

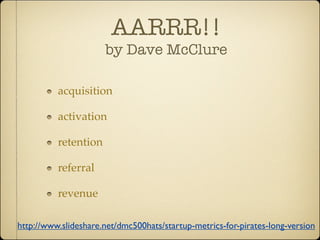

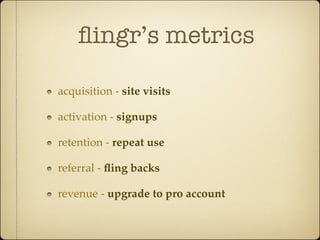

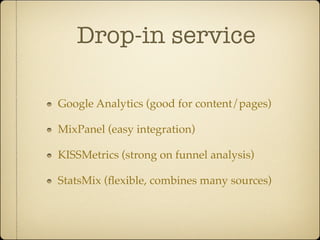

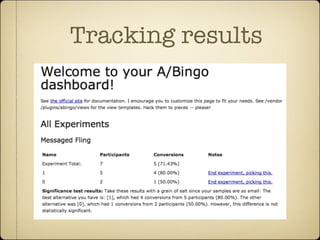

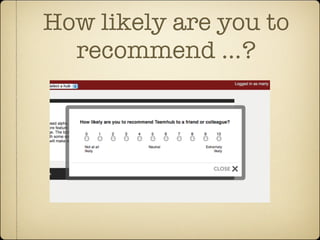

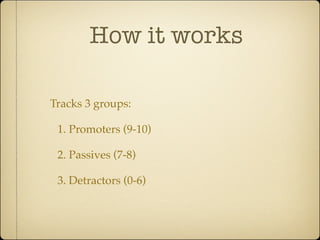

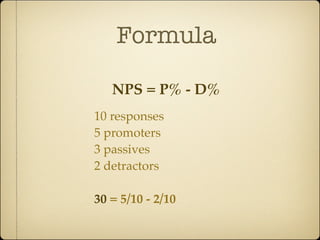

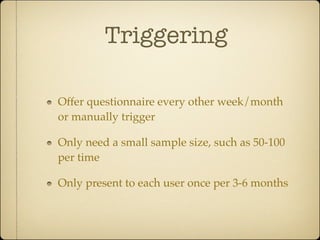

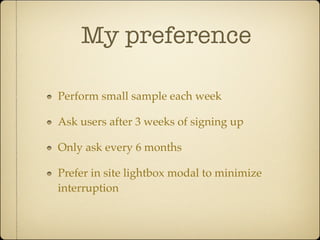

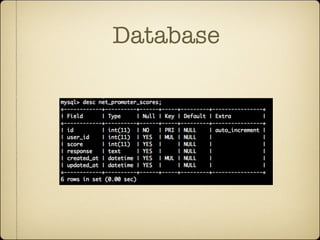

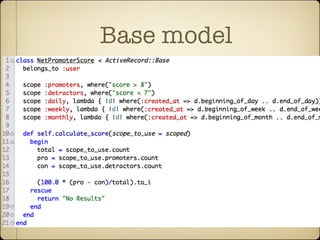

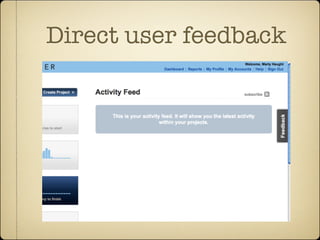

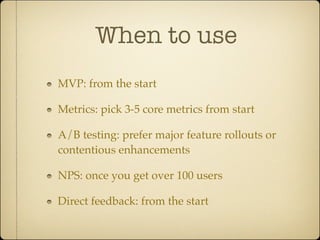

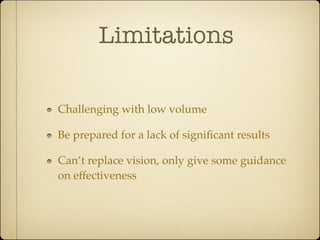

Feedback-driven development (FbDD) is an approach to product development that uses customer feedback to guide decisions. It emphasizes building minimum viable products and testing hypotheses with A/B tests, usage metrics, net promoter scores, and direct customer feedback. The goal is to learn continuously what customers need by releasing iteratively and adjusting the product vision based on validated learning from customer interactions and data.
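Two of the measurements named above reduce to simple arithmetic, which a short sketch can make concrete. The Python below is illustrative only and is not taken from the slides: the function names `net_promoter_score` and `ab_test_p_value` are hypothetical, the NPS calculation assumes the conventional 0 to 10 survey scale (promoters rate 9 or 10, detractors 0 to 6), and the A/B comparison assumes a pooled two-proportion z-test, which is one common choice among several.

```python
import math


def net_promoter_score(ratings):
    """Return NPS: percent promoters (9-10) minus percent detractors (0-6).

    Assumes each rating is on the conventional 0-10 scale.
    """
    if not ratings:
        raise ValueError("ratings must not be empty")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100.0 * (promoters - detractors) / len(ratings)


def ab_test_p_value(conv_a, n_a, conv_b, n_b):
    """Return the two-sided p-value of a pooled two-proportion z-test.

    conv_a / n_a and conv_b / n_b are conversions / visitors for
    variants A and B (hypothetical inputs for illustration).
    """
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        # No variation at all in the pooled data: no evidence of a difference.
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    # Two-sided tail probability of the standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2))


if __name__ == "__main__":
    print(net_promoter_score([10, 10, 9, 8, 5]))
    print(ab_test_p_value(100, 1000, 150, 1000))
```

A small p-value suggests that the difference in conversion rate between the two variants is unlikely to be chance alone, which is the kind of validated learning the iterative release cycle depends on. In practice the sample size and significance threshold would be fixed before the experiment starts.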